3360

MoCo Cycle-MedGAN: Unsupervised correction of rigid MR motion artifacts

¹University Hospital Tübingen, Tübingen, Germany; ²University of Stuttgart, Stuttgart, Germany

Synopsis

Motion is one of the main sources of artifacts in magnetic resonance (MR) imaging and can significantly degrade the diagnostic quality of MR images. Supervised adversarial approaches have previously been proposed for the correction of MR motion artifacts. However, supervised approaches require paired and co-registered datasets for training, which are often difficult or impossible to acquire. We introduce a new adversarial framework for the unsupervised correction of severe rigid motion artifacts in the brain. Quantitative and qualitative comparisons with other supervised and unsupervised translation approaches showed improved performance of the introduced framework.

Introduction

Due to long scanning times, magnetic resonance imaging (MRI) is highly susceptible to motion artifacts. Non-rigid motion, for instance cardiac motion, results in local deformations, whereas bulk motion of body parts causes rigid motion artifacts with global deformations. Most classic approaches for prospective and retrospective motion correction1–5 rely on a priori knowledge about the motion. In recent years, deep learning techniques such as convolutional neural networks have been successfully used to retrospectively correct mild rigid motion artifacts6. In our previous work, we proposed a generative adversarial network (GAN)7, named MedGAN, which achieves state-of-the-art performance for the correction of motion artifacts8–10. However, this approach requires supervised training datasets with paired and co-registered motion-free and motion-corrupted images. To overcome this limitation, we developed a framework named Cycle-MedGAN11 that is trained on unpaired datasets. However, Cycle-MedGAN was not yet capable of eliminating severe rigid motion artifacts. In this work, we extend our previous work with a new loss function and an improved generator architecture. We applied the introduced framework to correct severe motion artifacts in brain MRIs and compared its performance against other supervised and unsupervised adversarial approaches.

Material and Methods

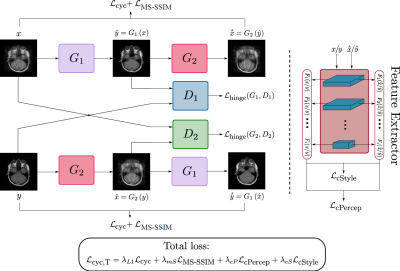

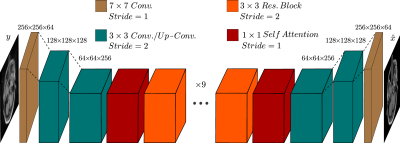

The Cycle-MedGAN framework (Fig. 1) is based on Cycle-GAN12, which performs unpaired image-to-image translation from a source domain (motion-corrupted MR scans) to a target domain (motion-free MR scans). However, a pixel-wise cycle-consistency loss alone produces blurry and inconsistent results13. We therefore introduce two cyclic feature-based loss functions, the cycle perceptual loss and the cycle style loss, which use a pre-trained feature extraction network to capture high-level perceptual differences and to enhance textural similarity between images14,15. Additionally, we adapted the multi-scale structural similarity index (MS-SSIM)16 as a novel cycle loss function that penalizes structural discrepancies between the input images and their cycle-reconstructed counterparts. The total loss function combines these four cycle losses (Fig. 1); the introduced hyperparameters were optimized empirically by trial and error. As in Cycle-MedGAN, the generator network (Fig. 2) consists of convolutional layers and several residual blocks17. We propose a new generator architecture, inspired by Self-Attention (SA) GANs18, to capture long-range dependencies. For the discriminators, we used the architecture proposed by Zhu et al.12 with an additional SA module.
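The four cycle losses described above can be combined as in the following minimal NumPy sketch. It is an illustration under stated assumptions, not the authors' implementation: the feature maps are assumed to be pre-computed by some pre-trained extractor (the abstract uses a Bi-GAN discriminator), the weights `lam_*` are hypothetical placeholders because the tuned values are not reported, the Gram-matrix normalization is one common choice among several, and the MS-SSIM term is supplied as a callable `ms_ssim` rather than implemented here.

```python
import numpy as np


def l1_cycle_loss(x, x_rec):
    """Pixel-wise cycle-consistency loss: mean absolute error between input and cycle reconstruction."""
    return float(np.mean(np.abs(x - x_rec)))


def gram_matrix(feat):
    """Gram matrix of a (C, H, W) feature map, normalized by C*H*W (one common convention)."""
    c, h, w = feat.shape
    f = feat.reshape(c, h * w)
    return f @ f.T / (c * h * w)


def cycle_perceptual_loss(feats_x, feats_rec):
    """Mean absolute feature difference per extractor layer, summed over layers."""
    return float(sum(np.mean(np.abs(a - b)) for a, b in zip(feats_x, feats_rec)))


def cycle_style_loss(feats_x, feats_rec):
    """Squared Frobenius distance between Gram matrices, summed over layers."""
    return float(sum(np.sum((gram_matrix(a) - gram_matrix(b)) ** 2)
                     for a, b in zip(feats_x, feats_rec)))


def total_cycle_loss(x, x_rec, feats_x, feats_rec, ms_ssim,
                     lam_l1=1.0, lam_perc=1.0, lam_style=1.0, lam_msssim=1.0):
    """Weighted sum of the four cycle losses (hypothetical weights).

    ms_ssim(a, b) is assumed to return a similarity in [0, 1], so 1 - MS-SSIM is used as a loss.
    """
    return (lam_l1 * l1_cycle_loss(x, x_rec)
            + lam_perc * cycle_perceptual_loss(feats_x, feats_rec)
            + lam_style * cycle_style_loss(feats_x, feats_rec)
            + lam_msssim * (1.0 - ms_ssim(x, x_rec)))
```

In a Cycle-GAN setting, such a term would typically be evaluated for both translation directions (corrupted → motion-free → corrupted and vice versa) and added to the adversarial losses.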

We evaluated the framework on brain MRIs of 16 volunteers. For each participant, two unpaired brain MRI scans (one in a motion-free condition and one with free movement of the head) were obtained on a 3T MR scanner using a T1-weighted fast spin-echo sequence. For pre-processing, two-dimensional axial slices were extracted and zero-padded to a matrix size of 256x256 pixels. 1101 images from 12 volunteers were used for training, and the remaining 436 images from 4 volunteers were used for validation. All acquired motion-free and motion-corrupted images were explicitly shuffled to ensure that no paired data were used during training.
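The pre-processing and data-splitting steps can be sketched as follows. This is a hypothetical illustration: centered padding, the helper names `zero_pad`, `subject_split`, and `unpaired_batches`, and the use of per-volunteer IDs are assumptions, since the abstract does not specify how slices were padded or batched.

```python
import numpy as np


def zero_pad(slice_2d, size=256):
    """Zero-pad a 2D axial slice to size x size, centering the original content (assumed convention)."""
    h, w = slice_2d.shape
    if h > size or w > size:
        raise ValueError(f"slice of shape {slice_2d.shape} exceeds {size}x{size}")
    out = np.zeros((size, size), dtype=slice_2d.dtype)
    top, left = (size - h) // 2, (size - w) // 2
    out[top:top + h, left:left + w] = slice_2d
    return out


def subject_split(subject_ids, val_subjects):
    """Boolean masks (train, val) splitting slices by volunteer, so no volunteer appears in both sets."""
    val = np.isin(np.asarray(subject_ids), list(val_subjects))
    return ~val, val


def unpaired_batches(motion_free, motion_corrupted, rng):
    """Shuffle each domain independently, then zip, so slice order carries no pairing information.

    zip stops at the shorter domain, so surplus slices of the longer domain are skipped this epoch.
    """
    idx_free = rng.permutation(len(motion_free))
    idx_corr = rng.permutation(len(motion_corrupted))
    return [(motion_free[i], motion_corrupted[j]) for i, j in zip(idx_free, idx_corr)]
```

Independent shuffling removes any systematic slice-to-slice correspondence between the two domains, while splitting by volunteer keeps the validation volunteers entirely unseen during training.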

For the feature extractor network, we used the discriminator of a bidirectional GAN (Bi-GAN) framework19 pre-trained on a separate dataset. To improve training stability, spectral normalization20 was applied as a discriminator regularizer. To evaluate the proposed framework, we compared it qualitatively and quantitatively against other unpaired image-translation techniques, namely Cycle-GAN12 and our previous Cycle-MedGAN11 framework. The previous Cycle-MedGAN approach used neither the SA mechanism in the generator architecture nor the MS-SSIM cycle loss function. Additionally, we compared against the pix2pix framework21 trained in a supervised manner to investigate how misalignment and imperfect registration affect the performance of supervised GAN frameworks. For the quantitative comparisons, we used the Structural Similarity Index (SSIM)22, Peak Signal-to-Noise Ratio (PSNR), Mean Squared Error (MSE), and Universal Quality Index (UQI)23 as evaluation metrics.
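The four evaluation metrics can be sketched in NumPy as below. This is a simplified illustration, not the evaluation code used in the study: images are assumed to be scaled to [0, 1] (`data_range=1.0`), SSIM uses a uniform 7x7 window with the constants K1 = 0.01 and K2 = 0.03 from Wang et al.22 instead of the Gaussian weighting of the reference implementation, and UQI uses an 8x8 sliding window with one common convention for flat windows where the formula of Wang and Bovik23 is undefined. Absolute values will therefore differ slightly from other implementations.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def mse(x, y):
    """Mean squared error between two images."""
    d = np.asarray(x, dtype=np.float64) - np.asarray(y, dtype=np.float64)
    return float(np.mean(d ** 2))


def psnr(x, y, data_range=1.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    m = mse(x, y)
    return float("inf") if m == 0 else float(10.0 * np.log10(data_range ** 2 / m))


def _local_stats(x, y, win):
    """Local means, variances and covariance over all win x win windows (population statistics)."""
    xw = sliding_window_view(np.asarray(x, dtype=np.float64), (win, win))
    yw = sliding_window_view(np.asarray(y, dtype=np.float64), (win, win))
    axes = (-2, -1)
    mx, my = xw.mean(axis=axes), yw.mean(axis=axes)
    vx = np.maximum((xw * xw).mean(axis=axes) - mx ** 2, 0.0)  # clip tiny negative rounding errors
    vy = np.maximum((yw * yw).mean(axis=axes) - my ** 2, 0.0)
    cxy = (xw * yw).mean(axis=axes) - mx * my
    return mx, my, vx, vy, cxy


def ssim(x, y, data_range=1.0, win=7):
    """Mean SSIM over uniform win x win windows (simplified: no Gaussian weighting)."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mx, my, vx, vy, cxy = _local_stats(x, y, win)
    s = ((2 * mx * my + c1) * (2 * cxy + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))
    return float(s.mean())


def uqi(x, y, win=8):
    """Mean Universal Quality Index over win x win windows (assumes non-negative images)."""
    mx, my, vx, vy, cxy = _local_stats(x, y, win)
    var_term, mean_term = vx + vy, mx ** 2 + my ** 2
    q = np.ones_like(mx)  # both terms zero, e.g. two all-zero background windows: Q := 1 by convention
    flat = (var_term == 0) & (mean_term > 0)  # flat windows: keep only the luminance comparison
    q[flat] = 2 * mx[flat] * my[flat] / mean_term[flat]
    full = (var_term > 0) & (mean_term > 0)
    q[full] = 4 * cxy[full] * mx[full] * my[full] / (var_term[full] * mean_term[full])
    return float(q.mean())
```

For non-negative MR magnitude images, a zero mean term implies an all-zero window, which is why the remaining case (nonzero variance with zero mean) is not handled separately.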

Results and Discussion

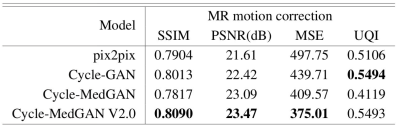

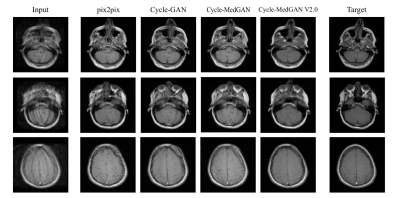

Qualitative and quantitative results of the compared frameworks for the unsupervised correction of severe rigid motion artifacts are presented in Fig. 3 and Table 1, respectively. The supervised translation approach, pix2pix, yields the worst performance. This agrees with previous work showing that misalignment in the training data (due to the difficulty of aligning motion-free and motion-corrupted MR images) significantly degrades the resulting images24. Among the unsupervised approaches, Cycle-GAN fails to eliminate motion blurring and produces the poorest qualitative results. Our previous Cycle-MedGAN framework overcomes these problems by utilizing the cycle style and cycle perceptual loss functions; however, traces of motion artifacts can still be observed. The improved Cycle-MedGAN presented in this work overcomes these limitations by introducing an SA-based generator architecture and a new MS-SSIM term in the loss function. The qualitative results agree with the computed quantitative metrics (Table 1). Despite these promising initial results, our work has limitations. The framework currently operates on two-dimensional slices, and we plan to extend it to three-dimensional and complex-valued input data. Moreover, the diagnostic validity of the corrected images cannot be guaranteed at this stage and has to be investigated in further studies through extensive blinded reading.

Conclusion

In this work, we present an improved Cycle-MedGAN framework for the unsupervised retrospective correction of severe rigid motion artifacts. Quantitative and qualitative comparisons with other supervised and unsupervised translation approaches demonstrated the favorable performance of the proposed framework.

Acknowledgements

No acknowledgement found.

References

1: Speck O, Hennig J, Zaitsev M. Prospective real-time slice-by-slice motion correction for fMRI in freely moving subjects. Magma N Y N. 2006;19(2):55-61. doi:10.1007/s10334-006-0027-1

2: Küstner T, Schwartz M, Martirosian P, et al. MR-based respiratory and cardiac motion correction for PET imaging. Med Image Anal. 2017;42:129-144. doi:10.1016/j.media.2017.08.002

3: Zaitsev M, Maclaren J, Herbst M. Motion artifacts in MRI: A complex problem with many partial solutions. J Magn Reson Imaging JMRI. 2015;42(4):887-901. doi:10.1002/jmri.24850

4: Atkinson D, Hill DL, Stoyle PN, Summers PE, Keevil SF. Automatic correction of motion artifacts in magnetic resonance images using an entropy focus criterion. IEEE Trans Med Imaging. 1997;16(6):903-910. doi:10.1109/42.650886

5: Cruz G, Atkinson D, Buerger C, Schaeffter T, Prieto C. Accelerated motion corrected three-dimensional abdominal MRI using total variation regularized SENSE reconstruction. Magn Reson Med. 2016;75(4):1484-1498. doi:10.1002/mrm.25708

6: Cao X, Yang J, Wang L, Wang Q, Shen D. Non-rigid Brain MRI Registration Using Two-stage Deep Perceptive Networks. Proc Int Soc Magn Reson Med Sci Meet Exhib Int Soc Magn Reson Med Sci Meet Exhib. 2018;2018.

7: Goodfellow IJ, Pouget-Abadie J, Mirza M, et al. Generative Adversarial Networks. ArXiv14062661 Cs Stat. June 2014. http://arxiv.org/abs/1406.2661. Accessed November 4, 2019.

8: Armanious K, Jiang C, Fischer M, et al. MedGAN: Medical Image Translation using GANs. ArXiv180606397 Cs. June 2018. http://arxiv.org/abs/1806.06397. Accessed November 4, 2019.

9: Armanious K, Gatidis S, Nikolaou K, Yang B, Küstner T. Retrospective correction of rigid and non-rigid MR motion artifacts using GANs. In: IEEE 16th International Symposium on Biomedical Imaging (ISBI); April 2019:1550-1554.

10: Küstner T, Armanious K, Yang J, Yang B, Schick F, Gatidis S. Retrospective correction of motion-affected MR images using deep learning frameworks. Magn Reson Med. 2019;82(4):1527-1540. doi:10.1002/mrm.27783

11: Armanious K, Jiang C, Abdulatif S, Küstner T, Gatidis S, Yang B. Unsupervised Medical Image Translation Using Cycle-MedGAN. In: IEEE European Signal Processing Conference (EUSIPCO); April 2019.

12: Zhu J-Y, Park T, Isola P, Efros AA. Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. ArXiv170310593 Cs. November 2018. http://arxiv.org/abs/1703.10593. Accessed November 4, 2019.

13: Ledig C, Theis L, Huszar F, et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. ArXiv160904802 Cs Stat. May 2017. http://arxiv.org/abs/1609.04802. Accessed November 4, 2019.

14: Johnson J, Alahi A, Fei-Fei L. Perceptual Losses for Real-Time Style Transfer and Super-Resolution. 2016.

15: Gatys LA, Ecker AS, Bethge M. Image Style Transfer Using Convolutional Neural Networks. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016:2414-2423. doi:10.1109/CVPR.2016.265

16: Wang Z, Simoncelli EP, Bovik AC. Multiscale structural similarity for image quality assessment. In: The Thirty-Seventh Asilomar Conference on Signals, Systems & Computers, 2003. Vol 2; 2003:1398-1402. doi:10.1109/ACSSC.2003.1292216

17: He K, Zhang X, Ren S, Sun J. Deep Residual Learning for Image Recognition. ArXiv151203385 Cs. December 2015. http://arxiv.org/abs/1512.03385. Accessed November 4, 2019.

18: Zhang H, Goodfellow I, Metaxas D, Odena A. Self-Attention Generative Adversarial Networks. ArXiv180508318 Cs Stat. June 2019. http://arxiv.org/abs/1805.08318. Accessed November 4, 2019.

19: Donahue J, Krähenbühl P, Darrell T. Adversarial Feature Learning. In: International Conference on Learning Representations (ICLR); 2017.

20: Miyato T, Kataoka T, Koyama M, Yoshida Y. Spectral Normalization for Generative Adversarial Networks. ArXiv180205957 Cs Stat. February 2018. http://arxiv.org/abs/1802.05957. Accessed November 4, 2019.

21: Isola P, Zhu J-Y, Zhou T, Efros AA. Image-to-Image Translation with Conditional Adversarial Networks. 2016.

22: Wang Z, Bovik AC, Sheikh HR, Simoncelli EP. Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process. 2004;13(4):600-612. doi:10.1109/TIP.2003.819861

23: Wang Z, Bovik AC. A universal image quality index. IEEE Signal Process Lett. 2002;9(3):81-84. doi:10.1109/97.995823

24: Wolterink JM, Dinkla AM, Savenije MHF, Seevinck PR, Berg CAT van den, Isgum I. Deep MR to CT Synthesis using Unpaired Data. ArXiv170801155 Cs. August 2017. http://arxiv.org/abs/1708.01155. Accessed November 4, 2019.

Figures