3343

Evaluation of motion correction capability in retrospective motion correction with MoCo MedGAN

Thomas Küstner1,2,3, Friederike Gänzle3, Tobias Hepp2, Martin Schwartz3,4, Konstantin Nikolaou5, Bin Yang3, Karim Armanious2,3, and Sergios Gatidis2,5

1Biomedical Engineering Department, School of Biomedical Engineering and Imaging Sciences, King's College London, London, United Kingdom, 2Medical Image and Data Analysis (MIDAS), University Hospital Tübingen, Tübingen, Germany, 3Institute of Signal Processing and System Theory, University of Stuttgart, Stuttgart, Germany, 4Section on Experimental Radiology, University Hospital Tübingen, Tübingen, Germany, 5Department of Radiology, University Hospital Tübingen, Tübingen, Germany

Synopsis

Motion is the main extrinsic source of imaging artifacts, which can strongly deteriorate image quality and thus impair diagnostic accuracy. Numerous motion correction strategies have been proposed to mitigate or capture the artifacts. These methods require some a-priori knowledge about the expected motion type and appearance. We have recently proposed a deep neural network (MoCo MedGAN) to perform retrospective motion correction in a reference-free setting, i.e. without requiring any a-priori motion information. In this work, we propose a confidence-check and evaluate the correction capability of MoCo MedGAN with respect to different motion patterns in healthy subjects and patients.

Introduction

Motion is the main extrinsic source of imaging artifacts in MRI, which can strongly deteriorate image quality and thus impair diagnostic accuracy. Numerous prospective motion correction strategies have been proposed to minimize the artifacts1-10. These methods have in common that they need to be applied during the running acquisition and require some a-priori knowledge about the expected motion type and appearance. Retrospective motion correction techniques, on the other hand, correct for motion-induced artifacts during or after image reconstruction11-13. However, these methods also rely on some a-priori knowledge about the motion, such as external surrogate signals, motion models or motion-resolved images.

We have recently proposed a deep learning-based approach for retrospective correction of motion-induced artifacts by means of a generative adversarial network (GAN), named Motion Correction with Medical GAN (MoCo MedGAN)14-18. This method does not require any a-priori knowledge about the motion and corrects the resulting artifacts using only the complex-valued (magnitude and phase) images as input. It learns the image-to-image translation task, which is controlled by the chosen loss function, from a database of paired motion-free and motion-affected images. Initial results have shown great potential for correcting rigid motion artifacts in neurological cases and non-rigid respiratory artifacts in abdominal imaging.

In this work, we investigate the robustness and reliability of the proposed MoCo MedGAN with respect to different types of motion in a volunteer and a patient cohort. To further examine the correction on a local scale, we combine MoCo MedGAN with our previously proposed motion detection network19-21 to identify motion-affected regions.

Methods

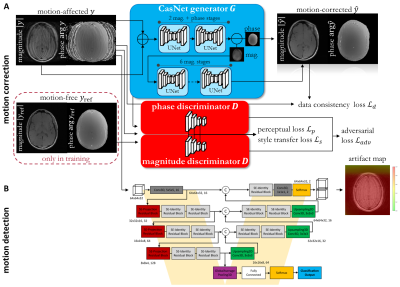

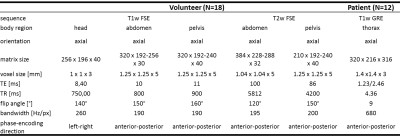

The proposed MoCo MedGAN has been described previously14,16 and is shown in Fig.1a. It consists of a generator performing the correction task and a discriminator which learns how to classify and separate the motion and ensures data consistency of the corrected image to the input. The network is trained end-to-end with complex-valued (magnitude and phase) image pairs of motion-free and motion-affected images by minimizing an adversarial, data consistency, perceptual and style transfer loss. To obtain feedback on where motion actually occurred, we combine the correction network with our motion detection network19-21, as shown in Fig.1b. A voxel-wise significance map is returned to examine motion-affected regions and to check where motion will most likely be corrected. This can thus serve as a confidence-check of the motion correction.

Training data were acquired in 18 healthy subjects scanned in the head, abdomen and pelvis with T1w and T2w FSE sequences at rest (motion-free) and under non-instructed free movement (motion-affected; head/pelvis: rigid body motion, abdomen: non-rigid breathing). Images are first normalized to unit range and then form the training database of 2D axial motion-free and motion-affected image pairs in head: 240,500/1296, abdomen: 337,880/1116 and pelvis: 408,000/1440, with 3-fold subject-left-out cross-validation for testing. For further testing, 12 patients with pathologies were scanned in the thoracic region (not included in training) with a T1w spoiled GRE (motion-free: breath-hold, motion-affected: free-breathing). MR acquisition parameters are stated in Tab.1.
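The subject-left-out testing mentioned above can be illustrated with a short sketch. The abstract does not state how the 18 subjects were assigned to folds, so the random shuffle, the equal fold sizes and the names `subject_folds`, `n_folds` and `seed` are assumptions made for illustration only. The point of the sketch is that the split happens on the subject level, so no slice of a test subject can leak into training.

```python
import random


def subject_folds(subject_ids, n_folds=3, seed=0):
    """Return a list of (train_subjects, test_subjects) pairs.

    Splitting is done per subject, not per image, so that all slices of
    a subject end up on the same side of the split (assumed procedure).
    """
    ids = sorted(set(subject_ids))
    random.Random(seed).shuffle(ids)
    # Deal the shuffled subjects round-robin into n_folds test groups.
    test_groups = [ids[i::n_folds] for i in range(n_folds)]
    return [
        ([s for s in ids if s not in test], test)
        for test in test_groups
    ]


# Example with 18 hypothetical subject identifiers.
splits = subject_folds(range(18), n_folds=3)
for train, test in splits:
    print(len(train), "training subjects,", len(test), "test subjects")
```

With 18 subjects and 3 folds, each fold trains on 12 subjects and tests on the remaining 6.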

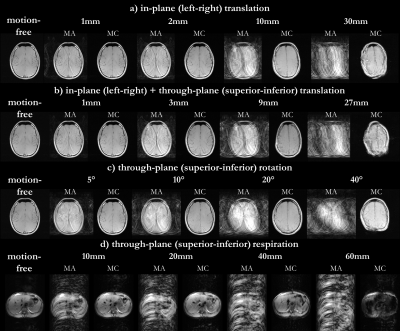

Motion correction capability can be examined in more detail with a motion simulation pipeline which disturbs the motion-free images with motion on a per-frequency-line/TE level (Cartesian sampling). The simulated displacements originate from different user-defined motion trajectories: random or continuous translation/rotation, and respiratory motion modelled by a modified raised cosine waveform (MRCW)22. The strength of the motion trajectories (translation displacement, rotation angle, MRCW amplitude) can be adjusted to investigate correction capability. Different testing scenarios with the network trained on real motion are investigated for a) random head translation (left-right in-plane), b) random head translation (left-right in-plane + superior-inferior through-plane), c) rotation (out-of-plane rotation along longitudinal z-axis) and d) continuous respiration following MRCW (through-plane displacement).
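To make the per-line corruption concrete, the following is a minimal sketch of the simplest case, in-plane translation with Cartesian sampling. It is not the authors' pipeline: it assumes that each row of the 2D k-space is one phase-encode line, that displacements are given in pixels in array order rather than acquisition order, and it does not cover rotation, through-plane motion or the MRCW trajectory. The function names `simulate_translation` and `random_translation` are hypothetical.

```python
import numpy as np


def simulate_translation(image, dy_px, dx_px):
    """Apply a different rigid in-plane shift to every k-space row.

    image : 2D array (ny, nx), real or complex.
    dy_px : length-ny displacements along axis 0 in pixels, one per row.
    dx_px : length-ny displacements along axis 1 in pixels, one per row.
    """
    ny, nx = image.shape
    ky = np.fft.fftfreq(ny)[:, None]  # row frequencies in cycles/pixel
    kx = np.fft.fftfreq(nx)[None, :]  # column frequencies in cycles/pixel
    dy = np.asarray(dy_px, dtype=float)[:, None]
    dx = np.asarray(dx_px, dtype=float)[:, None]

    kspace = np.fft.fft2(image)
    # Fourier shift theorem: a shift by d multiplies k-space by
    # exp(-2*pi*i*k*d). A separate d per row mimics motion that
    # happens between the acquisition of phase-encode lines.
    phase = np.exp(-2j * np.pi * (ky * dy + kx * dx))
    return np.fft.ifft2(kspace * phase)


def random_translation(ny, max_shift_px, seed=0):
    """Draw one uniform random displacement per k-space row."""
    rng = np.random.default_rng(seed)
    return rng.uniform(-max_shift_px, max_shift_px, size=ny)


# Usage: corrupt a simple rectangle phantom with random left-right shifts.
phantom = np.zeros((64, 64))
phantom[24:40, 20:44] = 1.0
dx = random_translation(64, max_shift_px=3.0)
corrupted = simulate_translation(phantom, np.zeros(64), dx)
```

If all rows receive the same integer shift, the result is simply the circularly shifted image; artifacts such as ghosting appear only when the displacement differs between lines. Converting a displacement in mm to pixels requires the pixel spacing of the acquisition.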

Results and Discussion

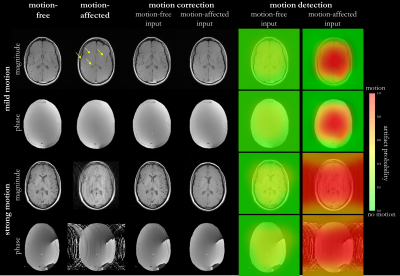

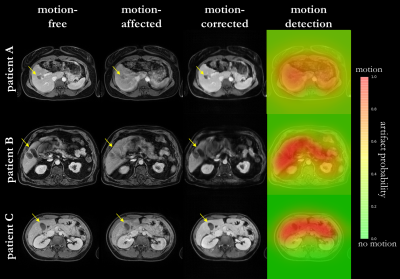

Fig.2 shows the motion correction and detection for a mild and a strong head motion case with motion-free and motion-affected images as input. The motion-free image is unaffected by the network, while the motion-affected image is reliably corrected with some microstructural changes in strong motion regions. Fig.3 depicts the correction of patient datasets (not included in training), for which pathologies are preserved by the correction. In Fig.4 the influence of various motion trajectories is investigated. In-plane motion can be corrected better than combined in-plane and through-plane motion (compare Fig.4a and 4b). Trustworthy correction capability of the network (trained only with real motion) is limited by the maximal motion amplitude. Realistic motion correction can be achieved if motion stays below 20mm left-right translation in head (Fig.4a), 9mm in-plane+through-plane translation in head (Fig.4b), 30° through-plane rotation in head (Fig.4c) and 30mm through-plane in abdomen (Fig.4d). In general, no obvious in-painting from the training database was observed.

This study has limitations. Due to the 2D correction, any through-plane motion correction remains challenging. Although the network was not trained on patients with pathologies, correction can still be achieved with preserved structures. Training on patient data will be conducted in a separate study. No data augmentation with the motion simulation pipeline was used in training; this might improve correction capability for various motion patterns in the future, but can demand more careful network tuning.
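The amplitude limits reported above can be collected in a small lookup, for example to flag simulated test cases that exceed the range in which correction was found to be realistic. This is only an illustrative sketch: the numeric limits are taken from the text, whereas the key names and the function `within_reported_limit` are hypothetical.

```python
# Limits from the Results section: (value, unit) per region and motion type.
# The key names are hypothetical labels chosen for this sketch.
REPORTED_LIMITS = {
    ("head", "translation_in_plane"): (20.0, "mm"),
    ("head", "translation_in_plane_through_plane"): (9.0, "mm"),
    ("head", "rotation_through_plane"): (30.0, "deg"),
    ("abdomen", "respiration_through_plane"): (30.0, "mm"),
}


def within_reported_limit(region, motion_type, amplitude):
    """Return True if the amplitude stays below the reported limit."""
    limit, _unit = REPORTED_LIMITS[(region, motion_type)]
    return abs(amplitude) < limit


print(within_reported_limit("head", "translation_in_plane", 15.0))
print(within_reported_limit("head", "rotation_through_plane", 45.0))
```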

Conclusion

Retrospective motion correction with MoCo MedGAN is feasible, and data consistency to the input can be fulfilled if realistic motion patterns occur.

Acknowledgements

No acknowledgement found.

References

1. Cheng JY, Zhang T, Ruangwattanapaisarn N, Alley MT, Uecker M, Pauly JM, Lustig M, Vasanawala SS. Free‐breathing pediatric MRI with nonrigid motion correction and acceleration. Journal of Magnetic Resonance Imaging 2015;42(2):407-420.

2. Cruz G, Atkinson D, Henningsson M, Botnar RM, Prieto C. Highly efficient nonrigid motion‐corrected 3D whole‐heart coronary vessel wall imaging. Magnetic Resonance in Medicine 2017;77(5):1894-1908.

3. Henningsson M, Koken P, Stehning C, Razavi R, Prieto C, Botnar RM. Whole‐heart coronary MR angiography with 2D self‐navigated image reconstruction. Magnetic Resonance in Medicine 2012;67(2):437-445.

4. Küstner T, Würslin C, Schwartz M, Martirosian P, Gatidis S, Brendle C, Seith F, Schick F, Schwenzer NF, Yang B. Self‐navigated 4D cartesian imaging of periodic motion in the body trunk using partial k‐space compressed sensing. Magnetic Resonance in Medicine 2017;78(2):632-644.

5. Maclaren J, Herbst M, Speck O, Zaitsev M. Prospective motion correction in brain imaging: a review. Magnetic Resonance in Medicine 2013;69(3):621-636.

6. Prieto C, Doneva M, Usman M, Henningsson M, Greil G, Schaeffter T, Botnar RM. Highly efficient respiratory motion compensated free‐breathing coronary MRA using golden‐step Cartesian acquisition. Journal of Magnetic Resonance Imaging 2015;41(3):738-746.

7. Skare S, Hartwig A, Martensson M, Avventi E, Engstrom M. Properties of a 2D fat navigator for prospective image domain correction of nodding motion in brain MRI. Magnetic Resonance in Medicine 2015;73(3):1110-1119.

8. Speck O, Hennig J, Zaitsev M. Prospective real-time slice-by-slice motion correction for fMRI in freely moving subjects. Magnetic Resonance Materials in Physics, Biology and Medicine 2006;19(2):55.

9. Wallace TE, Afacan O, Waszak M, Kober T, Warfield SK. Head motion measurement and correction using FID navigators. Magnetic Resonance in Medicine 2019;81(1):258-274.

10. Zaitsev M, Maclaren J, Herbst M. Motion artifacts in MRI: A complex problem with many partial solutions. Journal of Magnetic Resonance Imaging 2015;42(4):887-901.

11. Atkinson D, Hill DL, Stoyle PN, Summers PE, Keevil SF. Automatic correction of motion artifacts in magnetic resonance images using an entropy focus criterion. IEEE Transactions on Medical Imaging 1997;16(6):903-910.

12. Batchelor P, Atkinson D, Irarrazaval P, Hill D, Hajnal J, Larkman D. Matrix description of general motion correction applied to multishot images. Magnetic Resonance in Medicine 2005;54(5):1273-1280.

13. Odille F, Vuissoz PA, Marie PY, Felblinger J. Generalized reconstruction by inversion of coupled systems (GRICS) applied to free‐breathing MRI. Magnetic Resonance in Medicine 2008;60(1):146-157.

14. Küstner T, Armanious K, Yang J, Yang B, Schick F, Gatidis S. Retrospective correction of motion‐affected MR images using deep learning frameworks. Magnetic Resonance in Medicine 2019.

15. Armanious K, Küstner T, Nikolaou K, Gatidis S, Yang B. Retrospective correction of Rigid and Non-Rigid MR motion artifacts using GANs. IEEE International Symposium on Biomedical Imaging (ISBI); 2019. p 1550-1554.

16. Armanious K, Yang C, Fischer M, Küstner T, Nikolaou K, Gatidis S, Yang B. MedGAN: Medical Image Translation using GANs. arXiv preprint arXiv:1806.06397 2018.

17. Küstner T, Mo K, Yang B, Schick F, Gatidis S, Armanious K. Retrospective deep learning based motion correction from complex-valued imaging data. Proceedings of the European Society for Magnetic Resonance in Medicine (ESMRMB); 2019.

18. Küstner T, Yang B, Schick F, Gatidis S, Armanious K. Retrospective motion correction using deep learning. Proceedings of the International Society for Magnetic Resonance in Medicine (ISMRM); 2019.

19. Küstner T, Jandt M, Liebgott A, Mauch L, Martirosian P, Bamberg F, Nikolaou K, Gatidis S, Schick F, Yang B. Automatic Motion Artifact Detection for Whole-Body Magnetic Resonance Imaging. Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP); 2018.

20. Küstner T, Jandt M, Liebgott A, Mauch L, Martirosian P, Bamberg F, Nikolaou K, Gatidis S, Yang B, Schick F. Motion artifact quantification and localization for whole-body MRI. Proceedings of the International Society for Magnetic Resonance in Medicine (ISMRM); 2018.

21. Küstner T, Liebgott A, Mauch L, Martirosian P, Bamberg F, Nikolaou K, Yang B, Schick F, Gatidis S. Automated reference-free detection of motion artifacts in magnetic resonance images. Magnetic Resonance Materials in Physics, Biology and Medicine 2018;31(2):243-256.

22. Hsieh C, Chiu Y, Shen Y, Chu T, Huang Y. A UWB Radar Signal Processing Platform for Real-Time Human Respiratory Feature Extraction Based on Four-Segment Linear Waveform Model. IEEE Transactions on Biomedical Circuits and Systems 2016;10(1):219-230.

Figures

Fig. 1: A) Proposed MoCo MedGAN: Retrospective motion correction with a generative adversarial network which is trained on pairs of motion-free and motion-affected images. Training is controlled by adversarial, data consistency, perceptual and style transfer loss. B) Proposed motion detection network to infer motion-affected areas and to provide a confidence-check of the motion correction.

Fig. 2: Motion correction and detection in two healthy subjects with real mild and strong motion (left-right head tilting) in the head. Motion correction and detection networks are tested with motion-free and motion-affected input images. Respective artifact probability maps are shown as overlay on the motion-affected image. Motion-affected structures in the mild motion case are marked by arrows.

Fig. 3: Motion correction and detection in three patients with real non-rigid respiratory motion. Motion-free, motion-affected (respiration) and motion-corrected images are shown as well as the artifact probability map overlaid on the motion-affected image. Motion correction and detection networks are tested with motion-affected input images. Arrows mark pathologies which are impaired by motion.

Fig. 4: Motion correction in two healthy subjects for simulated motion of a) random head translation (left-right in-plane), b) random head translation (left-right in-plane + superior-inferior through-plane), c) rotation (out-of-plane rotation along longitudinal z-axis) and d) continuous respiration following modified raised cosine waveform (MRCW) (through-plane displacement). The motion-free image is disturbed by motion with different strengths: a, b) translation, c) rotation, d) MRCW amplitude. Motion-affected (MA) and motion-corrected (MC) images are shown.

Table 1: MR acquisition parameters of training and test subjects.