2799

A two-stage convolutional neural network for meniscus segmentation and tear classification

1 Department of Radiology, The Third Affiliated Hospital of Southern Medical University, Guangzhou, China; 2 12Sigma Technologies, San Diego, CA, United States

Synopsis

Artificial intelligence for interpreting meniscal tears on MRI could help prioritize high-risk patients and assist clinicians in making diagnoses. In this study, we propose a two-stage, end-to-end convolutional neural network, with Mask R-CNN as the backbone for object detection and ResNet for classification, to automatically detect meniscal tears on MRI exams. With a training dataset of 507 MR images and a validation dataset of 69 MR images, meniscus detection achieves a recall of 0.95 at 1 false positive per image, and tear classification achieves an area under the ROC curve (AUC) of 0.99.

Introduction

Magnetic resonance (MR) imaging is currently the modality of choice for detecting meniscal tears and planning subsequent treatment1. However, MRI interpretation is time-consuming and subject to misdiagnosis and variability, which can delay treatment2. The increasing use of MRI has several downstream effects for a radiology department or private practice, including a greater need for operational efficiency while maintaining high diagnostic accuracy and report quality. An artificial-intelligence-based system for interpreting meniscal tears on MRI could prioritize high-risk patients, assist clinicians in making accurate diagnoses, and reduce the risk of missed or delayed diagnosis. Convolutional neural networks, which automatically learn layers of features, are well suited to modeling the complex relationship between medical images and their interpretations3. In this study, we developed a two-stage convolutional neural network composed of Mask R-CNN4 and ResNet5 to detect meniscal tears on MRI exams.

Methods

This study was approved by the ethics committee of the Third Affiliated Hospital of Southern Medical University. Twenty patients with meniscal tears (25 men and 34 women; age range, 9-64 years) and 20 cases with normal menisci (25 men and 28 women; age range, 11-47 years), examined between November 2016 and July 2019, were included. The training dataset consisted of 507 knee MR images and the validation dataset of 69 images. All images were acquired on a 3T Philips Achieva scanner (Philips Healthcare, the Netherlands) with an 8-channel knee coil. The dataset consisted of proton-density-weighted SPAIR (PDW_SPAIR) images of the left or right knee, with slices passing through the anterior and posterior horns of the medial or lateral meniscus, stored in DICOM format. Scan parameters were TR = 3000 ms, TE = 35 ms, slice thickness = 3 mm, and matrix = 640 × 640. Using an in-house web-based labeling tool built on Cornerstone.js, three radiologists delineated the meniscus region and labeled whether a tear was present according to unified criteria.

Because the objective is to determine whether a severe tear is present in the meniscus, we adopted the basic diagnostic workflow of a radiologist: first detect the meniscus region, then classify whether a tear appears within that region.
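The detect-then-classify workflow can be sketched as a simple control flow. This is a minimal illustration, not the authors' code: `detect` is a hypothetical callable standing in for the Mask R-CNN stage, `classify_tear` is a hypothetical callable standing in for the ResNet34 stage, and the 0.5 detection threshold is an assumption. The stub detector reuses the four confidence scores from the detection example described in the Results.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Image = List[List[float]]
Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), pixel coordinates


@dataclass
class Detection:
    score: float             # stage-1 confidence that the region is meniscus
    box: Box
    mask: List[List[int]]    # binary meniscus mask, same size as the image


@dataclass
class Finding:
    box: Box
    detection_score: float
    tear_probability: float  # stage-2 output


def two_stage_inference(
    image: Image,
    detect: Callable[[Image], Sequence[Detection]],      # hypothetical stage 1
    classify_tear: Callable[[Image, Detection], float],  # hypothetical stage 2
    detection_threshold: float = 0.5,                    # assumed cut-off
) -> List[Finding]:
    """Locate menisci first, then score only the retained regions for a tear."""
    findings = []
    for det in detect(image):
        if det.score < detection_threshold:
            continue  # low-confidence regions never reach the classifier
        findings.append(Finding(det.box, det.score, classify_tear(image, det)))
    return findings


# Usage with hypothetical stubs; the scores mirror the detection example in the Results.
def stub_detect(image: Image) -> List[Detection]:
    empty = [[0] * len(image[0]) for _ in image]
    return [Detection(s, (0, 0, 1, 1), empty) for s in (0.97, 0.98, 0.07, 0.10)]


findings = two_stage_inference([[0.0] * 4 for _ in range(4)], stub_detect, lambda img, det: 0.5)
```

Only the two high-confidence regions are passed to the classifier, which is the point of ordering the stages this way: the second network never has to reason about background.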

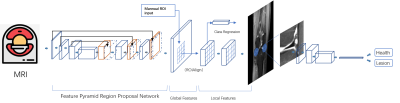

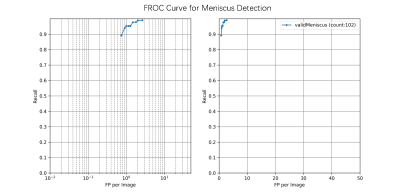

To build an end-to-end algorithm for detecting and classifying the meniscus, we propose a two-stage CNN. The first stage focuses on accurately detecting the meniscus region, which is a typical object-detection task; Mask R-CNN was used as the backbone for this stage. A region proposal network built on a Feature Pyramid Network (FPN) extracts category-independent regions of interest (ROIs) from the input image, ROIAlign then extracts the features of each ROI from the feature map, and a mask head together with classification and bounding-box regression heads outputs, for each detected object, an accurate binary mask and its most probable class. Free-response ROC (FROC) analysis was used to evaluate the trained detection network.
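FROC analysis plots detection recall against the average number of false positives per image as the score threshold is lowered. The sketch below shows one common way to compute it; it assumes greedy, score-ordered matching of predicted boxes to ground-truth meniscus boxes at IoU ≥ 0.5, a rule the study does not state, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def froc_points(
    detections: Sequence[Sequence[Tuple[float, Box]]],  # per image: (score, box)
    ground_truth: Sequence[Sequence[Box]],              # per image: true menisci
    iou_threshold: float = 0.5,                         # assumed matching rule
) -> List[Tuple[float, float]]:
    """Return (false positives per image, recall) as the threshold is lowered."""
    labelled = []  # (score, is_true_positive) over the whole dataset
    for dets, gts in zip(detections, ground_truth):
        matched = set()
        # Greedy matching in score order: each true meniscus can be hit only once.
        for score, box in sorted(dets, key=lambda d: d[0], reverse=True):
            best_j, best_overlap = None, iou_threshold
            for j, gt in enumerate(gts):
                overlap = iou(box, gt)
                if j not in matched and overlap >= best_overlap:
                    best_j, best_overlap = j, overlap
            if best_j is not None:
                matched.add(best_j)
            labelled.append((score, best_j is not None))

    n_images = len(ground_truth)
    n_truth = sum(len(g) for g in ground_truth)
    points, tp, fp = [], 0, 0
    for _, is_tp in sorted(labelled, key=lambda t: t[0], reverse=True):
        tp, fp = tp + is_tp, fp + (not is_tp)
        points.append((fp / n_images, tp / n_truth))
    return points


def recall_at_fp_rate(points: List[Tuple[float, float]], fp_rate: float) -> float:
    """Highest recall reached without exceeding `fp_rate` false positives per image."""
    return max((r for f, r in points if f <= fp_rate), default=0.0)


# Toy example: two images, two true menisci each.
gts = [[(0, 0, 10, 10), (20, 20, 30, 30)], [(0, 0, 10, 10), (20, 20, 30, 30)]]
dets = [
    [(0.98, (21, 21, 30, 30)), (0.97, (0, 0, 10, 10)), (0.10, (40, 0, 50, 10)), (0.07, (50, 50, 60, 60))],
    [(0.90, (1, 1, 10, 10)), (0.60, (60, 60, 70, 70))],
]
points = froc_points(dets, gts)
```

`recall_at_fp_rate(points, 1.0)` corresponds to the "recall at 1 FP per image" operating point; in this toy example it is 0.75 because one true meniscus in the second image is never detected.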

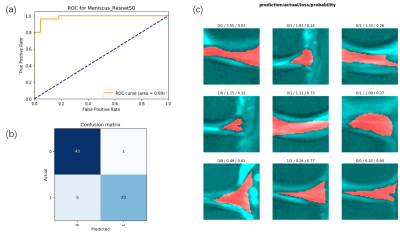

The second stage uses ResNet34 as the backbone to classify whether the detected meniscus region contains signs of a tear. To focus the network's attention on the meniscus, a 64 × 64 patch centered on the meniscus is cropped, and the meniscus mask is concatenated to the cropped patch as an additional channel to form the network input. ROC curves and confusion matrices were used to evaluate performance on the validation set.
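The second-stage input and the two validation metrics can be sketched in plain Python. Several details are assumptions not given in the study: the patch is centered on the mask centroid, pixels outside the image are zero-padded, and the channels are stacked as [image, mask]. The AUC is computed as the Mann-Whitney probability that a torn case outscores a normal one, and the confusion matrix uses a hypothetical 0.5 decision threshold.

```python
from typing import Dict, List, Sequence, Tuple

Grid = List[List[float]]


def mask_centroid(mask: Grid) -> Tuple[int, int]:
    """Row/column centroid of the nonzero mask pixels (assumed patch center)."""
    ys = [y for y, row in enumerate(mask) for v in row if v]
    xs = [x for row in mask for x, v in enumerate(row) if v]
    if not ys:
        raise ValueError("empty meniscus mask")
    return round(sum(ys) / len(ys)), round(sum(xs) / len(xs))


def crop_centered(grid: Grid, cy: int, cx: int, size: int = 64, fill: float = 0.0) -> Grid:
    """size x size window centered at (cy, cx); out-of-image pixels get `fill`."""
    h, w, half = len(grid), len(grid[0]), size // 2
    return [
        [grid[y][x] if 0 <= y < h and 0 <= x < w else fill
         for x in range(cx - half, cx - half + size)]
        for y in range(cy - half, cy - half + size)
    ]


def build_classifier_input(image: Grid, mask: Grid, size: int = 64) -> List[Grid]:
    """Two-channel input: [image patch, mask patch], both centered on the meniscus."""
    cy, cx = mask_centroid(mask)
    return [crop_centered(image, cy, cx, size), crop_centered(mask, cy, cx, size)]


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as P(score of a torn case > score of a normal case); ties count 1/2."""
    pos = [s for lab, s in zip(labels, scores) if lab == 1]
    neg = [s for lab, s in zip(labels, scores) if lab == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def confusion_matrix(labels: Sequence[int], scores: Sequence[float],
                     threshold: float = 0.5) -> Dict[str, int]:
    """Counts at a fixed (assumed) decision threshold; label 1 means torn."""
    counts = {"TP": 0, "FP": 0, "TN": 0, "FN": 0}
    for label, score in zip(labels, scores):
        predicted = score >= threshold
        key = ("T" if predicted == bool(label) else "F") + ("P" if predicted else "N")
        counts[key] += 1
    return counts


# Toy 100 x 100 image whose pixel value encodes its position, plus a 9 x 9 mask blob.
image = [[float(y * 100 + x) for x in range(100)] for y in range(100)]
mask = [[1 if 40 <= y <= 48 and 60 <= x <= 68 else 0 for x in range(100)] for y in range(100)]
patch = build_classifier_input(image, mask)
```

Because this input has two channels, a standard three-channel ResNet34 would need its first convolution adapted to accept it; the abstract does not say how this was handled.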

Results

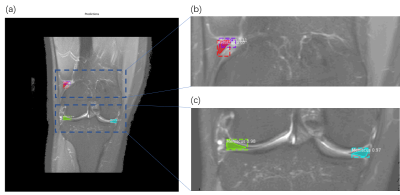

A typical meniscus detection example is shown in Figure 2. Figure 2(a) shows that four suspected meniscus regions were detected; however, only the two detections shown in Figure 2(b), with probabilities of 0.97 and 0.98, are true positives, while the two detections located at the front corner of the meniscus, with probabilities of 0.07 and 0.10, are correctly identified as negatives given their low scores. Figure 3 shows the FROC curve computed over the entire validation dataset; the detection recall is 0.95 at 1 false positive (FP) per image. Figure 4 shows the results of the tear classification network. As shown in Figure 4(a), an AUC of 0.99 was obtained on the validation dataset, and the confusion matrix shows only 1 FP and 5 false negatives (FN) among the 69 validation images. All misclassified images are shown in Figure 4(c).

Conclusion and Discussion

In this study, we proposed a two-stage convolutional neural network that automatically detects the meniscus and classifies torn menisci versus healthy ones. A 2D Mask R-CNN served as the backbone of the detection network, and ResNet34 was used for classification. The results show a detection recall of 0.95 at 1 FP per image and a classification AUC of 0.99. These results are promising for applying a two-stage, end-to-end CNN to the automatic detection of meniscal tears. In future work, we will study additional knee disorders using a modified network architecture with multitask capabilities.

Acknowledgements

The authors acknowledge grant support from the National Natural Science Foundation of China (Grant No. 81871510).

References

1. Nguyen JC, De Smet AA, Graf BK, et al: MR imaging–based diagnosis and classification of meniscal tears. Radiographics 34:981-999, 2014

2. Bolog NV, Andreisek G: Reporting knee meniscal tears: technical aspects, typical pitfalls and how to avoid them. Insights into Imaging 7:385-398, 2016

3. Roblot V, Giret Y, Bou Antoun M, Morillot C, Chassin X, Cotten A, Zerbib J, Fournier L: Artificial intelligence to diagnose meniscus tears on MRI. Diagnostic and Interventional Imaging 100(4):243-249, 2019

4. He K, Gkioxari G, Dollár P, Girshick R: Mask R-CNN. arXiv:1703.06870, 2018

5. He K, Zhang X, Ren S, Sun J: Deep residual learning for image recognition. arXiv:1512.03385, 2015

6. Rosas HG. Magnetic resonance imaging of the meniscus. Magn Reson Imaging Clin N Am 22:493-516, 2014

Figures