2594

Automatic Bone Segmentation on Whole body Diffusion-Weighted MRI using Deep Learning

Asha K Kumaraswamy1,2, Punith B Venkategowda1, Chandrashekar M. Patil2, and Robert Grimm3

1Siemens Healthcare Private Limited, Bengaluru, India, 2Vidyavardhaka College of Engineering, Mysuru, India, 3MR Application Predevelopment, Siemens Healthcare, Erlangen, Germany

Synopsis

Whole-body diffusion-weighted MR imaging (DWI) is a promising technique for evaluating bone metastases, e.g. in prostate and breast cancer. Segmenting and quantifying tumor burden and treatment response based on DWI and the corresponding apparent diffusion coefficient (ADC) maps has been proposed previously. However, treatment effects may alter both the signal intensities and the resulting segmentation, so a more reproducible approach is preferable for segmenting and analyzing consistent regions of interest, including in follow-up examinations. We present a deep learning method for automatic bone segmentation based on either T1-weighted Dixon imaging or DWI. The results demonstrate the feasibility of the approach, with a substantial reduction in computation time.

Introduction

Diffusion-weighted MRI (DWI) is extensively used in oncology for disease evaluation. Whole-body DWI can be used for tumor staging, for assessing bone metastases from breast, prostate, lung, and thyroid cancer and melanoma, and for monitoring treatment response1. Segmenting bony structures in whole-body DWI supports fast and easy reading of MR images when screening for bone metastases2,3. In previous work, ADC histograms were analyzed by segmenting bone tumors based on high DWI signal intensity. However, the DWI signal intensity may change with treatment response, so a more reproducible way of segmenting the bone is desired. One common approach is to segment the bones automatically on T1-weighted Dixon, zero echo-time (ZTE), or ultrashort echo-time (UTE) images. For ADC evaluation, however, these methods may suffer from anatomical mismatch caused by imperfect registration between the different image contrasts. This work instead uses deep learning to segment the bone directly from diffusion-weighted images. In the proposed method, a convolutional neural network (CNN) is trained on weak labels generated by a state-of-the-art template-based bone segmentation algorithm applied to T1-weighted Dixon images4. We compared two variants: training on T1-weighted 3D Dixon water images and training on DWI. Evaluated on 12 whole-body MRI scans, the method achieved mean Dice scores of 0.866 and 0.812 on water and diffusion images, respectively.

Materials and Methods

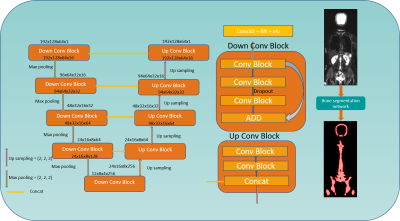

Figure 1 gives an overview of the 3D CNN-based model used for bone segmentation. For training, whole-body diffusion-weighted images at b = 50 s/mm² and Dixon water images from 68 subjects were used, acquired on a 3 T MRI scanner (Biograph mMR, Siemens Healthcare, Erlangen, Germany) at a single institution. Data from 12 additional subjects were used for evaluation. The reference bone segmentation for each scan was generated automatically with prototype software (MR Bone Scan; Siemens Healthcare, Erlangen, Germany), providing “weak labels” for training. This application applies a template-based algorithm to T1-weighted Dixon images to extract a bone mask4; it is normally used for estimating positron emission tomography (PET) attenuation correction maps. Each whole-body scan was converted to axial orientation and reformatted to 192x128x384 voxels while maintaining the aspect ratio. Each volume was then divided into 6 training slabs of 192x128x64 voxels. Each image's intensities were normalized to zero mean and unit standard deviation. Data augmentation was performed by random rotations of up to ±15° about the X, Y, and Z axes and random translations along the X and Y directions. A total of 2096 3D volumes, comprising both DWI and water images, were used for training. The 3D CNN takes these resized volumes as input. Each Conv Block in Figure 1 performs a 3D convolution followed by batch normalization and an exponential linear unit (ELU) activation with alpha = 1.0. Weights were initialized with the Xavier normal initializer5. The network was implemented in Keras with a TensorFlow backend and trained on a DGX-1 using two 16 GB Tesla V100 GPUs, with a batch size of 2 and a learning rate of 10⁻⁴. The Dice coefficient between the network output and the target mask was used as the loss function. The network was trained for 200 epochs.

Results
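The slab preparation described in the Methods can be sketched in NumPy. This is a minimal illustration, not the authors' code: it assumes the scan has already been reformatted to 192x128x384 with the axial (Z) axis last, that z-score normalization is applied to the whole volume before slicing (the abstract does not say whether it is per volume or per slab), and the helper names `zscore` and `split_into_slabs` are hypothetical.

```python
import numpy as np

def zscore(volume: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Normalize intensities to zero mean and unit standard deviation."""
    mean = volume.mean()
    std = volume.std()
    # eps guards against division by zero on a constant (e.g. empty) volume
    return (volume - mean) / (std + eps)

def split_into_slabs(volume: np.ndarray, slab_depth: int = 64) -> list[np.ndarray]:
    """Cut an (X, Y, Z) volume into non-overlapping slabs along Z (assumed axial axis)."""
    depth = volume.shape[2]
    if depth % slab_depth != 0:
        raise ValueError(f"depth {depth} is not a multiple of {slab_depth}")
    return [volume[:, :, z:z + slab_depth] for z in range(0, depth, slab_depth)]

# Synthetic stand-in for one reformatted whole-body scan (192 x 128 x 384)
rng = np.random.default_rng(0)
scan = rng.normal(loc=300.0, scale=50.0, size=(192, 128, 384)).astype(np.float32)

slabs = split_into_slabs(zscore(scan))
print(len(slabs), slabs[0].shape)  # 6 (192, 128, 64)
```

Because 384 / 64 = 6, each whole-body volume yields exactly six slabs, matching the count stated above; augmentation (rotations and translations) would then be applied on top of these slabs.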

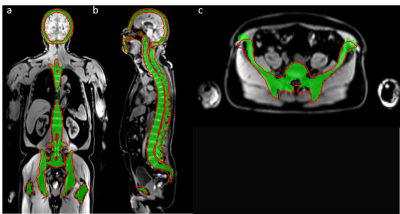

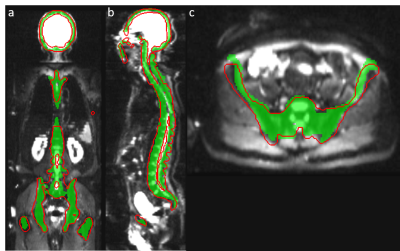

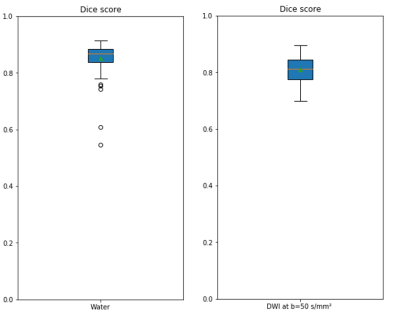

Visual assessment of the bone masks showed strong similarity between the CNN and template-based methods (Figure 2 and Figure 3). The overall segmentation quality and the shape of the bone regions were well preserved with both methods. On the 12 whole-body test scans, the mean Dice score was 0.812 on DWI and 0.866 on Dixon water images (Figure 4). The reference template-based algorithm took 5 to 6 minutes on a CPU, whereas the CNN approach took 72 to 80 seconds. Run on a GeForce GTX 1080 Ti GPU, the CNN took less than 2 seconds.

Discussion and Conclusion
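The Dice score reported above measures voxel overlap between a predicted mask A and the reference mask B: Dice = 2|A ∩ B| / (|A| + |B|). A minimal NumPy sketch follows, assuming binary masks of equal shape and a 0.5 threshold on the sigmoid output (the threshold is an assumption; the abstract does not state it). The names `dice_score` and `soft_dice_loss` are hypothetical, and the soft variant (1 minus a Dice computed on probabilities, with an assumed smoothing term) is one common way to turn Dice into a training loss; the exact form used by the authors is not specified.

```python
import numpy as np

def dice_score(pred: np.ndarray, ref: np.ndarray) -> float:
    """Dice = 2|A ∩ B| / (|A| + |B|) for binary masks."""
    pred = pred.astype(bool)
    ref = ref.astype(bool)
    denom = pred.sum() + ref.sum()
    if denom == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return 2.0 * np.logical_and(pred, ref).sum() / denom

def soft_dice_loss(prob: np.ndarray, ref: np.ndarray, smooth: float = 1.0) -> float:
    """1 - soft Dice on probabilities; `smooth` (assumed value) avoids 0/0."""
    ref = ref.astype(np.float64)
    intersection = (prob * ref).sum()
    return 1.0 - (2.0 * intersection + smooth) / (prob.sum() + ref.sum() + smooth)

# Toy 1-D example: reference has 4 bone voxels, prediction has 4, overlap is 3
ref = np.array([0, 1, 1, 1, 1, 0, 0, 0])
prob = np.array([0.1, 0.9, 0.8, 0.7, 0.2, 0.6, 0.0, 0.0])  # sigmoid outputs
pred = prob > 0.5  # assumed threshold
print(dice_score(pred, ref))  # 0.75
```

In the toy case, 2 × 3 / (4 + 4) = 0.75; the per-scan values averaged in Figure 4 would be computed the same way over full 3D masks.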

This work presents a novel approach for bone segmentation using a CNN trained on results previously segmented by a registration-based method. The results show that training a model on weak labels generated by a classical template-based method yields comparable segmentations in much less time. Owing to the variability of the training data and the generalization ability of the network, the model also achieves good segmentation accuracy on DWI (mean Dice 0.812). Results could be further improved, and the dependency on the weak labels reduced, by including manually corrected labels in training. With the proposed approach, bone segmentation is nearly 4 times faster. The method can segment bone directly from DWI, and the resulting masks can be used to analyze the corresponding ADC maps for assessing tumor response.

Acknowledgements
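The speed-up follows from the timings in the Results. Comparing the template-based runtime of 5 to 6 minutes with the CNN's 72 to 80 seconds (the bounds are paired here to give the extreme ratios), and with the sub-2-second GPU inference:

$$
\frac{5 \times 60\,\mathrm{s}}{80\,\mathrm{s}} = 3.75,
\qquad
\frac{6 \times 60\,\mathrm{s}}{72\,\mathrm{s}} = 5.0,
\qquad
\frac{5 \times 60\,\mathrm{s}}{2\,\mathrm{s}} = 150
$$

So the CNN is roughly 3.75 to 5 times faster in the 72 to 80 second setting, and more than 150 times faster when run on the GPU.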

All imaging data are courtesy of Dr. Markus Lentschig, ZEMODI, Bremen.

References

- Stecco A, Trisoglio A, Soligo E, Berardo S, Sukhovei L, Carriero A. Whole-body MRI with diffusion-weighted imaging in bone metastases: a narrative review. Diagnostics. 2018;8(3):45.

- Blackledge MD, et al. Assessment of treatment response by total tumor volume and global apparent diffusion coefficient using diffusion-weighted MRI in patients with metastatic bone disease: a feasibility study. PLoS One. 2014;9(4):e91779.

- Padhani AR, Lecouvet FE, Tunariu N, Koh DM, De Keyzer F, Collins DJ, ..., Schlemmer HP. Rationale for modernising imaging in advanced prostate cancer. Eur Urol Focus. 2017;3(2-3):223-239.

- Paulus DH, Quick HH, Geppert C, Fenchel M, Zhan Y, Hermosillo G, Faul D, Boada F, Friedman KP, Koesters T. Whole-body PET/MR imaging: quantitative evaluation of a novel model-based MR attenuation correction method including bone. J Nucl Med. 2015;56(7):1061-1066.

- Glorot X, Bengio Y. Understanding the difficulty of training deep feedforward neural networks. In: Proceedings of the International Conference on Artificial Intelligence and Statistics (AISTATS). 2010:249-256.

Figures

Figure 1: 3D CNN architecture taking a 192x128x64 volume as input and applying conv3d, batch normalization, and ELU in all layers. The last layer uses a sigmoid activation, and the network is trained with the Adam optimizer.
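A Conv Block as described in the Methods and in Figure 1 (3D convolution, batch normalization, ELU with alpha = 1.0, Xavier/Glorot normal initialization), together with a sigmoid output layer, Adam at a learning rate of 10⁻⁴, and a Dice-based loss, can be sketched in Keras as below. This is a toy illustration under stated assumptions: the 3x3x3 kernel size, the filter counts, and the single pooling/upsampling stage are hypothetical, because the full topology of Figure 1 is not given in the text, and `conv_block`, `dice_loss`, and `build_toy_model` are hypothetical names.

```python
import tensorflow as tf

layers = tf.keras.layers

def conv_block(x, filters: int):
    """Conv Block: 3D convolution -> batch normalization -> ELU (alpha = 1.0)."""
    x = layers.Conv3D(filters, kernel_size=3, padding="same",
                      kernel_initializer="glorot_normal")(x)  # Xavier normal init
    x = layers.BatchNormalization()(x)
    return layers.Activation("elu")(x)  # Keras' elu defaults to alpha = 1.0

def dice_loss(y_true, y_pred, smooth=1.0):
    """1 - soft Dice over the whole batch; the smoothing term is an assumption."""
    y_true = tf.cast(y_true, y_pred.dtype)
    intersection = tf.reduce_sum(y_true * y_pred)
    total = tf.reduce_sum(y_true) + tf.reduce_sum(y_pred)
    return 1.0 - (2.0 * intersection + smooth) / (total + smooth)

def build_toy_model(input_shape=(192, 128, 64, 1)):
    """Hypothetical encoder-decoder; NOT the exact topology of Figure 1."""
    inputs = tf.keras.Input(shape=input_shape)
    x = conv_block(inputs, 8)
    x = layers.MaxPooling3D(pool_size=2)(x)   # 96 x 64 x 32
    x = conv_block(x, 16)
    x = layers.UpSampling3D(size=2)(x)        # back to 192 x 128 x 64
    x = conv_block(x, 8)
    # 1x1x1 convolution with sigmoid gives a per-voxel bone probability
    outputs = layers.Conv3D(1, kernel_size=1, activation="sigmoid")(x)
    model = tf.keras.Model(inputs, outputs)
    model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=1e-4),
                  loss=dice_loss)
    return model

model = build_toy_model()
print(model.output_shape)  # (None, 192, 128, 64, 1)
```

Keeping `padding="same"` and pairing each pooling step with an upsampling step preserves the 192x128x64 slab size, so the sigmoid output can be compared voxel by voxel with the weak-label mask.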

Figure 2: Result of CNN bone segmentation trained on water images (red contour), with the template-based segmentation on T1-weighted images (green mask) as reference.

Figure 3: Result of CNN bone segmentation trained on DWI (red contour), with the template-based segmentation on T1-weighted images (green mask) as reference.

Figure 4: Dice scores on the water and diffusion test images, with mean values of 0.866 and 0.812, respectively.