2392

Prostate MRI Image Quality Control using Deep Learning

Jing Zhang1, Yang Song1, Ying Hou2, Yu-dong Zhang2, Xu Yan3, Yefeng Yao1, and Guang Yang1

1Shanghai Key Laboratory of Magnetic Resonance, Department of Physics, East China Normal University, Shanghai, China, 2Department of Radiology, The First Affiliated Hospital with Nanjing Medical University, Nanjing, China, 3MR Scientific Marketing, Siemens Healthcare, Shanghai, China

Synopsis

Deep learning-based computer-aided diagnosis (CAD) has been proposed to detect and classify prostate cancer lesions in multi-parametric magnetic resonance imaging (mp-MRI). CAD systems require that their input images meet certain quality standards. In this work, we propose a ResNet50-based model to filter out images that are not suitable as input to a subsequent lesion detection network. Taking unqualified images as positive cases, we obtained an area under the ROC curve (AUC) of 0.8526 in the test cohort. Such a filter can help improve the performance of the detection model and increases interpretability by rejecting unqualified images with a reason instead of returning wrong results.

INTRODUCTION

Prostate cancer (PCa) is one of the most common cancers in the world, and the number of patients has increased significantly in recent years1. Multi-parametric magnetic resonance imaging (mp-MRI) is widely used to diagnose PCa4. Computer-aided diagnosis systems have been developed to detect and classify lesions in prostate mp-MRI images2,3,4. All of these detection or classification networks place implicit requirements on the quality of the input images. For example, excessive deformation in DWI images, over-sized lesions that make the gland difficult to identify, an unsuitable FOV, poor image SNR, or motion artifacts may degrade the performance of a typical detection or classification network. We therefore propose a ResNet50-based classification model5 to filter out unqualified images.

METHODS

Data set: 833 patients (mean age, 38.6 years; age range, 26–50 years) with prostate cancer were reviewed. Imaging comprised T2W (TSE, 0.3 × 0.3 × 4.2 mm³), low-b DWI (SSEP, 0.72 × 0.72 × 5.5 mm³, b = 0 s/mm²), high-b DWI (SSEP, 0.72 × 0.72 × 5.5 mm³, b = 1000 s/mm² or 800 s/mm²), and ADC map sequences acquired on Siemens 3T MR scanners at Jiangsu Province Hospital. A total of 987 volumes of interest (VOIs) were manually labeled on T2W images by experienced radiologists.

Data preprocessing and checking: DWI images and ADC maps were registered to the T2W images, then all images were labeled as either qualified or unqualified by two radiologists, A and B, with 5 and 2 years of experience, respectively. Images were labeled as qualified if they (1) showed the proper body part with adequate FOV, resolution, and SNR; (2) did not contain over-sized lesions that make the prostate difficult to identify; (3) were not heavily affected by artifacts; and (4) did not show excessively deformed DWI images. Where the two radiologists disagreed, the label of the more experienced radiologist A was used. In addition, we used the intersection of the labels of A and B for comparison with the single annotations of A and B.
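The labeling rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the abstract does not describe the software used, the function name `consolidate_labels` and the toy labels are hypothetical, labels are encoded as 1 = unqualified (positive) and 0 = qualified, and "intersection" is read here as the subset of images on which both radiologists agree.

```python
from typing import Dict, List

# Assumed encoding for this sketch: 1 = unqualified (positive), 0 = qualified.


def consolidate_labels(labels_a: List[int], labels_b: List[int]) -> Dict[str, list]:
    """Combine two readers' per-image labels.

    Returns the reference label for every image (reader A decides when the
    readers disagree) and the indices of images on which both readers agree.
    """
    if len(labels_a) != len(labels_b):
        raise ValueError("both readers must label the same images")
    reference, agreed_idx = [], []
    for i, (a, b) in enumerate(zip(labels_a, labels_b)):
        if a == b:
            agreed_idx.append(i)
        # On agreement a == b; on disagreement the more experienced reader A decides.
        reference.append(a)
    return {"reference": reference, "agreed_idx": agreed_idx}


# Toy labels for five images (made up for illustration).
reader_a = [1, 0, 0, 1, 0]
reader_b = [1, 1, 0, 0, 0]
result = consolidate_labels(reader_a, reader_b)
print(result["reference"])   # labels used when A's annotation is the reference
print(result["agreed_idx"])  # images on which A and B agree
```

Keeping the agreement indices separate makes it possible to evaluate a model on the agreed subset as well as against each single annotation.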

Model training and evaluation: Slices that intersect the VOIs, together with their neighboring slices, were selected and resized to 240 × 240 to build the qualification model. The selected images were split randomly into a training set (2556), a validation set (530), and a test set (561). The structure of ResNet50 is illustrated in Figure 2. We used the Adam optimizer (learning rate 0.0001) and a binary cross-entropy loss function. The receiver operating characteristic (ROC) curve was constructed by varying the probability threshold used to separate qualified and unqualified images. AUC was used to evaluate model performance in the training, validation, and test cohorts.
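As a minimal sketch of the ROC construction described above, the following pure-Python code sweeps a threshold over predicted probabilities and integrates the curve with the trapezoidal rule. The function names and the toy data are hypothetical, and the evaluation code actually used in this work is not described in the abstract. Unqualified images are treated as the positive class (label 1), and `scores` is assumed to hold the predicted probability of being unqualified.

```python
from typing import List, Tuple


def roc_points(y_true: List[int], scores: List[float]) -> Tuple[List[float], List[float]]:
    """Return (fpr, tpr) obtained by sweeping the decision threshold.

    An image is predicted positive (unqualified) when its score >= threshold.
    """
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("both classes must be present")
    fpr, tpr = [0.0], [0.0]  # threshold above every score: nothing predicted positive
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= t and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= t and y == 0)
        tpr.append(tp / n_pos)
        fpr.append(fp / n_neg)
    return fpr, tpr


def auc_trapezoid(fpr: List[float], tpr: List[float]) -> float:
    """Area under the ROC curve by the trapezoidal rule."""
    return sum(
        (fpr[i] - fpr[i - 1]) * (tpr[i] + tpr[i - 1]) / 2.0
        for i in range(1, len(fpr))
    )


# Toy example: four images with made-up scores.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
fpr, tpr = roc_points(y_true, scores)
print(auc_trapezoid(fpr, tpr))
```

Lowering the threshold one distinct score at a time moves the curve from (0, 0) to (1, 1), so each threshold corresponds to one candidate operating point.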

RESULTS

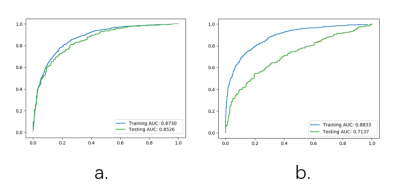

As shown in Figure 3, compared with the labels of radiologist A, the AUC values were 0.8730 and 0.8526 for the training and test cohorts, respectively. Compared with the labels of radiologist B, the AUC values were 0.8823 and 0.7137 for the training and test cohorts, respectively. Since the model was trained using radiologist A's labels, this result is understandable. More details about model performance are given in Table 2: with the operating point chosen by the Youden index, the model built from the annotations of radiologist A achieved the highest accuracy, 0.7843, with a sensitivity of 0.8251 and a specificity of 0.7646 in the test cohort.

DISCUSSION
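For reference, the operating-point selection mentioned in this abstract can be sketched as follows. The Youden index is J = sensitivity + specificity - 1, and the threshold that maximizes J is taken as the operating point. The second helper illustrates one possible alternative, choosing the most sensitive threshold that still keeps specificity above a floor so that few usable images are rejected. All function names, the specificity floor, and the toy data are hypothetical and are not taken from the original work; label 1 denotes an unqualified image.

```python
from typing import Dict, List, Optional, Tuple


def metrics_at(y_true: List[int], scores: List[float], threshold: float) -> Dict[str, float]:
    """Metrics when score >= threshold is called unqualified (1).

    Assumes both classes are present in y_true.
    """
    tp = fp = tn = fn = 0
    for y, s in zip(y_true, scores):
        pred = 1 if s >= threshold else 0
        if pred == 1 and y == 1:
            tp += 1
        elif pred == 1 and y == 0:
            fp += 1
        elif pred == 0 and y == 0:
            tn += 1
        else:
            fn += 1
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / len(y_true),
        # NPV is undefined when nothing is predicted negative.
        "npv": tn / (tn + fn) if (tn + fn) else float("nan"),
    }


def youden_threshold(y_true: List[int], scores: List[float]) -> Tuple[float, float]:
    """Return (threshold, J) maximizing J = sensitivity + specificity - 1."""
    best_t, best_j = None, float("-inf")
    for t in sorted(set(scores)):
        m = metrics_at(y_true, scores, t)
        j = m["sensitivity"] + m["specificity"] - 1.0
        if j > best_j:  # ties keep the lowest threshold
            best_t, best_j = t, j
    return best_t, best_j


def threshold_for_min_specificity(
    y_true: List[int], scores: List[float], min_spec: float
) -> Optional[float]:
    """Most sensitive threshold whose specificity is at least min_spec, or None."""
    best_t, best_sens = None, -1.0
    for t in sorted(set(scores)):
        m = metrics_at(y_true, scores, t)
        if m["specificity"] >= min_spec and m["sensitivity"] > best_sens:
            best_t, best_sens = t, m["sensitivity"]
    return best_t


# Toy example with made-up scores.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
t_youden, j = youden_threshold(y_true, scores)
print(t_youden, j, metrics_at(y_true, scores, t_youden))
print(threshold_for_min_specificity(y_true, scores, min_spec=0.9))
```

A specificity floor is only one way to express a preference for keeping eligible images; a cost-weighted criterion would be another.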

As the optimal model achieved an AUC of 0.8526 and an accuracy of 0.7843 in the test set, it can be used to filter out unqualified images before they are fed into a detection or classification network. Furthermore, the optimal model has a sensitivity of 0.8251 and an NPV of 0.9003, which means it is effective at identifying unqualified images. However, in a clinical environment, it is undesirable to prevent eligible images from being used by detection or classification networks, so a point on the ROC curve other than the one indicated by the Youden index might be preferable. We do not want to discard many images that could be used by the network, so the detection of positive cases may not be the most important criterion. In addition, the predicted probability of an image being qualified could signal to the detection network that the current image may be problematic and help it to optimize its prediction.

CONCLUSION

Quality control plays a key role in medical imaging, especially now that artificial intelligence has been introduced. Deep learning models can be used to differentiate qualified and unqualified mp-MRI images before they are used for prostate cancer detection and classification. The quality control model can provide better interpretability by rejecting unqualified images with a reason. It can also provide information that detection or classification networks can use to improve their results. We expect this kind of quality control network to work with different functional deep learning models in the future.

Acknowledgements

This project is supported by the National Natural Science Foundation of China (61731009, 81771816).

References

- Canadian Cancer Society, Prostate Cancer Statistics, 2015.

- Song Y, Zhang YD, Yan X, et al. Computer-aided diagnosis of prostate cancer using a deep convolutional neural network from multiparametric MRI. J Magn Reson Imaging. 2018 Dec;48(6):1570-1577.

- Harmon SA, Tuncer S, Sanford T, Choyke PL, Türkbey B. Artificial intelligence at the intersection of pathology and radiology in prostate cancer. Diagn Interv Radiol. 2019 May;25(3):183-188.

- Chen T, Li M, Gu Y, Zhang Y, et al. Prostate Cancer Differentiation and Aggressiveness: Assessment With a Radiomic-Based Model vs. PI-RADS v2. J Magn Reson Imaging. 2019 Mar;49(3):875-884.

- Stangelberger A, Waldert M, Djavan B. Prostate cancer in elderly men. Rev Urol. 2008;10(2):111-119.

Figures

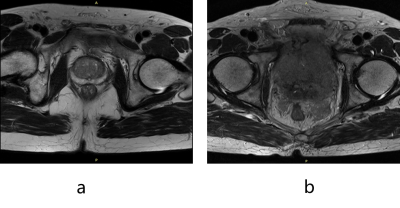

Figure 1. (a) A qualified image; (b) an unqualified image for a prostate-segmentation model. The structure of the prostate disappears in the image, so the image cannot be processed properly by a subsequent prostate-segmentation model.

Table 1. Labels of the two radiologists

Figure 2. Network architecture of ResNet50. Note that the shortcut connections make the network trainable despite its very deep structure.

Figure 3. ROC results. (a) Model with the labels of radiologist A. (b) Model with the labels of radiologist B.

Table 2. Performance of the model