2232

Tag Removal in Cardiac Tagged MRI using Edge-guided Adversarial Learning

1Department of Biomedical Engineering, University of Southern California, Los Angeles, CA, United States, 2Division of Cardiology, Children's Hospital Los Angeles, Los Angeles, CA, United States

Synopsis

Tag lines in cardiac tagged MRI pose great challenges to downstream analysis. Modeling tag removal as an image recovery problem, we propose EG-GAN, a GAN-based method constrained by an edge prior, which removes tags progressively without model collapse and outperforms conventional approaches.

Introduction

Tagged magnetic resonance imaging (tMRI) uses radiofrequency stimulation to presaturate tissue with tagging patterns, such as parallel lines or grids, in order to quantitatively assess tissue deformation, typically in the heart1. However, tagging poses great challenges to subsequent image processing and downstream analysis, such as myocardial segmentation2. Several tag removal approaches have therefore been proposed. Band-pass filtering3, an early technique, is easy to implement, but its performance depends heavily on filter design and parameter settings. In addition, because high-frequency information is lost, the results are overly smooth or blurry. To improve performance, a band-stop filtering approach4 was proposed to eliminate the harmonic peaks produced by the tags in the frequency domain, but its performance depends heavily on the pattern of the harmonic peaks, which shift during the cardiac cycle. Recently, neural networks have been applied increasingly often in image processing. In particular, generative adversarial networks (GANs)5 have shown considerable advantages in image restoration tasks6,7. In this work, we model tag removal as an image recovery problem by treating tag lines as "artifacts", and start from a GAN-based approach, the context encoder6, to generate tag-free images by recovering the corrupted area. We propose a multi-adversarial network that uses edges as a constraint, named edge-guided GAN (EG-GAN), which removes tag lines by progressively retrieving high-frequency edge information and low-frequency contrast information.

Methods

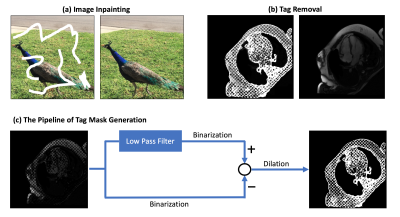

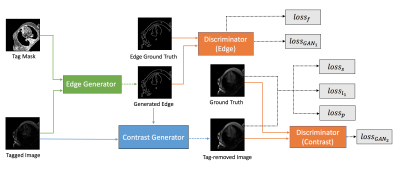

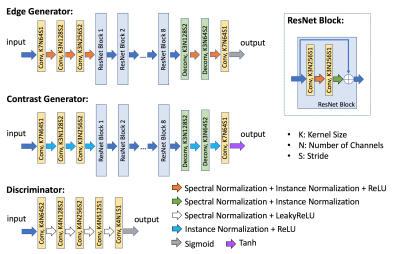

Data Representation and Pre-processing: Inspired by image inpainting6,8,9 in computer vision (fig. 1 a), which fills in a corrupted region using surrounding information, we model the tags in MRI as missing information that can be filled in using the adjacent untagged areas (fig. 1 b). To generate the mask of the tagging pattern, we first obtain a rough sketch of the tags by subtracting the binarized original tagged image from the binarized low-frequency content of the tagged image. We then perform morphological dilation to guarantee that the mask fully covers the tagged area. The pre-processing pipeline is shown in fig. 1 c.

Network Architecture and Parameters: As shown in fig. 2, the proposed EG-GAN consists of two steps. The first step connects the contours of cardiac tissue boundaries that are interrupted by the tagging grid. In the second step, the retrieved contours are filled in with contrast information from the untagged regions of the original tagged images. Both steps follow an adversarial model consisting of a generator and discriminator pair. The design details and parameters of each component of EG-GAN are illustrated in fig. 3. The two generators have a similar layout, modified from ResNet10. Spectral normalization (SN)11 and instance normalization12 are applied across all layers of the edge generator. The discriminators in both steps use the same parameters. In addition to the adversarial loss5, a feature-matching loss13 is applied in the edge connection step to enhance stability, while the contrast completion step uses L1 loss, perceptual loss14, and style loss15 to achieve lower reconstruction error and higher visual quality.
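The mask generation described under Data Representation and Pre-processing can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the square low-pass window (`keep_fraction`), the threshold at half the maximum intensity, the 3x3 structuring element, and all function names are assumptions, since the abstract does not specify them.

```python
import numpy as np

def low_pass(image, keep_fraction=0.1):
    """Keep a centered square of low spatial frequencies (assumed filter shape)."""
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    rows, cols = image.shape
    half_r = max(1, int(rows * keep_fraction / 2))
    half_c = max(1, int(cols * keep_fraction / 2))
    cr, cc = rows // 2, cols // 2
    window = np.zeros(image.shape)
    window[cr - half_r:cr + half_r + 1, cc - half_c:cc + half_c + 1] = 1.0
    return np.real(np.fft.ifft2(np.fft.ifftshift(spectrum * window)))

def dilate(mask, iterations=1):
    """Binary dilation with a 3x3 square structuring element."""
    out = mask.astype(bool)
    for _ in range(iterations):
        padded = np.pad(out, 1, mode="constant", constant_values=False)
        grown = np.zeros_like(out)
        for dr in range(3):
            for dc in range(3):
                grown |= padded[dr:dr + out.shape[0], dc:dc + out.shape[1]]
        out = grown
    return out

def tag_mask(tagged, keep_fraction=0.1, dilation_iterations=1):
    """Rough tag mask: binarized low-pass image minus binarized original, then dilated."""
    threshold = 0.5 * tagged.max()  # assumed binarization threshold
    low_bin = low_pass(tagged, keep_fraction) > threshold
    tagged_bin = tagged > threshold
    # Pixels that are bright after low-pass filtering but dark in the original
    # are treated as tag lines.
    rough = low_bin & ~tagged_bin
    return dilate(rough, dilation_iterations)

# Toy example: a uniform bright image with dark vertical tag lines every 8 pixels.
image = np.ones((64, 64))
image[:, 4::8] = 0.0
mask = tag_mask(image)
```

On real images the threshold and the amount of dilation would need tuning so that the mask covers the tags without swallowing too much untagged tissue.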

Data Description and Training Procedure: Secondary use of data acquired for clinical reasons was approved by the IRB. Eight scans were acquired using a 1.5T Philips Achieva and a General Electric HDxT scanner. Each scan contained both tagged and reference images, with 20 frames per cardiac cycle. Because the tagged images (spoiled gradient echo) and the reference images (steady-state free precession) differ in contrast, we linearly registered the tags onto the reference images to mimic tagged images, so that the reference images could serve as ground truth for evaluating tag removal performance. To enable edge training, the Canny detector16 was applied to all images to generate contour maps. The training and test sets were split in a 4:1 ratio. All networks were implemented for 2D images and trained frame by frame with the ADAM optimizer on two NVIDIA Tesla P100 GPUs.
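A 4:1 split like the one mentioned above could be produced along these lines. This is only a sketch: the abstract does not say whether the split was made per frame or per scan, so the frame-level split, the total of 160 items (8 scans with 20 frames each), the fixed seed, and the helper name `split_indices` are all assumptions for illustration.

```python
import numpy as np

def split_indices(n_items, train_parts=4, test_parts=1, seed=0):
    """Shuffle item indices and split them train:test = train_parts:test_parts."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_items)
    n_train = n_items * train_parts // (train_parts + test_parts)
    return np.sort(order[:n_train]), np.sort(order[n_train:])

# Hypothetical dataset size: 8 scans x 20 frames, split at the frame level.
train_idx, test_idx = split_indices(160)
```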

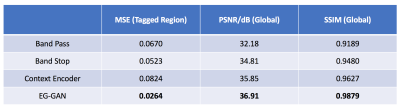

Evaluation: We compared our method with band-pass filtering3, band-stop filtering4, and the context encoder6. We evaluated performance on the test set by comparing the tag-removed images with the reference images. To measure the tag removal error, we calculated the mean square error (MSE) within the tagged region. Because modifications in the frequency domain degrade image quality globally, we also calculated the peak signal-to-noise ratio (PSNR) and the structural similarity index (SSIM) to evaluate global perceptual quality.
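The two simpler metrics can be written down directly. The sketch below assumes images normalized to the range [0, 1] (`data_range=1.0`) and a boolean tag mask; the function names are illustrative and not taken from the authors' code.

```python
import numpy as np

def masked_mse(pred, ref, mask):
    """Mean square error restricted to the tagged region (mask == True)."""
    diff = (pred - ref)[mask]
    return float(np.mean(diff ** 2))

def psnr(pred, ref, data_range=1.0):
    """Peak signal-to-noise ratio in dB over the whole image."""
    mse = np.mean((pred - ref) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))

# Toy example: a 4x4 reference with a single wrong pixel inside the mask.
ref = np.zeros((4, 4))
pred = ref.copy()
pred[0, 0] = 0.5
mask = np.zeros((4, 4), dtype=bool)
mask[0, 0] = True
mask[1, 1] = True

region_error = masked_mse(pred, ref, mask)
global_psnr = psnr(pred, ref)
```

SSIM is left out of the sketch because it involves local windowed statistics; a library implementation such as scikit-image's `structural_similarity` is a common choice.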

Results and Discussion

Fig. 4 shows the tag removal results of the four methods. Band-pass and band-stop filtering blur the images globally because they modify high-frequency information, while the GAN-based methods preserve more anatomical detail by recovering only the tagged region. However, a GAN with fewer constraints may be more vulnerable to model collapse and may lose image contrast consistency, which leaves residual tagging grids in the prediction; the edge constraint effectively alleviates this residual tagging. The quantitative results in table 1 indicate that EG-GAN outperforms the conventional methods. More specifically, both filter-based methods yield lower PSNR and SSIM than the context encoder, owing to the global degradation of the image, yet lower MSE in the tagged region. Guided by edge information, the proposed method showed 50%-60% lower MSE and achieved higher PSNR and SSIM than the filter-based methods, which supports its effectiveness in recovering tag-corrupted images.

Acknowledgements

Computation for the work described in this abstract was supported by the University of Southern California’s Center for High-Performance Computing (hpcc.usc.edu).

References

1. Zerhouni EA, Parish DM, Rogers WJ, Yang A, Shapiro EP. Human heart: Tagging with MR imaging--a method for noninvasive assessment of myocardial motion. Radiology 1988;169(1):59-63.

2. Makram AW, Rushdi MA, Khalifa AM, El-Wakad MT. Tag removal in cardiac tagged MRI images using coupled dictionary learning. IEEE EMBC. 2015; 7921-7924.

3. Osman NF, Kerwin WS, McVeigh ER, Prince JL. Cardiac motion tracking using CINE harmonic phase (HARP) magnetic resonance imaging. Magnetic Resonance in Medicine. 1999;42(6):1048-60.

4. Qian Z, Huang R, Metaxas D, Axel L. A novel tag removal technique for tagged cardiac MRI and its applications. IEEE ISBI. 2007; 364-367.

5. Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y. Generative adversarial nets. Advances in Neural Information Processing Systems. 2014. 2672-2680.

6. Pathak D, Krahenbuhl P, Donahue J, Darrell T, Efros AA. Context encoders: Feature learning by inpainting. CVPR. 2016. 2536-2544.

7. Iizuka S, Simo-Serra E, Ishikawa H. Globally and locally consistent image completion. ACM Transactions on Graphics (ToG) 2017;36(4):107.

8. Bertalmio M, Sapiro G, Caselles V, Ballester C. Image inpainting. Annual Conference on Computer Graphics and Interactive Techniques. 2000. 417-424.

9. Nazeri K, Ng E, Joseph T, Qureshi F, Ebrahimi M. Edgeconnect: Generative image inpainting with adversarial edge learning. arXiv preprint arXiv:1901.00212 2019.

10. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. CVPR. 2016. 770-778.

11. Miyato T, Kataoka T, Koyama M, Yoshida Y. Spectral normalization for generative adversarial networks. arXiv preprint arXiv:1802.05957 2018.

12. Ulyanov D, Vedaldi A, Lempitsky V. Improved texture networks: Maximizing quality and diversity in feed-forward stylization and texture synthesis. CVPR. 2017. 6924-6932.

13. Wang T, Liu M, Zhu J, Tao A, Kautz J, Catanzaro B. High-resolution image synthesis and semantic manipulation with conditional gans. CVPR. 2018. 8798-8807.

14. Johnson J, Alahi A, Fei-Fei L. Perceptual losses for real-time style transfer and super-resolution. ECCV. 2016. 694-711.

15. Sajjadi MS, Scholkopf B, Hirsch M. Enhancenet: Single image super-resolution through automated texture synthesis. ICCV. 2017. 4491-4500.

16. Canny J. A computational approach to edge detection. IEEE Trans Pattern Anal Mach Intell 1986;8(6):679-98.

Figures