2229

Verification of fully automated deep learning-based 4D segmentation of the thoracic aorta from 4D flow MRI

¹Northwestern University, Chicago, IL, United States; ²Lurie Children's Hospital of Chicago, Chicago, IL, United States; ³University of Colorado, Anschutz Medical Campus, Aurora, CO, United States

Synopsis

A convolutional neural network (CNN) originally implemented for time-averaged 3D segmentation of the thoracic aorta from 4D flow MRI was retrained to generate time-resolved segmentations without any additional manually generated reference data. To validate this approach, the automatically generated time-resolved segmentations were compared against two 2D cine acquisitions in 20 patients. Across all patients and timepoints, the CNN achieved average Dice scores of 0.87±0.04 and 0.88±0.04 for the candy-cane and cross-sectional views of the aorta, respectively. Automated time-resolved segmentation of 4D flow MRI data will enable the calculation of metrics that are sensitive to wall location, such as wall shear stress and aortic compliance.

Introduction

4D flow MRI provides time-resolved information on 3D flow dynamics throughout the cardiac cycle and has been shown to be a promising tool for the assessment of aortic pathologies such as aortic valve disease and coarctation. However, analysis of aortic hemodynamics is most often based on a time-averaged 3D segmentation of the aortic lumen. Manual 4D (3D+time) segmentation that accounts for aortic motion during the cardiac cycle (up to 2 cm displacement of the aortic root, and cyclic changes in aortic diameter and area) is difficult to obtain in a clinically feasible timeframe1. Accounting for aortic motion is particularly important when computing imaging biomarkers that are sensitive to wall location, such as wall shear stress2,3 and oscillatory shear index4. Wall motion could also provide valuable information about aortic distensibility5. To speed up segmentation and reduce interobserver variability, we recently developed a convolutional neural network (CNN)-based segmentation algorithm that generated expert-level time-averaged (3D) segmentations of the aorta in 1 second6,7. Here, we use this previously developed CNN to generate 4D aortic segmentations and verify their accuracy against 2D cine MRI as the reference standard.

Methods

For CNN training and validation, 669 adult subjects (465 M/204 F, age 51±15 years) who underwent 4D flow MRI of the thoracic aorta (1.7-3.6 mm³, 33-43 ms, venc 150-500 cm/s; 561 at 1.5T, 108 at 3T) were retrospectively included (359 training/310 validation). The cohort comprised 354 patients with bicuspid aortic valve (BAV), 219 patients with ascending aortic aneurysm, and 96 controls. All data were manually preprocessed to correct for eddy currents, denoise, and correct velocity aliasing before manual time-averaged segmentation (Mimics, Materialise) of a calculated phase contrast MR angiogram (PCMRA).

A CNN (3D U-Net8 with DenseNet9 layers replacing the convolutional layers), originally developed to generate 3D segmentations of the thoracic aorta from PCMRA, was retrained for 4D segmentation. Because no manually performed time-resolved reference segmentations were available, we used a weakly supervised approach10 (training against an indirect, substitute ground truth): the CNN was retrained to reproduce the time-averaged segmentations from time-averaged 4D flow magnitude data. Magnitude data were used because their contrast is relatively consistent throughout the cardiac cycle compared to a time-resolved PCMRA. The validation data were used to assess the model's performance for time-averaged segmentation. To generate a 4D segmentation, the CNN is then run on each timeframe of the magnitude data.
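The training/inference split described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: volumes are plain nested Python lists indexed `[z][y][x]`, training itself is not shown, and `threshold_model` is a hypothetical stand-in for the trained CNN. `time_average` produces the kind of input the model is retrained on, while `segment_4d` applies the same 3D model independently to every magnitude timeframe, which only works if per-frame contrast resembles the time-averaged contrast.

```python
from typing import Callable, List, Sequence

# Assumed representation: a volume is a nested list [z][y][x] of floats; a mask holds 0/1 ints.
Volume = List[List[List[float]]]
Mask = List[List[List[int]]]


def time_average(frames: Sequence[Volume]) -> Volume:
    """Voxel-wise mean over all cardiac timeframes (the time-averaged training input)."""
    n = len(frames)
    nz, ny, nx = len(frames[0]), len(frames[0][0]), len(frames[0][0][0])
    return [[[sum(f[z][y][x] for f in frames) / n for x in range(nx)]
             for y in range(ny)] for z in range(nz)]


def segment_4d(frames: Sequence[Volume], segment_3d: Callable[[Volume], Mask]) -> List[Mask]:
    """Run a 3D segmentation model on each timeframe independently to build a 4D mask."""
    return [segment_3d(frame) for frame in frames]


def threshold_model(volume: Volume, level: float = 0.5) -> Mask:
    """Hypothetical stand-in for the trained CNN: a simple intensity threshold."""
    return [[[1 if v > level else 0 for v in row] for row in plane] for plane in volume]


# Usage: two toy 1x2x2 magnitude timeframes.
frames = [[[[0.0, 1.0], [0.25, 0.75]]], [[[0.5, 1.0], [0.25, 0.25]]]]
averaged = time_average(frames)                  # → [[[0.25, 1.0], [0.25, 0.5]]]
masks_4d = segment_4d(frames, threshold_model)   # → [[[[0, 1], [0, 1]]], [[[0, 1], [0, 0]]]]
```

Applying the model frame by frame keeps each timepoint independent; nothing in this sketch enforces temporal smoothness between frames.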

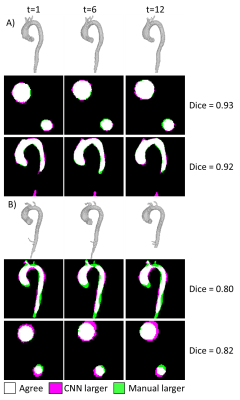

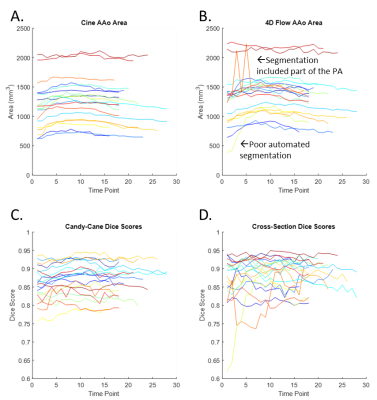

For testing of the time-resolved segmentation, an additional 20 patients with two 2D cine views (Figure 1B) were retrospectively included: 1) a cross-section perpendicular to the aorta at the level of the right pulmonary artery, including the ascending and descending aorta, and 2) a “candy-cane” view including the ascending aorta, arch, and descending aorta. Subject and scan parameters are shown in Table 1. Cine scans were acquired at end-expiration to reduce registration errors with the 4D flow scan, which was navigator gated to end-expiration. The analysis workflow is shown in Figure 1: A) a 4D segmentation was generated by inputting the 4D flow magnitude data into the CNN; B) the 2D cine scans were segmented at each timepoint using the segmentation editor in Fiji11; C) the 4D flow segmentation was interpolated onto the 2D cine planes for comparison with the cine segmentations. The 2D cine data were interpolated to the temporal resolution of the 4D flow data, and segmentations were compared using the Dice score. The area of the ascending aorta in the cross-sectional view was also compared using Bland-Altman analysis.
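A sketch of the per-timepoint comparison follows, assuming the 4D flow mask has already been resampled onto the cine plane (step C) so that both masks are 2D binary arrays on the same grid. The Dice score is 2|A ∩ B| / (|A| + |B|). The abstract does not state how the cine data were temporally interpolated; this example assumes linear interpolation of the binary cine masks between neighboring frames, treats the cardiac cycle as periodic, and re-binarizes at 0.5. All function names (`dice`, `interp_mask`, `per_frame_dice`) are hypothetical.

```python
from typing import List, Sequence

Mask2D = List[List[int]]  # binary mask on the cine-plane grid, 1 = aortic lumen


def dice(a: Mask2D, b: Mask2D) -> float:
    """Dice = 2|A ∩ B| / (|A| + |B|); defined as 1.0 when both masks are empty."""
    overlap = sum(1 for ra, rb in zip(a, b) for x, y in zip(ra, rb) if x and y)
    total = sum(map(sum, a)) + sum(map(sum, b))
    return 1.0 if total == 0 else 2.0 * overlap / total


def interp_mask(times: Sequence[float], masks: Sequence[Mask2D],
                t: float, period: float) -> Mask2D:
    """Linearly interpolate binary cine masks to time t (periodic over one cardiac
    cycle), then re-binarize at 0.5. Assumes `times` is sorted and lies in [0, period)."""
    n = len(times)
    t = t % period
    # Last cine frame at or before t; if t precedes the first frame, wrap to the final frame.
    i = max((k for k in range(n) if times[k] <= t), default=n - 1)
    j = (i + 1) % n
    t0 = times[i]
    t1 = times[j] if j > i else times[j] + period  # next frame, possibly in the next cycle
    if t < t0:  # t falls in the wrap-around interval before the first frame
        t += period
    w = (t - t0) / (t1 - t0)
    return [[1 if (1 - w) * x + w * y >= 0.5 else 0 for x, y in zip(ra, rb)]
            for ra, rb in zip(masks[i], masks[j])]


def per_frame_dice(cine_times: Sequence[float], cine_masks: Sequence[Mask2D],
                   flow_times: Sequence[float], flow_masks: Sequence[Mask2D],
                   period: float) -> List[float]:
    """Dice between the interpolated cine mask and the 4D flow mask at each 4D flow timepoint."""
    return [dice(interp_mask(cine_times, cine_masks, t, period), m)
            for t, m in zip(flow_times, flow_masks)]


# Usage with toy 1x2 masks and a 400 ms cycle (illustrative values, not study data).
cine_times = [0.0, 100.0, 200.0, 300.0]
cine_masks = [[[1, 0]], [[1, 1]], [[0, 1]], [[0, 0]]]
scores = per_frame_dice(cine_times, cine_masks, [25.0, 350.0],
                        [[[1, 0]], [[0, 1]]], period=400.0)  # → [1.0, 0.0]
```

Interpolating the cine masks onto the 4D flow timepoints, rather than the reverse, matches the direction stated in the text; averaging `scores` over timepoints and subjects gives summary values of the kind reported in the Results.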

Results

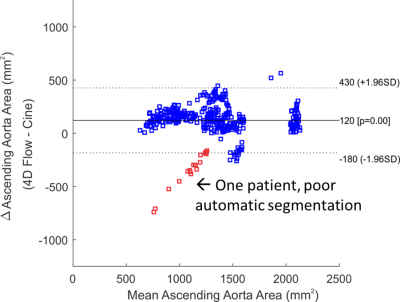

4D segmentation using the CNN took 196±56 seconds per subject, with average Dice scores of 0.87±0.04 and 0.88±0.04 for the candy-cane and cross-sectional views, respectively. Figure 2 shows two example subjects, with the automated 3D segmentation from the 4D flow data and a comparison for both cine views at three timepoints in the cardiac cycle. Figure 2A shows a subject in whom the 4D flow and cine segmentations agree well, while Figure 2B shows one of the subjects with the poorest agreement, likely reflecting residual misalignment between the scans. Figures 3A and 3B show the ascending aorta cross-sectional area from the cine and 4D flow masks; the 4D flow masks generally overestimated the area relative to the cine scans. Figures 3C and 3D show the Dice score for each subject at each timepoint in the candy-cane and cross-sectional views, with stable performance in most patients. Figure 4 shows a Bland-Altman plot of the agreement in ascending aorta area between the 4D flow and cine segmentations at each timepoint, with good agreement for most patients.

Discussion
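The Bland-Altman comparison of ascending aorta area can be computed as sketched below, using the conventional definition: the bias is the mean of the paired differences (here 4D flow minus cine, so a positive bias corresponds to overestimation by the 4D flow masks) and the 95% limits of agreement are bias ± 1.96 SD of the differences. This is an illustrative sketch; the function and field names are hypothetical, and the example values are made up rather than study data.

```python
import statistics
from typing import List, NamedTuple, Sequence


class BlandAltman(NamedTuple):
    means: List[float]        # x-axis: mean of each measurement pair
    differences: List[float]  # y-axis: 4D flow area minus cine area
    bias: float               # mean difference
    lower_loa: float          # bias - 1.96 * SD
    upper_loa: float          # bias + 1.96 * SD


def bland_altman(flow_areas: Sequence[float], cine_areas: Sequence[float]) -> BlandAltman:
    """Bland-Altman statistics for paired area measurements given in the same units."""
    if len(flow_areas) != len(cine_areas) or len(flow_areas) < 2:
        raise ValueError("need at least two paired measurements of equal length")
    diffs = [f - c for f, c in zip(flow_areas, cine_areas)]
    means = [(f + c) / 2 for f, c in zip(flow_areas, cine_areas)]
    bias = statistics.fmean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    return BlandAltman(means, diffs, bias, bias - 1.96 * sd, bias + 1.96 * sd)


# Usage: four hypothetical (subject, timepoint) area pairs.
ba = bland_altman([5.0, 6.0, 7.0, 8.0], [4.0, 6.0, 6.0, 6.0])
# ba.bias → 1.0; ba.differences → [1.0, 0.0, 1.0, 2.0]
```

Each point on such a plot corresponds to one subject and timepoint; the sketch treats all pairs as independent and does not account for repeated timepoints within a subject.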

We show that, without any additional manual training data, a deep learning-based network designed for time-averaged segmentation can be used to perform time-resolved segmentation. Cine scans served as the reference standard, but registration to the 4D flow data remains challenging because 2D slices must be registered to 3D volumes and the contrast differs between the sequences. Acquiring the 2D cine data at end-expiration improved spatial co-registration, but some datasets still required additional manual rigid registration because of bulk patient motion between the scans or imperfect breath-holding.

Conclusion

Fully automated 4D segmentation of the thoracic aorta will enable robust quantification of hemodynamic and distensibility metrics that rely on accurate knowledge of wall motion. Future work could compare the segmentations against respiratory-navigated 3D cine imaging.

Acknowledgements

NIH grants R01HL115828, R01HL133504, T32GM008152, F30HL145995

References

1. Wang Y, Riederer SJ, Ehman RL. Respiratory motion of the heart: kinematics and the implications for the spatial resolution in coronary imaging. Magn Reson Med. 1995;33:713-719.

2. Potters WV, van Ooij P, Marquering H, vanBavel E, Nederveen AJ. Volumetric arterial wall shear stress calculation based on cine phase contrast MRI. J Magn Reson Imaging. 2015;41(2):505-516.

3. van Ooij P, Potters WV, Nederveen AJ, et al. A methodology to detect abnormal relative wall shear stress on the full surface of the thoracic aorta using four-dimensional flow MRI. Magn Reson Med. 2015;73(3):1216-1227.

4. Callaghan FM, Grieve SM. Normal patterns of thoracic aortic wall shear stress measured using four-dimensional flow MRI in a large population. Am J Physiol Heart Circ Physiol. 2018;315(5):H1174-H1181.

5. Harloff A, Mirzaee H, Lodemann T, et al. Determination of aortic stiffness using 4D flow cardiovascular magnetic resonance - a population-based study. J Cardiovasc Magn Reson. 2018;20(1):43.

6. Berhane H, Scott M, Elbaz M, et al. Artificial intelligence-based fully automated 3D segmentation of the aorta from 4D flow MRI. SMRA 2019. 2019.

7. Berhane H, Scott M, Robinson JD, Rigsby CK, Markl M. 3D U-Net for automated segmentation of the thoracic aorta in 4D-flow derived 3D PC-MRA. ISMRM 2019. 2019.

8. Çiçek Ö, Abdulkadir A, Lienkamp SS, Brox T, Ronneberger O. 3D U-Net: learning dense volumetric segmentation from sparse annotation. In: Medical Image Computing and Computer-Assisted Intervention (MICCAI 2016). Cham: Springer; 2016.

9. Huang G, Liu Z, van der Maaten L, Weinberger KQ. Densely connected convolutional networks. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2017.

10. Ibrahim MS, Vahdat A, Ranjbar M, Macready WG. Weakly supervised semantic image segmentation with self-correcting networks. arXiv:1811.07073. 2018.

11. Schindelin J, Arganda-Carreras I, Frise E, et al. Fiji: an open-source platform for biological-image analysis. Nat Methods. 2012;9(7):676-682.

Figures