1896

Rapid brain segmentation in T1w MRI with fully convolutional networks: development and comparison of different network constructions

1 Imaging Physics, University of Texas MD Anderson Cancer Center, Houston, TX, United States
2 Neuroradiology, University of Texas MD Anderson Cancer Center, Houston, TX, United States
3 Cancer Systems Imaging, University of Texas MD Anderson Cancer Center, Houston, TX, United States

Synopsis

In patients with neurofibromatosis type 1 (NF1), neurocognitive function is often associated with structural differences in the brain, and studies have shown that NF1 is associated with larger subcortical volumes and greater cortical thickness in certain brain regions. Tools that enable rapid whole-brain segmentation in standard-of-care T1w MRI would make routine monitoring of NF1 patients possible. Modern machine learning techniques, including fully convolutional networks (FCNs), have demonstrated the ability to perform segmentation tasks rapidly across a range of applications. In this work, we investigate the performance of different FCNs for rapid whole-brain segmentation in pediatric T1w brain MRI.

Introduction

In patients with neurofibromatosis type 1 (NF1), neurocognitive function is often associated with structural differences in the brain, and studies have shown that NF1 is associated with larger subcortical volumes and greater cortical thickness in certain brain regions [1]. Tools that enable whole-brain segmentation in standard-of-care T1w MRI would make routine monitoring of NF1 patients possible. Existing methods can perform whole-brain segmentation in T1w MRI, but they are currently time- and resource-intensive. Modern machine learning techniques, including fully convolutional networks (FCNs), have demonstrated the ability to perform segmentation tasks rapidly across a range of applications. The purpose of this work was to investigate the performance of different FCNs for rapid, automated whole-brain segmentation in pediatric T1w brain MRI.

Methods

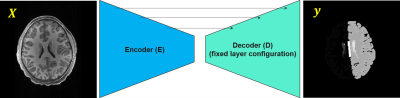

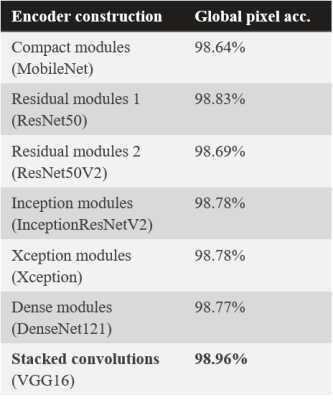

Data used in the preparation of this article were obtained from the Adolescent Brain Cognitive Development (ABCD) Study (https://abcdstudy.org), held in the NIMH Data Archive (NDA). This is a multisite, longitudinal study designed to recruit more than 10,000 children aged 9–10 years and follow them over ten years into early adulthood. A total of 107 pediatric patients from this archive were scanned with a 3D spoiled gradient-echo MR sequence. Typical scan parameters were TR/TE = 7.0/2.9 ms, flip angle = 8°, FOV = 26 × 21 cm, and voxel size = 1 × 1 × 1 mm. Brain segmentation masks for each patient volume were computed using FreeSurfer [2]. A total of 43 tissue classes were segmented, plus one background class, for 44 classes in total. Each slice of the patient image volumes was normalized to the range [0, 1]. The patients were randomly split into a training/validation cohort of 82 and a testing cohort of 25.

Seven FCN architectures were constructed (Figure 1). Each consisted of a convolutional encoder and a convolutional decoder, with skip connections between the encoder and decoder at equivalent resolution scales. The seven encoders were built from compact modules (MobileNet), residual modules (ResNet50 and ResNet50V2), inception modules (InceptionResNetV2), Xception modules (Xception), dense modules (DenseNet121), and stacked convolutions (VGG16). The decoder was identical across all models and was similar to that of the original U-Net architecture [3].
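The preprocessing described above (per-slice normalization to [0, 1], a set of contiguous class indices, and a random split at the patient level) can be sketched in NumPy as follows. This is a minimal illustration, not the authors' pipeline: it assumes slices are stacked along axis 0, that "normalized to [0, 1]" means per-slice min-max scaling, and that FreeSurfer's non-contiguous label IDs are remapped onto indices 0..43 with 0 as background. The function names, the `label_ids` argument, and the seed are hypothetical.

```python
import numpy as np


def normalize_slices(volume: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Min-max normalize each 2D slice of a 3D volume to [0, 1].

    Assumes slices are stacked along axis 0, i.e. shape (n_slices, H, W).
    """
    vol = volume.astype(np.float32)
    # Per-slice min and max with shape (n_slices, 1, 1) so they broadcast.
    lo = vol.min(axis=(1, 2), keepdims=True)
    hi = vol.max(axis=(1, 2), keepdims=True)
    # eps avoids division by zero on constant (e.g. empty) slices, which map to 0.
    return (vol - lo) / np.maximum(hi - lo, eps)


def remap_labels(labels: np.ndarray, label_ids: list[int]) -> np.ndarray:
    """Map non-contiguous segmentation label IDs to class indices 0..len(label_ids).

    Class 0 is background; label_ids[k] becomes class k + 1. Any voxel whose
    value is not in label_ids is assigned to background.
    """
    out = np.zeros(labels.shape, dtype=np.int64)
    for k, lab in enumerate(label_ids):
        out[labels == lab] = k + 1
    return out


def split_subjects(subject_ids: list[str], n_test: int = 25, seed: int = 0):
    """Randomly split subjects (not slices) into train/validation and test lists."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(subject_ids))
    test = [subject_ids[i] for i in order[:n_test]]
    train_val = [subject_ids[i] for i in order[n_test:]]
    return train_val, test


if __name__ == "__main__":
    ids = [f"subject-{i:03d}" for i in range(107)]  # hypothetical IDs
    train_val, test = split_subjects(ids)
    print(len(train_val), len(test))  # 82 25
```

Splitting by patient rather than by slice keeps every slice of a given volume in a single cohort, so test-set scores are not inflated by near-identical neighboring slices seen during training.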

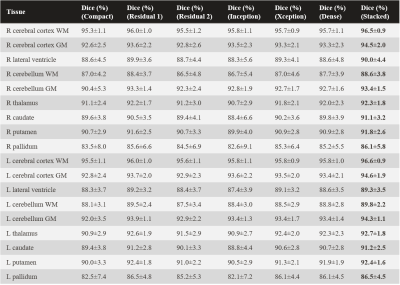

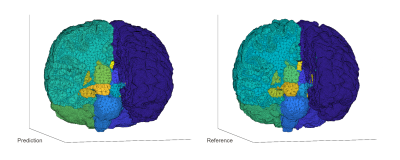

Eighteen brain structures of primary interest were identified: the right (R) and left (L) cerebral cortex white matter (WM), R and L cerebral cortex grey matter (GM), R and L lateral ventricle, R and L cerebellum WM, R and L cerebellum GM, R and L thalamus, R and L caudate, R and L putamen, and R and L pallidum. The predicted segmentation masks were evaluated against the FreeSurfer masks using pixel accuracy, the Dice similarity coefficient, and intersection over union (IoU). For visualization, an open-source mesh generation package [4] was used to create 3D volumetric meshes of the ground-truth and predicted brain segmentation masks.
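The three evaluation metrics can be sketched in NumPy on integer label maps: pixel accuracy is the fraction of matching voxels, and for each class c with predicted mask P and reference mask T, Dice = 2|P∩T| / (|P| + |T|) and IoU = |P∩T| / |P∪T|. This is a minimal sketch under stated assumptions: the abstract does not say whether metrics were computed per slice or per volume, or whether background voxels count toward pixel accuracy. This version includes background, which typically raises pixel accuracy because background is usually the largest class. The function name is hypothetical.

```python
import numpy as np


def segmentation_metrics(pred: np.ndarray, truth: np.ndarray, n_classes: int):
    """Pixel accuracy plus per-class Dice and IoU for integer label maps.

    pred and truth hold class indices in [0, n_classes) with identical shapes
    (a 2D slice or a full 3D volume). Classes absent from both maps get NaN.
    """
    if pred.shape != truth.shape:
        raise ValueError("pred and truth must have the same shape")
    # Fraction of voxels (background included) whose labels agree.
    pixel_acc = float(np.mean(pred == truth))
    dice = np.full(n_classes, np.nan)
    iou = np.full(n_classes, np.nan)
    for c in range(n_classes):
        p = pred == c
        t = truth == c
        inter = np.logical_and(p, t).sum()
        union = np.logical_or(p, t).sum()
        if union == 0:
            continue  # class absent from both masks: metric undefined
        dice[c] = 2.0 * inter / (p.sum() + t.sum())
        iou[c] = inter / union
    return pixel_acc, dice, iou
```

Per-structure summaries such as mean ± SD over test patients could then be taken with `np.nanmean` and `np.nanstd` over the stacked per-patient Dice arrays; that aggregation is an assumption, not something the abstract specifies.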

All models were constructed and trained with a deep learning application engine [5] on an NVIDIA DGX-1 workstation. Mini-batches of size 22 were distributed across two of the workstation's V100 GPUs during training. All models were trained using cross-entropy loss and the Adam optimizer with a learning rate (LR) of 0.0001. The LR was decayed by 20% whenever the validation loss failed to improve for five epochs, and training was terminated after 11 epochs without improvement in the validation loss.
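The LR schedule and stopping rule can be replayed in plain Python on a recorded validation-loss history. This sketch assumes that "decayed by 20%" means multiplying the LR by 0.8, that the five- and eleven-epoch counts refer to consecutive epochs without a new best validation loss, and that the LR wait counter restarts after each decay; the function name is hypothetical.

```python
def plateau_schedule(val_losses, lr0=1e-4, factor=0.8, lr_patience=5, stop_patience=11):
    """Replay a validation-loss history through plateau LR decay and early stopping.

    Returns (lrs, stop_epoch): the LR used in each epoch that was run and the
    0-based epoch after which training stops, or None if it never triggers.
    """
    lr = lr0
    best = float("inf")
    since_best = 0  # epochs since the last new best loss (early stopping)
    lr_wait = 0     # epochs since the last new best loss or LR decay
    lrs = []
    for epoch, loss in enumerate(val_losses):
        lrs.append(lr)
        if loss < best:
            best, since_best, lr_wait = loss, 0, 0
        else:
            since_best += 1
            lr_wait += 1
        if since_best >= stop_patience:
            return lrs, epoch
        if lr_wait >= lr_patience:
            lr *= factor  # "decayed by 20%" read as lr <- 0.8 * lr
            lr_wait = 0
    return lrs, None


if __name__ == "__main__":
    # One improvement, then a flat plateau: the LR decays after epochs 5 and 10,
    # and training stops at epoch 11 (the 11th epoch without improvement).
    lrs, stop = plateau_schedule([1.0] + [2.0] * 20)
    print(stop, len(lrs))  # 11 12
```

In Keras, `tf.keras.callbacks.ReduceLROnPlateau(monitor="val_loss", factor=0.8, patience=5)` and `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=11)` implement closely related rules (their default `min_delta` thresholds differ slightly); the abstract does not state how the engine [5] configures these steps.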

Results

Among all models, the stacked-convolution (VGG16) encoder produced the highest overall pixel accuracy across brain structures, 98.96% (Table 1). Stacked convolutions also yielded the highest structure-wise Dice similarity coefficients (Table 2). Of the 18 brain structures analyzed, 12 predicted segmentation masks had a mean Dice similarity of ≥90.0% with the FreeSurfer segmentations. The lowest Dice similarity was observed for the R pallidum (86.1% ± 5.8%) and the highest for the L cerebral cortex WM (96.6% ± 0.9%). Whole-volume predictions took <5 s on an NVIDIA V100 GPU and <1 min on an NVIDIA GTX 1050 GPU.

Discussion and conclusion

Software tools exist that enable accurate whole-brain segmentation in T1w MRI. However, these techniques are currently resource-intensive and time-consuming, which limits their feasibility for routine use in clinical workflows. We have investigated FCNs for rapid whole-brain segmentation in pediatric T1w MRI. This approach produces accurate segmentation masks in a fraction of the time required by currently available open-source software. It may therefore enable routine clinical use in multiple applications, including NF1 evaluation and image quality evaluation in T1w MRI. Future work will focus on incorporating adult T1w MRIs into the model to enable whole-brain segmentation across a range of ages and brain morphologies.

References

[1] Barkovich MJ, Tan CH, Nillo RM, et al. “Abnormal morphology of select cortical and subcortical regions in neurofibromatosis type 1,” Radiology, 2018; 289(2): 499-508.

[2] Fischl B. “FreeSurfer,” NeuroImage, 2012; 62(2): 774-781.

[3] Ronneberger O, Fischer P, Brox T. “U-Net: Convolutional networks for biomedical image segmentation,” arXiv:1505.04597, 2015.

[4] Fang Q, Boas D. “Tetrahedral mesh generation from volumetric binary and gray-scale images,” Proc IEEE International Symposium on Biomedical Imaging, 2009; 1142-1145.

[5] Sanders JW, Fletcher JR, Frank SJ, et al. “Deep learning application engine (DLAE): development and integration of deep learning algorithms in medical imaging,” SoftwareX, 2019; 10:100347.
