1882

A Machine Learning Based Method to Distinguish between Tumor and Non-Tumor within FLAIR Non-Enhancing Regions of Brain Lesions

Robert Wujek1,2, Melissa Prah3, Mona Al-Gizawiy3, and Kathleen Schmainda3

1Graduate School, Medical College of Wisconsin, Milwaukee, WI, United States, 2Graduate School, Marquette University, Milwaukee, WI, United States, 3Biophysics, Medical College of Wisconsin, Milwaukee, WI, United States

Synopsis

There is a clinical need for a biomarker that can distinguish between infiltrative tumor and non-tumor within FLAIR non-enhancing brain lesions. In this study, we used multiparametric MRI and biopsy-based ground truth from FLAIR non-enhancing regions to train a 3D convolutional neural network to address this need.

Introduction

Glioblastoma is an especially aggressive form of malignant brain tumor with a median overall survival of only 15 months[1]. One factor contributing to this poor prognosis is the inability to determine true tumor extent, because treatment can alter how a lesion appears on imaging. For example, bevacizumab reduces blood-brain barrier permeability[2]. This reduces T1-weighted contrast enhancement (T1+C) within regions of FLAIR-hyperintense edema, or non-enhancing lesion (NEL). The resulting pseudoresponse within NEL creates a clinical need for a biomarker that can distinguish tumor from edema. Given the recent success of machine learning in MRI segmentation and reconstruction tasks, we propose a machine learning based approach to this problem. We hypothesize that a convolutional neural network (CNN) trained on multiparametric MR inputs as predictive features can classify tissue as tumor vs. non-tumor on a voxel-by-voxel basis.

Methods

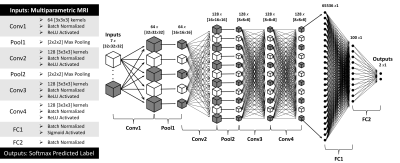

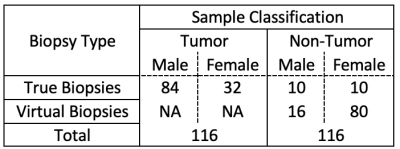
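The Dataset paragraph below states that flipping and rotation increased the training set eightfold. One natural way to get exactly 8 variants is to take the 4 in-plane 90° rotations of a patch, each with and without a flip. The sketch below assumes that scheme. It also assumes patches shaped (channels, z, y, x) with a square in-plane extent and rotations in the (y, x) plane. The abstract specifies none of these details. The function name `augment_8x` and the 7-channel, 16³-voxel example patch are hypothetical.

```python
import numpy as np


def augment_8x(patch: np.ndarray) -> list:
    """Return 8 flip/rotation variants of one multi-channel 3D patch.

    Assumed layout: (channels, z, y, x) with y and x of equal size, so that
    90-degree rotations in the (y, x) plane preserve the patch shape.
    """
    variants = []
    for base in (patch, np.flip(patch, axis=-1)):       # original and left-right flip
        for k in range(4):                               # 0, 90, 180, 270 degrees
            variants.append(np.rot90(base, k=k, axes=(-2, -1)))
    return variants


# Hypothetical patch: 7 channels (e.g. T1, T1+C, T2, FLAIR, ADC, nRCBV, nRCBF), 16^3 voxels.
rng = np.random.default_rng(0)
patch = rng.standard_normal((7, 16, 16, 16))
augmented = augment_8x(patch)
print(len(augmented))  # 8

# Sample-count bookkeeping from TABLE 1 and the reported split.
n_total = 116 + 116            # tumor + non-tumor samples
n_test = 68
n_train = n_total - n_test     # unaugmented training patches
print(n_train, n_train * 8)    # 164 1312
```

The abstract gives 116 + 116 = 232 samples, and 68 are held out for testing. That leaves 164 training patches, and 164 × 8 = 1312, which matches the reported training-set size. Augmentation is applied only to training patches, never to the test set.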

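The Model and Training paragraph below specifies two components. The loss is softmax cross entropy with an L2 penalty (lambda=1e-4). The optimizer is RMSProp (lr=1e-3, decay=0.9, momentum=0.9). A minimal NumPy sketch of both follows, and the CNN itself is omitted. Several details are assumptions based on one common formulation, not on the authors' code: the 1/2 factor in the L2 term, the epsilon, the zero-initialized accumulators, and the exact placement of momentum in the update. The names `softmax_cross_entropy_l2` and `RMSPropMomentum` are hypothetical.

```python
import numpy as np

LAMBDA_L2 = 1e-4  # L2 penalty weight reported in the abstract


def softmax_cross_entropy_l2(logits, labels, weights, lam=LAMBDA_L2):
    """Mean softmax cross entropy over a batch plus lam * sum(w**2) / 2.

    logits:  (batch, 2) unnormalized scores (class order is an assumption)
    labels:  (batch,) integer class indices, e.g. 0 = non-tumor, 1 = tumor
    weights: iterable of weight arrays to regularize
    """
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    ce = -log_probs[np.arange(len(labels)), labels].mean()
    l2 = sum(np.sum(w ** 2) for w in weights) / 2.0  # 1/2 factor: assumed convention
    return ce + lam * l2


class RMSPropMomentum:
    """One common formulation of RMSProp with momentum, for a single parameter array."""

    def __init__(self, lr=1e-3, decay=0.9, momentum=0.9, eps=1e-8):
        self.lr, self.decay, self.momentum, self.eps = lr, decay, momentum, eps
        self.ms = None   # running mean of squared gradients
        self.mom = None  # momentum buffer

    def step(self, w, grad):
        """Return updated parameters given the current parameters and gradient."""
        if self.ms is None:
            self.ms = np.zeros_like(w)
            self.mom = np.zeros_like(w)
        self.ms = self.decay * self.ms + (1.0 - self.decay) * grad ** 2
        self.mom = self.momentum * self.mom + self.lr * grad / np.sqrt(self.ms + self.eps)
        return w - self.mom


# Toy usage: minimize 0.5 * ||w||^2, whose gradient is w itself.
opt = RMSPropMomentum()
w = np.array([1.0, -2.0])
for _ in range(1000):
    w = opt.step(w, grad=w)
```

In the actual experiment, each `step` call would correspond to one mini-batch of 20 patches, and training would run for 64 epochs.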
Dataset: This study used biopsy samples from 78 brain tumor patients with pre-operative MRI including T1, T1+C, T2, FLAIR, DWI, and DSC. “True biopsies” came from patients with invasive brain tumor who had co-localized tissue samples from NEL regions (n=72). To balance the class representation, “virtual biopsies” were sampled from patients with meningioma (n=1) and metastasis (n=5), which are considered non-infiltrative. Sample distributions are given in TABLE 1. Imaging was resampled to 1 mm³ voxels, bias corrected using the N4ITK algorithm[3], and normalized to white matter using methods described by Goetz et al[4]. ADC maps were computed from DWI, and nRCBV and nRCBF maps were computed from DSC. 3D patches centered at each biopsy site were extracted from the processed imaging, excluding the raw DWI and DSC series. The patches were then divided between training and testing datasets (70:30), along with the corresponding tissue classification. Flipping and rotation augmentation was used to increase the training dataset size by a factor of 8. The final dataset comprised 1312 (augmented) training samples and 68 testing samples.

Model and Training: A 3D convolutional neural network capped with fully connected layers (FIGURE 1) was trained to discriminate between tumor and non-tumor tissue. The previously described imaging served as inputs, and the corresponding biopsy results served as ground-truth outputs. The loss function was softmax cross entropy with an L2 regularization penalty (lambda=1e-4). RMSProp (lr=1e-3, decay=0.9, momentum=0.9) was chosen as the optimizer based on previous experimental success[5]. The model was trained with a batch size of 20 for 64 epochs on a single NVIDIA Tesla K40 GPU.

Results

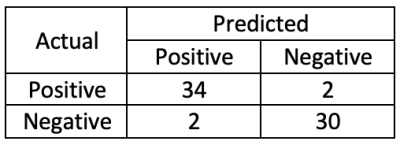

Model performance was evaluated on the 68 samples of the testing dataset. TABLE 2 shows the resulting confusion matrix. Test statistics were: Accuracy=0.941, Precision=0.944, Recall=0.944, Specificity=0.938.

Discussion/Conclusions
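The reported statistics all follow from the four cells of a binary confusion matrix. Treat tumor as the positive class (an assumption). Then TP=34, FP=2, FN=2, TN=30 is the only integer split of the 68 test samples that reproduces the reported accuracy (64/68) and the reported precision and recall (34/36). These counts are derived arithmetically here, not read from TABLE 2. Specificity then equals TN/(TN+FP) = 30/32. The helper name `binary_metrics` is hypothetical.

```python
def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Standard binary classification metrics from confusion-matrix counts."""
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": tp / (tp + fp),      # positive predictive value
        "recall": tp / (tp + fn),         # sensitivity
        "specificity": tn / (tn + fp),    # true negative rate
    }


# Counts derived from the reported statistics (tumor = positive class, assumed).
metrics = binary_metrics(tp=34, fp=2, fn=2, tn=30)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Because precision and recall are equal, FP must equal FN. With 64 of the 68 samples classified correctly, that leaves 2 false positives and 2 false negatives.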

These preliminary results demonstrate the potential for neural networks to distinguish tumor from edema in non-enhancing lesions and motivate continued investigation. In future iterations of this experiment, k-fold cross validation will be used to obtain a less biased estimate of model performance while accounting for the small dataset size. The dataset is also expected to grow with continued patient recruitment. Finally, this voxel-wise classification method can be extended to generate predictive maps of infiltrative tumor burden over NEL regions without the need for invasive biopsies. Clinically, such maps could improve patient outcomes by guiding treatment planning and reducing overall patient burden.

Acknowledgements
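The planned k-fold cross validation can be sketched as follows. This sketch splits by patient rather than by sample, so that no patient contributes patches to both the training and testing folds. That grouping is an assumption of the sketch, as are the default k=5 and the hypothetical function name `patient_kfold`. Under this scheme, augmentation would be applied only within each training fold.

```python
import numpy as np


def patient_kfold(patient_ids, k=5, seed=0):
    """Yield (train_idx, test_idx) index arrays, keeping each patient in a single fold."""
    patient_ids = np.asarray(patient_ids)
    patients = np.unique(patient_ids)
    rng = np.random.default_rng(seed)
    rng.shuffle(patients)                      # randomize patient-to-fold assignment
    for fold_patients in np.array_split(patients, k):
        test_mask = np.isin(patient_ids, fold_patients)
        yield np.flatnonzero(~test_mask), np.flatnonzero(test_mask)


# Hypothetical example: 10 biopsy samples from 6 patients, 3 folds.
ids = [1, 1, 2, 3, 3, 3, 4, 5, 6, 6]
for train_idx, test_idx in patient_kfold(ids, k=3):
    print(len(train_idx), len(test_idx))
```

Every sample appears in exactly one test fold, so averaging the per-fold metrics uses all biopsies for evaluation. This matters for a dataset of this size.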

Funding support: NIH/NCI U01 CA176110 and NIH/NCI R01 CA221938

Computational Resources: Research Computing Center at Medical College of Wisconsin

COI: IQ-AI (KMS, ownership interest) and Imaging Biometrics (KMS, financial interest).

References

[1] Thakkar JP, et al. Epidemiologic and molecular prognostic review of glioblastoma. Cancer Epidemiol Biomarkers Prev. 2014;23(10):1085-96.

[2] Brandsma D, van den Bent MJ. Pseudoprogression and pseudoresponse in the treatment of gliomas. Curr Opin Neurol. 2009;22:633-8.

[3] Tustison NJ, et al. N4ITK: improved N3 bias correction. IEEE Trans Med Imaging. 2010;29(6):1310-20. doi:10.1109/TMI.2010.2046908

[4] Goetz M, et al. Proceedings MICCAI BraTS (Brain Tumor Segmentation Challenge). 2014:6-11.

[5] Wujek R, Schmainda K. Standardization of MRI as pre-processing method for machine learning based segmentation. Proc Int Soc Magn Reson Med. 2019; Montreal, Canada.

Figures

FIGURE 1. Model Architecture. The network consists of 4 convolutional layers, 2 pooling layers, and 2 fully connected layers, as described at the left and schematized above. A softmax function applied to the output determines tumor vs. non-tumor.

TABLE 1. Dataset Description. Virtual biopsies were added to balance the dataset, giving a final count of 116 tumor samples and 116 non-tumor samples.

TABLE 2. Confusion Matrix.