1873

Predicting the brain state index, pupil dynamics, with rs-fMRI signal-trained models

1 Translational Neuroimaging and Neural Control Group, High Field Magnetic Resonance Department, Max Planck Institute for Biological Cybernetics, Tuebingen, Germany

2 Graduate Training Centre of Neuroscience, International Max Planck Research School, University of Tuebingen, Tuebingen, Germany

3 Forschungszentrum Jülich, Jülich, Germany

4 Athinoula A. Martinos Center for Biomedical Imaging, Massachusetts General Hospital and Harvard Medical School, Charlestown, MA, United States

Synopsis

We recently acquired resting-state fMRI (rs-fMRI) signals together with pupillometry from anesthetized rats to investigate correlations between specific resting-state networks and brain state-specific pupil dynamics. Here, we used these data to estimate an instantaneous arousal index from the rs-fMRI signal. We evaluated three methods for predicting pupil dynamics: linear regression (LR), gated recurrent unit (GRU) neural networks, and a previously proposed correlation-based (CC) template approach. LR and GRU provided significantly better predictions than the CC method. In addition, by summing PCA spatial maps weighted by the LR coefficients, we identified specific brain regions related to pupil dynamics as a brain state index.

INTRODUCTION

Through involuntary eye opening and closing and changes in pupil size, the mammalian eye can reveal information about the subject's attention1, level of cognition2,3, fear4 and arousal5,6. Furthermore, studies correlating the eye open/close index or pupil dynamics with electrophysiological recordings7 and global fMRI signal fluctuations8,9,10 suggest that these involuntary eye behaviors can serve as a brain state-specific index. In particular, the link between pupil dynamics and neuronal firing has been studied extensively across brain states in rodents11. We recently acquired resting-state fMRI (rs-fMRI) signals together with pupillometry from anesthetized rats to investigate correlations between specific resting-state networks and brain state-specific pupil dynamics10. Here, we used these data to estimate an instantaneous arousal index from the rs-fMRI signal. We evaluated three methods for predicting pupil dynamics, including the correlation-based approach proposed by Chang et al.9

METHODS

fMRI acquisition and pre-processing

Data were acquired on a 14.1 T/26 cm magnet with a 12 cm diameter gradient and an elliptical transceiver surface coil (~2 × 2.7 cm). Functional whole-brain 3D EPI scans had the following parameters: TR 1 s, TE 12.5 ms, matrix size 48 × 48 × 32, resolution 400 × 400 × 600 µm, 925 TRs (15 min 25 s). Anatomical RARE images were additionally acquired for registration with: TR 4 s, TE 9 ms, matrix size 128 × 128, 32 slices, 150 µm in-plane resolution, 600 µm slice thickness, RARE factor 8. Co-registered volumes were spatially smoothed with a Gaussian kernel (0.8 mm FWHM), normalized by their mean and variance, and temporally band-pass filtered (0.005 to 0.15 Hz).
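The pre-processing steps above can be sketched in NumPy as follows. This is an illustrative sketch, not the pipeline actually used: the abstract does not name the filter type, so an ideal (brick-wall) FFT band-pass filter is assumed here, normalization is assumed to mean z-scoring each voxel time series, and the helper names (`fwhm_to_sigma_vox`, `zscore`, `fft_bandpass`) are hypothetical. The FWHM-to-sigma conversion shows how the 0.8 mm kernel maps onto the anisotropic 400 × 400 × 600 µm voxels; the resulting per-axis sigma could, for example, be passed as the `sigma` argument of `scipy.ndimage.gaussian_filter`.

```python
import numpy as np

def fwhm_to_sigma_vox(fwhm_mm, voxel_size_mm):
    """Convert a Gaussian FWHM in mm to a per-axis standard deviation in voxels."""
    sigma_mm = fwhm_mm / (2.0 * np.sqrt(2.0 * np.log(2.0)))  # FWHM = 2*sqrt(2 ln 2)*sigma
    return sigma_mm / np.asarray(voxel_size_mm, dtype=float)

def zscore(ts, axis=-1):
    """Subtract the mean and divide by the standard deviation along `axis` (assumed normalization)."""
    centered = ts - ts.mean(axis=axis, keepdims=True)
    std = centered.std(axis=axis, keepdims=True)
    return centered / np.where(std > 0, std, 1.0)  # leave constant series at zero

def fft_bandpass(ts, tr_s, low_hz=0.005, high_hz=0.15, axis=-1):
    """Ideal (brick-wall) band-pass filter along the time axis via the real FFT (assumed filter type)."""
    n = ts.shape[axis]
    freqs = np.fft.rfftfreq(n, d=tr_s)
    keep = (freqs >= low_hz) & (freqs <= high_hz)
    shape = [1] * ts.ndim
    shape[axis] = -1  # broadcast the frequency mask along the time axis
    spectrum = np.fft.rfft(ts, axis=axis) * keep.reshape(shape)
    return np.fft.irfft(spectrum, n=n, axis=axis)

# Usage on hypothetical data: 10 voxels x 925 TRs (TR = 1 s).
sigma_vox = fwhm_to_sigma_vox(0.8, (0.4, 0.4, 0.6))  # approx. [0.85, 0.85, 0.57]
rng = np.random.default_rng(0)
voxels = rng.standard_normal((10, 925))
filtered = fft_bandpass(zscore(voxels), tr_s=1.0)
```

A brick-wall filter keeps the sketch dependency-free and exactly preserves in-band components; a Butterworth or similar IIR filter would avoid ringing and is a common alternative in fMRI pipelines.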

Pupil tracking

Videos were processed using the DeepLabCut toolbox12 to track the pupil diameter in all video frames (29.97 Hz sampling rate). The toolbox's network was retrained using ~1300 frames extracted from 70 eye-monitoring videos. Video frames were clustered by visual appearance using k-means, and distinct training frames were automatically selected from all clusters. In each training frame, four pupil edge points were labeled manually. After training, the coordinates of the four points were estimated in all recorded frames and used to calculate the pupil diameter as:

$$d=\frac{\sqrt{(x_2-x_1)^2+(y_2-y_1)^2}+\sqrt{(x_4-x_3)^2+(y_4-y_3)^2}}{2}$$

Finally, the pupil diameter signals were averaged over 1 s windows to match the fMRI temporal resolution and to reduce noise.
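The diameter formula and the 1 s averaging can be sketched as below. This is a minimal illustration under stated assumptions: the tracked coordinates are assumed to be stored as an array of shape (frames, 4, 2), with points 1-2 and 3-4 lying on opposite pupil edges as implied by the equation, and incomplete trailing windows are assumed to be discarded. The function names are hypothetical and are not part of DeepLabCut.

```python
import numpy as np

def pupil_diameter(points):
    """Mean of the two edge-to-edge distances, following the equation for d.

    points: (n_frames, 4, 2) array of (x, y); assumes points 1-2 and 3-4 are opposite edges.
    """
    p = np.asarray(points, dtype=float)
    d12 = np.linalg.norm(p[:, 1] - p[:, 0], axis=1)  # distance between points 1 and 2
    d34 = np.linalg.norm(p[:, 3] - p[:, 2], axis=1)  # distance between points 3 and 4
    return (d12 + d34) / 2.0

def average_per_second(signal, fs_hz=29.97, window_s=1.0):
    """Average the samples in consecutive, non-overlapping windows of `window_s` seconds."""
    signal = np.asarray(signal, dtype=float)
    t = np.arange(signal.size) / fs_hz              # sample times in seconds
    bins = np.floor(t / window_s).astype(int)       # window index of each sample
    n_full = int(signal.size / (fs_hz * window_s))  # complete windows only (assumption)
    keep = bins < n_full
    sums = np.bincount(bins[keep], weights=signal[keep], minlength=n_full)
    counts = np.bincount(bins[keep], minlength=n_full)
    return sums / counts

# Usage on hypothetical tracking output: one frame with a horizontal 4-pixel
# chord (points 1-2) and a vertical 2-pixel chord (points 3-4).
frame_points = np.array([[[0, 0], [4, 0], [0, 0], [0, 2]]])
print(pupil_diameter(frame_points))  # [3.]
binned = average_per_second(np.full(300, 5.0))  # ~10 s of samples -> 10 one-second values
```

Binning by sample time rather than by a fixed sample count handles the non-integer 29.97 Hz frame rate, so each output value corresponds to one 1 s fMRI TR.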

GRU

The gated recurrent unit (GRU)13 network is a recurrent neural network architecture that uses a gating mechanism to control the flow of information, allowing the network to capture dependencies at different time scales in the processed data. It is trained using error backpropagation through time. The GRU encodes each element 𝒙(𝑡) of the input PCA-fMRI sequence 𝒙 into a hidden state vector 𝒉(𝑡) by computing the following functions, where 𝒓(𝑡) is the reset gate, 𝒛(𝑡) the update gate, 𝒏(𝑡) the candidate state, σ the logistic sigmoid and ⊙ element-wise multiplication:

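$$\mathbf{r}(t)=\sigma\left(\mathbf{W}_{ir}\mathbf{x}(t)+\mathbf{b}_{ir}+\mathbf{W}_{hr}\mathbf{h}(t-1)+\mathbf{b}_{hr}\right)$$

$$\mathbf{z}(t)=\sigma\left(\mathbf{W}_{iz}\mathbf{x}(t)+\mathbf{b}_{iz}+\mathbf{W}_{hz}\mathbf{h}(t-1)+\mathbf{b}_{hz}\right)$$

$$\mathbf{n}(t)=\tanh\left(\mathbf{W}_{in}\mathbf{x}(t)+\mathbf{b}_{in}+\mathbf{r}(t)\odot\left(\mathbf{W}_{hn}\mathbf{h}(t-1)+\mathbf{b}_{hn}\right)\right)$$

$$\mathbf{h}(t)=\left(1-\mathbf{z}(t)\right)\odot\mathbf{n}(t)+\mathbf{z}(t)\odot\mathbf{h}(t-1)$$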

The hidden state value is then linearly weighted at every time point to generate the arousal index prediction. Hyperparameter values were found using Bayesian optimization14.
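The GRU equations and the linear read-out can be made concrete with a forward-pass sketch in NumPy. This only illustrates the inference step under assumptions: the random initialization, the 32 hidden units and the parameter dictionary keys are hypothetical (the abstract does not report the optimized hyperparameters), training by backpropagation through time and the Bayesian hyperparameter search are omitted, and the 300 input dimensions follow the 300 PCA components mentioned in the Fig. 4 caption. Deep learning libraries such as PyTorch (`torch.nn.GRU`) implement the same gate equations.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def init_params(n_in, n_hidden, rng):
    """Hypothetical random initialization; keys mirror the equations (W_ir, b_ir, ...)."""
    p = {}
    for g in ("r", "z", "n"):
        p[f"W_i{g}"] = rng.normal(0.0, 0.1, (n_hidden, n_in))
        p[f"W_h{g}"] = rng.normal(0.0, 0.1, (n_hidden, n_hidden))
        p[f"b_i{g}"] = np.zeros(n_hidden)
        p[f"b_h{g}"] = np.zeros(n_hidden)
    p["w_out"] = rng.normal(0.0, 0.1, n_hidden)  # linear read-out weights
    p["b_out"] = 0.0
    return p

def gru_predict(x, p):
    """x: (T, n_in) PCA-fMRI sequence -> (T,) predicted pupil signal, one value per TR."""
    h = np.zeros(p["W_hr"].shape[0])  # h(0) = 0
    y = np.empty(x.shape[0])
    for t in range(x.shape[0]):
        r = sigmoid(p["W_ir"] @ x[t] + p["b_ir"] + p["W_hr"] @ h + p["b_hr"])  # reset gate
        z = sigmoid(p["W_iz"] @ x[t] + p["b_iz"] + p["W_hz"] @ h + p["b_hz"])  # update gate
        n = np.tanh(p["W_in"] @ x[t] + p["b_in"] + r * (p["W_hn"] @ h + p["b_hn"]))
        h = (1.0 - z) * n + z * h                     # new hidden state
        y[t] = p["w_out"] @ h + p["b_out"]            # linear weighting of the hidden state
    return y

# Usage on hypothetical data (untrained weights, so the output is not a real prediction).
rng = np.random.default_rng(0)
params = init_params(n_in=300, n_hidden=32, rng=rng)
pca_seq = rng.standard_normal((925, 300))
pred = gru_predict(pca_seq, params)
```

The update gate controls the time scale: when z(t) is close to 1 the previous hidden state is carried over almost unchanged, which is how the GRU can retain slow arousal-related fluctuations across many TRs.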

CC template arousal estimation

Both pupil and fMRI signals were concatenated across trials. Then the pupil signal was correlated with each brain voxel's time course to create a spatial arousal correlation template. This template was then spatially correlated with each fMRI volume to summarize the similarity between the template and the fMRI signal at every time point. This process generated a time course of the estimated arousal index.
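The two correlation steps of the CC template approach can be sketched as follows. The sketch assumes the trials have already been concatenated along time (for example with `np.concatenate(trials, axis=0)`) into a (time × voxels) matrix, and that both correlations are Pearson correlations; the function names and the synthetic data are hypothetical.

```python
import numpy as np

def zscore(a, axis):
    """Zero mean, unit (population) standard deviation along `axis`."""
    a = a - a.mean(axis=axis, keepdims=True)
    sd = a.std(axis=axis, keepdims=True)
    return a / np.where(sd > 0, sd, 1.0)

def cc_template(fmri, pupil):
    """Voxelwise Pearson correlation with the pupil signal.
    fmri: (T, V) volumes concatenated across trials; pupil: (T,). Returns (V,)."""
    return zscore(fmri, axis=0).T @ zscore(pupil, axis=0) / len(pupil)

def cc_arousal(fmri, template):
    """Spatial Pearson correlation of the template with every volume. Returns (T,)."""
    return zscore(fmri, axis=1) @ zscore(template, axis=0) / len(template)

# Usage on hypothetical data: half of the voxels follow the pupil signal.
rng = np.random.default_rng(0)
T, V = 500, 200
pupil = np.sin(2 * np.pi * np.arange(T) / 50)
loading = np.r_[np.ones(V // 2), np.zeros(V // 2)]
fmri = np.outer(pupil, loading) + 0.5 * rng.standard_normal((T, V))
template = cc_template(fmri, pupil)   # temporal correlation per voxel
arousal = cc_arousal(fmri, template)  # spatial correlation per volume
```

The mean of the product of two z-scored vectors equals their Pearson correlation, so each step reduces to a single matrix-vector product.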

RESULTS

Here, we used 70 rs-fMRI trials with concurrently acquired pupil diameter signals (n = 70; Fig. 1). We compared three methods of estimating instantaneous arousal from rs-fMRI signals:

- the previously proposed correlation (CC) template9,10,

- linear regression (LR),

- a GRU neural network.

DISCUSSION

We compared three methods to predict brain state-dependent pupil dynamics from rs-fMRI data. LR and GRU provided significantly better predictions than the previously proposed CC template method. In addition, the sum of PCA spatial maps weighted by the LR coefficients identified specific brain regions related to pupil dynamics as a brain state index. Future work should explore the internal features of the GRU as well as applications in humans.

Acknowledgements

This research was supported by NIH Brain Initiative funding (RF1NS113278-01), the S10 instrument grant (S10 RR023009-01) to the Martinos Center, German Research Foundation (DFG) Yu215/3-1, BMBF 01GQ1702, and internal funding from the Max Planck Society. We thank Dr. N. Avdievitch and Ms. H. Schulz for technical support, Dr. E. Weiler, Ms. M. Pitscheider and Ms. S. Fischer for animal protocol and maintenance support, and the teams of Mr. J. Boldt and Mr. O. Holder for mechanical and electrical support.

References

1. Wainstein, G., Rojas-Libano, D., Crossley, N.A., Carrasco, X., Aboitiz, F., and Ossandon, T. (2017). Pupil Size Tracks Attentional Performance In Attention-Deficit/Hyperactivity Disorder. Sci Rep 7, 8228.

2. Eckstein, M.K., Guerra-Carrillo, B., Miller Singley, A.T., and Bunge, S.A. (2017). Beyond eye gaze: What else can eyetracking reveal about cognition and cognitive development? Dev Cogn Neurosci 25, 69-91.

3. Knapen, T., de Gee, J.W., Brascamp, J., Nuiten, S., Hoppenbrouwers, S., and Theeuwes, J. (2016). Cognitive and Ocular Factors Jointly Determine Pupil Responses under Equiluminance. PLoS One 11, e0155574.

4. Leuchs, L., Schneider, M., Czisch, M., and Spoormaker, V.I. (2017). Neural correlates of pupil dilation during human fear learning. Neuroimage 147, 186-197.

5. Chang, C., Leopold, D.A., Scholvinck, M.L., Mandelkow, H., Picchioni, D., Liu, X., Ye, F.Q., Turchi, J.N., and Duyn, J.H. (2016). Tracking brain arousal fluctuations with fMRI. Proc Natl Acad Sci U S A 113, 4518-4523.

6. Unsworth, N., and Robison, M.K. (2018). Tracking arousal state and mind wandering with pupillometry. Cogn Affect Behav Neurosci 18, 638-664.

7. Reimer, J., Froudarakis, E., Cadwell, C.R., Yatsenko, D., Denfield, G.H., and Tolias, A.S. (2014). Pupil fluctuations track fast switching of cortical states during quiet wakefulness. Neuron 84, 355-362.

8. Siegle, G.J., Steinhauer, S.R., Stenger, V.A., Konecky, R., and Carter, C.S. (2003). Use of concurrent pupil dilation assessment to inform interpretation and analysis of fMRI data. Neuroimage 20, 114-124.

9. Chang, C., Leopold, D.A., Scholvinck, M.L., Mandelkow, H., Picchioni, D., Liu, X., Ye, F.Q., Turchi, J.N., and Duyn, J.H. (2016). Tracking brain arousal fluctuations with fMRI. Proc Natl Acad Sci U S A 113, 4518-4523.

10. Pais-Roldan, P., Takahashi, K., Chen, Y., Zeng, H., Jiang, Y., and Yu, X. (2019). Simultaneous pupillometry, calcium recording and fMRI to track brain state changes in the rat. Program No. 613.03. 2019 Neuroscience Meeting Planner. Chicago, IL: Society for Neuroscience.

11. McGinley, M.J., David, S.V., and McCormick, D.A. (2015). Cortical Membrane Potential Signature of Optimal States for Sensory Signal Detection. Neuron 87, 179-192.

12. Mathis, A., Mamidanna, P., Cury, K.M., Abe, T., Murthy, V.N., Mathis, M.W., and Bethge, M. (2018). DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nat Neurosci 21, 1281.

13. Cho, K., van Merrienboer, B., Gulcehre, C., Bahdanau, D., Bougares, F., Schwenk, H., and Bengio, Y. (2014). Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation. arXiv:1406.1078.

14. Bergstra, J., Bardenet, R., Bengio, Y., and Kegl, B. (2011). Algorithms for hyper-parameter optimization. In Proceedings of the 24th International Conference on Neural Information Processing Systems (Granada, Spain: Curran Associates Inc.), pp. 2546-2554.

Figures

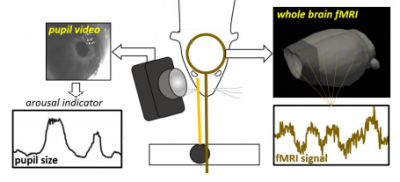

Fig. 1. Multi-modal data acquisition platform

rs-fMRI data acquisition is accompanied by simultaneous monitoring of the rat eye. Pupil diameter is extracted from each video frame using the DeepLabCut toolbox.

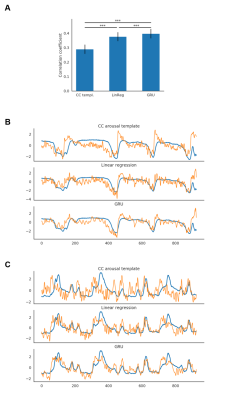

Fig. 2. Prediction results

A. GRU predictions obtained the best scores (0.40 ± 0.03; mean ± SE; p vs. LR = 0.0001; p vs. CC = 1.20 × 10⁻⁶). Linear regression performed better (0.38 ± 0.03; mean ± SE; p = 1.13 × 10⁻⁵) than the CC template-based estimation (0.29 ± 0.03; mean ± SE).

B, C. Example predictions for two trials. Blue traces show the original pupil signals; orange traces show the predictions generated by each method. Linear regression generates predictions similar to the GRU's but noisier. The signals were variance-normalized.

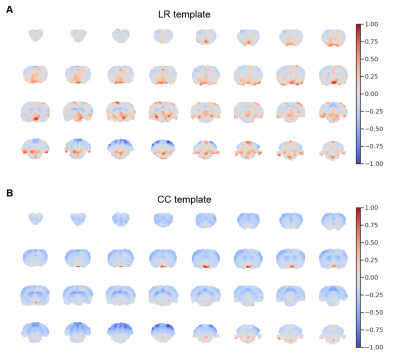

Fig. 4. LR and CC templates

After training the linear regression model, all of the 300 PCA spatial maps were multiplied by their corresponding linear regression weights and summed together. The resulting map (A) highlights areas that contributed to predictions. A pattern distinct from the CC template (B) was observed.
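The weighted map described in this caption can be sketched as follows: PCA of the fMRI data, ordinary least-squares regression from the PCA time courses to the pupil signal, and a weighted sum of the spatial maps. This is an assumed reconstruction for illustration only; the abstract does not specify how the PCA was computed (here an SVD of the mean-centered time × voxel matrix), and the sizes, random data and function names are hypothetical (the study used 300 components).

```python
import numpy as np

def pca(data, n_components):
    """data: (T, V). Returns PCA time courses (T, k) and spatial maps (k, V)."""
    centered = data - data.mean(axis=0)
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    return u[:, :n_components] * s[:n_components], vt[:n_components]

def fit_linear_regression(x, y):
    """Ordinary least squares with an intercept; returns (weights, intercept)."""
    design = np.column_stack([x, np.ones(len(x))])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[:-1], coef[-1]

def weighted_map(weights, spatial_maps):
    """Multiply each PCA spatial map by its regression weight and sum -> (V,)."""
    return weights @ spatial_maps

# Usage on hypothetical data (50 components instead of 300 to keep it small).
rng = np.random.default_rng(1)
data = rng.standard_normal((400, 1000))  # T x V
pupil = rng.standard_normal(400)         # target signal
tc, maps = pca(data, n_components=50)
w, b = fit_linear_regression(tc, pupil)
lr_map = weighted_map(w, maps)           # one value per voxel, cf. panel A
```

In this sketch the PCA time courses are projections of the centered data onto the spatial maps, so applying the weighted map to the centered data reproduces the regression output (without intercept). This is one way to see why the map can be read as the voxel-space pattern that drives the LR predictions.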