1866

Automatic Assessment of DWI-ASPECTS for Assessment of Acute Ischemic Stroke using Recurrent Residual Convolutional Network

1 Department of Radiology, Chonnam National University, Gwangju, Korea, Republic of
2 Department of Radiology, Chonnam National University Hospital, Gwangju, Korea, Republic of
3 Department of Radiology, Chonnam National University Hwasun Hospital, Gwangju, Korea, Republic of
4 Department of Electronics and Computer Engineering, Chonnam National University, Gwangju, Korea, Republic of
5 Department of Radiology, Chonnam National University Medical School and Hospital, Gwangju, Korea, Republic of

Synopsis

The purpose of this study was to demonstrate the feasibility of using deep learning algorithms for automatic classification of DWI-ASPECTS in patients with acute ischemic stroke. DWI data from 319 patients with acute anterior circulation stroke were used to train and validate recurrent residual convolutional neural network (RRCN) models for the binary task of classifying low vs. high DWI-ASPECTS. Our best model, which fused two RRCN models by maximum voting, achieved an accuracy of 84.9 ± 1.5% and an AUC of 0.925 ± 0.009, suggesting that this algorithm may provide an important ancillary tool for clinicians in the time-sensitive assessment of DWI-ASPECTS in acute ischemic stroke patients.

Purpose

The Alberta Stroke Program Early Computed Tomographic Score applied to diffusion-weighted imaging (DWI-ASPECTS) has been shown to be a simple and accurate tool for detecting and semi-quantitatively scoring early ischemic changes in patients with anterior circulation stroke. This study aimed to demonstrate the feasibility of using deep learning algorithms for automatic binary classification of DWI-ASPECTS from the DWI data of patients with acute ischemic stroke.

Method and Materials

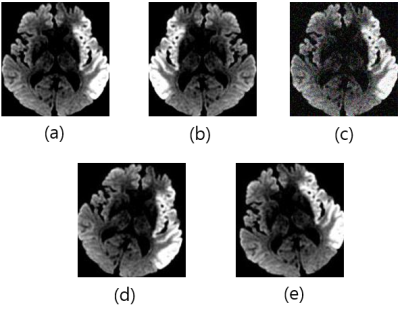
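The preprocessing and augmentation steps described in this section (Fig 1) can be sketched for a single 2D slice with NumPy, as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: contrast stretching is implemented as linear rescaling between hypothetical 1st/99th intensity percentiles, the Gaussian noise level `noise_sigma` is a hypothetical value, and rotation uses nearest-neighbour resampling about the image centre. Slice filtering and brain cropping are omitted because their criteria are not given. All function names are hypothetical.

```python
import numpy as np


def contrast_stretch(img, low_pct=1.0, high_pct=99.0):
    """Linearly map the [low_pct, high_pct] intensity percentiles to [0, 1].

    The percentile bounds are hypothetical; the abstract does not give them.
    """
    lo, hi = np.percentile(img, [low_pct, high_pct])
    if hi <= lo:  # flat image: nothing to stretch
        return np.zeros_like(img, dtype=np.float64)
    return np.clip((img - lo) / (hi - lo), 0.0, 1.0)


def rotate_nn(img, angle_deg):
    """Rotate a 2D image about its centre with nearest-neighbour sampling.

    Output pixels whose source falls outside the image are set to 0.
    """
    h, w = img.shape
    theta = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    yy, xx = np.mgrid[0:h, 0:w]
    # Inverse mapping: locate the source pixel for every output pixel.
    ys = np.cos(theta) * (yy - cy) - np.sin(theta) * (xx - cx) + cy
    xs = np.sin(theta) * (yy - cy) + np.cos(theta) * (xx - cx) + cx
    ys_i, xs_i = np.rint(ys).astype(int), np.rint(xs).astype(int)
    valid = (ys_i >= 0) & (ys_i < h) & (xs_i >= 0) & (xs_i < w)
    out = np.zeros_like(img)
    out[valid] = img[ys_i[valid], xs_i[valid]]
    return out


def augment(img, rng, noise_sigma=0.05, angle_deg=15.0):
    """Augmented copies named in the text: horizontal flip, additive
    Gaussian noise, and rotation by +/- angle_deg (noise_sigma is hypothetical)."""
    return [
        np.fliplr(img),
        img + rng.normal(0.0, noise_sigma, size=img.shape),
        rotate_nn(img, angle_deg),
        rotate_nn(img, -angle_deg),
    ]


rng = np.random.default_rng(0)
dwi_slice = rng.gamma(2.0, 100.0, size=(64, 64))  # synthetic stand-in for a DWI slice
stretched = contrast_stretch(dwi_slice)
augmented = augment(stretched, rng)
print(len(augmented), augmented[0].shape)  # 4 (64, 64)
```

Each training slice thus yields four augmented copies in addition to the original; whether the real pipeline applies the transforms separately or in combination is not stated, so the separate application here is an assumption.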

DWI data were included from 319 patients who presented at a tertiary stroke center with acute anterior circulation stroke due to large vessel occlusion within 6 hours of symptom onset. All patients underwent MRI on a 1.5 T scanner (Signa HDxt; GE Healthcare, Milwaukee, Wisconsin), and DWI data were obtained in the axial plane using a single-shot spin-echo echo-planar sequence (TR/TE = 9000/80 ms, slice thickness = 4 mm, FOV = 260 × 260 mm, b-values of 0 and 1000 s/mm²). DWI-ASPECTS were assessed by 2 neuroradiologists. Patients were classified into 2 groups according to their DWI-ASPECTS: 0-6 (n=121) and 7-10 (n=203). The dataset was divided into training (80%) and test (20%) sets. The training set was preprocessed by slice filtering, brain cropping, and contrast stretching, and then augmented by horizontal flipping, additive Gaussian noise, and rotation by 15 degrees to the left and right (Fig 1).

We used a recurrent residual convolutional neural network (RRCN), adapted from the VGG16 [1] and ResNet [2] architectures, consisting of five convolution blocks with a skip connection added to each block. Each convolution block had two or three convolution layers with 3 × 3 kernels, followed by one max pooling layer. The number of feature maps in the five blocks was 32, 64, 128, 256, and 512, respectively. The recurrent block contained one Long Short-Term Memory (LSTM) layer with 256 hidden nodes [3]. Figure 2 shows the flowchart of the proposed model.

To explore the effect of the fusion method and the type of ensemble model on performance, a second RRCN model was trained with five additional Dropout layers, one per convolution block [4]. Using the two RRCN models, three fusion methods were compared. The first method fused the two models at the feature level. In the second method, the average of the outputs of the two models was used as the final output. In the third method, the final output was obtained by voting for the maximum score among the outputs of the two models.

Results and Discussions
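The output-level fusion methods compared in Table 2, and the accuracy, AUC, and mean ± SD summaries reported in this section, can be illustrated with a short NumPy sketch. It is a hedged interpretation, not the authors' code: "maximum voting" is read here as taking, for each class, the maximum probability across the two RRCN models and predicting the class with the highest fused score; renormalising the fused scores to sum to 1 (so they can serve as an AUC score) and using the sample standard deviation (ddof=1) are assumptions. Feature-level fusion is not shown because it requires the trained networks. The model outputs are synthetic, and the helper names (`fuse`, `accuracy`, `auc`, `mean_sd`, `two_class`) are hypothetical.

```python
import numpy as np


def fuse(probs, method):
    """Fuse class probabilities of shape (n_models, n_samples, n_classes).

    'average' -> mean over models (the second method in the text).
    'max'     -> per-class maximum over models (one reading of maximum
                 voting), renormalised so each row sums to 1.
    """
    probs = np.asarray(probs, dtype=float)
    if method == "average":
        return probs.mean(axis=0)
    if method == "max":
        fused = probs.max(axis=0)
        return fused / fused.sum(axis=1, keepdims=True)
    raise ValueError(f"unknown fusion method: {method}")


def accuracy(y_true, probs):
    """Fraction of samples whose highest-scoring class matches the label."""
    return float(np.mean(np.argmax(probs, axis=1) == y_true))


def auc(y_true, score):
    """ROC AUC as the probability that a positive sample outscores a
    negative one (Mann-Whitney formulation; ties count 0.5)."""
    pos, neg = score[y_true == 1], score[y_true == 0]
    diff = pos[:, None] - neg[None, :]
    return float((np.sum(diff > 0) + 0.5 * np.sum(diff == 0)) / diff.size)


def mean_sd(values):
    """Mean and sample standard deviation over repeated trainings."""
    v = np.asarray(values, dtype=float)
    return float(v.mean()), float(v.std(ddof=1))


def two_class(p_high):
    """Build [P(low), P(high)] columns from P(high)."""
    p_high = np.asarray(p_high, dtype=float)
    return np.stack([1.0 - p_high, p_high], axis=1)


# Toy labels: 1 = high DWI-ASPECTS (7-10), 0 = low DWI-ASPECTS (0-6).
y = np.array([0, 0, 1, 1])
model_a = two_class([0.2, 0.6, 0.7, 0.3])  # synthetic outputs
model_b = two_class([0.1, 0.3, 0.4, 0.9])  # synthetic outputs
stacked = np.stack([model_a, model_b])

for name, p in [("A", model_a), ("B", model_b),
                ("average", fuse(stacked, "average")),
                ("max", fuse(stacked, "max"))]:
    print(name, accuracy(y, p), auc(y, p[:, 1]))
```

On this toy data, model A alone classifies half of the samples correctly and model B three quarters, while both fused outputs classify all four correctly; this only illustrates the mechanics and says nothing about the behaviour of the real models.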

Table 1 compares the proposed RRCN with pre-trained models and a 3DCNN for classifying DWI data from patients with low vs. high DWI-ASPECTS. Results are expressed as the mean ± standard deviation of three separate trainings. The accuracy of the proposed RRCN (84.4 ± 0.75%) was higher than those of pre-trained VGG16 [1] (72.8 ± 0.75%), pre-trained Inception V3 [6] (72.4 ± 2%), and 3DCNN [7] (81.7 ± 2%). The AUC of the RRCN (0.91 ± 0.001) was also higher than that of the 3DCNN (0.844 ± 0.012) (Fig 3). Table 2 compares model performance across the three fusion methods. Fusion by maximum voting achieved the highest performance, with an accuracy of 84.9 ± 1.5% and an AUC of 0.925 ± 0.009. The dichotomization criterion used in this study was based on previous findings of marked differences in clinical outcome between the two groups: several studies reported that patients with a DWI-ASPECTS ≥ 7 had distinct clinical outcomes compared with those with a DWI-ASPECTS < 7 after intra-arterial or intravenous pharmacologic thrombolysis [8,9]. These results suggest that the RRCN-based model developed in this study may provide an important ancillary tool for clinicians in the time-sensitive assessment of DWI-ASPECTS in acute ischemic stroke patients.

Conclusion

A deep learning framework may be useful for assessing DWI-ASPECTS in patients with acute anterior circulation stroke. Our results suggest that a deep learning-based method may assist physicians in making prompt decisions on treatment strategy.

Acknowledgements

This research was supported by grants from the National Research Foundation (NRF) of Korea (NRF-2017R1C1B5018396, NRF-2019R1I1A3A01059201) and from the Chonnam National University Hospital Biomedical Research Institute (CRI18019-1 and CRI18094-2).

References

1. Simonyan, K., Zisserman, A.: Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv:1409.1556, 2014. https://arxiv.org/abs/1409.1556

2. He, K., Zhang, X., Ren, S., Sun, J.: Deep Residual Learning for Image Recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 770–778, 2016.

3. Hochreiter, S., Schmidhuber, J.: Long Short-Term Memory. Neural Computation 9(8):1735–1780, 1997.

4. Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I., Salakhutdinov, R.: Dropout: A Simple Way to Prevent Neural Networks from Overfitting. Journal of Machine Learning Research 15:1929–1958, 2014.

5. McTaggart, R.A., Jovin, T.G., Lansberg, M.G., Mlynash, M., Jayaraman, M.V., Choudhri, O.A., et al.: Alberta stroke program early computed tomographic scoring performance in a series of patients undergoing computed tomography and MRI: reader agreement, modality agreement, and outcome prediction. Stroke 46:407–412, 2015.

6. Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., Wojna, Z.: Rethinking the Inception Architecture for Computer Vision. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2818–2826, 2016.

7. Tran, D., Bourdev, L., Fergus, R., Torresani, L., Paluri, M.: Learning Spatiotemporal Features with 3D Convolutional Networks. arXiv:1412.0767, 2014. https://arxiv.org/abs/1412.0767

8. Singer, O.C., Kurre, W., Humpich, M.C., et al.; MR Stroke Study Group Investigators: Risk assessment of symptomatic intracerebral hemorrhage after thrombolysis using DWI-ASPECTS. Stroke 40:2743–2748, 2009.

9. Nezu, T., Koga, M., Kimura, K., et al.: Pretreatment ASPECTS on DWI predicts 3-month outcome following rt-PA: SAMURAI rt-PA Registry. Neurology 75:555–561, 2010.

Figures