1845

Clinical evaluation of automated deep-learning based meningioma segmentation in multiparametric MRI

1UKK, Cologne, Germany

Synopsis

We trained an established deep-learning-model architecture (3D-Deep-Convolutional-Neural-Network, DeepMedic) on manual segmentations from 70 meningiomas independently segmented by two radiologists. The trained deep-learning model was then validated in a group of 55 meningiomas. Ground truth segmentations were established by two further radiologists in a consensus reading. In the validation-group the comparison of the automated deep-learning-model and manual segmentations revealed average dice-coefficients of 0.91±0.08 for contrast-enhancing-tumor volume and 0.82±0.12 for total-lesion-volume. In the training-group, interreader-variabilities of the two manual readers were 0.92±0.07 for contrast-enhancing-tumor and 0.88±0.05 for total-lesion-volume. Deep-learning based automated segmentation yielded high segmentation accuracy, comparable to manual interreader-variability.

We hypothesized that training an established deep-learning-model architecture (3D-Deep-Convolutional-Neural-Network, DeepMedic) that has already performed well in brain tumor segmentation9–11 might yield better results and require fewer resources than creating an entirely new deep-learning model from scratch. The objective of our study was to train this architecture on manual meningioma segmentations and then validate its performance on an independent group of meningiomas using routine multiparametric MR imaging data.

METHODS: Our retrospective study was approved by the local institutional review board. Only patients with a complete MR dataset (T1-weighted, T2-weighted, contrast-enhanced T1-weighted [T1CE], and FLAIR images) and a histopathological specimen were included. The final study population consisted of 125 patients, classified according to the current World Health Organization (WHO) guideline12. Patients were split into two groups: (i) training data for the deep-learning model (training group, n=70) and (ii) comparison between manual and automated segmentations (validation group, n=55). MR images were acquired at field strengths from 1.0 to 3.0 Tesla on different scanner types (from all major vendors) at referring institutions (n=86) and our own institution (n=39).

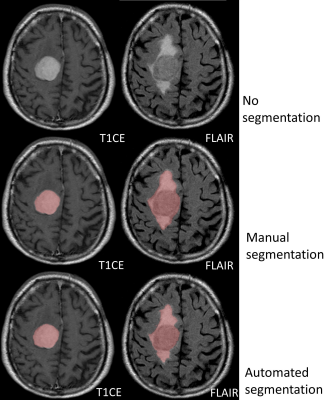

Manual segmentations were performed using IntelliSpace Discovery (Philips Healthcare). In T1CE, contrast-enhancing tumor was segmented. In FLAIR, solid tumor and surrounding edema were segmented. For evaluation, we applied a previously implemented method11 utilizing the following defined tumor volumes: (i) contrast-enhancing-tumor in T1CE and (ii) total-lesion-volume as the union of target volumes in T1CE and FLAIR, including solid contrast-enhancing tumor parts and surrounding edema. Manual segmentations in the training group were performed by two radiologists separately, resulting in two datasets of segmentations for each patient. To define optimal ground truth tumor volumes for the validation group, two additional radiologists segmented tumor volumes in consensus.
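The definition of total-lesion-volume as a union of two target volumes can be illustrated with a minimal sketch. It assumes both segmentations are already registered to the same voxel grid and are represented as sets of voxel indices. The function names, the toy masks, and the voxel size are hypothetical and not taken from the study's software.

```python
from typing import Set, Tuple

Voxel = Tuple[int, int, int]  # (x, y, z) index of a segmented voxel


def total_lesion_mask(t1ce_mask: Set[Voxel], flair_mask: Set[Voxel]) -> Set[Voxel]:
    """Union of the T1CE and FLAIR target volumes (total-lesion-volume)."""
    return t1ce_mask | flair_mask


def volume_cm3(mask: Set[Voxel], voxel_size_mm: Tuple[float, float, float]) -> float:
    """Volume of a mask in cm³, given the voxel edge lengths in mm."""
    dx, dy, dz = voxel_size_mm
    return len(mask) * dx * dy * dz / 1000.0  # 1 cm³ = 1000 mm³


# Toy example (hypothetical data): enhancing tumor plus surrounding edema.
t1ce = {(0, 0, 0), (1, 0, 0)}
flair = {(1, 0, 0), (2, 0, 0), (3, 0, 0)}
total = total_lesion_mask(t1ce, flair)
```

Voxels marked in both sequences are counted once, so the total-lesion-volume is never smaller than either input volume.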

The DeepMedic architecture9 was used, comprising a 3D convolutional neural network for segmentation and a 3D post-processing step to remove false positives. For training of the deep-learning model, the two sets of manual segmentations (one per reader) in T1CE and FLAIR were used, totaling 140 image sets, each including T1CE and FLAIR segmentations. For automated segmentation, imaging data were pre-processed by registration, skull stripping, resampling, and normalization.

The dice-coefficient was used to assess similarity between manual ground truth segmentations (S1) and automated deep-learning based segmentations (S2) in the validation group: DSC(S1,S2) = 2|S1∩S2| ÷ (|S1|+|S2|). The dice-coefficient was also used to evaluate interreader-variabilities between the manual segmentations of the two readers of the training group13. The Wilcoxon signed-rank test was used to test for statistically significant differences.
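As an illustration of the dice-coefficient defined above, the following sketch computes DSC for two segmentations given as sets of voxel indices. It is not the evaluation code used in the study. The function name and the toy masks are hypothetical, and returning 1.0 for two empty masks is an assumed convention.

```python
from typing import Iterable, Tuple

Voxel = Tuple[int, int, int]  # (x, y, z) index of a segmented voxel


def dice_coefficient(s1: Iterable[Voxel], s2: Iterable[Voxel]) -> float:
    """DSC(S1, S2) = 2|S1 ∩ S2| / (|S1| + |S2|)."""
    a, b = set(s1), set(s2)
    denominator = len(a) + len(b)
    if denominator == 0:
        # Assumed convention: two empty segmentations agree perfectly.
        return 1.0
    return 2.0 * len(a & b) / denominator


# Toy example (hypothetical data): two masks sharing 2 of 3 voxels each.
manual = {(0, 0, 0), (1, 0, 0), (2, 0, 0)}
automated = {(1, 0, 0), (2, 0, 0), (3, 0, 0)}
dsc = dice_coefficient(manual, automated)  # 2 * 2 / (3 + 3)
```

The coefficient ranges from 0 (no overlap) to 1 (identical segmentations) and is symmetric in its two arguments, so the same function applies to the interreader comparison.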

RESULTS: The 125 patients comprised 97 with WHO grade I meningioma and 28 with WHO grade II meningioma.

Training group: Manual segmentations for contrast-enhancing-tumor averaged 31.5±29.3 cm³ for the first and 31.1±28.9 cm³ for the second reader as well as 57.4±56.4 cm³ and 59.6±59.2 cm³ for total-lesion-volume. The dice-coefficients were 0.92±0.07 for contrast-enhancing-tumor and 0.88±0.05 for total-lesion-volume.

Validation group: Manual segmentations were 30.7±25.1 cm³ for contrast-enhancing-tumor volume and 71.3±66.0 cm³ for total-lesion-volume. Automated segmentations were comparable without significant differences, being 30.7±24.1 cm³ (p=0.95) for contrast-enhancing-tumor and 71.4±65.0 cm³ (p=0.94) for total-lesion-volume. The average dice-coefficients for comparing automated and manual segmentations were 0.91±0.08 (range: 0.54-0.97) for contrast-enhancing-tumor and 0.82±0.12 (range: 0.21-0.93) for total-lesion-volume. Automated segmentation performed significantly better for contrast-enhancing-tumor volume than for total-lesion-volume (0.91±0.08 vs. 0.82±0.12, p<0.001). There was no significant difference in dice-coefficients between grade I and II meningiomas or between skull base and convexity meningiomas.

DISCUSSION: Our trained deep-learning-model segmented contrast-enhancing-tumor and total-lesion-volume very accurately. The accuracy was comparable to the interreader-variabilities of the two readers from the training group. Further, our results are either comparable or superior to other recently published studies addressing automated brain tumor segmentation7,9–11,14–16 and general segmentation accuracies accounting for intra- and interreader-variabilities7,8.

Reliable automated meningioma segmentation as demonstrated in our study should considerably increase the availability of volumetric data, as time-consuming manual segmentation could be omitted. Further, automated segmentation should improve the reproducibility of measurements7. Accessibility of volumetric data is clinically warranted for several reasons. Monitoring of conservatively treated meningioma could be improved, as tumor growth can be detected with higher sensitivity by volumetric assessment than by conventional diameter methods5,6. Image-based tumor characterization by quantitative image analysis, e.g. radiomics-based or (deep) machine learning approaches, relies heavily on volumetric image segmentations7,17–19. Further, the extent of peritumoral edema has a decisive impact on clinical outcome as well as intraoperative performance. Assessment of edema by the total-lesion-volume therefore appears warranted20.

CONCLUSION: Automated meningioma segmentation by our trained deep-learning model is highly accurate and produces reliable results that are comparable to the interreader-variabilities of manual readers.

Acknowledgements

No acknowledgement found.

References

1. DeAngelis LM. Brain tumors. N. Engl. J. Med. 2001;344:114–123.

2. Schob S, Frydrychowicz C, Gawlitza M, et al. Signal Intensities in Preoperative MRI Do Not Reflect Proliferative Activity in Meningioma. Transl. Oncol. 2016;9:274–279.

3. Vernooij MW, Ikram MA, Tanghe HL, et al. Incidental Findings on Brain MRI in the General Population. N. Engl. J. Med. 2007;357:1821–1828.

4. Spasic M, Pelargos PE, Barnette N, et al. Incidental Meningiomas. Neurosurg. Clin. N. Am. 2016;27:229–238.

5. Chang V, Narang J, Schultz L, et al. Computer-aided volumetric analysis as a sensitive tool for the management of incidental meningiomas. Acta Neurochir. (Wien). 2012;154:589–597.

6. Fountain DM, Soon WC, Matys T, et al. Volumetric growth rates of meningioma and its correlation with histological diagnosis and clinical outcome: a systematic review. Acta Neurochir. (Wien). 2017;159:435–445.

7. Akkus Z, Galimzianova A, Hoogi A, et al. Deep Learning for Brain MRI Segmentation: State of the Art and Future Directions. J. Digit. Imaging. 2017:1–11.

8. Mazzara GP, Velthuizen RP, Pearlman JL, et al. Brain tumor target volume determination for radiation treatment planning through automated MRI segmentation. Int. J. Radiat. Oncol. 2004;59:300–312.

9. Kamnitsas K, Ledig C, Newcombe VFJ, et al. Efficient Multi-Scale 3D CNN with Fully Connected CRF for Accurate Brain Lesion Segmentation. Med. Image Anal. 2016;36:61–78.

10. Perkuhn M, Stavrinou P, Thiele F, et al. Clinical Evaluation of a Multiparametric Deep Learning Model for Glioblastoma Segmentation Using Heterogeneous Magnetic Resonance Imaging Data From Clinical Routine. Invest. Radiol. 2018;53:647–654.

11. Laukamp KR, Thiele F, Shakirin G, et al. Fully automated detection and segmentation of meningiomas using deep learning on routine multiparametric MRI. Eur. Radiol. 2018:1–9.

12. Louis DN, Perry A, Reifenberger G, et al. The 2016 World Health Organization Classification of Tumors of the Central Nervous System: a summary. Acta Neuropathol. 2016;131:803–820.

13. Crum WR, Camara O, Hill DLG. Generalized overlap measures for evaluation and validation in medical image analysis. IEEE Trans. Med. Imaging. 2006;25:1451–61.

14. Havaei M, Davy A, Warde-Farley D, et al. Brain tumor segmentation with Deep Neural Networks. Med. Image Anal. 2017;35:18–31.

15. Farzaneh N, Soroushmehr SMR, Williamson CA, et al. Automated subdural hematoma segmentation for traumatic brain injured (TBI) patients. In: 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). IEEE; 2017:3069–3072.

16. Zhuge Y, Krauze A V., Ning H, et al. Brain tumor segmentation using holistically nested neural networks in MRI images. Med. Phys. 2017;44:5234–5243.

17. Ma D, Gulani V, Seiberlich N, et al. Magnetic resonance fingerprinting. Nature. 2013;495:187–192.

18. Badve C, Yu A, Dastmalchian S, et al. MR Fingerprinting of Adult Brain Tumors: Initial Experience. AJNR. Am. J. Neuroradiol. 2017;38:492–499.

19. Laukamp K, Shakirin G, Baeßler B, et al. Accuracy of radiomics-based feature analysis on multiparametric MR images for non-invasive meningioma grading. World Neurosurg. 2019;Ahead of p.

20. Latini F, Larsson E-M, Ryttlefors M. Rapid and Accurate MRI Segmentation of Peritumoral Brain Edema in Meningiomas. Clin. Neuroradiol. 2017;27:145–152.