1833

Automated Segmentation of Human Skull to plan Craniofacial Surgery using dual-Radiofrequency dual-Echo, 3D Ultrashort Echo Time MRI sequence

1 Department of Bioengineering, University of Pennsylvania, Philadelphia, PA, United States, 2 Division of Plastic Surgery, Children’s Hospital of Philadelphia, Philadelphia, PA, United States, 3 Department of Radiology, University of Pennsylvania, Philadelphia, PA, United States

Synopsis

Bone-selective Magnetic Resonance Imaging (MRI), produced by a dual-radiofrequency (RF), dual-echo, 3D Ultrashort Echo Time (UTE) pulse sequence and a bone-selective image reconstruction process, provides a radiation-free imaging modality with high concordance to computed tomography (CT). This imaging technique is of specific interest to craniofacial surgeons. Here, we pilot the use of an automated segmentation pipeline on the bone-selective MR images with the goal of reducing the time required for the 3D segmentation step. This automated segmentation pipeline, used with bone-selective MRI, could eliminate the need for radiative CT, thereby reducing the risk of malignancy in pediatric craniofacial patients.

Introduction

Bone-selective Magnetic Resonance Imaging (MRI), produced by a dual-radiofrequency (RF), dual-echo, 3D Ultrashort Echo Time (UTE) pulse sequence and a bone-selective image reconstruction process, provides a radiation-free imaging modality with high concordance to computed tomography (CT). This imaging technique, though beneficial in many realms of clinical practice, is of specific interest to craniofacial surgeons. Patients with craniofacial anomalies may require multiple pre- and post-operative CT scans at a young age, which leads to a higher cumulative risk of malignancy than an isolated CT scan [1,2]. Acquiring images with this MR sequence instead would eliminate the need for radiative CT. To plan a complex craniofacial surgery, it is essential to delineate the human skull in the MR images and visualize the anatomy as a 3D rendering. At present, the manual image segmentation required to produce the bone-selective MR-based 3D skull segmentation is time- and labor-intensive. This acts as a bottleneck in clinical practice, especially in cases requiring rapid surgical intervention. The aim of this study is to pilot the use of an automated segmentation pipeline on the bone-selective MR images with the goal of reducing the time required for the 3D segmentation step.

Materials

MR images were acquired with the dual-RF, dual-echo, 3D UTE pulse sequence on a 3T scanner (TIM Trio; Siemens Medical Solutions, Erlangen, Germany) at 1 mm³ isotropic resolution, and low-dose research CT images were acquired at 0.47 × 0.47 mm² in-plane resolution and 0.75 mm slice thickness, in 21 healthy adult volunteers (8 male, 13 female; median age 27.4 years, range 25.3-45.7). Two MR images were constructed: I1, the bone-selective image (combining ECHO11 and ECHO21), and I2, the longer echo time image (combining ECHO12 and ECHO22). These were subtracted to derive the subtraction image Ibone = I1 - I2, as described in [3,4] [Figure 1]. Each CT image was thresholded and then manually corrected by an expert in ITK-SNAP [5] to segment the skull. The CT images were then rigidly registered to image I1 with the mutual information metric, using the software tool "greedy" [6], and the corresponding skull segmentations were transformed to the I1 image space. This yields "ground-truth" skull segmentations that serve as the reference for the MR images.

Methods
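As a rough illustration of the image preparation described under Materials, the sketch below forms the subtraction image Ibone = I1 - I2 and an initial CT bone mask with NumPy. It assumes I1 and I2 are already reconstructed on the same voxel grid. The function names and the 300 HU cutoff are hypothetical; the abstract does not state the threshold that was used, and the manual correction step is not modeled.

```python
import numpy as np


def subtraction_image(i1, i2):
    """Return Ibone = I1 - I2 for two images sampled on the same voxel grid."""
    i1 = np.asarray(i1, dtype=np.float32)
    i2 = np.asarray(i2, dtype=np.float32)
    if i1.shape != i2.shape:
        raise ValueError("I1 and I2 must share the same voxel grid")
    return i1 - i2


def threshold_ct(ct_hu, lower_hu=300.0):
    """Initial bone mask from a CT volume in Hounsfield units.

    lower_hu is a hypothetical cutoff chosen for illustration only; in the
    study the thresholded mask was subsequently corrected by an expert.
    """
    return np.asarray(ct_hu) >= lower_hu
```

In practice the images would be read from and written to a medical image format so that voxel spacing and orientation are preserved; that I/O is omitted here.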

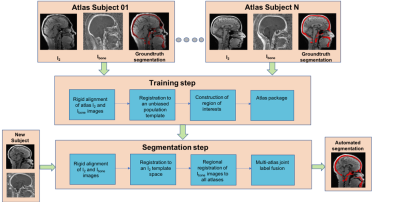

An automated multi-atlas segmentation pipeline [7] [Figure 2] was used to segment the skull in the Ibone image. The pipeline consists of two steps: a training step and a segmentation step. The training step produces a dataset called an ‘atlas package’. Each pair of I2 and Ibone images, together forming a set of atlases, is an input to the training step, which consists of group-wise deformable registration of all I2 images to an unbiased population template [8], followed by pairwise registration between all images in the template space. The segmentation step automatically segments the skull of a new subject using the atlas package created in the training step: image I2 is registered to the unbiased population template contained in the atlas package using greedy deformable registration, and a consensus multi-atlas segmentation of the new subject’s weighted scan is then computed using joint label fusion (JLF) [9].

Results
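To make the label fusion idea in the Methods concrete, the sketch below shows two deliberately simplified stand-ins for JLF: plain majority voting and a per-voxel similarity-weighted vote. Neither is JLF itself, which additionally accounts for correlated errors between atlases. Both functions assume the atlas images and their binary skull labels have already been warped into the target subject's space; all names are hypothetical.

```python
import numpy as np


def majority_vote(warped_labels):
    """Fuse binary atlas labels by strict majority at each voxel."""
    stack = np.stack([np.asarray(lab, dtype=bool) for lab in warped_labels])
    votes = stack.sum(axis=0)
    return votes * 2 > stack.shape[0]


def weighted_vote(warped_labels, warped_images, target, eps=1e-6):
    """Fuse binary atlas labels with per-voxel intensity-similarity weights.

    Each atlas is weighted by the inverse squared difference between its
    warped image and the target image, so atlases that locally resemble the
    target contribute more. This is a simplification, not JLF.
    """
    labels = np.stack([np.asarray(lab, dtype=np.float64) for lab in warped_labels])
    images = np.stack([np.asarray(img, dtype=np.float64) for img in warped_images])
    weights = 1.0 / ((images - np.asarray(target, dtype=np.float64)) ** 2 + eps)
    prob = (weights * labels).sum(axis=0) / weights.sum(axis=0)
    return prob > 0.5
```

The weighted variant shows why fusion can outperform any single atlas: an atlas that registers poorly in one region is down-weighted there without being discarded elsewhere.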

We removed one subject from the initial atlas-building stage because of image acquisition artifacts and tested the method in a ten-fold cross-validation setting, in which each atlas package was built from the images of ten individuals and then used to segment the images of the other ten individuals. We computed several volume and distance metrics between the ground-truth segmentation and the corresponding automated segmentation for all 20 images. The Dice similarity coefficient was 77.47% ± 3.52%, the 95th percentile Hausdorff distance was 2.4178 ± 0.4672 mm, and the average symmetric surface distance was 0.9626 ± 0.1453 mm. Segmenting an image took around ten minutes once the atlas had been built. We excluded one subject from the tabulation of the results because of mis-registration of CT to MR space for that subject.

Discussion
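For reference, the three metrics reported in the Results can be computed along the following lines with NumPy and SciPy. This is a minimal sketch, not the evaluation code used in the study: definitions of the 95th percentile Hausdorff distance vary between tools, and here it is taken as the larger of the two directed 95th percentiles. The function names are hypothetical, both masks are assumed to be non-empty, and `spacing` is the voxel size in mm.

```python
import numpy as np
from scipy import ndimage


def dice(a, b):
    """Dice similarity coefficient between two binary masks."""
    a, b = np.asarray(a, dtype=bool), np.asarray(b, dtype=bool)
    denom = a.sum() + b.sum()
    return 2.0 * np.logical_and(a, b).sum() / denom if denom else 1.0


def _surface(mask):
    """Voxels of the mask that touch the background (or the array border)."""
    mask = np.asarray(mask, dtype=bool)
    return mask & ~ndimage.binary_erosion(mask)


def _directed_distances(a, b, spacing):
    """Distance from every surface voxel of a to the nearest surface voxel of b."""
    surf_a, surf_b = _surface(a), _surface(b)
    # The EDT of the complement gives, at each voxel, the distance to surf_b.
    dist_to_b = ndimage.distance_transform_edt(~surf_b, sampling=spacing)
    return dist_to_b[surf_a]


def surface_metrics(a, b, spacing=(1.0, 1.0, 1.0)):
    """Return (HD95, ASSD) in the units of `spacing`."""
    d_ab = _directed_distances(a, b, spacing)
    d_ba = _directed_distances(b, a, spacing)
    hd95 = max(np.percentile(d_ab, 95), np.percentile(d_ba, 95))
    assd = (d_ab.sum() + d_ba.sum()) / (d_ab.size + d_ba.size)
    return float(hd95), float(assd)
```

Because the Dice coefficient is volume-based, a thin structure such as the skull can score comparatively low even when the surface distances are on the order of one voxel, which is why both kinds of metric are reported.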

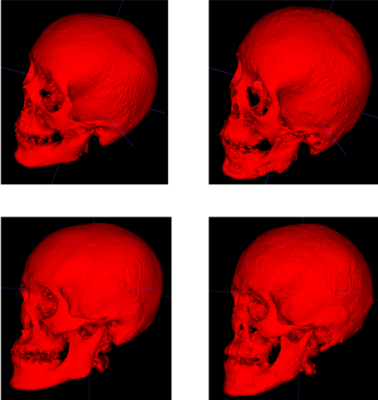

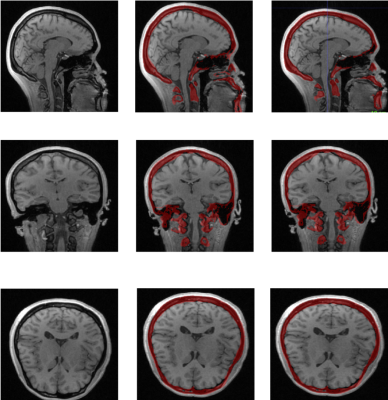

The results suggest that the automated segmentation pipeline could be used to segment the MR images and delineate the skull with good accuracy. Figures 3 and 4 compare the automated segmentations with the ground-truth segmentations. A potential source of error is motion of the subject between the CT and MR scans. This segmentation method would help reduce the time required to segment the skull, which is currently done manually. Without the time restrictions of manual segmentation, the demonstrated automated pipeline, used with bone-selective MRI, could eliminate the need for radiative CT, thereby reducing the risk of malignancy in pediatric craniofacial patients.

Acknowledgements

This work is funded by National Institutes of Health grant R21 DE028417 and by a University of Pennsylvania Center for Human Appearance grant. The work was approved by the Institutional Review Boards of both the University of Pennsylvania and Children’s Hospital of Philadelphia.

References

[1] Pearce, M. S., Salotti, J. A., Little, M. P., et al. Radiation exposure from CT scans in childhood and subsequent risk of leukaemia and brain tumours: a retrospective cohort study. Lancet (London, England) 2012;380:499-505.

[2] Mathews, J. D., Forsythe, A. V., Brady, Z., et al. Cancer risk in 680,000 people exposed to computed tomography scans in childhood or adolescence: data linkage study of 11 million Australians. BMJ (Clinical research ed) 2013;346:f2360.

[3] Lee, H., Zhao, X., Song, H. K., Zhang, R., Bartlett, S. P., Wehrli, F. W. Rapid dual-RF, dual-echo, 3D ultrashort echo time craniofacial imaging: A feasibility study. Magnetic resonance in medicine 2019;81:3007-3016.

[4] Zhang, R., Bartlett, S., Lee, H., Zhao, X., Song, H. K., Wehrli, F. Bone-Selective MRI as a Nonradiative Alternative to CT for Craniofacial Imaging. Proceedings of the 18th Congress of International Society of Craniofacial Surgery; 2019 Sept 16-19; Paris, France; Abstract S15-09.

[5] Yushkevich, P. A., Yang, G., Gerig, G. ITK-SNAP: An interactive tool for semi-automatic segmentation of multi-modality biomedical images. Conference proceedings: Annual International Conference of the IEEE Engineering in Medicine and Biology Society IEEE Engineering in Medicine and Biology Society Annual Conference 2016;2016:3342-3345.

[6] Xie, L., Shinohara, R. T., Ittyerah, R., Kuijf, H. J., Pluta, J. B., Blom, K., Kooistra, M., et al. Automated Multi-Atlas Segmentation of Hippocampal and Extrahippocampal Subregions in Alzheimer’s Disease at 3T and 7T: What Atlas Composition Works Best? Journal of Alzheimer's Disease 2018;63:217-225.

[7] Yushkevich, P. A., Pluta, J. B., Wang, H., et al. Automated volumetry and regional thickness analysis of hippocampal subfields and medial temporal cortical structures in mild cognitive impairment. Human brain mapping 2015;36:258-287.

[8] Joshi, S., Davis, B., Jomier, M., Gerig, G. Unbiased diffeomorphic atlas construction for computational anatomy. Neuroimage 2004;23 Suppl 1:S151-160.

[9] Landman BA, Warfield SK, editors (2012): MICCAI 2012 Workshop on Multi-Atlas Labeling. CreateSpace.

[10] Taha, A. A., Hanbury, A. Metrics for evaluating 3D medical image segmentation: analysis, selection, and tool. BMC Medical Imaging 2015;15:29.

[11] Yushkevich PA, Piven J, Hazlett HC, Smith RG, Ho S, Gee JC, Gerig G. User-guided 3D active contour segmentation of anatomical structures: significantly improved efficiency and reliability. Neuroimage. 2006 Jul 1;31(3):1116-28.

[12] Avants, B. B., Epstein, C. L., Grossman, M., Gee, J. C. Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. Medical image analysis 2008;12:26-41.

[13] Smith, S. M. Fast robust automated brain extraction. Human brain mapping 2002;17:143-155.

[14] Wang H, Das SR, Suh JW, Altinay M, Pluta J, Craige C, Avants B, Yushkevich PA, Alzheimer’s Disease Neuroimaging Initiative (2011): A learning-based wrapper method to correct systematic errors in automatic image segmentation: Consistently improved performance in hippocampus, cortex and brain segmentation. Neuroimage 55:968–985.

Figures