1288

Deep Learning Detection of Penumbral Tissue on Arterial Spin Labeling in Stroke

1University of Southern California, Los Angeles, CA, United States, 2University of California, Los Angeles, Westwood, CA, United States, 3Beijing Tiantan Hospital, Capital Medical University, Beijing, China, 4Stanford University, Stanford, CA, United States

Synopsis

A deep learning (DL)-based algorithm was developed to automatically identify the hypoperfusion lesion and penumbra in ASL images of arterial ischemic stroke (AIS) patients. A total of 167 3D pCASL datasets from 137 AIS patients scanned on Siemens MR systems were used for training, with concurrently acquired DSC MRI providing the labels. The DL model achieved a voxel-wise area under the curve (AUC) of 0.958 and 92% accuracy in retrospectively determining subject-level eligibility for endovascular treatment. Without any fine-tuning of parameters, the DL model was also validated on 12 GE pCASL datasets, again with 92% accuracy.

Introduction

Arterial spin labeling (ASL) MRI techniques provide cerebral blood flow (CBF) measures without the use of a contrast agent. ASL has provided results largely consistent with DSC perfusion MRI in delineating hypoperfused regions in arterial ischemic stroke (AIS)1, 2. However, the precise delineation of the hypoperfusion lesion and penumbra in ASL images remains challenging because of low SNR and delayed arterial transit. Deep learning (DL), an advanced machine learning (ML) method, captures the hierarchical features of the input image automatically and can identify, classify, and quantify patterns in medical images3, 4. In this study, we developed and evaluated a DL-based algorithm to automatically identify the hypoperfusion lesion and penumbra in ASL images, using the lesions from DSC MRI as supervision.

Methods

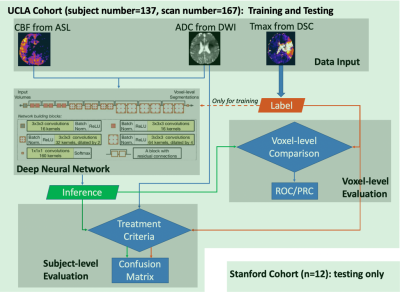

The flowchart of the DL algorithm deployment is shown in Figure 1, including modules of data input, DL model architecture, voxel-level and subject-level evaluation. The following describes each step in detail.

1. Data Acquisition and Processing

The study included MRI data from two AIS cohorts: 167 image sets from 137 patients scanned on Siemens 1.5T Avanto or 3T Tim Trio systems at UCLA (1.5T: n=93; 3T: n=74), using 3D GRASE pCASL with a PLD of 2000 ms; and 12 image sets from 12 patients scanned on GE 1.5T and 3.0T SIGNA systems at Stanford University (1.5T: n=1; 3T: n=11), using 3D pCASL with fast spin echo and a stack-of-spirals readout trajectory and a PLD of 2000 ms. DSC images were acquired using a gradient-echo EPI sequence. For the UCLA cohort, the time-to-maximum of the residue function (Tmax) map was generated with a cSVD method using the commercial software OLEA (La Ciotat, France); for the Stanford cohort, DSC data were analyzed with the RAPID software (iSchemaView Inc, Menlo Park, CA). Tmax labels were generated from the Tmax maps by skull-stripping, brain segmentation, manual masking, and thresholding5-7 at 6 s.
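The thresholding step that turns a Tmax map into a binary label can be illustrated with a minimal sketch. It assumes the map and brain mask are flattened to plain lists of voxels and that the comparison is strict (Tmax > 6 s); the function name `tmax_label` and the toy values are hypothetical, and the authors' actual pipeline (skull-stripping, segmentation, manual masking) is not reproduced here.

```python
def tmax_label(tmax_seconds, brain_mask, threshold_s=6.0):
    """Binarize a flattened Tmax map: 1 where Tmax exceeds the threshold inside the brain.

    Assumption: a strict '>' comparison at 6 s; the abstract only says
    'thresholding at 6 s'.
    """
    if len(tmax_seconds) != len(brain_mask):
        raise ValueError("Tmax map and brain mask must have the same number of voxels")
    return [1 if inside and t > threshold_s else 0
            for t, inside in zip(tmax_seconds, brain_mask)]


# Toy example with six voxels (seconds); the last two lie outside the brain mask.
tmax = [2.0, 6.0, 6.5, 10.2, 12.0, 0.0]
mask = [1, 1, 1, 1, 0, 0]
label = tmax_label(tmax, mask)
print(label)  # [0, 0, 1, 1, 0, 0]
```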

2. Network and Training

The HighRes3Dnet8 with 20 layers and residual connections was trained on 2 Nvidia GeForce GTX 1080 Ti GPUs via NiftyNet9. CBF and ADC images were used as input, and the Tmax label image served as the supervision. Volumes of 48×48×48 voxels (batch size = 4) were randomly extracted from the 3D preprocessed images for training. Volume-level augmentation was employed, including rotation and random spatial rescaling. Training was performed for 70,000 iterations with Dice loss and the Adam optimizer (learning rate = 0.0001, β1 = 0.9, β2 = 0.999). Ten-fold cross-validation was used so that inference performance could be evaluated on the whole dataset.
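To make the training objective concrete, here is a minimal sketch of a generic soft Dice loss for a single foreground class, written over flattened voxel lists. It is an illustration only: the exact formulation used by NiftyNet (for example, per-class averaging or smoothing terms) may differ, and the name `soft_dice_loss` and the `eps` smoothing constant are assumptions.

```python
def soft_dice_loss(probs, target, eps=1e-6):
    """Generic soft Dice loss: 1 - 2*|P.G| / (|P| + |G|).

    probs  : predicted foreground probabilities in [0, 1], one per voxel
    target : binary ground-truth label (0 or 1), one per voxel
    eps    : small constant (an assumption) to avoid division by zero
    """
    intersection = sum(p * t for p, t in zip(probs, target))
    denominator = sum(probs) + sum(target)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)


# A perfect prediction gives a loss of 0; a fully disjoint one gives a loss near 1.
print(soft_dice_loss([1.0, 0.0, 0.0], [1, 0, 0]))
print(soft_dice_loss([0.0, 1.0, 0.0], [1, 0, 0]))
```

Because the loss depends on overlap rather than per-voxel accuracy, it is less dominated by the large number of background voxels than a plain cross-entropy would be, which is one common reason for choosing it in lesion segmentation.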

3. Machine Learning Models and Training

For comparison, six commonly used ML classifiers were trained on the same UCLA cohort, including linear regression classifier, ridge regression classifier, kernel ridge regression classifier, neural network classifier, Support Vector Machine (SVM) with Radial Basis Function (RBF) and random forest classifier10. The same 10-fold cross-validation scheme as used in the training of the DL model was applied.
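The 10-fold scheme shared by the DL and ML models can be sketched as a simple partition of dataset indices, so that every image set is held out exactly once. The abstract does not state whether folds were drawn per image set or per patient; this sketch splits by image-set index, and the function name `k_fold_indices` and the fixed seed are hypothetical.

```python
import random


def k_fold_indices(n_items, k=10, seed=0):
    """Shuffle item indices and deal them into k roughly equal folds."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


folds = k_fold_indices(167, k=10)  # 167 image sets in the UCLA cohort

for held_out, test_idx in enumerate(folds):
    # All indices from the other nine folds form the training set.
    train_idx = [i for f, fold in enumerate(folds) if f != held_out for i in fold]
    # A model would be trained on train_idx and run in inference on test_idx here.
    assert not set(train_idx) & set(test_idx)
```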

4. Model Performance Assessment

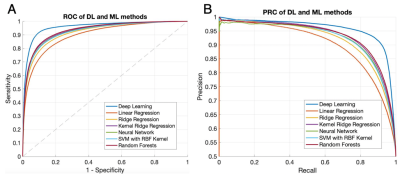

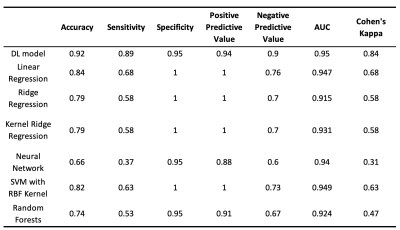

The DL model performance was evaluated on the subset of the UCLA cohort who met the inclusion criteria for the DEFUSE 3 trial5. For voxel-level evaluation, the group-average Dice coefficient between the inference and the Tmax label was calculated as the mean of the Dice coefficients of all subjects. Receiver operating characteristic (ROC) curves and precision-recall (PR) curves were also calculated. Subject-level performance was assessed using the perfusion/diffusion mismatch criteria of the DEFUSE 3 trial5. Confusion matrices, sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV), total accuracy, and Cohen’s kappa coefficient were calculated11 for the DL model and each of the 6 ML models.
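The subject-level decision can be sketched as a rule applied to lesion volumes. The thresholds below (core volume < 70 mL, mismatch ratio ≥ 1.8, mismatch volume ≥ 15 mL) are the imaging criteria published for the DEFUSE 3 trial and are not restated in this abstract, so they should be read as an assumption about what was applied; the function names are hypothetical, as is the premise that the core volume comes from diffusion imaging and the hypoperfusion volume from the predicted or Tmax-derived lesion.

```python
def lesion_volume_ml(n_voxels, voxel_size_mm):
    """Convert a voxel count to millilitres given voxel dimensions in mm."""
    dx, dy, dz = voxel_size_mm
    return n_voxels * dx * dy * dz / 1000.0  # 1 mL = 1000 mm^3


def defuse3_imaging_eligible(core_ml, hypoperfusion_ml,
                             max_core_ml=70.0, min_ratio=1.8, min_mismatch_ml=15.0):
    """Apply perfusion/diffusion mismatch criteria (thresholds are assumptions)."""
    mismatch_ml = hypoperfusion_ml - core_ml
    return (core_ml < max_core_ml
            # Ratio test written as a product to avoid dividing by a zero core volume.
            and hypoperfusion_ml >= min_ratio * core_ml
            and mismatch_ml >= min_mismatch_ml)


print(lesion_volume_ml(5000, (2.0, 2.0, 2.0)))   # 40.0
print(defuse3_imaging_eligible(20.0, 80.0))      # True
print(defuse3_imaging_eligible(80.0, 200.0))     # False: core too large
print(defuse3_imaging_eligible(30.0, 40.0))      # False: mismatch ratio below 1.8
```

Comparing the decision from the predicted lesion with the decision from the Tmax label, subject by subject, yields the confusion matrix from which the subject-level metrics are computed.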

Results and Discussion

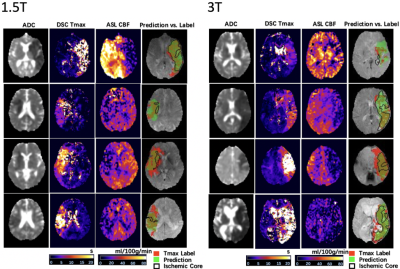

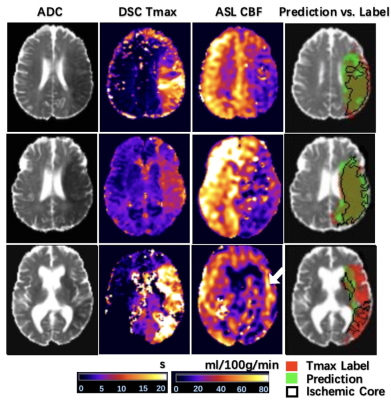

Figure 2 shows 4 representative cases at 1.5T and at 3T from the UCLA cohort, respectively. Only the second case of 1.5T was misclassified. Overall, the network could identify the perfusion lesion defined by Tmax images, although there were discrepancies between the lesion volumes of ASL and DSC MRI. The group average Dice coefficient was 0.47±0.23. The ROC and PR curves showed that our DL model achieved significantly better performance than the traditional ML methods (p<0.001). The AUCs of the ROC and PR curves for our DL model were 0.958 (Figure 3A) and 0.957 (Figure 3B), respectively. For endovascular treatment eligibility, Figure 4 shows that the accuracy, sensitivity, specificity, PPV, NPV, and Cohen’s kappa coefficient of our DL model were consistently superior to those of the ML models. The overall accuracy was 0.92 (95% CI: [0.79, 0.98]).

Our pretrained DL models were tested on the 12 ASL datasets of the Stanford cohort, without any fine-tuning of parameters. Three cases are shown in Figure 5. The average Dice coefficient was 0.43±0.25. Voxel-level evaluation showed that the AUCs of the ROC and PR curves were 0.942 and 0.931, respectively. Subject-level evaluation yielded an accuracy of 0.92 (95% CI: [0.62, 0.99]), with sensitivity, specificity, PPV, and NPV of 0.75, 1.00, 1.00 and 0.89, respectively.
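The subject-level figures for the Stanford cohort can be checked with a short worked example. The confusion matrix below (3 true positives, 0 false positives, 1 false negative, 8 true negatives) is not given in the abstract; it is inferred here as the 2×2 table with 12 subjects that reproduces the reported sensitivity, specificity, PPV, and NPV. The kappa value it implies is likewise derived, not reported. The function name `binary_metrics` is hypothetical.

```python
def binary_metrics(tp, fp, fn, tn):
    """Standard metrics from a 2x2 confusion matrix (no guard for empty rows/columns)."""
    n = tp + fp + fn + tn
    accuracy = (tp + tn) / n
    # Chance agreement for Cohen's kappa: product of marginal rates, per class.
    p_yes = ((tp + fp) / n) * ((tp + fn) / n)
    p_no = ((fn + tn) / n) * ((fp + tn) / n)
    p_e = p_yes + p_no
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "accuracy": accuracy,
        "kappa": (accuracy - p_e) / (1.0 - p_e),
    }


# Inferred table for the 12 Stanford subjects (an assumption, see lead-in).
stanford = binary_metrics(tp=3, fp=0, fn=1, tn=8)
for name, value in stanford.items():
    print(f"{name}: {value:.2f}")
```

With this table, sensitivity is 3/4 = 0.75, specificity 8/8 = 1.00, PPV 3/3 = 1.00, NPV 8/9 ≈ 0.89, and accuracy 11/12 ≈ 0.92, matching the reported values.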

Conclusion

With a high accuracy of 92% for imaging-based criteria for endovascular treatment in two independent cohorts of AIS patients and superior performance compared to ML methods, the proposed ASL perfusion DL model provides a promising approach for assisting decision-making for endovascular treatment in AIS patients.

Acknowledgements

The authors thank Drs. Yonggang Shi and Ben Duffy for helpful discussions. This work was supported by National Institutes of Health (NIH) grants UH2-NS100614, R01EB028297, R01NS066506.

References

1. Wang DJ, Alger JR, Qiao JX, Gunther M, Pope WB, Saver JL, et al. Multi-delay multi-parametric arterial spin-labeled perfusion mri in acute ischemic stroke - comparison with dynamic susceptibility contrast enhanced perfusion imaging. Neuroimage Clin. 2013;3:1-7

2. Wang DJ, Alger JR, Qiao JX, Hao Q, Hou S, Fiaz R, et al. The value of arterial spin-labeled perfusion imaging in acute ischemic stroke: Comparison with dynamic susceptibility contrast-enhanced mri. Stroke. 2012;43:1018-1024

3. Shen D, Wu G, Suk HI. Deep learning in medical image analysis. Annu Rev Biomed Eng. 2017;19:221-248

4. Zaharchuk G, Gong E, Wintermark M, Rubin D, Langlotz CP. Deep learning in neuroradiology. AJNR Am J Neuroradiol. 2018;39:1776-1784

5. Albers GW, Marks MP, Kemp S, Christensen S, Tsai JP, Ortega-Gutierrez S, et al. Thrombectomy for stroke at 6 to 16 hours with selection by perfusion imaging. N Engl J Med. 2018;378:708-718

6. Olivot JM, Mlynash M, Thijs VN, Kemp S, Lansberg MG, Wechsler L, et al. Optimal tmax threshold for predicting penumbral tissue in acute stroke. Stroke. 2009;40:469-475

7. Davis SM, Donnan GA, Parsons MW, Levi C, Butcher KS, Peeters A, et al. Effects of alteplase beyond 3 h after stroke in the echoplanar imaging thrombolytic evaluation trial (epithet): A placebo-controlled randomised trial. Lancet Neurol. 2008;7:299-309

8. Li W, Wang G, Fidon L, Ourselin S, Cardoso MJ, Vercauteren T. On the compactness, efficiency, and representation of 3d convolutional networks: Brain parcellation as a pretext task. Information Processing in Medical Imaging (IPMI). 2017:348-360

9. Gibson E, Li W, Sudre C, Fidon L, Shakir DI, Wang G, et al. Niftynet: A deep-learning platform for medical imaging. Comput Methods Programs Biomed. 2018;158:113-122

10. McKinley R, Hung F, Wiest R, Liebeskind DS, Scalzo F. A machine learning approach to perfusion imaging with dynamic susceptibility contrast mr. Front Neurol. 2018;9:717

11. Stehman SV. Selecting and interpreting measures of thematic classification accuracy. Remote Sens Environ. 1997;62:77-89

Figures