1249

Classifying Autism Spectrum Disorder Patients from Normal Subjects using a Connectivity-based Graph Convolutional Network

Lebo Wang1, Kaiming Li2, and Xiaoping Hu1,2

1Department of Electrical and Computer Engineering, University of California, Riverside, Riverside, CA, United States, 2Department of Bioengineering, University of California, Riverside, Riverside, CA, United States

Synopsis

Traditional deep learning architectures have yielded only limited performance improvements in fMRI data analysis. Our connectivity-based graph convolutional network models fMRI data as graphs and performs convolutions within connectivity-based neighborhoods. We demonstrate that our approach classifies Autism Spectrum Disorder (ASD) patients from normal subjects substantially more robustly than previously published methods. Extracting spatial features and averaging them across frames are beneficial for reducing variance and improving classification accuracy.

INTRODUCTION

There has been increasing interest in applying deep learning techniques to resting-state functional magnetic resonance imaging (rs-fMRI) data for large-scale analyses, which hold promise for deriving neuroimaging biomarkers that identify subjects with psychiatric disorders. However, traditional deep learning architectures have achieved only limited performance improvements in fMRI data analysis1-3. Previous work applied 3D convolutional neural networks to voxel-wise fMRI data1. However, distant functional connectivity (FC) cannot be readily captured by traditional convolutional neural networks (CNNs) operating on grid images, which treat localized image features (such as edges and corners) in Euclidean space as basic components. In addition, fMRI time courses from single voxels are usually noisy and unreliable. ROI-based fMRI data are therefore preferred, as they decrease computational complexity and reduce noise in the time courses. A multilayer perceptron (MLP) model was applied to ROI-based fMRI data2, and a recurrent neural network (RNN) model was further deployed to model the temporal information across frames3. However, MLPs are highly prone to overfitting during training. In this work, we introduce the connectivity-based graph convolutional network (cGCN) architecture, which operates on a graph representation of ROI-based fMRI data, to classify Autism Spectrum Disorder (ASD) patients from normal subjects.

METHODS

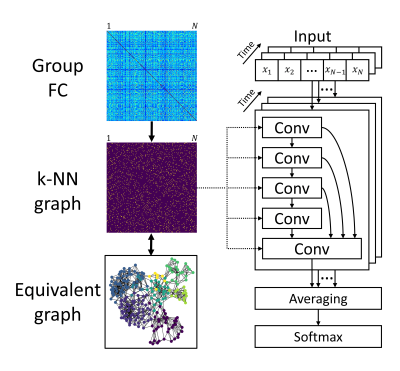

The FC matrix reflects the connectivity between all pairs of regions and can be represented as an undirected graph that captures long-range interactions within the connectomic neighborhood. We extended traditional CNNs to graph data to extract spatial features based on FC. The architecture of cGCN is shown in Fig.1. Starting from the group FC matrix, we constructed a k-nearest neighbor (k-NN) graph that keeps the k most correlated neighbors of each node, where k is a hyperparameter governing the graph structure. After spatial features were extracted from each frame, an averaging layer was applied to obtain a latent representation of the whole fMRI scan, yielding a robust estimate of spatial patterns that was then classified by a softmax layer.

We evaluated the performance of cGCN on the ABIDE (Autism Brain Imaging Data Exchange) dataset4 by classifying ASD patients versus healthy controls. The ABIDE dataset consists of 1035 subjects in total (505 ASD subjects and 530 normal controls) from 17 sites. The fMRI data were preprocessed with bandpass filtering (0.01–0.1 Hz) and without global signal regression. The Craddock 200 atlas5 was used to extract ROI signals. Considering the heterogeneity across imaging sites, we used leave-one-site-out cross-validation to test the classification accuracy of the cGCN architecture.
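The group FC and k-NN graph construction described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `group_fc` and `knn_graph` are hypothetical, the group FC is assumed to be the mean of per-subject Pearson correlation matrices, and "most correlated" is taken to mean the largest signed correlation with self-connections excluded.

```python
import numpy as np


def group_fc(time_series_list):
    """Group FC as the mean of per-subject Pearson correlation matrices.

    Assumption: the abstract only states that group FC was computed from all
    data; averaging subject-level matrices is one plausible choice.
    Each element of time_series_list has shape (n_frames, n_rois).
    """
    mats = [np.corrcoef(ts, rowvar=False) for ts in time_series_list]
    return np.mean(mats, axis=0)


def knn_graph(fc, k):
    """Indices of the k most correlated neighbors of each ROI.

    Returns an integer array of shape (n_rois, k). The diagonal is masked so
    that a node is never selected as its own neighbor (an assumption).
    """
    fc = np.array(fc, dtype=float)      # work on a copy; the input is not modified
    np.fill_diagonal(fc, -np.inf)       # exclude self-connections
    # Sorting the negated matrix ranks neighbors by descending correlation.
    return np.argsort(-fc, axis=1)[:, :k]


# Toy usage: 4 random "subjects", 120 frames, 200 ROIs (Craddock 200 atlas size).
rng = np.random.default_rng(0)
subjects = [rng.standard_normal((120, 200)) for _ in range(4)]
neighbors = knn_graph(group_fc(subjects), k=3)
print(neighbors.shape)  # (200, 3)
```

Because the same neighbor index array would be reused by every convolutional layer, changing k alters the neighborhood of all layers at once.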

RESULTS

The classification accuracy of cGCN was obtained for data from each site with a range of k values (3, 5, 10 and 20). As shown in Fig.2(a), the highest accuracy averaged across sites was 97.0%, obtained with k=3; with this setting, 14 of the 17 sites achieved their best performance among all k values. For every k value, classification accuracy was consistently above 86% at all sites except SBL. Although k=10 gave the lowest accuracy averaged across sites, this accuracy was still much higher than chance level. The relationship between classification accuracy and the length of the fMRI data is presented in Fig.2(b). For clarity, only sites with classification accuracy below 100% are labeled with their site names. Before saturation (below about 200 frames), classification accuracy was proportional to the length of the fMRI data.

DISCUSSION
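The leave-one-site-out protocol and the per-site and site-averaged accuracies reported above can be expressed as a short NumPy loop. This sketch assumes that "on average across sites" means the unweighted mean of per-site accuracies; `evaluate_loso` is a hypothetical helper, and `fit_predict` is a placeholder for training cGCN on the remaining sites and predicting the held-out one.

```python
import numpy as np


def leave_one_site_out(sites):
    """Yield (site, train_idx, test_idx), holding out one imaging site at a time."""
    sites = np.asarray(sites)
    for site in np.unique(sites):
        yield str(site), np.flatnonzero(sites != site), np.flatnonzero(sites == site)


def evaluate_loso(labels, sites, fit_predict):
    """Return per-site accuracies and their unweighted mean across sites.

    fit_predict(train_idx, test_idx) is a placeholder (hypothetical) that
    trains a model on train_idx and returns predicted labels for test_idx.
    """
    labels = np.asarray(labels)
    per_site = {}
    for site, train_idx, test_idx in leave_one_site_out(sites):
        pred = np.asarray(fit_predict(train_idx, test_idx))
        per_site[site] = float(np.mean(pred == labels[test_idx]))
    return per_site, float(np.mean(list(per_site.values())))


# Toy usage with a majority-class stand-in for cGCN (1 = ASD, 0 = control).
labels = np.array([1, 0, 1, 1, 0, 0])
sites = np.array(["A", "A", "B", "B", "C", "C"])


def majority_baseline(train_idx, test_idx):
    majority = int(labels[train_idx].mean() > 0.5)
    return np.full(len(test_idx), majority)


per_site, mean_acc = evaluate_loso(labels, sites, majority_baseline)
print(per_site, mean_acc)
```

Sweeping k (3, 5, 10 and 20) amounts to repeating this loop once per k value, each time with the corresponding k-NN graph.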

Group FC can reflect the underlying FC pattern robustly. The k-NN graph established a connectivity-based neighborhood for each node, which defined the convolutional neighbors for the convolutional layers. Increasing the number of convolutional neighbors did not always improve performance, in contrast to what is typically expected with traditional CNNs. One possible reason is that convolutions over a large number of neighbors may fail to generate features that capture local FC and generalize well. The observed proportional relationship between classification accuracy and the length of the fMRI data indicates that averaging spatial features across frames is useful for reducing variance. Compared with traditional deep learning approaches to ASD classification, cGCN substantially outperformed the 3D CNN model (70.5%)1, the RNN model (70.1%)3 and the MLP model (70%)2.

CONCLUSION
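The variance-reduction argument can be illustrated with a toy simulation. It idealizes each frame's latent feature as a fixed subject-level value plus independent Gaussian noise (real rs-fMRI frames are temporally autocorrelated, so this is an optimistic assumption); under that assumption, the standard deviation of the frame-averaged feature falls as 1/sqrt(T) for T frames. The function `averaged_feature_std` and its parameters are hypothetical.

```python
import numpy as np


def averaged_feature_std(n_frames, noise_std=1.0, n_trials=20000, seed=0):
    """Empirical std of a frame-averaged feature under i.i.d. Gaussian frame noise.

    Each trial draws n_frames noisy copies of a fixed (hypothetical) latent
    value and averages them, mimicking an averaging layer across frames.
    """
    rng = np.random.default_rng(seed)
    true_value = 0.3  # hypothetical subject-level latent feature
    frames = true_value + noise_std * rng.standard_normal((n_trials, n_frames))
    return float(frames.mean(axis=1).std())


for t in (1, 25, 100, 200):
    # Empirical std next to the theoretical 1/sqrt(T) prediction.
    print(t, round(averaged_feature_std(t), 3), round(1 / np.sqrt(t), 3))
```

In this idealized model, the gain from each additional frame shrinks as T grows, which is consistent with (though not proof of) the saturation observed at around 200 frames.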

We introduced a connectivity-based graph convolutional network for classifying ASD patients versus controls with rs-fMRI data. Rather than performing convolutions on rectilinear image grids, our architecture models the fMRI data as graphs and performs convolutions within connectivity-based neighborhoods. Our results indicate that cGCN is effective in achieving accurate classification of ASD patients. We also found that extracting spatial features from each frame and averaging them across frames were beneficial for reducing variance and improving classification accuracy.

Acknowledgements

Thanks to Alexandra Reardon for proofreading.

References

1. Zhao Y, Ge F, Zhang S, et al. 3D Deep Convolutional Neural Network Revealed the Value of Brain Network Overlap in Differentiating Autism Spectrum Disorder from Healthy Controls. International Conference on Medical Image Computing and Computer-Assisted Intervention. 2018. 172-180.

2. Heinsfeld A S, Franco A R, Craddock R C, et al. Identification of Autism Spectrum Disorder Using Deep Learning and the ABIDE Dataset. NeuroImage: Clinical. 2018. 17: 16-23.

3. Dvornek N C, Ventola P, Pelphrey K A, et al. Identifying Autism from Resting-State fMRI Using Long Short-Term Memory Networks. International Workshop on Machine Learning in Medical Imaging. 2017. 362-370.

4. Di Martino A, Yan C-G, Li Q, et al. The Autism Brain Imaging Data Exchange: Towards a Large-Scale Evaluation of the Intrinsic Brain Architecture in Autism. Molecular Psychiatry. 2014. 19: 659.

5. Craddock R C, James G A, Holtzheimer P E III, et al. A Whole Brain fMRI Atlas Generated via Spatially Constrained Spectral Clustering. Human Brain Mapping. 2012. 33: 1914-1928.

Figures

Fig.1. Overview of our cGCN architecture. The group FC based on all data was obtained for a stable estimation of FC. A k-NN graph was then built to keep the top-correlated neighborhood of each node. The k-NN graph defined the convolutional neighbors for all convolutional layers. Spatial features were extracted by stacking multiple convolutional layers with skip-connections. The latent representations were then averaged across frames, followed by a softmax layer for the final classification.
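The pipeline in Fig.1 can be sketched as a NumPy forward pass for a single subject. The abstract does not specify the convolution operator, so this sketch assumes a simple mean aggregation over the k connectivity-based neighbors, h_i' = ReLU(W_self h_i + W_nb · mean over j in N(i) of h_j), and implements the skip-connections as a concatenation of all layer outputs. Weights are random and untrained, and all names (`graph_conv`, `cgcn_forward`, `params`) are hypothetical.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def softmax(z):
    e = np.exp(z - z.max())  # subtract max for numerical stability
    return e / e.sum()


def graph_conv(h, neighbors, w_self, w_nb):
    """Assumed connectivity-based convolution (mean over k-NN neighbors).

    h: (n_frames, n_rois, c_in); neighbors: (n_rois, k) indices from the k-NN graph.
    """
    nb_mean = h[:, neighbors, :].mean(axis=2)  # (n_frames, n_rois, c_in)
    return relu(h @ w_self + nb_mean @ w_nb)   # (n_frames, n_rois, c_out)


def cgcn_forward(x, neighbors, params):
    """x: (n_frames, n_rois) ROI signals of one subject -> class probabilities."""
    h = x[..., None]                  # one input channel per ROI and frame
    skips = []
    for w_self, w_nb in params["convs"]:
        h = graph_conv(h, neighbors, w_self, w_nb)
        skips.append(h)
    h = np.concatenate(skips, axis=-1)          # skip-connections (assumed: concatenation)
    frame_feat = h.reshape(h.shape[0], -1)      # per-frame spatial features
    subject_feat = frame_feat.mean(axis=0)      # averaging layer across frames
    return softmax(subject_feat @ params["w_out"])


# Toy usage with random, untrained weights.
rng = np.random.default_rng(0)
n_frames, n_rois, k, c = 150, 200, 3, 8
# Placeholder ring graph; in practice use the k-NN graph built from group FC.
neighbors = (np.arange(n_rois)[:, None] + np.arange(1, k + 1)) % n_rois
params = {
    "convs": [(0.1 * rng.standard_normal((1, c)), 0.1 * rng.standard_normal((1, c))),
              (0.1 * rng.standard_normal((c, c)), 0.1 * rng.standard_normal((c, c)))],
    "w_out": 0.01 * rng.standard_normal((n_rois * 2 * c, 2)),
}
x = rng.standard_normal((n_frames, n_rois))
probs = cgcn_forward(x, neighbors, params)
print(probs)
```

Because the frame average is taken before the softmax, the prediction in this sketch does not depend on frame order, and duplicating every frame leaves it unchanged.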

Fig.2. (a) Leave-one-site-out cross-validation was used to evaluate the classification performance of cGCN on the different sites of the ABIDE dataset with different k values. The best average classification accuracy of 97.0% was achieved with k=3. (b) The proportional relationship between classification accuracy before saturation (around 200 frames) and the length of the fMRI data. Sites with less than 100% classification accuracy are labeled with site names.