1138

Comparison of tumor autosegmentation techniques from an undersampled dynamic radial bSSFP acquisition on a low-field MR-linac

¹Medical Physics in Radiology, German Cancer Research Center (DKFZ), Heidelberg, Germany, ²Department of Physics and Astronomy, Heidelberg University, Heidelberg, Germany, ³Department of Radiation Oncology, University Hospital of Heidelberg, Heidelberg, Germany

Synopsis

MR-linac hybrid systems can dynamically image a tumor during radiotherapy to enable more precise delivery of the radiation dose. This requires motion tracking of the target, which is currently performed by deformable image registration on Cartesian bSSFP images. This study compares three tracking methods (convolutional neural network, multi-template matching, and deformable image registration) for tracking lung tumors in Cartesian images; the performance of the three methods did not differ significantly. When undersampled radial images were used to accelerate image acquisition in a healthy volunteer, the convolutional neural network showed only a minimal decrease in tracking accuracy.

Introduction

New hybrid MRI-linear accelerator (MR-linac) systems allow, for the first time, real-time imaging of tumors with high soft-tissue contrast during irradiation, which can increase treatment accuracy[1]. Healthy tissue is spared from radiation either by irradiating only while the tumor is within a predefined target volume or by updating the multileaf-collimator shape as the tumor moves. Both approaches require fast imaging to avoid delays between target detection and beam delivery. In clinical use, the ViewRay system used here acquires images with a Cartesian sampling scheme and tracks the tumor by deformable image registration. Here we compare three tumor tracking methods on clinical Cartesian images as well as on undersampled radial images with high temporal resolution.

Methods

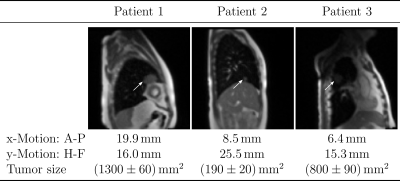

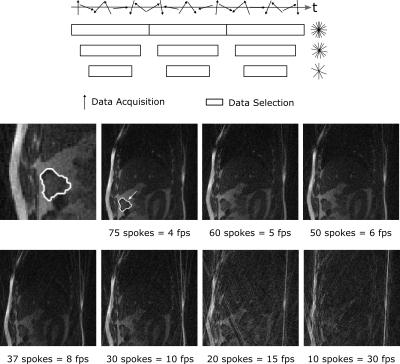

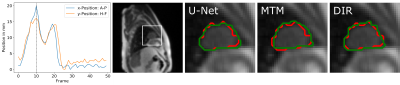

Imaging was performed on a 0.35 T MRIdian Linac system (ViewRay Inc., Cleveland, Ohio, USA) using two 6-channel surface receive coils. A bSSFP sequence was used to maximize the signal-to-noise ratio, resulting in T2/T1-weighted contrast[2]. Patient data (Fig.1) were acquired with Cartesian sampling at 4 frames per second (fps). Imaging parameters: voxel size = 3.5×3.5×7 mm³; FOV = 350×350 mm²; TR/TE = 2.10 ms/0.91 ms. For analysis, the images were resampled from 100 to 128 pixels, giving an in-plane pixel size of 2.7×2.7 mm². Manual contouring was performed twice for an inter-observer comparison.

Images of a healthy volunteer (Fig.2) were acquired with radial tiny golden angle sampling (ψ10 ≈ 16.95°)[3]. Imaging parameters: voxel size = 2.3×2.3×5 mm³; FOV = 300×300 mm²; TR/TE = 3.34 ms/1.67 ms. Videos with seven different reconstruction windows, ranging from 75 spokes (4 fps) down to 10 spokes (30 fps), were analyzed. Radial images were reconstructed using a non-uniform fast Fourier transform[4]. A cross-section of the intestine was used as the tracking target (Fig.2, middle left), because it has a typical tumor size and, similar to lung tumors, high contrast to the surrounding tissue. Contours were manually defined on the images reconstructed from 75 spokes.
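The radial timing above can be checked with a few lines of arithmetic. The sketch below assumes the tiny golden angle definition ψN = 180°/(τ + N − 1) with the golden ratio τ = (1 + √5)/2 from the cited tiny-golden-angle work[3], and assumes that one frame's duration is simply the number of spokes times TR (no sliding-window overlap or dead time). The function names are hypothetical.

```python
import math

# Golden ratio tau = (1 + sqrt(5)) / 2
TAU = (1 + math.sqrt(5)) / 2


def tiny_golden_angle_deg(n: int) -> float:
    """Tiny golden angle psi_N = 180 deg / (tau + N - 1); N = 1 gives the classic golden angle."""
    return 180.0 / (TAU + n - 1)


def spoke_angles_deg(n_spokes: int, n: int = 10) -> list[float]:
    """Azimuthal angle of each successive spoke, incremented by psi_N and wrapped to [0, 180) degrees."""
    psi = tiny_golden_angle_deg(n)
    return [(k * psi) % 180.0 for k in range(n_spokes)]


def frames_per_second(spokes_per_frame: int, tr_ms: float = 3.34) -> float:
    """Frame rate if each frame is reconstructed from `spokes_per_frame` spokes acquired one per TR."""
    return 1000.0 / (spokes_per_frame * tr_ms)


if __name__ == "__main__":
    print(f"psi_10 = {tiny_golden_angle_deg(10):.4f} deg")
    for spokes in (75, 10):
        print(f"{spokes} spokes -> {frames_per_second(spokes):.1f} fps")
```

With TR = 3.34 ms, 75 spokes take about 250 ms (≈4 fps) and 10 spokes about 33 ms (≈30 fps), consistent with the reconstruction windows listed above.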

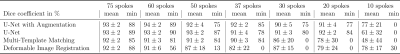

For all videos, manual contours were created in 50 images. The first 10 images were used as reference frames representing different breathing states. The remaining 40 images were used to evaluate tracking performance with the Dice coefficient[5], which measures the overlap of two areas A and B and ranges from 0 (no overlap) to 1 (perfect overlap): Dice = 2|A∩B| / (|A| + |B|).
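As an illustration of the evaluation metric, the following sketch computes the Dice coefficient for two binary masks and the mean and minimum over a set of test frames. It assumes contours are rasterized to boolean masks of equal shape; the convention that two empty masks score 1.0 is an assumption not stated in the abstract, and the function names are hypothetical.

```python
import numpy as np


def dice(mask_a: np.ndarray, mask_b: np.ndarray) -> float:
    """Dice = 2|A ∩ B| / (|A| + |B|) for two binary masks of equal shape."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    total = a.sum() + b.sum()
    if total == 0:
        return 1.0  # assumed convention: two empty masks count as identical
    return float(2.0 * np.logical_and(a, b).sum() / total)


def summarize(tracked: list, manual: list) -> tuple[float, float]:
    """Mean and minimum Dice over paired (tracked, manual) test frames."""
    scores = [dice(t, m) for t, m in zip(tracked, manual)]
    return float(np.mean(scores)), float(np.min(scores))
```

For example, two 4×4 squares shifted by 2 pixels share 8 pixels, giving Dice = 2·8/(16 + 16) = 0.5.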

The following three auto-contouring methods were applied for motion tracking:

Convolutional neural network with a U-Net[6] architecture: The 10 reference images served as training data and were split into training and validation sets within a 5-fold cross-validation. Network parameters: batch size = 1, epochs = 150, initial learning rate = 10⁻⁵, reduced by a factor of 10 whenever the cross-entropy loss, optimized with the Adam optimizer, had not improved during the preceding 3 epochs. Image augmentation to generate more diverse training images used the following parameters: rotation range = 5°, height shift range = 10%, width shift range = 5%, shear range = 0.2°.
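The training schedule described above can be sketched framework-independently. The abstract does not name the deep-learning framework, so this is plain Python under stated assumptions: contiguous (unshuffled) folds, and a plateau rule that divides the learning rate by 10 once the loss has failed to improve for 3 consecutive epochs. The function and class names are hypothetical.

```python
import math


def k_fold_splits(n_images: int = 10, k: int = 5):
    """Yield (train_idx, val_idx) for k contiguous folds; assumes n_images is divisible by k."""
    fold = n_images // k
    for f in range(k):
        val = list(range(f * fold, (f + 1) * fold))
        train = [i for i in range(n_images) if i not in val]
        yield train, val


class ReduceLROnPlateauSketch:
    """Divide the learning rate by `factor` after `patience` epochs without loss improvement."""

    def __init__(self, lr: float = 1e-5, factor: float = 10.0, patience: int = 3):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.best = math.inf
        self.wait = 0  # epochs since the last improvement

    def step(self, loss: float) -> float:
        """Update with this epoch's loss and return the learning rate for the next epoch."""
        if loss < self.best:
            self.best = loss
            self.wait = 0
        else:
            self.wait += 1
            if self.wait >= self.patience:
                self.lr /= self.factor
                self.wait = 0
        return self.lr
```

With 10 images and 5 folds, each fold trains on 8 images and validates on 2. If Keras were used, comparable behaviour exists as `tf.keras.callbacks.ReduceLROnPlateau(factor=0.1, patience=3)`, and the augmentation settings correspond to `ImageDataGenerator(rotation_range=5, height_shift_range=0.1, width_shift_range=0.05, shear_range=0.2)`; whether the authors used Keras is not stated.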

Multi-template matching[7] (MTM): Ten equally sized templates of the tracked object were cut out of the reference frames. In every new video frame, the normalized cross-correlation coefficient was used to find the position of the best match for the templates, and the contour of the reference frame whose template achieved the highest correlation was used as the contour of the new image.
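A minimal NumPy sketch of the matching step, assuming zero-mean normalized cross-correlation as the score and assuming that the winning reference contour is rigidly translated by the offset between the template's cut-out position and the matched position (the abstract does not specify how the contour is placed). All names, including `template_origin`, are hypothetical.

```python
import numpy as np


def ncc_map(image: np.ndarray, template: np.ndarray) -> np.ndarray:
    """Zero-mean normalized cross-correlation of `template` at every valid position in `image`."""
    th, tw = template.shape
    t = template - template.mean()
    t_norm = np.sqrt((t ** 2).sum())
    out_h, out_w = image.shape[0] - th + 1, image.shape[1] - tw + 1
    scores = np.full((out_h, out_w), -1.0)
    for y in range(out_h):
        for x in range(out_w):
            p = image[y:y + th, x:x + tw]
            p = p - p.mean()
            denom = np.sqrt((p ** 2).sum()) * t_norm
            if denom > 0:  # skip flat patches, which have undefined correlation
                scores[y, x] = (p * t).sum() / denom
    return scores


def multi_template_match(frame, templates):
    """Return (best_score, template_index, (row, col)) of the best match over all templates."""
    best = (-np.inf, -1, (0, 0))
    for i, tmpl in enumerate(templates):
        scores = ncc_map(frame, tmpl)
        r, c = np.unravel_index(np.argmax(scores), scores.shape)
        if scores[r, c] > best[0]:
            best = (float(scores[r, c]), i, (int(r), int(c)))
    return best


def transfer_contour(contour_rc, template_origin, match_pos):
    """Shift reference-contour points (row, col) by the offset from template cut-out to match."""
    shift = np.subtract(match_pos, template_origin)
    return np.asarray(contour_rc) + shift
```

The brute-force loops keep the example self-contained; OpenCV's `cv2.matchTemplate` with `cv2.TM_CCOEFF_NORMED` computes the same zero-mean normalized score far faster.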

Deformable image registration[8] (DIR): First, the reference image most similar to the current frame was selected using cross-correlation. Second, a non-rigid deformation map between these two images was calculated. The contour in the new image was obtained by applying this deformation to the contour of the chosen reference image.
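The two DIR steps that surround the registration itself can be sketched as follows. Computing the non-rigid deformation map is not shown: the displacement fields `dy`, `dx` are assumed to come from a registration tool, for example the symmetric normalization (SyN) method of the cited work[8] as implemented in ANTs. The sketch also assumes the contour is represented as a binary mask and uses a backward-mapping convention with nearest-neighbour sampling; the function names are hypothetical.

```python
import numpy as np


def ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Global zero-mean normalized cross-correlation of two equally sized images."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a ** 2).sum() * (b ** 2).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def select_reference(frame, references) -> int:
    """Step 1: index of the reference image most similar to the current frame."""
    return int(np.argmax([ncc(frame, ref) for ref in references]))


def warp_mask(mask, dy, dx):
    """Step 3: backward-warp a binary mask, out[y, x] = mask[y + dy, x + dx] (nearest neighbour).

    `dy` and `dx` may be scalars or per-pixel arrays with the mask's shape.
    """
    h, w = mask.shape
    yy, xx = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    src_y = np.clip(np.rint(yy + dy).astype(int), 0, h - 1)
    src_x = np.clip(np.rint(xx + dx).astype(int), 0, w - 1)
    return mask[src_y, src_x]
```

A uniform field dy = −2 moves the mask two rows down, which is an easy sanity check before plugging in a real registration result.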

Computation times per frame were 2.8 ms (MTM), 55 ms (U-Net) and 7.9 s (DIR). Training the U-Net took 30 min on a 64-bit Windows 10 computer with an Intel Core i7-6700 CPU and 32 GB RAM. The code was not optimized for speed.
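To relate these computation times to the imaging frame rates, a simple budget check compares each per-frame time with the frame interval 1/fps. This is only arithmetic on the reported numbers: it assumes processing must finish within one frame interval and ignores acquisition and reconstruction latency. Since the code was not time-optimized, the result says nothing about the achievable speed. The names are hypothetical.

```python
# Per-frame computation times reported above, in seconds.
COMPUTE_S = {"MTM": 2.8e-3, "U-Net": 55e-3, "DIR": 7.9}


def keeps_up(compute_s: float, fps: float) -> bool:
    """True if per-frame processing finishes within one frame interval (1 / fps seconds)."""
    return compute_s <= 1.0 / fps


if __name__ == "__main__":
    for fps in (4, 30):
        for method, t in COMPUTE_S.items():
            print(f"{fps:>2} fps ({1000 / fps:.1f} ms/frame): {method} keeps up = {keeps_up(t, fps)}")
```

At 4 fps (250 ms per frame) both MTM and the U-Net fit the budget; at 30 fps (about 33 ms per frame) only MTM does, while DIR as implemented exceeds both.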

Results

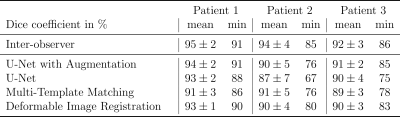

The mean and minimum Dice coefficients for the three tumor patients are listed in the table in Fig.4. The three tracking methods do not differ significantly in tracking precision and accuracy, as the standard deviations of their Dice coefficients overlap with each other and with the inter-observer comparison (Fig.4). Data augmentation slightly, but not significantly, improved the mean and minimum Dice coefficients of the U-Net for all patients.

Results for the radial images of the healthy volunteer are shown in Fig.5. With 75 spokes, all tracking methods perform very similarly. For smaller reconstruction windows, the minimum Dice coefficient drops rapidly below 60 spokes for deformable image registration and below 30 spokes for multi-template matching. The U-Net implementation achieves superior results for all numbers of spokes above 10.

Discussion

In the Cartesian images of lung tumors, all three tracking methods achieve similar results. In the radial images of the healthy volunteer, the U-Net is more robust to undersampling, which introduces streaking artifacts and reduces the signal-to-noise ratio. Data augmentation during U-Net training can improve segmentation by artificially increasing the variability of the training images, as seen for the Cartesian images. For the radial images, the augmentation parameters need further adaptation before an improvement can be shown. Moreover, the undersampling artifacts could be compensated for with iterative reconstructions.

Conclusion

All three evaluated tracking methods are suitable for tumor tracking in Cartesian images for MR-guided radiotherapy. Radial acquisition has the potential to accelerate imaging, with only a small decline in tracking performance when a U-Net is used.

References

1. Wen N, et al. Evaluation of a magnetic resonance guided linear accelerator for stereotactic radiosurgery treatment. Radiother Oncol. 2018;127(3):460-466.

2. Scheffler K, Lehnhardt S. Principles and applications of balanced SSFP techniques. Eur Radiol. 2003;13(11):2409-2418. doi:10.1007/s00330-003-1957-x

3. Wundrak S, Paul J, Ulrici J, et al. Golden ratio sparse MRI using tiny golden angles. Magn Reson Med. 2016;75(6):2372-2378. doi:10.1002/mrm.25831

4. Fessler JA, Sutton BP. Nonuniform fast Fourier transforms using min-max interpolation. IEEE Trans Signal Process. 2003;51(2):560-574. doi:10.1109/TSP.2002.807005

5. Dice LR. Measures of the amount of ecologic association between species. Ecology. 1945;26(3):297-302.

6. Ronneberger O, Fischer P, Brox T. U-Net: Convolutional networks for biomedical image segmentation. Med Image Comput Comput Interv. 2015;9351:234-241. doi:10.1007/978-3-319-24574-4_28

7. Thomas LS, Gehrig J. Multi-Template Matching: a versatile tool for object-localization in microscopy images. bioRxiv. 2019:619338. doi:10.1101/619338

8. Avants BB, Epstein CL, Grossman M, Gee JC. Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. Med Image Anal. 2008;12(1):26-41. doi:10.1016/j.media.2007.06.004

Figures