1027

Deep Learning-based Fetal-Uterine Motion Modeling from Volumetric EPI Time Series

1 Department of Engineering Physics, Tsinghua University, Beijing, China; 2 Computer Science and Artificial Intelligence Laboratory (CSAIL), Massachusetts Institute of Technology, Cambridge, MA, United States; 3 Department of Electrical Engineering and Computer Science, Massachusetts Institute of Technology, Cambridge, MA, United States; 4 Fetal-Neonatal Neuroimaging and Developmental Science Center, Boston Children’s Hospital, Boston, MA, United States; 5 Harvard Medical School, Boston, MA, United States; 6 Institute for Medical Engineering and Science, Massachusetts Institute of Technology, Cambridge, MA, United States

Synopsis

We propose a three-dimensional convolutional neural network, applied to echo planar imaging (EPI) time series of pregnant women, for automatic segmentation of the uterus (placenta excluded) and the fetal body. The segmentation results are used to build a dynamic model of the fetus for retrospective analyses. The 3D dynamic fetal-uterine motion model will provide quantitative information on fetal motion characteristics for diagnostic purposes and may guide future fetal imaging strategies in which adaptive, online slice prescription is used to mitigate motion artifacts.

Introduction

Deep learning-based methods have shown impressive capabilities for MR segmentation [1]. In this study, we developed a deep neural network and applied it to EPI time series acquired in pregnancy for automatic segmentation of the uterus (placenta excluded) and the fetal body. Performance was evaluated against manually labeled EPI volumes. Dynamic 3D models of fetal and uterine motion were built from the consecutive volumetric segmentations.

Method

Multi-slice EPI was used to acquire volumetric MR data (matrix size = 120×120×80, image resolution = 3 mm×3 mm×3 mm, TR = 3.5 s) from pregnant women with fetuses of gestational age between 25 and 35 weeks [2]. The boundaries of the uterus and the fetal body were manually labeled on the raw volumetric images. Placental regions were excluded from the “uterus” boundary, and the uterus was defined as the region available for fetal motion. Manual labeling was performed on 14 volumes, one from each of 14 mothers (out of a dataset of 77 mothers with 200-300 time frames per mother), yielding 1061 manually labeled slices. The labeled volumes (12 for training, 1 for validation, and 1 for testing) formed the dataset for deep learning-based segmentation.

The fetal-uterine motion modeling procedure has two stages: (1) 3D U-Net-based segmentation of the uterus (placenta excluded) and the fetus, and (2) volumetric surface meshing of the fetus for 3D visualization.

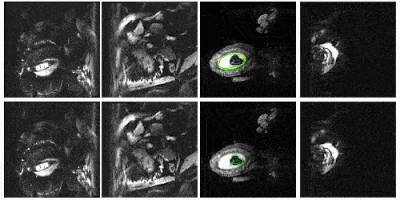

The 3D U-Net was trained on the manually segmented labels to generate volumetric segmentations. The network has an encoder-decoder structure that simultaneously extracts high-dimensional features and produces labels at the original resolution [3,4]. Training ran for 4000 epochs on an NVIDIA TITAN V GPU using the Adam optimizer with a learning rate of 0.0001. The trained model was then used to predict segmentations for the remaining time frames of the series containing the training volumes. Because amniotic fluid around the fetus has distinct image features, the uterine boundary was segmented first, directly from the raw images, and with higher accuracy. Automatic segmentation of the fetal body tended to produce false predictions outside the uterine boundary because maternal tissues resemble the fetal body. To solve this problem, the volumetric uterus mask was used as an ROI mask for the fetal body segmentation, filtering out erroneous predictions outside the uterus.
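The ROI filtering step described above can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function name `apply_uterus_roi`, the boolean mask representation, and the toy array sizes are assumptions made purely for illustration.

```python
import numpy as np


def apply_uterus_roi(fetus_pred: np.ndarray, uterus_mask: np.ndarray) -> np.ndarray:
    """Keep fetal-body predictions only where the uterus mask is set.

    Both inputs are assumed to be binary volumes of identical shape.
    """
    if fetus_pred.shape != uterus_mask.shape:
        raise ValueError("fetus_pred and uterus_mask must have the same shape")
    return np.logical_and(fetus_pred.astype(bool), uterus_mask.astype(bool))


# Toy volumes (far smaller than the 120x120x80 EPI volumes in the text).
uterus = np.zeros((6, 6, 4), dtype=bool)
uterus[1:5, 1:5, :] = True           # hypothetical uterus region

fetus_raw = np.zeros_like(uterus)
fetus_raw[2:4, 2:4, :] = True        # predictions inside the uterus
fetus_raw[0, 0, :] = True            # false positives in maternal tissue

fetus_clean = apply_uterus_roi(fetus_raw, uterus)
print(int(fetus_raw.sum()), int(fetus_clean.sum()))
```

A voxel-wise logical AND is the simplest way to express the constraint that the fetus lies inside the uterus; it removes every prediction outside the mask and leaves predictions inside it unchanged.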

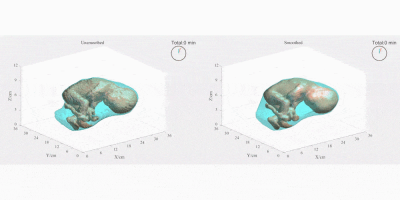

The output of the proposed 3D U-Net is a volumetric binary matrix that indicates the regions of the uterus and the fetal body. However, direct meshing of the segmentation results causes “staircase” artifacts on the volumetric surface model because of the low spatial resolution of the EPI volumes. We therefore refined the models with smoothing. For the uterus, we extracted the alpha shape [5] from the segmentation masks and reconstructed the uterine surface from its boundary facets. For the fetal body, we smoothed the segmented volumetric mask with a 3×3 box convolution kernel and extracted the isosurface from the smoothed regions using MATLAB 2019a. Finally, we obtained smooth surfaces from the input segmentation results and estimated the motion of the uterus and fetal body by linking consecutive frames over time.
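The box smoothing applied to the fetal body mask can be sketched as follows. This is a NumPy illustration rather than the MATLAB code used in the study, and it makes two assumptions that the text does not state: the kernel is taken to be 3×3×3 (a box in all three dimensions), and 0.5 is used as an example isosurface level. The function name `box_smooth_3d` is hypothetical.

```python
import numpy as np


def box_smooth_3d(mask: np.ndarray) -> np.ndarray:
    """Average each voxel with its 3x3x3 neighborhood (zero padding at edges)."""
    padded = np.pad(mask.astype(np.float64), 1, mode="constant")
    nx, ny, nz = mask.shape
    out = np.zeros(mask.shape, dtype=np.float64)
    # Sum the 27 shifted copies of the volume, then normalize.
    for dx in range(3):
        for dy in range(3):
            for dz in range(3):
                out += padded[dx:dx + nx, dy:dy + ny, dz:dz + nz]
    return out / 27.0


# Toy binary mask: a 3x3x3 cube inside a 7x7x7 volume.
mask = np.zeros((7, 7, 7), dtype=bool)
mask[2:5, 2:5, 2:5] = True

smoothed = box_smooth_3d(mask)   # values in [0, 1] instead of {0, 1}
refined = smoothed >= 0.5        # assumed example level for the isosurface
print(smoothed[3, 3, 3], smoothed[2, 2, 2], int(refined.sum()))
```

After smoothing, the mask takes fractional values near its boundary, so an isosurface extracted at an intermediate level cuts through voxels rather than following their faces, which is what reduces the staircase appearance.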

Results and Discussion

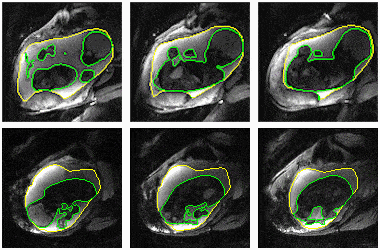

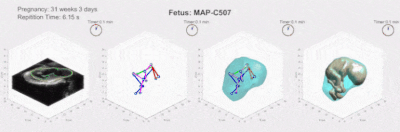

Figure 2 compares the automatic segmentations generated by the 3D U-Net with those outlined by experts across a whole EPI volume at one independent time frame. The deep neural network performs well on the segmentation task throughout the volume. Figure 3 shows the automatically predicted boundaries in 6 single slices through consecutive time frames. The network tracks the boundaries over time, with a computation time of ~1 second per volume. Manual segmentation of consecutive frames is prohibitively time-consuming, requiring more than one hour per frame for a typical technician. The mean Dice coefficient is 96.68% for uterus segmentation and 95.04% for fetal body segmentation. Prediction errors occur with ~5% probability in slices where the boundary between maternal and fetal tissues is indistinct. Larger training datasets are needed to train a more robust model that yields more accurate predictions.

Figure 4 shows the 3D visualization of the dynamic fetal-uterine motion model built from the automatic segmentations. The proposed modeling method smooths the staircase effects that occur with direct meshing and visualizes fetal motion in 3D spatial coordinates. Uterine contractions and fetal displacements caused by maternal respiration are captured in our model. Reconstruction from 3D segmentation results to smooth models took ~0.5 seconds per frame for a complete modeling task including the uterus and the fetal body. Figure 5 shows the progressive modeling steps for the fetus and fetal motion. The skeleton-based model is obtained with a 3D hourglass network [6].
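For reference, the Dice coefficient used to score the segmentations measures the overlap between a predicted mask and a manual mask as twice the number of shared voxels divided by the total number of voxels in both masks. The sketch below is a generic NumPy version, not the evaluation code used in this work; the function name and the toy masks are illustrative assumptions.

```python
import numpy as np


def dice_coefficient(pred, truth) -> float:
    """Dice overlap 2|A∩B| / (|A| + |B|) between two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denom = int(pred.sum()) + int(truth.sum())
    if denom == 0:
        # Both masks empty: treated here as perfect agreement (a convention).
        return 1.0
    overlap = int(np.logical_and(pred, truth).sum())
    return 2.0 * overlap / denom


# Toy example: 8 labeled voxels, 12 predicted voxels, 8 of them shared.
truth = np.zeros((4, 4, 4), dtype=bool)
truth[1:3, 1:3, 1:3] = True
pred = np.zeros_like(truth)
pred[1:3, 1:3, 1:4] = True

score = dice_coefficient(pred, truth)   # 2 * 8 / (12 + 8)
print(f"Dice = {score:.2f}")
```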

Conclusions

Our deep learning-based method was capable of segmenting the regions of interest in real time. 3D fetal-uterine motion models can be reconstructed from the automatic segmentations and provide vivid visualizations of fetal motion in real time. Future work will focus on improving the segmentation accuracy of the neural network, extending the predictions to larger datasets, and tracking fetal motion from the volumetric model so that it can be used in clinical applications.

Acknowledgements

NIH R01 EB017337, U01 HD087211, and R01 HD100009. NVIDIA Corporation.

References

1. Hesamian, M. H., Jia, W., He, X., & Kennedy, P. (2019). Deep Learning Techniques for Medical Image Segmentation: Achievements and Challenges. Journal of digital imaging, 1-15.

2. Luo, J., Turk, E. A., Bibbo, C., Gagoski, B., Roberts, D. J., Vangel, M., ... & Barth, W. H. (2017). In vivo quantification of placental insufficiency by BOLD MRI: a human study. Scientific reports, 7(1), 3713.

3. Ronneberger, O., Fischer, P., & Brox, T. (2015, October). U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention (pp. 234-241). Springer, Cham.

4. Çiçek, Ö., Abdulkadir, A., Lienkamp, S. S., Brox, T., & Ronneberger, O. (2016, October). 3D U-Net: learning dense volumetric segmentation from sparse annotation. In International conference on medical image computing and computer-assisted intervention (pp. 424-432). Springer, Cham.

5. Edelsbrunner, H., Kirkpatrick, D., & Seidel, R. (1983). On the shape of a set of points in the plane. IEEE Transactions on information theory, 29(4), 551-559.

6. Xu, J., Zhang, M., Turk, E. A., Zhang, L., Grant, P. E., Ying, K., ... & Adalsteinsson, E. (2019, October). Fetal Pose Estimation in Volumetric MRI Using a 3D Convolution Neural Network. In International Conference on Medical Image Computing and Computer-Assisted Intervention (pp. 403-410). Springer, Cham.

Figures