1023

StackGen-Net: A Stacked Generalization of 3D Orthogonal Convolutional Neural Networks for Improved Detection of White Matter Hyperintensities

1 Department of Electrical and Computer Engineering, University of Arizona, Tucson, AZ, United States; 2 Department of Medical Imaging, University of Arizona, Tucson, AZ, United States; 3 Siemens Healthcare USA, Tucson, AZ, United States; 4 Department of Surgery, University of Arizona, Tucson, AZ, United States; 5 Department of Biomedical Engineering, University of Arizona, Tucson, AZ, United States

Synopsis

Detection and quantification of white matter hyperintensities (WMH) on T2-FLAIR images can provide valuable information for assessing neurological disease progression. We propose StackGen-Net, a fully automated stacked generalization ensemble of three orthogonal 3D Convolutional Neural Networks (CNNs), to detect WMH on 3D FLAIR images. The three CNNs predict WMH on the axial, sagittal, and coronal orientations, respectively. Their posteriors are then combined using a Meta CNN. StackGen-Net outperforms the individual CNNs in the ensemble, their ensemble combination, and some state-of-the-art deep learning-based models. StackGen-Net can reliably detect and quantify WMH in clinically feasible times, with performance comparable to human inter-observer variability.

Introduction

White matter hyperintensities (WMH) are brain tissues that appear hyperintense on T2-weighted FLAIR images [1]. These WMH are prominent features of demyelination and axonal degeneration in cerebral brain matter [2,3], and are associated with increased risk of stroke or dementia [4]. Accurate and reliable detection and quantification of lesion volumes can provide clinicians with valuable information to assess disease progression. In this work, we propose StackGen-Net, a fully automated stacked generalization ensemble of orthogonal 3D Convolutional Neural Networks (CNNs), to detect and quantify WMH on multi-planar reformatted 3D isotropic FLAIR images. We illustrate that StackGen-Net yields superior performance compared to the individual CNNs in the ensemble, their ensemble combination, and some state-of-the-art deep learning-based models.

Methods

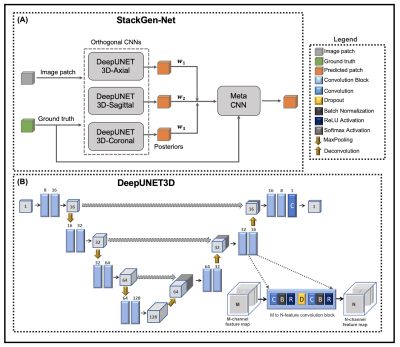

An overview of the proposed architecture is shown in Figure 1A. StackGen-Net consists of three DeepUNET3D CNNs followed by a Meta CNN. The proposed multi-scale DeepUNET3D architecture consists of a series of ‘convolutional blocks’ (shown in Figure 1B), each containing a dropout layer placed between sequences of convolution, batch normalization, and rectified linear unit (ReLU) layers. Each DeepUNET3D is trained on 2.5D patches (64x64x7) extracted from the axial, coronal, or sagittal orientation, respectively. The Meta CNN is trained to learn a weighted combination of the WMH posteriors from the orthogonal CNNs to yield a final WMH posterior probability.

3D T2-FLAIR images (resolution: 1 mm isotropic; matrix size: 270x176x240) from 30 subjects with a history of vascular disease but no clinical history of multiple sclerosis were acquired on a 3T scanner (Siemens Skyra). The study cohort was randomly split into a training group (21 volumes) and a test group (9 volumes). Two experienced neuro-radiologists (Observers 1 and 2) agreed on WMH annotation guidelines, and WMH were annotated on the 3D FLAIR volumes by Observer 1. Inter- and intra-observer variability was measured on a subset of FLAIR images randomly selected from 3 test subjects.

Each DeepUNET3D CNN was trained using a weighted binary cross-entropy loss function with separate weights for the background and foreground (WMH) pixels. The posteriors from the trained DeepUNET3D CNNs were used to train the Meta CNN using a categorical cross-entropy loss function. All experiments were implemented in Python using Keras with a TensorFlow backend on a P100 GPU (NVIDIA).

We compared the performance of StackGen-Net with previously published automated FLAIR lesion segmentation algorithms: 2D UNET (UNET-2D) [5], DeepMedic [6], and the Lesion Segmentation Toolbox (LST) [7]. The CNNs in this comparison were trained to predict WMH on axially oriented FLAIR images.
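As an illustration of the class-weighted binary cross-entropy used to train each DeepUNET3D, the following is a minimal NumPy sketch rather than the authors' Keras implementation. The function name and the weight values (`w_background`, `w_foreground`) are hypothetical; the abstract does not report the weights that were used.

```python
import numpy as np


def weighted_binary_cross_entropy(y_true, y_prob,
                                  w_background=1.0, w_foreground=10.0,
                                  eps=1e-7):
    """Mean class-weighted binary cross-entropy over all voxels.

    y_true: binary labels (1 = WMH foreground, 0 = background).
    y_prob: predicted foreground probabilities in [0, 1].
    The default weights are placeholders chosen for illustration only.
    """
    y_true = np.asarray(y_true, dtype=np.float64)
    # Clip probabilities so that log() never receives exactly 0 or 1.
    y_prob = np.clip(np.asarray(y_prob, dtype=np.float64), eps, 1.0 - eps)
    fg_term = w_foreground * y_true * np.log(y_prob)
    bg_term = w_background * (1.0 - y_true) * np.log(1.0 - y_prob)
    return float(-np.mean(fg_term + bg_term))


# A missed lesion voxel is penalized more heavily than a false alarm
# of the same confidence when w_foreground > w_background.
missed_lesion = weighted_binary_cross_entropy([1], [0.1])
false_alarm = weighted_binary_cross_entropy([0], [0.9])
```

Weighting the foreground more heavily is a common way to counter the class imbalance between sparse lesion voxels and the much larger background.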
Additionally, the WMH posteriors from the orthogonal CNNs were also combined using ensemble averaging (Orthogonal E-A) and majority voting (Orthogonal E-MV) schemes. We also implemented a 2D version of DeepUNET3D (DeepUNET2D-Axial) to illustrate the performance benefits that come with the inclusion of convolutional blocks over convolutional layers in a traditional UNET-2D.
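The two baseline combination schemes can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' code: it assumes the three posteriors have already been resampled to a common voxel grid, and the 0.5 decision threshold and the function names are assumptions.

```python
import numpy as np


def ensemble_average(posteriors, threshold=0.5):
    """Average the posteriors voxel-wise, then threshold the mean."""
    stacked = np.stack(posteriors, axis=0)
    return (stacked.mean(axis=0) >= threshold).astype(np.uint8)


def majority_vote(posteriors, threshold=0.5):
    """Threshold each posterior, then keep voxels a strict majority labels as WMH."""
    stacked = np.stack(posteriors, axis=0)
    votes = (stacked >= threshold).sum(axis=0)
    return (votes * 2 > stacked.shape[0]).astype(np.uint8)


# Toy posteriors for four voxels from the three orthogonal CNNs.
axial = np.array([0.9, 0.6, 0.9, 0.1])
sagittal = np.array([0.8, 0.6, 0.2, 0.2])
coronal = np.array([0.7, 0.1, 0.1, 0.3])

ea = ensemble_average([axial, sagittal, coronal]).tolist()  # [1, 0, 0, 0]
mv = majority_vote([axial, sagittal, coronal]).tolist()     # [1, 1, 0, 0]
```

In the toy example the second voxel is kept by majority voting but rejected by averaging, and neither scheme can recover the third voxel, which only one CNN detects. Both schemes use fixed rules, whereas the Meta CNN learns the combination from the training data.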

Results

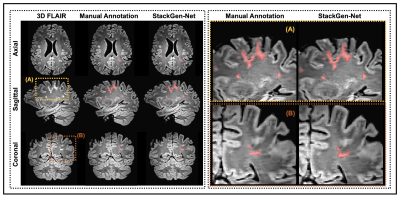

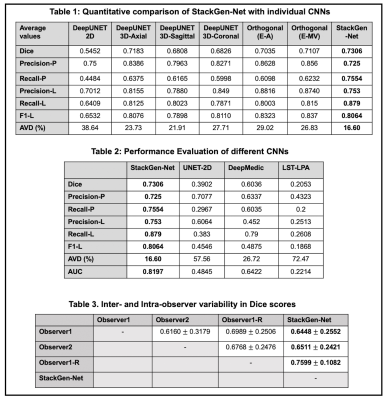

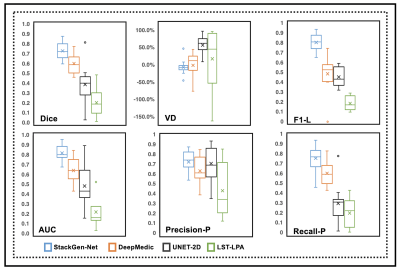

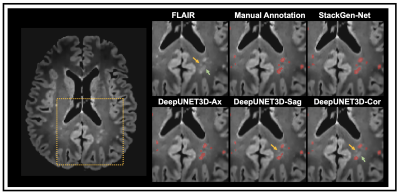
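For reference, the voxel-wise metrics reported in this section can be sketched as follows. The abstract does not give formulas, so this sketch assumes the usual definitions: Dice as twice the overlap divided by the sum of the two mask volumes, and AVD as the absolute volume difference expressed as a percentage of the reference volume. The function names are hypothetical, and the lesion-wise precision, recall, and F1 are omitted because they additionally require connected-component labeling.

```python
import numpy as np


def dice_score(pred, truth):
    """Dice overlap between two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        # Both masks empty: treated here as perfect agreement (a convention).
        return 1.0
    return 2.0 * np.logical_and(pred, truth).sum() / denom


def absolute_volume_difference(pred, truth):
    """Absolute volume difference as a percentage of the reference volume."""
    pred_vol = int(np.asarray(pred, dtype=bool).sum())
    truth_vol = int(np.asarray(truth, dtype=bool).sum())
    if truth_vol == 0:
        raise ValueError("AVD is undefined for an empty reference mask")
    return 100.0 * abs(pred_vol - truth_vol) / truth_vol


# Toy example: the prediction has one extra voxel.
pred = np.array([1, 1, 1, 0])
truth = np.array([1, 1, 0, 0])
dice = dice_score(pred, truth)                 # 2 * 2 / (3 + 2) = 0.8
avd = absolute_volume_difference(pred, truth)  # 100 * |3 - 2| / 2 = 50.0
```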

Figure 2 compares WMH predictions from StackGen-Net for representative multi-planar FLAIR images from a test subject, along with manual annotations for reference. A quantitative performance evaluation of the different lesion prediction algorithms, as well as inter- and intra-observer variability, is tabulated in Figure 3. On the test subjects, StackGen-Net achieved average Dice, lesion-precision, lesion-recall, lesion-F1, and absolute volume difference (AVD) of 0.7306, 0.753, 0.879, 0.8064, and 16.6%, respectively. For comparison, the average Dice and AVD were 0.39 and 57.56% for UNET-2D, and 0.60 and 26.72% for DeepMedic. For the Orthogonal CNNs, the average Dice and AVD were 0.7035 and 29.02% with ensemble averaging, and 0.7107 and 26.83% with majority voting. The average pairwise Dice agreement between StackGen-Net predictions and the observers (0.6853 ± 0.2132) was higher than the agreement between observers (0.6639 ± 0.2684), although this difference was not statistically significant (p=0.75, non-parametric Kruskal-Wallis test). StackGen-Net also achieved the highest area under the precision-recall curve (AUC), 0.8197, compared to 0.4845 for UNET-2D and 0.6422 for DeepMedic. A box-plot comparison of StackGen-Net with DeepMedic, UNET-2D, and LST (Figure 4) also shows that StackGen-Net provides superior performance in all evaluation metrics.

Discussion

The improvements in average Dice and AVD from UNET-2D to DeepUNET2D, and then to DeepUNET3D, illustrate the benefits of the proposed architecture and of the use of 2.5D patches. Using 2.5D patches from orthogonal orientations has several advantages: 1) reduced memory overhead compared with training a fully 3D network; 2) additional spatial context along the through-plane dimension compared with a 2D network; and 3) greater diversity of training data within the ensemble. Figure 5 shows a FLAIR image from a test subject with WMH predictions from StackGen-Net and the individual CNNs in the ensemble. Note that StackGen-Net is able to predict WMH (highlighted by arrows) even when a majority of the CNNs in the ensemble predict a false negative.

Conclusion

A stacked generalization ensemble of DeepUNET3D CNNs was proposed to detect WMH on 3D FLAIR images. We showed that our proposed model, StackGen-Net, performs better than the individual DeepUNET3D CNNs, their ensemble combination, and other state-of-the-art WMH detection algorithms. We also showed that StackGen-Net can reliably detect and quantify WMH in 3D FLAIR images in clinically feasible times (45 s per FLAIR volume), with performance comparable to the inter-observer variability between experienced neuro-radiologists.

Acknowledgements

This work was supported by the Arizona Health Sciences Center Translational Imaging Program Project Stimulus (TIPPS) Fund. The authors would also like to acknowledge support from the BIO5 Team Scholar’s Program, Technology and Research Initiative Fund (TRIF) Improving Health Initiative, and the Arizona Alzheimer’s Consortium.

References

1. Wardlaw JM, Pantoni L, Pantoni L, Gorelick PB. Sporadic small vessel disease: pathogenic aspects. In: Cerebral Small Vessel Disease. Cambridge: Cambridge University Press; 2014. p. 52–63.

2. Maniega SM, Valdés Hernández MC, et al. White matter hyperintensities and normal-appearing white matter integrity in the aging brain. Neurobiology of Aging. 2015;36(2):909–918.

3. Fazekas F, Kleinert R, Offenbacher H, et al. Pathologic correlates of incidental MRI white matter signal hyperintensities. Neurology. 1993;43(9):1683–1683.

4. Brickman AM, Meier IB, Korgaonkar MS, et al. Testing the white matter retrogenesis hypothesis of cognitive aging. Neurobiology of Aging. 2012;33(8):1699–1715.

5. Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. 2015 May 18.

6. Kamnitsas K, Ledig C, Newcombe VFJ, et al. Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Medical Image Analysis. 2017;36:61–78.

7. Schmidt P, Gaser C, Arsic M, et al. An automated tool for detection of FLAIR-hyperintense white-matter lesions in Multiple Sclerosis. NeuroImage. 2012;59(4):3774–3783.
