0850

Auto-encoded Latent Representations of White Matter Streamlines

Shenjun Zhong1, Zhaolin Chen1, and Gary Egan1,2

1Monash Biomedical Imaging, Monash University, Australia, Melbourne, Australia, 2School of Psychological Sciences, Monash University, Australia, Melbourne, Australia

Synopsis

Clustering white matter streamlines remains a challenging task. Existing methods based on spatial coordinates rely on manually engineered features and/or labeled datasets. This work introduces a novel method that clusters streamlines without labeled data. A deep LSTM-based autoencoder is trained to embed streamlines of any length into a fixed-length vector (the latent representation), and clustering is then performed on these vectors in an unsupervised manner.

Introduction

Diffusion tractography is a post-processing technique that generates streamlines as a proxy for studying the underlying white matter fiber networks. Each streamline is a sequence of 3D spatial positions, and grouping streamlines into meaningful bundles remains challenging. Conventional methods explicitly design spatial distance functions [1,2] and anatomical similarity measures [3] to manually engineer features, which are then used to cluster streamlines. More recently, neural networks have been adopted to classify streamlines into predefined bundle types, using MLPs [6] and CNNs [5,7], as supervised learning tasks. Alternative methods apply neural networks directly to diffusion MRI data to generate streamline segmentations [8] or tract-specific fiber orientation distributions [11]. However, these methods require a large amount of labeled data for supervised learning and do not handle streamlines of varying length.

Unsupervised learning of streamline latent representations has also been studied, either by finding a data-driven encoding space [9,10] or by dictionary learning [4]. We propose a new method for auto-encoding streamlines, similar in concept to word2vec in Natural Language Processing, which embeds words in a generalized latent space [12]. To the best of our knowledge, this is the first work that attempts to learn latent representations of streamlines using RNN-like deep neural networks without requiring any labeled data.

Methods

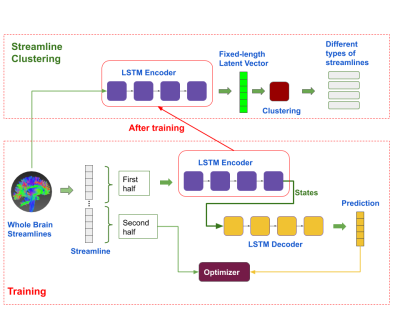

The dataset used in the study is from the “ISMRM 2015 Tractography Challenge” (http://www.tractometer.org/ismrm_2015_challenge). It contains 25 predefined bundle types and 200,432 streamlines in total, including the Corpus Callosum (CC), left and right Superior Cerebellar Peduncle (SCP), Uncinate Fasciculus (UF), Frontopontine Tracts (FPT), Superior Longitudinal Fasciculus (SLF), Cortico-Spinal Tract (CST), Inferior Longitudinal Fasciculus (ILF), Inferior Cerebellar Peduncle (ICP), Parieto-Occipital Pontine Tract (POPT), Optic Radiation (OR), Anterior Commissures (CA), Posterior Commissures (CP), Middle Cerebellar Peduncle (MCP) and Cingulum.

As shown in Figure 1, during training an LSTM-based autoencoder encoded the first half of each streamline and predicted the second half of the same streamline during decoding. The encoder and decoder networks each contain a single LSTM layer with 128 hidden dimensions. We used the Adam optimizer to minimize the mean squared error between the predicted and the real coordinates, with a learning rate of 1e-3 and a batch size of 128.
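The training objective described above can be illustrated with a minimal sketch in plain Python. It only shows how one streamline might be split into an encoder input and a decoder target, and how a mean squared error over coordinates is computed; the LSTM itself is not implemented. The function names `split_streamline` and `mse`, the midpoint split by integer division, and the naive stand-in prediction are assumptions made for illustration and are not taken from the original implementation.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def split_streamline(points: List[Point]) -> Tuple[List[Point], List[Point]]:
    """Split a streamline into an encoder input (first half) and a decoder target (second half).

    Assumption: the split is at len(points) // 2; the source does not say
    how streamlines with an odd number of points are handled.
    """
    mid = len(points) // 2
    return points[:mid], points[mid:]


def mse(pred: List[Point], target: List[Point]) -> float:
    """Mean squared error over all coordinates of two equal-length point sequences."""
    if len(pred) != len(target):
        raise ValueError("sequences must have equal length")
    total = sum((p - t) ** 2 for a, b in zip(pred, target) for p, t in zip(a, b))
    return total / (3 * len(target))


# A toy streamline with four points along the x axis.
streamline = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
first_half, second_half = split_streamline(streamline)

# Stand-in for the decoder output: repeat the last observed point.
naive_pred = [first_half[-1]] * len(second_half)
loss = mse(naive_pred, second_half)
```

In an actual training loop, `naive_pred` would be replaced by the decoder output and `loss` would be the quantity minimized by the optimizer.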

After training, the encoder was used to embed all streamlines into fixed-length latent vectors of length 128; that is, streamlines of any length (each a sequence of 3D points) were converted into their corresponding latent representations. The streamlines were then clustered in the latent space using the basic k-means algorithm and assigned to k clusters, where k is user-defined. The clustered streamlines were converted back and stored as TCK files, which were used for visualization and downstream tasks.
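As a rough sketch of the clustering step, the following plain-Python code runs Lloyd's k-means on a handful of toy latent vectors. The 4-dimensional vectors stand in for the 128-dimensional embeddings, and the deterministic farthest-point initialization is an assumption chosen to keep the example reproducible; the source only states that the basic k-means algorithm was used and does not specify an initialization or a library.

```python
from typing import List, Optional, Sequence


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def init_centroids(vectors: Sequence[Sequence[float]], k: int) -> List[List[float]]:
    """Farthest-point initialization (an assumption, not from the source)."""
    centroids = [list(vectors[0])]
    while len(centroids) < k:
        farthest = max(
            vectors,
            key=lambda v: min(squared_distance(v, c) for c in centroids),
        )
        centroids.append(list(farthest))
    return centroids


def kmeans(
    vectors: Sequence[Sequence[float]], k: int, max_iterations: int = 100
) -> Optional[List[int]]:
    """Lloyd's algorithm: alternate assignment and centroid update until labels stop changing."""
    centroids = init_centroids(vectors, k)
    labels: Optional[List[int]] = None
    for _ in range(max_iterations):
        new_labels = [
            min(range(k), key=lambda c: squared_distance(v, centroids[c]))
            for v in vectors
        ]
        if new_labels == labels:
            break
        labels = new_labels
        for c in range(k):
            members = [v for v, label in zip(vectors, labels) if label == c]
            if members:  # keep the old centroid if a cluster becomes empty
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


# Toy latent vectors forming two well-separated groups.
latents = [
    [0.0, 0.0, 0.0, 0.0], [0.1, 0.0, 0.0, 0.0], [0.0, 0.1, 0.0, 0.0],
    [5.0, 5.0, 5.0, 5.0], [5.1, 5.0, 5.0, 5.0], [5.0, 5.1, 5.0, 5.0],
]
labels = kmeans(latents, k=2)
```

Each entry of `labels` gives the cluster index of the streamline whose latent vector sits at the same position in `latents`, so the original streamlines can be grouped by cluster without any decoding step.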

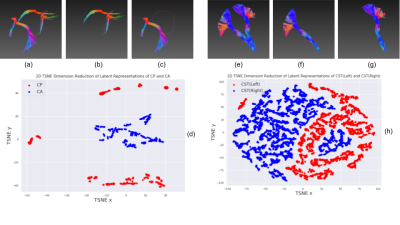

To validate that the latent representations capture useful information about the streamlines, several experiments were conducted: (i) clustering symmetric bundles, e.g. left/right SCP and ICP and the anterior and posterior commissures, to test whether the model can separate left/right or anterior/posterior counterparts; (ii) reducing the latent vectors to 2D using the t-SNE algorithm and visually checking whether the features remain separable in low dimension; and (iii) decomposing the bundles of the left hemisphere (excluding CC, Fornix and MCP) into 10 clusters and checking whether the clustered streamlines can be mapped back to the ground-truth bundles.
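Experiment (i) could also be summarized numerically. The sketch below is a hypothetical agreement score for a two-cluster result on a left/right bundle pair; it is not a metric reported in this work, and the function name `two_cluster_agreement` and the toy labels are illustrative assumptions. Because cluster indices are arbitrary, the score takes the better of the two possible cluster-to-side assignments.

```python
from typing import Sequence


def two_cluster_agreement(cluster_labels: Sequence[int], true_sides: Sequence[str]) -> float:
    """Fraction of streamlines whose cluster (0 or 1) matches its true side.

    The better of the two possible cluster-to-side assignments is used,
    since k-means cluster indices carry no left/right meaning.
    """
    if len(cluster_labels) != len(true_sides):
        raise ValueError("inputs must have equal length")
    n = len(true_sides)
    matches = sum(
        1 for c, s in zip(cluster_labels, true_sides) if (c == 0) == (s == "left")
    )
    return max(matches, n - matches) / n


# Toy example: six streamlines, two of which fall in the wrong cluster.
clusters = [0, 0, 1, 1, 1, 0]
sides = ["left", "left", "right", "right", "left", "right"]
score = two_cluster_agreement(clusters, sides)
```

A score of 1.0 would correspond to a perfect left/right separation, while a score near 0.5 would indicate that the two clusters do not follow the hemispheric split.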

Results and Discussion

The model converged after training for ~2 epochs, with both training and validation losses below 1. The model with the lowest validation loss was selected.

As shown in Figure 2(a-c, e-g) and Figure 3, the model is able to distinguish spatially symmetric structures. Among the left-right structures, UF, ILF, POPT, SLF, CST and OR are perfectly clustered, while the results for FPT are mostly correct with minor bias. However, the left and right components of ICP, SCP and Cingulum were not separated. Figures 2(d) and 2(h) show the feature distributions of CA/CP and left/right CST after reducing the dimension from 128 to 2; they are clearly separable. This indicates that the learned latent representations capture the necessary spatial information about the streamlines.

For the much more challenging task of decomposing the streamlines of the entire left hemisphere into meaningful bundles, the model achieved reasonably good results. As shown in Figure 4, after clustering into 10 classes, the model produced the major bundles with minor bias, including UF, CST, OR, SLF and Cingulum. Some bundles overlapped, such as SCP and ICP, POPT and FPT, and ILF and OR. Figure 5 shows the 2D feature distributions of all 10 bundles after dimension reduction with t-SNE. Surprisingly, streamlines of the same bundle type were clearly closer to each other and separable from other types.
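One way to check how clusters map back to ground-truth bundles is to find the majority bundle in each cluster and compute the overall cluster purity. The sketch below is only an illustration of that idea: the purity measure, the function names and the toy labels are assumptions, and no such figures are reported in this work, where the mapping was assessed from Figures 4 and 5.

```python
from collections import Counter, defaultdict
from typing import Dict, Sequence, Tuple


def majority_bundles(
    cluster_labels: Sequence[int], bundle_names: Sequence[str]
) -> Dict[int, Tuple[str, int]]:
    """Map each cluster to its most frequent ground-truth bundle and that bundle's count."""
    per_cluster: Dict[int, Counter] = defaultdict(Counter)
    for cluster, bundle in zip(cluster_labels, bundle_names):
        per_cluster[cluster][bundle] += 1
    return {c: counts.most_common(1)[0] for c, counts in per_cluster.items()}


def purity(cluster_labels: Sequence[int], bundle_names: Sequence[str]) -> float:
    """Fraction of streamlines that belong to the majority bundle of their cluster."""
    majority = majority_bundles(cluster_labels, bundle_names)
    return sum(count for _, count in majority.values()) / len(bundle_names)


# Toy example with three clusters; cluster 2 mixes SCP and ICP streamlines.
clusters = [0, 0, 0, 1, 1, 2, 2, 2]
bundles = ["UF", "UF", "CST", "CST", "CST", "SCP", "ICP", "SCP"]
mapping = majority_bundles(clusters, bundles)
overall_purity = purity(clusters, bundles)
```

Clusters that merge two bundles, as in the toy cluster 2, lower the purity, which gives a simple numeric counterpart to the overlaps noted above.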

Conclusion

In this work, we introduce a novel RNN-based network that embeds streamlines into a latent representation, which enables streamline clustering on the latent features using unsupervised learning algorithms. The network is able to produce different types of bundles from whole-brain streamline tracts without any extra post-processing. It can potentially be used to filter biased streamlines, and to extract and compare streamlines between healthy subjects and patients.

Acknowledgements

No acknowledgement found.

References

- Garyfallidis, E., Brett, M., Correia, M. M., Williams, G. B., & Nimmo-Smith, I. (2012). Quickbundles, a method for tractography simplification. Frontiers in neuroscience, 6, 175.

- Wang, J., & Shi, Y. (2019, June). A Fast Fiber k-Nearest-Neighbor Algorithm with Application to Group-Wise White Matter Topography Analysis. In International Conference on Information Processing in Medical Imaging (pp. 332-344). Springer, Cham.

- Siless, V., Chang, K., Fischl, B., & Yendiki, A. (2016, October). Hierarchical clustering of tractography streamlines based on anatomical similarity. In International Conference on Medical Image Computing and Computer-Assisted Intervention (pp. 184-191). Springer, Cham.

- Kumar, K., Siddiqi, K., & Desrosiers, C. (2019). White matter fiber analysis using kernel dictionary learning and sparsity priors. Pattern Recognition.

- Gupta, V., Thomopoulos, S. I., Corbin, C. K., Rashid, F., & Thompson, P. M. (2018, April). FiberNet 2.0: an automatic neural network based tool for clustering white matter fibers in the brain. In 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018) (pp. 708-711). IEEE.

- Lam, P. D. N., Belhomme, G., Ferrall, J., Patterson, B., Styner, M., & Prieto, J. C. (2018, March). TRAFIC: fiber tract classification using deep learning. In Medical Imaging 2018: Image Processing (Vol. 10574, p. 1057412). International Society for Optics and Photonics.

- Ugurlu, D., Firat, Z., Ture, U., & Unal, G. (2018, September). Supervised Classification of White Matter Fibers Based on Neighborhood Fiber Orientation Distributions Using an Ensemble of Neural Networks. In International Conference on Medical Image Computing and Computer-Assisted Intervention (pp. 143-154). Springer, Cham.

- Wasserthal, J., Neher, P., & Maier-Hein, K. H. (2018). TractSeg - Fast and accurate white matter tract segmentation. NeuroImage, 183, 239-253.

- O’Donnell, L., & Westin, C. F. (2005, October). White matter tract clustering and correspondence in populations. In International Conference on Medical Image Computing and Computer-Assisted Intervention (pp. 140-147). Springer, Berlin, Heidelberg.

- Brun, A., Knutsson, H., Park, H. J., Shenton, M. E., & Westin, C. F. (2004, September). Clustering fiber traces using normalized cuts. In International Conference on Medical Image Computing and Computer-Assisted Intervention (pp. 368-375). Springer, Berlin, Heidelberg.

- Wasserthal, J., Neher, P. F., & Maier-Hein, K. H. (2018, September). Tract orientation mapping for bundle-specific tractography. In International Conference on Medical Image Computing and Computer-Assisted Intervention (pp. 36-44). Springer, Cham.

- Mikolov, T., Chen, K., Corrado, G., & Dean, J. (2013). Efficient estimation of word representations in vector space. arXiv preprint arXiv:1301.3781.

Figures

Figure 1. Overview of the Experiment Design and the Network Architecture.

Figure 2. Streamline Clustering of CA/CP and Left/Right CST, and their t-SNE Feature distributions.

Figure 3. Streamline Clustering for Left/Right Symmetric Bundles.

Figure 4. Decomposition of Left Hemisphere and the Clustered Bundles.

Figure 5. t-SNE Feature Distribution of All 10 Bundles on Left Hemisphere.