0807

Phase2Phase: Reconstruction of free-breathing MRI into multiple respiratory phases using deep learning without a ground truth

¹Washington University in St. Louis, Saint Louis, MO, United States

Synopsis

Radial MRI can be used to reconstruct multiple respiratory phases with retrospective binning. However, short acquisitions suffer from significant streaking artifacts. Compressed sensing (CS)-based methods are commonly used to suppress them; nevertheless, CS is computationally intensive and its image quality depends on the regularization parameters. We propose a deep learning method that does not need an artifact-free target during training. The method can reconstruct high-quality volumes with ten respiratory phases, even for acquisitions close to 1 minute in length. The method outperforms CS for the same acquisition duration and can yield slightly better results than a 3D U-Net (UNet3DPhase) trained using a surrogate ground truth.

INTRODUCTION

Respiratory motion compromises image quality in thoracic and abdominal magnetic resonance imaging (MRI). Recently, self-navigation techniques have been developed to detect respiratory motion from the data itself[1–4]. Binning the data into respiratory phases results in sets of undersampled k-space data, leading to poor signal-to-noise ratio (SNR) and streaking artifacts. To overcome these challenges, compressed sensing (CS)[5] reconstruction has been employed[2–4,6–8]. For CS methods, the selection of the regularization parameters is often empirical and can lead to sub-optimal results. Moreover, the iterative optimization is computationally intensive.

More recently, deep learning (DL) methods[9,10] have been explored in MR image reconstruction[11–14]. Conventional supervised DL requires an artifact-free ground-truth reference for training. However, such a reference can be difficult to obtain in practice. Recently, a learning technique called Noise2Noise was introduced[15] that can be trained without a ground truth. This technique instead trains on pairs of noisy images of the same underlying content.
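The Noise2Noise idea can be illustrated with a toy least-squares problem. The sketch below is not the network used in this work: it fits a single scalar gain `w` that maps noisy inputs to targets, using synthetic Gaussian signal and noise (all values and names are illustrative assumptions). Because the target noise is zero-mean and independent of the input, the fit against noisy targets lands close to the fit against clean targets.

```python
import numpy as np

def fit_gain(x, y):
    """Least-squares scalar w minimizing sum((w * x - y) ** 2)."""
    return float(np.dot(x, y) / np.dot(x, x))

rng = np.random.default_rng(0)
n = 100_000
clean = rng.normal(0.0, 1.0, n)             # synthetic "artifact-free" signal
x = clean + rng.normal(0.0, 0.5, n)         # noisy input
y_noisy = clean + rng.normal(0.0, 0.5, n)   # independently corrupted target

w_clean = fit_gain(x, clean)     # supervised fit against the clean target
w_noisy = fit_gain(x, y_noisy)   # Noise2Noise-style fit against a noisy target
print(f"clean-target gain: {w_clean:.3f}, noisy-target gain: {w_noisy:.3f}")
```

With these settings both gains should sit near 1 / (1 + 0.25) = 0.8, the optimal linear shrinkage for unit-variance signal and noise variance 0.25.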

Respiratory binning leads to a different radial k-space coverage pattern for each respiratory phase, and therefore to different artifact patterns. On the other hand, the underlying patient anatomy remains similar across adjacent respiratory phases. Based on this observation, and inspired by the Noise2Noise approach, we developed a novel deep learning method that learns artifact-free MR volumes directly from noisy MR data with streaking artifacts, without the need for a ground truth. In this study, the odd respiratory phases were used as the input and the even phases as the training targets, and vice versa. We refer to our new technique as Phase2Phase (P2P) and applied it to acquisition times ranging from 1 to 5 minutes.
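The odd/even pairing can be sketched as follows. This is a minimal illustration, not the authors' training code: the array layout `(nx, ny, nz, n_phases)` and the function name `phase2phase_pairs` are assumptions made for the example.

```python
import numpy as np

def phase2phase_pairs(volume):
    """Split a phase-resolved volume into (input, target) training pairs.

    volume: array of shape (nx, ny, nz, n_phases) with an even n_phases
            (hypothetical layout; the abstract does not specify one).
    Returns [(odd, even), (even, odd)], where "odd" holds phases 1, 3, 5, ...
    and "even" holds phases 2, 4, 6, ... in 1-based numbering.
    """
    if volume.shape[-1] % 2 != 0:
        raise ValueError("expected an even number of respiratory phases")
    odd = volume[..., 0::2]    # 1-based phases 1, 3, 5, ...
    even = volume[..., 1::2]   # 1-based phases 2, 4, 6, ...
    # Train in both directions: odd -> even and even -> odd.
    return [(odd, even), (even, odd)]

# Tiny synthetic volume with ten phases, standing in for real reconstructions.
demo = np.arange(40, dtype=float).reshape(2, 2, 1, 10)
for net_input, target in phase2phase_pairs(demo):
    print(net_input.shape, "->", target.shape)
```

With ten phases, each pair uses five phases as the input and the five interleaved neighbouring phases as the target, so neither side needs to be artifact-free.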

METHODS

The data were acquired using Consistently Acquired Projections for Tuned and Robust Estimation (CAPTURE)[4], which is based on a T1-weighted stack-of-stars 3D spoiled gradient-echo sequence with fat suppression[1,16] and includes consistently acquired projections for more robust detection of respiratory motion.

All experiments were performed on a 3T simultaneous PET/MRI scanner (Siemens Biograph mMR; Siemens Healthcare, Erlangen, Germany). This Health Insurance Portability and Accountability Act (HIPAA)-compliant study was performed after the approval of our Institutional Review Board. Fifteen healthy volunteers and 17 cancer patients were recruited.

The default parameters for the CAPTURE acquisition were as follows: TE/TR = 1.69 ms/3.54 ms, matrix size = 320 x 320, FOV = 360 mm x 360 mm, slab thickness = 288 mm, number of partitions = 48, partial Fourier factor = 6/8 (giving a temporal resolution of 153.52 ms for the navigator), reconstructed slices per slab = 96 (yielding a slice thickness of 3 mm). The resulting voxel size was 1.125 x 1.125 x 3 mm³. The number of azimuthal angles was 2000, resulting in a total acquisition time of 5 minutes and 7 seconds; the acquisition was longer for larger subjects, who required more slices.
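The derived quantities in this paragraph follow from simple arithmetic, reproduced below as a check. The one assumption is that a single azimuthal angle is acquired per navigator interval of 153.52 ms, which is consistent with the stated total time; the variable names are illustrative.

```python
fov_mm, matrix = 360.0, 320          # in-plane field of view and matrix size
slab_mm, n_slices = 288.0, 96        # slab thickness and reconstructed slices
navigator_interval_ms = 153.52       # temporal resolution of the navigator
n_angles = 2000                      # number of azimuthal angles

in_plane_mm = fov_mm / matrix        # in-plane voxel edge
slice_mm = slab_mm / n_slices        # slice thickness
# Assumption: one azimuthal angle per navigator interval.
total_s = n_angles * navigator_interval_ms / 1000.0
minutes, seconds = divmod(total_s, 60.0)

print(f"voxel: {in_plane_mm} x {in_plane_mm} x {slice_mm} mm^3")
print(f"acquisition: {int(minutes)} min {seconds:.0f} s")
```

This reproduces the 1.125 mm in-plane resolution, the 3 mm slice thickness, and a total of about 5 minutes and 7 seconds.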

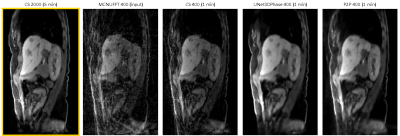

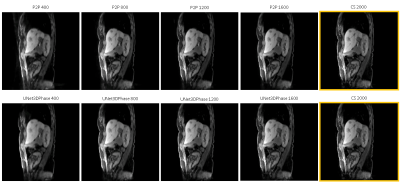

After the k-space data were binned into ten respiratory phases, four image reconstruction methods were used: (1) multi-coil non-uniform inverse fast Fourier transform (MCNUFFT), (2) compressed sensing (CS), (3) UNet3DPhase, a 3D U-Net with the third dimension being the respiratory phase and the target being the 5-minute CS reconstruction, and (4) Phase2Phase (P2P), which was inspired by Noise2Noise and performs odd-to-even as well as even-to-odd phase learning without an artifact-free ground truth. Various numbers of radial spokes (400, 800, 1200, 1600), corresponding, respectively, to about 1-, 2-, 3- and 4-minute acquisitions, were used to reconstruct the images. Figure 1 details the training and testing of the deep learning methods. Eight healthy subjects were used for training, one for validation, and six for testing, together with the 17 patients.
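To give a sense of how sparse each respiratory phase becomes, the sketch below estimates the scan time and the average number of spokes per phase for each spoke count. Assumptions not stated in the text: spokes are spread evenly over the ten phases, each spoke takes one 153.52 ms navigator interval, and the commonly quoted radial Nyquist estimate of (π/2) × matrix size spokes applies. The function name `spoke_budget` is hypothetical.

```python
import math

NAV_INTERVAL_S = 0.15352   # navigator interval from the acquisition parameters
N_PHASES = 10              # respiratory phases after binning
MATRIX = 320               # in-plane matrix size

def spoke_budget(n_spokes):
    """Return (scan time in s, spokes per phase, undersampling factor)."""
    duration_s = n_spokes * NAV_INTERVAL_S
    per_phase = n_spokes / N_PHASES            # assumes evenly filled bins
    nyquist_spokes = math.pi / 2 * MATRIX      # radial Nyquist estimate
    return duration_s, per_phase, nyquist_spokes / per_phase

for n in (400, 800, 1200, 1600, 2000):
    duration_s, per_phase, factor = spoke_budget(n)
    print(f"{n:5d} spokes: {duration_s:6.1f} s, "
          f"{per_phase:5.0f} spokes/phase, undersampling ~{factor:4.1f}x")
```

Under these assumptions, 400 spokes correspond to roughly 61 s of scanning and about 40 spokes per phase, an undersampling factor above 12 relative to the Nyquist estimate.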

RESULTS

Figures 2 and 3 show reconstructions for 400 radial spokes (approximately 1 minute) for two different patients. The 2000-spoke CS reconstruction serves as the gold-standard reference. The 400-spoke CS could not remove all artifacts, while both UNet3DPhase and P2P images show far fewer artifacts. Moreover, P2P provided sharper reconstructions than UNet3DPhase. Figures 4 and 5 show UNet3DPhase and P2P reconstructions for 400, 800, 1200 and 1600 radial spokes. There is a noticeable improvement in image quality for 800 spokes when compared to 400 spokes for both methods. However, the image quality is similar for 800 spokes and beyond.

It is worth noting that, although the training of the networks takes dozens of hours, it takes only 10 seconds to reconstruct the entire 4D data of size 320x320x96x10.

DISCUSSION

P2P can learn to reconstruct high-quality images without an artifact-free ground truth. Even the 1-minute acquisition images are of good quality. Furthermore, P2P can provide sharper images than UNet3DPhase. One possible limitation of P2P is that the assumption of little motion across adjacent phases may lead to image blurring. Finally, both UNet3DPhase and P2P outperformed the 400-spoke CS.

CONCLUSION

In summary, we proposed a new deep learning method (P2P) that can learn to reconstruct artifact-free images without an artifact-free ground truth. Once trained, P2P reconstructs images much faster than CS, making the method well suited to clinical routine. Furthermore, no ground truth is needed, which saves additional time during training. Finally, the network trained on healthy subjects can work on patients with quite different lesion patterns, as demonstrated here.

Acknowledgements

No acknowledgement found.

References

1. Grimm R, Fürst S, Souvatzoglou M, et al. Self-gated MRI motion modeling for respiratory motion compensation in integrated PET/MRI. Medical Image Analysis. 2015;19(1):110–120.

2. Feng L, Grimm R, Block KT, et al. Golden-angle radial sparse parallel MRI: combination of compressed sensing, parallel imaging, and golden-angle radial sampling for fast and flexible dynamic volumetric MRI. Magn Reson Med. 2014;72(3):707–717.

3. Feng L, Axel L, Chandarana H, et al. XD-GRASP: Golden-angle radial MRI with reconstruction of extra motion-state dimensions using compressed sensing. Magn Reson Med. 2016;75(2):775–788.

4. Eldeniz C, Fraum T, Salter A, et al. CAPTURE: Consistently Acquired Projections for Tuned and Robust Estimation: A Self-Navigated Respiratory Motion Correction Approach. Investigative Radiology. 2018;53(5):293–305.

5. Donoho DL. Compressed sensing. IEEE Transactions on Information Theory. 2006;52(4):1289–1306.

6. Lustig M, Donoho D, Pauly JM. Sparse MRI: The application of compressed sensing for rapid MR imaging. Magn Reson Med. 2007;58(6):1182–1195.

7. Chandarana H, Feng L, Ream J, et al. Respiratory Motion-Resolved Compressed Sensing Reconstruction of Free-Breathing Radial Acquisition for Dynamic Liver Magnetic Resonance Imaging. Invest Radiol. 2015;50(11):749–756.

8. Huang J, Wang L, Zhu Y. Compressed Sensing MRI Reconstruction with Multiple Sparsity Constraints on Radial Sampling. Mathematical Problems in Engineering. 2019;2019:14.

9. Schmidhuber J. Deep Learning in Neural Networks: An Overview. Neural Networks. 2015;61:85–117.

10. Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv:1505.04597 [cs]. 2015. Available at: http://arxiv.org/abs/1505.04597. Accessed July 18, 2019.

11. Wang S, Su Z, Ying L, et al. Accelerating magnetic resonance imaging via deep learning. In: 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI); 2016:514–517.

12. Han Y, Yoo J, Kim HH, et al. Deep learning with domain adaptation for accelerated projection-reconstruction MR. Magnetic Resonance in Medicine. 2018;80(3):1189–1205.

13. Lee D, Yoo J, Tak S, et al. Deep Residual Learning for Accelerated MRI Using Magnitude and Phase Networks. IEEE Transactions on Biomedical Engineering. 2018;65(9):1985–1995.

14. Lundervold AS, Lundervold A. An overview of deep learning in medical imaging focusing on MRI. Zeitschrift für Medizinische Physik. 2019;29(2):102–127.

15. Lehtinen J, Munkberg J, Hasselgren J, et al. Noise2Noise: Learning Image Restoration without Clean Data. arXiv:1803.04189 [cs, stat]. 2018. Available at: http://arxiv.org/abs/1803.04189. Accessed November 3, 2019.

16. Chandarana H, Block TK, Rosenkrantz AB, et al. Free-breathing radial 3D fat-suppressed T1-weighted gradient echo sequence: a viable alternative for contrast-enhanced liver imaging in patients unable to suspend respiration. Invest Radiol. 2011;46(10):648–653.

Figures