0706

Radio-pathomic mapping models trained with annotations from multiple pathologists reliably distinguish high-grade prostate cancer

1Biophysics, Medical College of Wisconsin, Milwaukee, WI, United States, 2Radiology, Medical College of Wisconsin, Milwaukee, WI, United States, 3Pathology, Medical College of Wisconsin, Milwaukee, WI, United States, 4Urological Surgery, Medical College of Wisconsin, Milwaukee, WI, United States, 5Biostatistics, Medical College of Wisconsin, Milwaukee, WI, United States, 6Pathology, University of Wisconsin Madison, Madison, WI, United States, 7Pathology, University of Chicago, Chicago, IL, United States, 8Pathology, Chiang Mai University, Chiang Mai, Thailand

Synopsis

This study demonstrates that radio-pathomic maps of epithelium density derived from annotations by different pathologists distinguish high-grade prostate cancer from G3 and benign atrophy. In a test set of 5 patients, epithelium density maps consistently achieved an AUC greater than 0.9, regardless of which pathologist’s annotations trained the model or which pathologist’s annotations the model was evaluated against. Results in a larger test set largely mirrored those in the small test set. Radio-pathomic maps of epithelium density also outperformed ADC maps regardless of which observer’s annotations were used to train the model.

Purpose

This study aims to quantify the downstream effects of inter-pathologist variability on a previously validated rad-path algorithm, radio-pathomic mapping, applied to a dataset of whole-mount prostate slides annotated by five pathologists from three institutions and subsequently aligned with the pre-surgical magnetic resonance imaging.

Methods

Data from 48 prospectively recruited patients were retrospectively analyzed in this IRB-approved study. Clinical imaging was acquired using a single 3T scanner with an endo-rectal coil approximately 2 weeks prior to radical prostatectomy. The multi-parametric protocol included T2-weighted imaging, dynamic contrast enhanced imaging, and FOCUS diffusion with 10 b-values (0, 10, 25, 50, 80, 100, 200, 500, 1000, and 2000 s/mm²).

Post-surgery, prostate samples were fixed in formalin and sectioned using slicing jigs created to match the orientation and slice thickness of the T2-weighted image (1, 2). Whole-mount tissue sections were paraffin embedded, hematoxylin and eosin (H&E) stained, and digitally scanned at 40x using a microscope with an automated stage.

Two datasets of whole-mount slides were used in this study: the single annotation (SA) set, annotated by one pathologist, and a separate, non-overlapping multiple annotation (MA) set, annotated by multiple pathologists. The SA dataset consisted of 123 slides taken from 20 patients; the MA dataset was annotated by five pathologists and contained 33 slides from 28 patients. Slides were annotated using a stylus on a Microsoft Surface.

The digitized pathology was computationally segmented pixel-wise into lumen, epithelium, and other tissue. The slides were then down-sampled and control-point warped to match the corresponding axial slice of the clinical T2-weighted image.

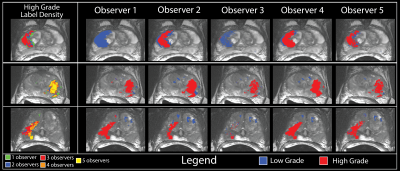

Inter-observer variability was measured on the MA dataset using the kappa statistic (3, 4). Agreement was measured pairwise and in both directions: once within the ROIs drawn by observer A (does observer B agree?) and once within the ROIs drawn by observer B (does observer A agree?). Each test evaluated three classes: unlabeled, low-grade (G3), or high-grade (G4+). Repeating this procedure for every pair of observers produced a matrix of kappa statistics describing the inter-rater reliability between pathologists.

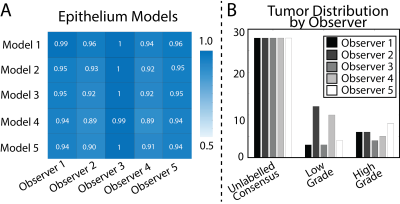
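The pairwise, direction-dependent kappa comparison described above can be sketched in a few lines. This is a minimal illustration, not the study's code: it assumes each observer's annotation has been flattened to a per-pixel list of class codes, and the names `UNLABELED`, `LOW_GRADE`, `HIGH_GRADE`, `kappa_within_rois`, and `kappa_matrix` are hypothetical.

```python
from collections import Counter
from itertools import permutations

# Hypothetical integer codes for the three classes named in the text.
UNLABELED, LOW_GRADE, HIGH_GRADE = 0, 1, 2


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two equal-length sequences of class labels."""
    n = len(labels_a)
    if n == 0 or n != len(labels_b):
        raise ValueError("label sequences must be non-empty and equal length")
    # Observed agreement: fraction of pixels given the same class.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement from each rater's marginal class frequencies.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical class throughout
    return (observed - expected) / (1.0 - expected)


def kappa_within_rois(reference, other):
    """Kappa restricted to pixels inside the reference observer's ROIs."""
    pairs = [(r, o) for r, o in zip(reference, other) if r != UNLABELED]
    return cohen_kappa([r for r, _ in pairs], [o for _, o in pairs])


def kappa_matrix(annotations):
    """Map (reference observer, other observer) to kappa for all ordered pairs."""
    return {
        (ref, other): kappa_within_rois(annotations[ref], annotations[other])
        for ref, other in permutations(annotations, 2)
    }
```

Because each comparison is restricted to the reference observer's ROIs, the resulting matrix is generally not symmetric: the entry for (A, B) can differ from the entry for (B, A).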

To demonstrate feasibility, we trained five separate pathologist-specific epithelium density models using 28 slides from the MA dataset. Each observer’s model was then applied to the 5 held-out slides from the MA dataset and evaluated against the ROIs of all five observers. Results were analyzed using receiver operating characteristic (ROC) analysis.

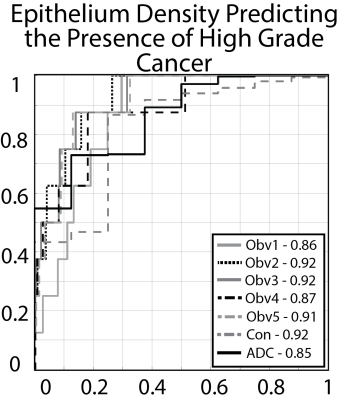
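The cross-observer evaluation above pairs every observer's model with every observer's ROIs and summarizes each pairing with an AUC. The sketch below shows one way to compute that grid, assuming each model yields one predicted epithelium density score per ROI and each observer's labels are binary (1 for high-grade, 0 for G3 or benign atrophy). The function names and data layout are hypothetical, and the AUC is computed with the rank-based (Mann-Whitney) formulation rather than any particular library.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve via the Mann-Whitney U statistic.

    Equals the probability that a randomly chosen positive scores higher
    than a randomly chosen negative, with ties counted as one half.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def cross_observer_auc(model_scores, observer_labels):
    """Map (training observer, labeling observer) to AUC for every pairing.

    model_scores: dict of training observer -> per-ROI scores.
    observer_labels: dict of labeling observer -> per-ROI binary labels.
    """
    return {
        (trained_on, labeled_by): roc_auc(scores, labels)
        for trained_on, scores in model_scores.items()
        for labeled_by, labels in observer_labels.items()
    }
```

With five observers this produces a 5 x 5 grid of AUCs, which is the kind of summary from which a mean and standard deviation across pairings can be reported.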

To test generalizability, models were trained on all 33 slides from the MA dataset and applied to the SA dataset. A consensus model was also generated by averaging the pixel-wise predictions from all observers. Models were evaluated by ROC analysis, as in the prior experiment, on the 123 slides in the SA dataset. ADC (b = 0, 1000 s/mm²) was evaluated for comparison as the current clinical standard for distinguishing prostate cancer.
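Two quantities in this experiment lend themselves to a short illustration: the consensus map, formed by averaging the observer-specific predictions pixel by pixel, and the two-point ADC used as the comparator. The sketch below is illustrative only. It assumes prediction maps are equally sized 2D lists, and it assumes the ADC follows the standard mono-exponential two-point formula, ADC = ln(S_low / S_high) / (b_high - b_low); the abstract does not state the fitting method, and the function names are hypothetical.

```python
import math


def consensus_map(prediction_maps):
    """Average equally shaped 2D maps (lists of rows) pixel by pixel."""
    if not prediction_maps:
        raise ValueError("need at least one prediction map")
    n = len(prediction_maps)
    rows, cols = len(prediction_maps[0]), len(prediction_maps[0][0])
    for m in prediction_maps:
        if len(m) != rows or any(len(row) != cols for row in m):
            raise ValueError("all prediction maps must share one shape")
    return [
        [sum(m[i][j] for m in prediction_maps) / n for j in range(cols)]
        for i in range(rows)
    ]


def adc_two_point(s_low, s_high, b_low=0.0, b_high=1000.0):
    """Two-point mono-exponential ADC in mm^2/s (b-values in s/mm^2).

    Assumed formula: ADC = ln(S_low / S_high) / (b_high - b_low).
    """
    if s_low <= 0 or s_high <= 0:
        raise ValueError("signals must be positive")
    if b_high <= b_low:
        raise ValueError("b_high must exceed b_low")
    return math.log(s_low / s_high) / (b_high - b_low)
```

For example, a voxel whose signal falls from 1000 at b = 0 to about 368 at b = 1000 s/mm² has an ADC of roughly 0.001 mm²/s under this formula.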

Results

Inter-observer agreement ranged from moderate to high (mean kappa = 0.62 ± 0.25). On the small test set, epithelium models were stable, with AUCs consistently above 0.9 (0.93 ± 0.03). On the larger test set, epithelium models matched or outperformed the apparent diffusion coefficient (AUC = 0.85). The consensus model reached an AUC of 0.92.

Conclusion

We demonstrate that radio-pathomic mapping of prostate cancer features is an effective technique for distinguishing high-grade prostate cancer regardless of pathologist-specific variability in the gold-standard annotations. These findings have broader implications for the radio-pathomic mapping and machine learning communities.

Acknowledgements

The State of Wisconsin Tax Check-off Program for Prostate Cancer Research; R01CA218144; National Center for Advancing Translational Sciences, NIH UL1TR001436 and TL1TR001437; and R21CA231892.

References

1. McGarry SD, Hurrell SL, Iczkowski KA, Hall W, Kaczmarowski AL, Banerjee A, Keuter T, Jacobsohn K, Bukowy JD, Nevalainen MT, Hohenwalter MD, See WA, LaViolette PS. Radio-pathomic Maps of Epithelium and Lumen Density Predict the Location of High-Grade Prostate Cancer. Int J Radiat Oncol Biol Phys. 2018;101(5):1179-87.

2. Nguyen HS, Milbach N, Hurrell SL, Cochran E, Connelly J, Bovi JA, Schultz CJ, Mueller WM, Rand SD, Schmainda KM, LaViolette PS. Progressing Bevacizumab-Induced Diffusion Restriction Is Associated with Coagulative Necrosis Surrounded by Viable Tumor and Decreased Overall Survival in Patients with Recurrent Glioblastoma. AJNR Am J Neuroradiol. 2016;37(12):2201-8.

3. Ozkan TA, Eruyar AT, Cebeci OO, Memik O, Ozcan L, Kuskonmaz I. Interobserver variability in Gleason histological grading of prostate cancer. Scand J Urol. 2016;50(6):420-4.

4. Viera AJ, Garrett JM. Understanding interobserver agreement: the kappa statistic. Fam Med. 2005;37(5):360-3.

Figures