0704

A Comparison between Radiologists versus Deep Learning for Prostate Cancer Detection in Multi-parameter MRI

Ruiming Cao1, Xinran Zhong2, Sohrab Afshari Mirak3, Ely Felker3, Voraparee Suvannarerg3,4, Teeravut Tubtawee3,5, Fabien Scalzo6, Steve Raman3, and Kyunghyun Sung3

1Bioengineering, UC Berkeley, Berkeley, CA, United States, 2UT Southwestern, Dallas, TX, United States, 3Radiology, UCLA, Los Angeles, CA, United States, 4Radiology, Faculty of Medicine Siriraj Hospital, Mahidol University, Bangkok, Thailand, 5Radiology, Faculty of Medicine, Prince of Songkla University, Songkhla, Thailand, 6Computer Science, UCLA, Los Angeles, CA, United States

Synopsis

We evaluated our recently developed deep learning system, FocalNet, for prostate cancer detection in multi-parametric MRI (mpMRI). This study performed a head-to-head comparison between FocalNet and four genitourinary radiologists on an independent evaluation cohort of 126 mpMRI scans that were not used during development. FocalNet's detection performance was similar to that of the radiologists under high-specificity and high-sensitivity conditions, whereas the radiologists outperformed FocalNet at moderate specificity and sensitivity.

Introduction

Deep learning has shown great promise for prostate cancer detection in multi-parametric MRI (mpMRI), with the potential to overcome the limitations of subjective and qualitative interpretation1,2,3. However, the diagnostic accuracy of a deep learning-based system relative to radiologists remains unclear. In this study, we evaluated our recently developed deep learning system, FocalNet3, on an independent testing set and compared its prostate cancer detection sensitivity and specificity with those of four experienced genitourinary (GU) radiologists under the same reading and reporting setup.

Materials and Methods

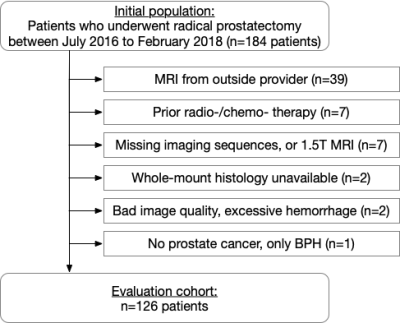

With IRB approval, we retrospectively collected in-house, pre-operative 3T MRI exams of 553 patients, acquired between October 2010 and February 2018, who underwent robotic-assisted laparoscopic prostatectomy without prior treatment. A development set of 427 patients (October 2010 to June 2016) was split into five folds for cross-validation during training and validation. After the system was trained and tuned, an independent evaluation set of 126 consecutively recruited patients (July 2016 to February 2018) was used to evaluate our algorithm and compare it with the radiologists. Figure 2 illustrates the patient selection. T2-weighted imaging (T2WI) and apparent diffusion coefficient (ADC) maps derived from diffusion-weighted imaging were used by both FocalNet and the radiologists. Prostate cancer lesion regions of interest (ROIs) were retrospectively identified by research fellows using whole-mount histopathology as the reference and contoured on T2WI.

FocalNet is a deep learning system developed for the detection of prostate cancer using the full volume of mpMRI3. FocalNet predicted a cancer probability map and identified detection points, each with a confidence value from 0 to 1.
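
A minimal Python sketch of the data partitioning, for illustration only. It assumes each exam is a hypothetical `(patient_id, exam_date)` tuple, that the evaluation set is every exam dated on or after July 1, 2016, and that the five cross-validation folds are drawn at random at the patient level; the abstract does not state how folds were assigned, and the helper names are not part of FocalNet.

```python
from datetime import date
import random

# Assumed cutoff: development = Oct 2010 to Jun 2016, evaluation = Jul 2016 onward.
CUTOFF = date(2016, 7, 1)

def temporal_split(patients, cutoff=CUTOFF):
    """Split (patient_id, exam_date) records by date into development and evaluation sets."""
    dev = [p for p in patients if p[1] < cutoff]
    evaluation = [p for p in patients if p[1] >= cutoff]
    return dev, evaluation

def k_fold_indices(n, k=5, seed=0):
    """Shuffle indices 0..n-1 (assumed random, patient-level) and deal them into k near-equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def cv_splits(dev, k=5, seed=0):
    """Yield (train, validation) lists, each fold serving once as validation."""
    for val_idx in k_fold_indices(len(dev), k, seed):
        val_set = set(val_idx)
        train = [dev[j] for j in range(len(dev)) if j not in val_set]
        yield train, [dev[j] for j in val_idx]
```

With 427 development patients, this yields folds of 86, 86, 85, 85, and 85 patients. The evaluation set is never touched by `cv_splits`, mirroring the "untouched during development" design.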

Four GU radiologists (20+, 10+, 12, and 5 years of experience in clinical prostate MRI interpretation) independently read the 126 evaluation cases for lesion detection. Each reader, blinded to all clinical information, was instructed to annotate lesion detection points on T2WI and to assign each point a score from 1 (least suspicious) to 5 (most suspicious). Readers were also informed of the evaluation setup, e.g., that multiple detection points on a single lesion or detection points on indolent prostate cancer would not be penalized, nor would they improve the detection sensitivity.
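
The scoring rules given to the readers amount to per-patient bookkeeping. The sketch below is illustrative only: each lesion is a hypothetical list of voxel-center coordinates in millimetres, the study's true-positive criterion (inside or within 5 mm of the lesion contour) is approximated as "within 5 mm of any lesion voxel", and a point landing only on a non-target cancer lesion is assumed to be ignored rather than counted as a false positive.

```python
import math

MARGIN_MM = 5.0  # true-positive margin stated in the study

def hits(point, lesion_voxels, margin=MARGIN_MM):
    """Approximate hit test (assumption): the point counts as on the lesion if it lies
    within `margin` mm of any lesion voxel center, standing in for
    'inside or within 5 mm of the contour'."""
    return any(math.dist(point, v) <= margin for v in lesion_voxels)

def classify_points(points, lesions):
    """Classify one patient's detection points.

    points:  list of ((x, y, z) in mm, score)
    lesions: list of dicts {"voxels": [(x, y, z), ...], "is_target": bool}
    Returns (set of detected target-lesion indices, number of false positives).
    """
    detected, false_positives = set(), 0
    for xyz, _score in points:
        hit_ids = [i for i, les in enumerate(lesions) if hits(xyz, les["voxels"])]
        if not hit_ids:
            false_positives += 1  # point lies on no lesion at all
        # Points on non-target lesions (e.g. indolent cancer) are ignored;
        # repeated points on one target lesion still detect it only once.
        detected.update(i for i in hit_ids if lesions[i]["is_target"])
    return detected, false_positives
```

Using a set for `detected` is what makes duplicate points on the same lesion harmless, matching the instruction that such points neither help nor hurt.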

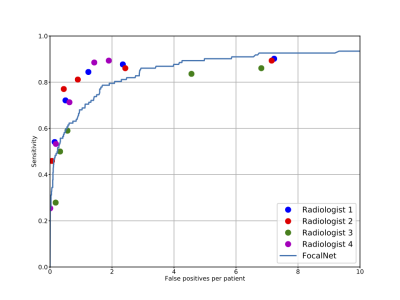

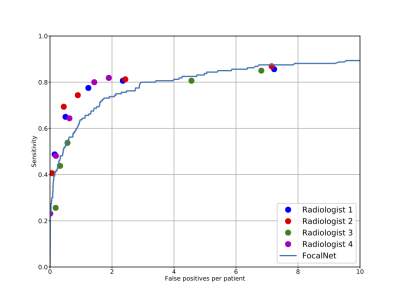

We evaluated the detection accuracy of FocalNet and the radiologists using free-response receiver operating characteristic (FROC) analysis4, which measures detection sensitivity versus the number of false positives at different thresholds. A detection point inside an annotated lesion contour or within a 5 mm margin of it was considered a true positive; otherwise, it was considered a false positive. Lesions with a Gleason Score greater than 6 or a pathological size greater than or equal to 10 mm were defined as clinically significant prostate cancer, and, for each patient, the lesion with the highest Gleason Score (or, among lesions sharing the highest Gleason Score, the one with the largest pathological size) was considered the index lesion5.
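
The two lesion definitions translate directly into code. In this sketch, `Lesion` is a hypothetical record type; the Gleason Score is taken as a single integer sum (so 3+4 and 4+3 both count as 7, a distinction the abstract does not make), and a tie in both Gleason Score and size falls back to the first lesion listed.

```python
from dataclasses import dataclass

@dataclass
class Lesion:
    lesion_id: str
    gleason_score: int  # assumed integer sum of primary and secondary grades, e.g. 7
    size_mm: float      # pathological size from whole-mount histopathology

def is_clinically_significant(lesion: Lesion) -> bool:
    """Gleason Score > 6, or pathological size >= 10 mm."""
    return lesion.gleason_score > 6 or lesion.size_mm >= 10.0

def index_lesion(lesions):
    """Highest Gleason Score; among ties, the largest pathological size."""
    return max(lesions, key=lambda les: (les.gleason_score, les.size_mm))
```

For example, a Gleason 6 lesion measuring 12 mm is clinically significant by size alone, but it is not the index lesion if the same patient also has a Gleason 7 lesion.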

Results

As shown in Figure 3, FocalNet achieved a detection sensitivity of 50% for index lesions at an average of 0.24 false-positive detections per patient, 80% sensitivity at 2.08 false-positive detections per patient, and 90% sensitivity at 4.98 false-positive detections per patient. As shown in Figure 4, the detection sensitivity for clinically significant lesions was 50% at 0.43 false-positive detections per patient, 80% at 3.39, and 90% at 11.7.

We evaluated the radiologists' performance in parallel with FocalNet on the same independent evaluation cohort. Each radiologist took on average 6.5±4.9 days to complete the reading. The radiologists' performance was evaluated in exactly the same way as FocalNet's. The detection performance for index lesions is shown in Figure 3. In the high-specificity condition, when readers' detection points were thresholded at a score of 5 only, FocalNet's performance was close to that of the readers: the readers' detection sensitivity was 0.4%±12.2% lower than FocalNet's. When readers' points were thresholded at a score of at least 3 or at least 4, the readers' detection sensitivity was 8.1%±8.9% higher than FocalNet's. In the high-sensitivity condition, after including all points from the readers, FocalNet's sensitivity was marginally higher than the readers'. However, detections with scores of 1 or 2 were highly variable among readers, since assigning suspicion scores of 1 or 2 is uncommon in clinical practice. The detection performance for clinically significant lesions is reported in Figure 4; the radiologists' detection sensitivity for clinically significant lesions was 5.3%±2.1% lower than their detection sensitivity for index lesions.
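
Given per-point outcomes (true positive on a target lesion, false positive, or ignored), an FROC curve is traced by sweeping the score threshold. The same functions serve FocalNet's continuous confidence values and the readers' 1 to 5 scores, where thresholds of 5, 4, and 3 correspond to the reader operating points discussed above. This is a sketch under assumed input formats (the dictionaries and the `"FP"` label are hypothetical), not the analysis code used in the study; in particular, `sensitivity_at_fp` reads off the best observed operating point rather than interpolating the curve.

```python
def froc_point(patients, threshold):
    """Sensitivity and mean false positives per patient for points with score >= threshold.

    patients: list of dicts {"n_targets": int, "points": [(score, outcome), ...]}
    outcome: a target-lesion id (true positive), "FP" (false positive), or None (ignored).
    """
    detected = total = fps = 0
    for p in patients:
        kept = [o for s, o in p["points"] if s >= threshold]
        fps += sum(1 for o in kept if o == "FP")
        # Count each target lesion once, however many points landed on it.
        detected += len({o for o in kept if o not in (None, "FP")})
        total += p["n_targets"]
    return detected / total, fps / len(patients)

def froc_curve(patients):
    """One (threshold, sensitivity, mean FP per patient) triple per distinct score, strictest first."""
    thresholds = sorted({s for p in patients for s, _ in p["points"]}, reverse=True)
    return [(t, *froc_point(patients, t)) for t in thresholds]

def sensitivity_at_fp(curve, max_fp):
    """Best observed sensitivity whose mean FP per patient does not exceed max_fp (no interpolation)."""
    return max((sens for _t, sens, fp in curve if fp <= max_fp), default=0.0)
```

Lowering the threshold can only add points, so sensitivity and false positives both rise monotonically along the curve; the reader comparison in the text is a comparison of sensitivities at matched positions along this trade-off.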

Discussion and Conclusion

We evaluated our recently developed deep learning system, FocalNet, for prostate cancer detection in multi-parametric MRI. This study conducted a head-to-head comparison between FocalNet and four GU radiologists on an independent evaluation cohort of 126 mpMRI scans that were not used during development. FocalNet's detection performance was similar to that of the readers under high-specificity and high-sensitivity conditions, whereas the readers outperformed FocalNet at moderate specificity and sensitivity.

Acknowledgements

This work is supported by funds from the Integrated Diagnostics Program, Department of Radiological Sciences & Pathology, David Geffen School of Medicine at UCLA.

References

- Ruprecht O, Weisser P, Bodelle B, Ackermann H, Vogl TJ. MRI of the prostate: interobserver agreement compared with histopathologic outcome after radical prostatectomy. Eur J Radiol. 2012;81(3):456–60.

- Tsehay YK, Lay NS, Roth HR, Wang X, Kwak JT, Turkbey BI, et al. Convolutional neural network based deep-learning architecture for prostate cancer detection on multiparametric magnetic resonance images. In: Medical Imaging 2017: Computer-Aided Diagnosis. 2017.

- Cao R, Bajgiran AM, Mirak SA, Shakeri S, Zhong X, Enzmann D, et al. Joint Prostate Cancer Detection and Gleason Score Prediction in mp-MRI via FocalNet. IEEE Trans Med Imaging. 2019.

- Litjens G, Debats O, Barentsz J, Karssemeijer N, Huisman H. Computer-aided detection of prostate cancer in MRI. IEEE Trans Med Imaging. 2014.

- Ploussard G, Epstein JI, Montironi R, Carroll PR, Wirth M, Grimm MO, et al. The contemporary concept of significant versus insignificant prostate cancer. Eur Urol. 2011.

Figures

Figure 1. Overview of FocalNet development and training, and of the evaluation comparing the cancer detection performance of FocalNet and the readers.

Figure 2. Flowchart of study inclusion for the evaluation cohort.

Figure 3. Free-response receiver operating characteristic (FROC) analysis of index lesion detection for the 126 patients in the evaluation cohort. The x-axis shows the average number of false-positive detections per patient, and the y-axis shows the detection sensitivity for index lesions.

Figure 4. Free-response receiver operating characteristic (FROC) analysis of clinically significant lesion detection for the 126 patients in the evaluation cohort. The x-axis shows the average number of false-positive detections per patient, and the y-axis shows the detection sensitivity for clinically significant lesions.