0677

High-Performance Rapid Quantitative Imaging with Model-Based Deep Adversarial Learning

1 Gordon Center for Medical Imaging, Massachusetts General Hospital, Harvard Medical School, Boston, MA, United States

2 Radiology, University of Wisconsin-Madison, Madison, WI, United States

3 Biomedical Engineering and Imaging Institute and Radiology, Icahn School of Medicine at Mount Sinai, New York, NY, United States

Synopsis

The purpose of this work was to develop a novel deep learning-based reconstruction framework for rapid MR parameter mapping. The framework builds on our previously proposed Model-Augmented Neural neTwork with Incoherent k-space Sampling (MANTIS) technique, which combines efficient end-to-end CNN mapping with k-space consistency to enforce data and model fidelity jointly, and extends it with adversarial training (MANTIS-GAN) so that more realistic parameter maps can be estimated directly from highly accelerated k-space data. The performance of MANTIS-GAN was demonstrated for fast T2 mapping. Our study showed that MANTIS-GAN is a promising approach for efficient and accurate MR parameter mapping.

Introduction

Deep learning methods have been used for image reconstruction with promising results. Approaches that use deep learning architectures to reimplement compressed sensing have achieved improved reconstruction performance (1,2). Other approaches reconstruct images directly from undersampled data through an end-to-end convolutional neural network (CNN) mapping (3–5). While these methods have focused on image reconstruction for conventional MR imaging, their application to accelerated parameter mapping has been limited. In our previous work, we proposed a deep learning-based framework, called MANTIS, for reconstructing MR parameter maps directly from undersampled k-space (7). MANTIS incorporates a tissue parameter signal model into end-to-end CNN mapping with k-space consistency, using a cyclic loss to enforce data and model fidelity jointly. Although this method demonstrated promising results, some image degradation was observed at high acceleration rates when training used only a simple pixel-wise loss function (l1/l2 loss), particularly in organs with complex tissue structure. The purpose of this study was therefore to further improve the performance of this framework using generative adversarial network (GAN) training with an adversarial loss. We investigated the feasibility of this framework, called MANTIS-GAN, for T2 mapping of the brain at a high acceleration rate.

Methods

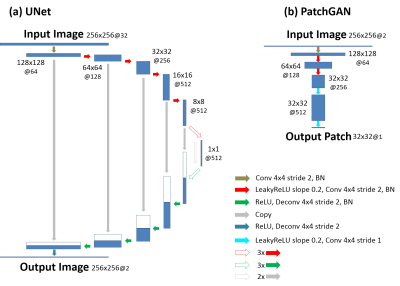

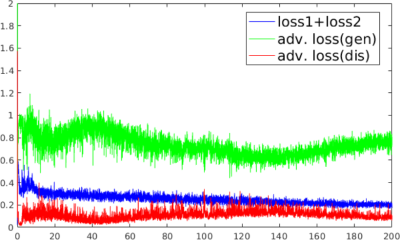

(a) MANTIS-GAN Imaging: MANTIS-GAN uses a CNN to reconstruct MR parameter maps directly from undersampled k-space. In line with our original implementation of MANTIS for parameter mapping using CycleGAN, with a focus on data and model consistency (6,7), the new MANTIS-GAN (Fig. 1) combines two image objectives with an additional adversarial loss that enforces preservation of perceptual image quality. The first loss term (loss 1) ensures that the parameter maps estimated from the undersampled images are consistent with the reference maps (i.e., supervised training). The second loss term (loss 2) ensures that the parameter maps reconstructed by the end-to-end CNN mapping, when passed through the signal model and the sampling operator, produce undersampled data matching the acquired k-space measurements (i.e., data and model consistency). With reconstruction fidelity ensured by the first two losses, the third, adversarial loss yields realistic and accurate parameter maps whose tissue features resemble those obtained from fully sampled data at high acceleration rates.

(b) Network Implementation: We used a UNet as a convolutional encoder/decoder to perform efficient end-to-end CNN mapping, and implemented a PatchGAN as the discriminator for adversarial training, similar to our previous reconstruction work (8) (Fig. 2). The network was trained on an Nvidia Quadro P5000 card using adaptive gradient descent optimization with a learning rate of 0.0002 for 200 epochs.
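The three loss terms described in (a) can be sketched as a single generator objective. This is a minimal NumPy sketch under stated assumptions, not the authors' implementation: it assumes a mono-exponential spin-echo signal model S_n = rho * exp(-TE_n / T2), an orthonormal per-echo 2D FFT followed by a binary sampling mask as the forward operator, an l1 map loss for loss 1, a squared-error k-space loss for loss 2, and a non-saturating GAN loss on the PatchGAN's per-patch probabilities for loss 3. The function names and the weights `lam_data` and `lam_adv` are hypothetical.

```python
import numpy as np


def t2_signal_model(rho, t2, te):
    """Assumed mono-exponential spin-echo model: S_n = rho * exp(-TE_n / T2).

    rho, t2: (H, W) maps; te: (N,) echo times in the same units as t2.
    Returns echo images of shape (N, H, W).
    """
    t2_safe = np.maximum(t2, 1e-6)  # guard against division by zero in background pixels
    return rho[None] * np.exp(-te[:, None, None] / t2_safe[None])


def undersampled_kspace(images, mask):
    """Assumed forward operator: orthonormal per-echo 2D FFT, then a binary mask of shape (N, H, W)."""
    return np.fft.fft2(images, axes=(-2, -1), norm="ortho") * mask


def mantis_gan_generator_loss(rho_est, t2_est, rho_ref, t2_ref, kspace_meas,
                              mask, te, d_fake, lam_data=1.0, lam_adv=0.01):
    """Hypothetical weighted sum of the three loss terms; returns (total, (loss1, loss2, loss3))."""
    # Loss 1: supervised l1 loss between estimated and reference parameter maps.
    loss1 = np.mean(np.abs(rho_est - rho_ref)) + np.mean(np.abs(t2_est - t2_ref))

    # Loss 2: maps -> echo images -> undersampled k-space, compared with the measured samples.
    kspace_est = undersampled_kspace(t2_signal_model(rho_est, t2_est, te), mask)
    loss2 = np.sum(np.abs(kspace_est - kspace_meas) ** 2) / max(mask.sum(), 1)

    # Loss 3: non-saturating generator loss on the discriminator's per-patch
    # probabilities that the estimated maps are "real" (values in (0, 1]).
    loss3 = -np.mean(np.log(np.clip(d_fake, 1e-12, 1.0)))

    total = loss1 + lam_data * loss2 + lam_adv * loss3
    return total, (loss1, loss2, loss3)
```

With perfect map estimates the first two terms vanish and only the adversarial term remains; for example, a discriminator output of 0.5 on every patch gives loss3 = ln 2 ≈ 0.693. In practice the weights would need tuning so that the adversarial term sharpens texture without overriding the fidelity terms.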

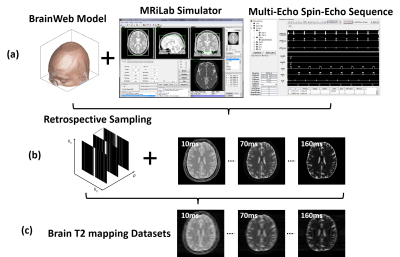

(c) Evaluation: T2 mapping was evaluated on image datasets simulated with a realistic MR simulator (open-source MRiLab (9), https://leoliuf.github.io/MRiLab/) using a multi-echo spin-echo T2 mapping sequence (16 echoes in total, at 1.5T) in axial orientation on 20 brain models from the McGill BrainWeb project (https://brainweb.bic.mni.mcgill.ca/brainweb/). Training data were obtained from 18 randomly selected subjects, and the remaining two subjects were used for evaluation. Undersampling was retrospectively simulated using a 1D variable-density Cartesian pattern that retained the central 5% of k-space lines and selected the remaining lines to reach an acceleration rate (R) of 8. The sampling pattern was randomly generated for each dynamic frame (Fig. 3). Training and reconstruction took ~20 hours and 3.2 sec/subject, respectively (Fig. 4).
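The undersampling scheme in (c) can be sketched as follows. This is a minimal NumPy sketch, not the authors' code: the abstract specifies only the fully sampled central 5% of lines, R=8, a 1D variable-density Cartesian pattern, and a new random pattern per frame, so the polynomial density `(1 - |k|)**power`, the parameter names, and the function name `vd_cartesian_masks` are assumptions.

```python
import numpy as np


def vd_cartesian_masks(n_frames, ny, accel=8, center_frac=0.05, power=2.0, seed=None):
    """Hypothetical 1D variable-density Cartesian masks, one per frame (echo).

    Returns a boolean array of shape (n_frames, ny) marking sampled phase-encoding
    lines in the centered (fftshifted) k-space convention.
    """
    rng = np.random.default_rng(seed)
    n_total = int(round(ny / accel))                 # lines kept per frame
    n_center = max(1, int(round(ny * center_frac)))  # fully sampled central lines
    c0 = ny // 2 - n_center // 2
    center = np.arange(c0, c0 + n_center)

    # Assumed sampling density: decays polynomially with distance from the k-space center.
    dist = np.abs(np.arange(ny) - ny / 2) / (ny / 2)
    pdf = (1.0 - dist) ** power + 1e-3  # small floor keeps every outer line reachable
    pdf[center] = 0.0                   # central lines are added deterministically below
    pdf /= pdf.sum()

    n_outer = max(n_total - n_center, 0)
    masks = np.zeros((n_frames, ny), dtype=bool)
    for f in range(n_frames):  # a new random pattern for every frame
        outer = rng.choice(ny, size=n_outer, replace=False, p=pdf)
        masks[f, center] = True
        masks[f, outer] = True
    return masks
```

For 256 phase-encoding lines this keeps 13 central lines plus 19 randomly drawn outer lines per frame, i.e. 32 of 256 lines and exactly R = 8. A 2D mask can be formed with `np.repeat(m[:, :, None], nx, axis=2)`; apply `np.fft.ifftshift(..., axes=1)` before multiplying with an unshifted FFT.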

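The Results report accuracy as normalized root mean square error (nRMSE). The abstract does not state how the error is normalized, so the sketch below assumes the common definition nRMSE = ||x_est - x_ref||_2 / ||x_ref||_2 x 100%, optionally restricted to a tissue mask; normalizing by the reference range or mean would give different numbers. The function name `nrmse` is hypothetical.

```python
import numpy as np


def nrmse(estimate, reference, mask=None):
    """Assumed nRMSE in percent: ||estimate - reference||_2 / ||reference||_2 * 100.

    `mask` (boolean, same shape) optionally restricts the comparison to tissue pixels.
    """
    est = np.asarray(estimate, dtype=float)
    ref = np.asarray(reference, dtype=float)
    if mask is not None:
        est, ref = est[mask], ref[mask]
    # np.linalg.norm with the default ord flattens the array (Frobenius / 2-norm).
    return 100.0 * np.linalg.norm(est - ref) / np.linalg.norm(ref)
```

For example, an estimate that is uniformly 10% too high yields `nrmse(...) == 10.0` up to floating-point rounding, regardless of image size.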
Results

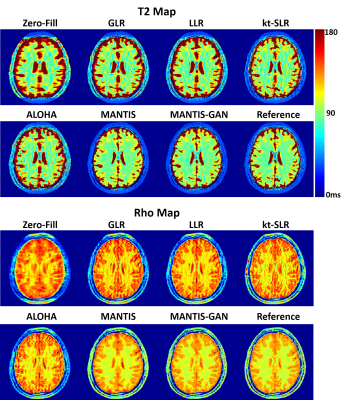

Fig. 5 shows exemplary results at R=8. At this high acceleration rate with a single-coil acquisition, reliable T2 maps could not be reconstructed with a simple inverse FFT (zero-filling), iterative global and local low-rank constrained reconstruction (GLR and LLR) (10), or advanced iterative k-t compressed sensing methods including kt-SLR (11) and ALOHA (12). MANTIS successfully removed aliasing artifacts and preserved tissue contrast similar to that of the reference, but with some remaining blurring and loss of tissue texture. MANTIS-GAN, with adversarial training and an advanced perceptual loss, provided not only accurate T2 contrast but also much improved image sharpness and tissue detail, visibly superior to all other methods. The normalized root mean square error (nRMSE) confirmed the closest correspondence with the reference for the MANTIS methods: 3.2% and 3.6% for MANTIS and MANTIS-GAN, compared with 7.2%, 6.2%, 5.1%, and 7.1% for GLR, LLR, kt-SLR, and ALOHA, respectively. The proton density (Rho) maps in Fig. 5 also demonstrate that MANTIS and MANTIS-GAN successfully removed aliasing artifacts, and that MANTIS-GAN preserved the best image sharpness and tissue texture of all methods at R=8.

Discussion

We proposed a novel deep learning-based reconstruction approach, called MANTIS-GAN, for rapid MR parameter mapping. With the incorporation of adversarial learning, MANTIS-GAN yielded superior results compared to traditional iterative sparsity-based methods and also outperformed the original MANTIS in image sharpness and tissue texture for brain T2 mapping at a high acceleration rate. By combining efficient end-to-end CNN mapping, model-based data fidelity reinforcement, and an adversarial training strategy, MANTIS-GAN enables high-performance rapid parameter mapping directly from undersampled data.

References

1. Hammernik K, Klatzer T, Kobler E, Recht MP, Sodickson DK, Pock T, Knoll F. Learning a Variational Network for Reconstruction of Accelerated MRI Data. Magn. Reson. Med. 2017;79:3055–3071. doi: 10.1002/mrm.26977.

2. Mardani M, Gong E, Cheng JY, Vasanawala SS, Zaharchuk G, Xing L, Pauly JM. Deep Generative Adversarial Neural Networks for Compressive Sensing (GANCS) MRI. IEEE Trans. Med. Imaging 2018:1–1. doi: 10.1109/TMI.2018.2858752.

3. Wang S, Su Z, Ying L, Peng X, Zhu S, Liang F, Feng D, Liang D. Accelerating Magnetic Resonance Imaging via Deep Learning. In: 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI). IEEE; 2016. pp. 514–517. doi: 10.1109/ISBI.2016.7493320.

4. Schlemper J, Caballero J, Hajnal JV, Price A, Rueckert D. A Deep Cascade of Convolutional Neural Networks for Dynamic MR Image Reconstruction. IEEE Trans. Med. Imaging 2017:1–1. doi: 10.1007/978-3-319-59050-9_51.

5. Zhu B, Liu JZ, Cauley SF, Rosen BR, Rosen MS. Image reconstruction by domain-transform manifold learning. Nature 2018;555:487–492. doi: 10.1038/nature25988.

6. Liu F, Samsonov A. Data-Cycle-Consistent Adversarial Networks for High-Quality Reconstruction of Undersampled MRI Data. In: ISMRM Machine Learning Workshop; 2018.

7. Liu F, Feng L, Kijowski R. MANTIS: Model-Augmented Neural neTwork with Incoherent k-space Sampling for efficient MR parameter mapping. Magn. Reson. Med. 2019;82:174–188. doi: 10.1002/mrm.27707.

8. Liu F, Samsonov A, Chen L, Kijowski R, Feng L. SANTIS: Sampling-Augmented Neural neTwork with Incoherent Structure for MR image reconstruction. Magn. Reson. Med. 2019:1–15. doi: 10.1002/mrm.27827.

9. Liu F, Velikina JV, Block WF, Kijowski R, Samsonov AA. Fast Realistic MRI Simulations Based on Generalized Multi-Pool Exchange Tissue Model. IEEE Trans. Med. Imaging 2017;36:527–537. doi: 10.1109/TMI.2016.2620961.

10. Zhang T, Pauly JM, Levesque IR. Accelerating parameter mapping with a locally low rank constraint. Magn. Reson. Med. 2015;73:655–661. doi: 10.1002/mrm.25161.

11. Lingala SG, Hu Y, DiBella E, Jacob M. Accelerated Dynamic MRI Exploiting Sparsity and Low-Rank Structure: k-t SLR. IEEE Trans. Med. Imaging 2011;30:1042–1054. doi: 10.1109/TMI.2010.2100850.

12. Lee D, Jin KH, Kim EY, Park S-H, Ye JC. Acceleration of MR parameter mapping using annihilating filter-based low rank hankel matrix (ALOHA). Magn. Reson. Med. 2016;76:1848–1864. doi: 10.1002/mrm.26081.
