0670

An Unsupervised Deep Learning Method for Correcting the Susceptibility Artifacts in Reversed Phase-encoding EPIs

1 School of Electrical, Computer and Telecommunications Engineering, University of Wollongong, Wollongong, Australia, 2 School of Psychology, University of Wollongong, Wollongong, Australia

Synopsis

We introduce a deep learning method, named S-Net, to correct the susceptibility artifacts in a pair of reversed phase-encoding (PE) echo-planar imaging (EPI) images. The S-Net is trained in an unsupervised manner using a set of reversed-PE pairs. For a new reversed-PE pair, the corrected images are computed rapidly by evaluating the learned S-Net. Evaluation on three datasets demonstrates correction performance comparable to state-of-the-art susceptibility artifact correction (SAC) methods at a much lower computation time: 1-3s for S-Net, versus 50-60s for AISAC and over 1000s for TOPUP. This speedup can substantially accelerate medical image processing pipelines and makes real-time correction on MR scanners feasible.

Introduction

Echo Planar Imaging (EPI) is the technique of choice for most functional MRI and diffusion-weighted imaging applications due to its fast imaging capability. Despite its popularity, EPI is sensitive to susceptibility artifacts (SAs).6,10 SAs include local image distortions and intensity modulations.3 They cause misalignment with the underlying structural image, potentially resulting in incorrectly localized analysis results. SAs are more severe at high field strengths,7,8 which have become widely used.

Since SAs are most noticeable along the PE direction of the EPI image,10 many SA correction (SAC) methods rely on a pair of reversed-PE images to estimate the displacement field. The estimated displacement field is then used to unwarp the distorted images. Traditional reversed-PE based SAC methods obtain the displacement field by optimizing an objective function. This optimization must be repeated for every pair of input images and is time-consuming, particularly for images with large sizes or severe displacements. Consequently, these SAC methods are infeasible for online implementation on an MR scanner.

Methods

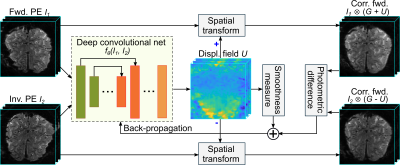

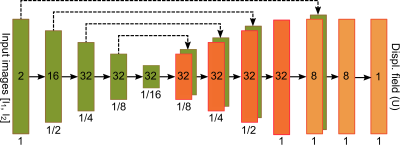

We propose a deep learning model, called S-Net, to correct a reversed-PE image pair. Fig. 1 illustrates an overview of the proposed S-Net. The S-Net takes the two 3D reversed-PE images and estimates the displacement field. In the training phase, the network parameters are learned so that the estimated displacement field, applied through a spatial transformation, yields corrected images that are as similar to each other as possible. In the testing or application phase, given a new reversed-PE image pair, the corrected images are obtained directly by evaluating the learned S-Net.

Fig. 2 illustrates the network architecture that maps a reversed-PE image pair to the displacement field. This architecture is inspired by the U-Net9 and the VoxelMorph architecture.2 The network includes an encoder path (left side) and a decoder path (right side). The encoder path repeats 3D convolutional layers with a kernel of size 3 and a stride of 2, each followed by a leaky rectified linear unit (LeakyReLU). The decoder path repeats convolutional layers with a kernel of size 3 and a stride of 1, each followed by a LeakyReLU and then a 3D upsampling layer.
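The spatial transformation step can be illustrated with a minimal one-dimensional sketch. It is not the S-Net implementation: it assumes that a single displacement field is applied with opposite signs to the two reversed-PE acquisitions (the usual reversed-PE model), uses linear interpolation along one PE line, and ignores the intensity modulation. The function names `warp_line` and `correct_pair` are hypothetical.

```python
import math

def warp_line(line, disp, sign):
    """Resample a 1D intensity profile along the PE axis at x + sign * disp[x].

    Linear interpolation with clamping at the borders (an assumption of
    this sketch, not a detail taken from the abstract).
    """
    n = len(line)
    out = []
    for x in range(n):
        pos = x + sign * disp[x]
        pos = min(max(pos, 0.0), n - 1.0)  # clamp to the valid index range
        lo = int(math.floor(pos))
        hi = min(lo + 1, n - 1)
        w = pos - lo                       # interpolation weight of the upper sample
        out.append((1.0 - w) * line[lo] + w * line[hi])
    return out

def correct_pair(line_up, line_down, disp):
    """Unwarp both acquisitions with the same field, using opposite signs."""
    return warp_line(line_up, disp, +1.0), warp_line(line_down, disp, -1.0)

# Toy example: one bright voxel shifted by one voxel in opposite directions.
true_profile = [0, 0, 0, 1, 0, 0, 0]
distorted_up = [0, 0, 0, 0, 1, 0, 0]
distorted_down = [0, 0, 1, 0, 0, 0, 0]
field = [1.0] * 7
corrected_up, corrected_down = correct_pair(distorted_up, distorted_down, field)
```

With the correct field, both corrected profiles coincide with the undistorted one, which is the property the training objective exploits.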

We train the S-Net in an unsupervised manner, i.e. no ground-truth images are required. The loss function quantifies only the dissimilarity between the corrected images and the smoothness of the displacement field.
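A loss with these two terms could look like the sketch below. The abstract does not state which dissimilarity measure, smoothness penalty, or weighting is used, so the mean squared difference, the squared finite-difference penalty, and the `weight` parameter are all assumptions chosen for illustration, and the function names are hypothetical.

```python
def dissimilarity(corrected_up, corrected_down):
    """Mean squared difference between the two corrected profiles (assumed metric)."""
    n = len(corrected_up)
    return sum((a - b) ** 2 for a, b in zip(corrected_up, corrected_down)) / n

def smoothness(disp):
    """Mean squared finite difference of the displacement field (assumed penalty)."""
    diffs = [(disp[i + 1] - disp[i]) ** 2 for i in range(len(disp) - 1)]
    return sum(diffs) / len(diffs) if diffs else 0.0

def unsupervised_loss(corrected_up, corrected_down, disp, weight=0.1):
    """Dissimilarity plus weighted smoothness; no ground-truth image is involved."""
    return dissimilarity(corrected_up, corrected_down) + weight * smoothness(disp)
```

Both terms depend only on the network output and the input pair, which is why training needs no reference undistorted image.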

Results

The S-Net was evaluated on three datasets: UOW7TfMRI, acquired by our team on a 7T scanner (Siemens, University of Queensland), and HCP3TfMRI and HCP3TDWI, extracted from the Human Connectome Project (HCP) dataset.5 We compared the proposed S-Net with two state-of-the-art SAC methods, i.e. TOPUP1 and AISAC,4 on two criteria: running time and the similarity of the corrected image pair.

We computed the average time each SAC method takes to correct a reversed-PE image pair with a size of 144x168x111. TOPUP requires more than 17 minutes to correct one pair, while AISAC requires nearly 1 minute. Correcting a pair with S-Net on a CPU takes roughly 3s, about 369 and 20 times faster than TOPUP and AISAC, respectively. On a GPU, S-Net provides the corrected images in under a second.

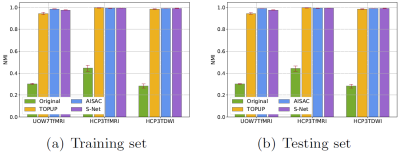

Fig. 3 shows the similarity of the uncorrected and corrected pairs, measured by the normalized mutual information (NMI), for the training set and the test set. The NMIs of the SAC-corrected images are much higher (better similarity) than those of the uncorrected images. The NMI of the S-Net results is comparable to that of TOPUP and AISAC in both training and test sets.
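For readers unfamiliar with the metric, the sketch below computes NMI from a joint intensity histogram. Several NMI definitions exist and the abstract does not say which one was used; this example assumes NMI = (H(A) + H(B)) / H(A, B), which ranges from 1 (independent images) to 2 (identical images). The bin count and the function names are illustrative choices.

```python
import math
from collections import Counter

def entropy(counts, total):
    """Shannon entropy (natural log) of a histogram given as raw counts."""
    return -sum((c / total) * math.log(c / total) for c in counts if c > 0)

def to_bins(values, bins):
    """Map intensities to integer bin indices in [0, bins - 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)
    return [min(int((v - lo) / (hi - lo) * bins), bins - 1) for v in values]

def nmi(a, b, bins=32):
    """NMI = (H(A) + H(B)) / H(A, B) for two flattened images of equal length."""
    n = len(a)
    bins_a, bins_b = to_bins(a, bins), to_bins(b, bins)
    h_a = entropy(Counter(bins_a).values(), n)
    h_b = entropy(Counter(bins_b).values(), n)
    h_ab = entropy(Counter(zip(bins_a, bins_b)).values(), n)
    if h_ab == 0.0:
        raise ValueError("NMI is undefined when both images are constant")
    return (h_a + h_b) / h_ab
```

A higher value means the two corrected images share more intensity structure, which matches the interpretation used for Fig. 3.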

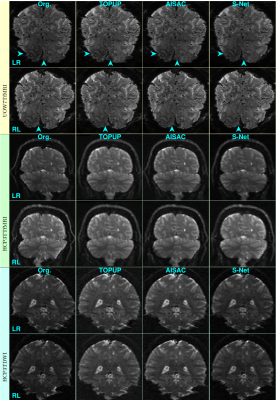

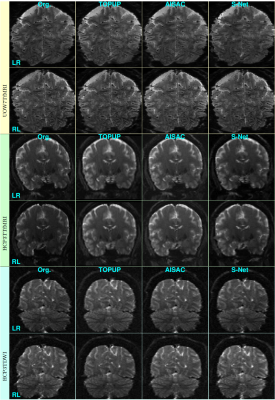

Figs. 4 and 5 show examples of reversed-PE slices without and with correction by the three SAC methods, from the training and test sets, respectively. For the UOW7TfMRI training set, the S-Net corrections are marginally better than those of TOPUP and AISAC (see arrows).

Discussion

The experimental results indicate that the proposed S-Net method is able to learn the features of the reversed-PE images from the training set, and that the learned model generalizes well to unseen data. The S-Net can be trained on a very small dataset, such as UOW7TfMRI with only fifteen pairs of reversed-PE images, or on a large dataset, such as the HCP datasets. The proposed S-Net is not limited by the type of acquisition sequence, such as spin-echo or gradient-echo. The S-Net method can be applied to EPI images of any resolution and size and to datasets of any scale.

The trained S-Net model provides correction quality comparable to the state-of-the-art SAC methods while running about 369 and 20 times faster than TOPUP and AISAC, respectively. Furthermore, unlike AISAC, it does not require an additional anatomical image for the correction.

Conclusion

This paper introduced a novel approach, the unsupervised deep learning S-Net, for correcting SAs in reversed-PE EPI images. Experimental results demonstrate that the S-Net method provides corrected images that are comparable to those provided by state-of-the-art SAC methods, i.e. TOPUP and AISAC. It runs much faster than the traditional SAC methods (about 3s on CPU and under 1s on GPU). This promises real-time correction and the ability to install the S-Net method on the MRI scanner. Our proposed method also opens up a new direction in learning-based SAC.

Acknowledgements

No acknowledgement found.

References

1. J. L. R. Andersson, S. Skare, and J. Ashburner. How to correct susceptibility distortions in spin-echo echo-planar images: application to diffusion tensor imaging. NeuroImage, 20, pp. 870-888, 2003.

2. G. Balakrishnan, A. Zhao, M. R. Sabuncu, A. V. Dalca, and J. Guttag. An unsupervised learning model for deformable medical image registration. In Proc. IEEE Intern. Conf. on Comput. Vis. Pattern Recognit., pp. 9252-9260, 2018.

3. H. Chang and J. M. Fitzpatrick. A technique for accurate magnetic resonance imaging in the presence of field inhomogeneities. IEEE Trans. Imag. Process., 11(3), 1992.

4. S. T. M. Duong, M. M. Schira, S. L. Phung, A. Bouzerdoum, and H. G. B. Taylor. Anatomy-guided inverse-gradient susceptibility artefact correction method for high-resolution fMRI. In Proc. IEEE Intern. Conf. on Acoust. Speech Signal Process., pp. 786-790, 2018.

5. D. C. V. Essen, K. Ugurbil, E. Auerbach, D. Barch, T. E. J. Behrens, R. Bucholz, A. Chang, L. Chen, M. Corbetta, S. W. Curtiss, S. D. Penna, D. Feinberg, M. F. Glasser, N. Harel, A. C. Heath, L. Larson-Prior, D. Marcus, G. Michalareas, S. Moeller, R. Oostenveld, S. E. Petersen, F. Prior, B. L. Schlaggar, S. M. Smith, A. Z. Snyder, J. Xu, and E. Yacoub. The human connectome project: a data acquisition perspective. NeuroImage, 62(4), pp. 2222-2231, 2012.

6. K. M. Ludeke, P. Roschmann, and R. Tischler. Susceptibility artifacts in NMR imaging. Magn. Reson. Imaging, 3, pp. 329-343, 1985.

7. S. Ogawa, T. M. Lee, A. R. Kay, and D. W. Tank. Brain magnetic resonance imaging with contrast dependent on blood oxygenation. In Proc. Natl. Acad. Sci. U.S.A., pp. 9868-9872, 1990.

8. J. R. Polimeni, V. Renvall, N. Zaretskaya, and B. Fischl. Analysis strategies for high-resolution UHF-fMRI data. NeuroImage, 168, pp. 296-320, 2018.

9. O. Ronneberger, P. Fischer, and T. Brox. U-net: convolutional networks for biomedical image segmentation. In N. Navab, J. Hornegger, W. M. Wells, and A. F. Frangi, editors, Proc. Med. Image. Comput. Assist. Interv., pp. 234-241. Springer International Publishing, 2015.

10. F. Schmitt. Echo-Planar Imaging, volume 1, book section 6, pp. 53-74. Academic Press, 2015.

Figures