0421

Computer-aided detection and segmentation of brain metastases in MRI for stereotactic radiosurgery via a deep learning ensemble

1 Imaging Physics, The University of Texas MD Anderson Cancer Center, Houston, TX, United States; 2 Diagnostic Radiology, The University of Texas MD Anderson Cancer Center, Houston, TX, United States; 3 Radiation Physics, The University of Texas MD Anderson Cancer Center, Houston, TX, United States; 4 Cancer Systems Imaging, The University of Texas MD Anderson Cancer Center, Houston, TX, United States; 5 Radiation Oncology, The University of Texas MD Anderson Cancer Center, Houston, TX, United States

Synopsis

Manual delineation of brain metastases for stereotactic radiosurgery (SRS) is time consuming and labor intensive. We constructed a deep learning ensemble, consisting of a single shot detector and a U-Net, to detect and then segment brain metastases in MRI for SRS treatment planning. Postcontrast 3D T1-weighted gradient echo MR images from 266 patients were randomly split 212:54 into training/validation and testing groups. In the testing group, the ensemble achieved an overall detection sensitivity of 80.4% (189/235 metastases) with approximately 4 false positives per patient, and a median segmentation Dice coefficient of 77.9% (61.4%–86.3%) for the detected metastases.

Introduction

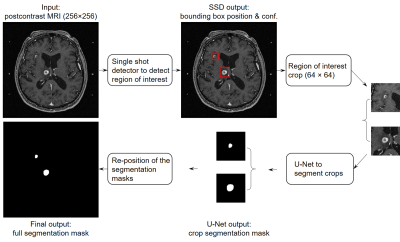

Manual identification and contouring of brain metastases in MRI, which are currently required for stereotactic radiosurgery (SRS) treatment planning, are time consuming and labor intensive. Several deep learning approaches based solely on semantic segmentation with fully convolutional networks have been proposed for automated detection and segmentation of brain metastases. However, these approaches can produce substantial numbers of false positives (up to 200 per patient) [1], and their segmentation performance has been relatively low (Dice of around 67%) [2]. Herein, we constructed a two-stage deep learning ensemble for brain metastasis segmentation: (1) detection of the metastases using a single shot detector (SSD) [3], and (2) segmentation of the detected metastases using a U-Net [4] (Figure 1). We hypothesized that this approach would minimize false positives while achieving high detection sensitivity and accurate segmentation of the detected metastases.

Methods

Postcontrast 3D T1-weighted gradient echo MR images from 266 patients who underwent SRS for brain metastases at our institution between January 2011 and August 2018 were retrospectively analyzed. Typical scan parameters were: TR/TE = 6.9/2.5 ms, NEX = 1.7, flip angle = 12°, matrix size = 256 × 256, FOV = 24 cm, and voxel size = 0.94 × 0.94 × 1.00 mm. The patients were randomly split into training (80%, 212/266) and testing (20%, 54/266) groups. Within the training group, a 20% sub-sample of the patient MR images was held out for model validation. Manual identification of the brain metastases by neuroradiologists and manual contouring by the treating radiation oncologists served as the ground truth. Metastasis size, measured as the largest cross-sectional dimension after projection along the craniocaudal direction onto a 2D plane, ranged from 2 to 52 mm.

The detection and segmentation networks were constructed and trained separately on the same training group. For the detection stage of the ensemble, an SSD was constructed (Figure 2). Its inputs were axial MRI slices, and its outputs were predicted bounding boxes encompassing brain metastases together with their detection confidences. The SSD consisted of 16 convolutional layers for feature extraction, six of which were used for metastasis detection; these six detection layers had feature maps with matrix sizes of 128 × 128, 64 × 64, 32 × 32, 16 × 16, 8 × 8, and 4 × 4. The SSD was trained with the SSD loss, a weighted sum of the classification (confidence) and localization (bounding box regression) losses.
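For reference, the SSD objective of Liu et al. [3] divides the sum of a softmax confidence loss and an α-weighted smooth-L1 localization loss by the number N of default boxes matched to ground truth. The following is a minimal NumPy sketch of that objective, assuming default boxes have already been matched and ground-truth boxes encoded as offsets. Hard negative mining and box encoding are omitted, the confidence loss is summed over all boxes for simplicity, and the function names and α = 1 default are illustrative assumptions rather than details given in this abstract.

```python
import numpy as np


def smooth_l1(x: np.ndarray) -> np.ndarray:
    """Elementwise smooth L1 (Huber loss with delta = 1), used for box regression."""
    ax = np.abs(x)
    return np.where(ax < 1.0, 0.5 * x ** 2, ax - 0.5)


def log_softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable log-softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


def ssd_loss(class_logits, labels, pred_offsets, target_offsets, alpha=1.0):
    """
    class_logits:   (num_boxes, num_classes) scores; class 0 = background
    labels:         (num_boxes,) matched class per default box (0 = unmatched)
    pred_offsets:   (num_boxes, 4) predicted box offsets
    target_offsets: (num_boxes, 4) encoded ground-truth offsets (used for positives only)
    Returns (L_conf + alpha * L_loc) / N, with N = number of matched boxes.
    """
    positives = labels > 0
    num_pos = int(positives.sum())
    if num_pos == 0:
        return 0.0  # the SSD loss is defined as 0 when no default box is matched
    # Confidence loss: softmax cross-entropy (no hard negative mining in this sketch)
    logp = log_softmax(class_logits)
    conf_loss = -logp[np.arange(len(labels)), labels].sum()
    # Localization loss: smooth L1 on matched (positive) boxes only
    loc_loss = smooth_l1(pred_offsets[positives] - target_offsets[positives]).sum()
    return float((conf_loss + alpha * loc_loss) / num_pos)
```

For this task there would be two classes (background and metastasis); the per-layer default boxes from the six detection feature maps would simply be concatenated along the `num_boxes` axis.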

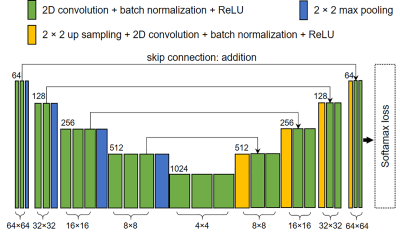

For segmentation, a U-Net was constructed with a VGG16 backbone as the convolutional encoder (Figure 3). Inputs to the U-Net were 64 × 64 slice crops covering the entire cross-section of a brain metastasis, with the crop centered on the cross-section, and the outputs were segmentation masks. The feature maps had matrix sizes of 64 × 64, 32 × 32, 16 × 16, 8 × 8, and 4 × 4. A pixel-wise cross-entropy loss was used to classify each pixel as background or metastasis. Both the SSD and the U-Net were trained with the Adam optimizer at an initial learning rate of 0.0002, and random affine transformation augmentations were applied during training of both networks.
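The crop-and-paste step around the U-Net can be sketched as follows. This assumes each bounding box is given as (row_min, col_min, row_max, col_max) in slice pixel coordinates and that the metastasis cross-section fits within 64 × 64 pixels; zero padding at the slice edges and the helper names `center_crop` and `paste_mask` are hypothetical choices, not details from the abstract.

```python
import numpy as np

CROP = 64  # U-Net input size given in the abstract (64 x 64 pixels)


def center_crop(slice_2d: np.ndarray, box, size: int = CROP):
    """
    Cut a size x size patch from an axial slice, centred on the centre of a
    bounding box (row_min, col_min, row_max, col_max). Pixels outside the
    slice are zero-padded. Returns the patch and its top-left corner in
    slice coordinates so the predicted mask can be pasted back later.
    """
    r0, c0, r1, c1 = box
    cr, cc = (r0 + r1) // 2, (c0 + c1) // 2
    top, left = cr - size // 2, cc - size // 2
    # Pad by `size` on every side so any crop centred inside the slice stays in bounds
    padded = np.pad(slice_2d, size, mode="constant")
    patch = padded[top + size: top + 2 * size, left + size: left + 2 * size]
    return patch, (top, left)


def paste_mask(mask_patch: np.ndarray, corner, shape) -> np.ndarray:
    """Write a predicted patch mask back into an all-zero mask of the full slice shape."""
    out = np.zeros(shape, dtype=mask_patch.dtype)
    top, left = corner
    size = mask_patch.shape[0]
    # Clip the patch to the slice boundaries
    r_start, c_start = max(top, 0), max(left, 0)
    r_end, c_end = min(top + size, shape[0]), min(left + size, shape[1])
    out[r_start:r_end, c_start:c_end] = mask_patch[
        r_start - top: r_end - top, c_start - left: c_end - left
    ]
    return out
```

Returning the top-left corner lets each predicted patch mask be written back into a full-size slice, which is what stacking masks from adjacent slices into a segmentation volume requires.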

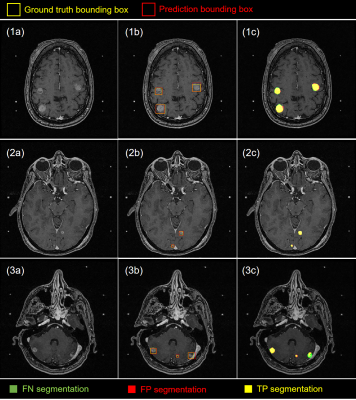

Candidate regions detected by the SSD with confidence ≥ 50% were cropped based on the predicted bounding box coordinates and input to the U-Net for segmentation. The final performance of the ensemble was evaluated over the entire brain volume. Output segmentation masks from adjacent slices were first stacked to form segmentation volumes, which were then compared with the ground truth metastasis volumes. A ground truth volume was counted as a true positive (TP) if at least one of its voxels was segmented; otherwise it was counted as a false negative (FN). A segmentation volume was counted as a false positive (FP) if it had no voxel overlap with any ground truth volume. Free-response receiver operating characteristic (FROC) curves and boxplots were used to show the detection and segmentation performance of the ensemble (Figure 4).
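The lesion-level scoring rules above can be written out as a short sketch. It assumes, hypothetically, that the ground truth is stored as an integer label volume with one ID per metastasis and that the predicted segmentation volumes are the 6-connected components of the stacked binary mask; the abstract does not specify the connectivity, and the function names are illustrative.

```python
from collections import deque

import numpy as np

# 6-connected (face) neighbourhood; an assumption, not stated in the abstract
OFFSETS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def label_components(mask: np.ndarray) -> tuple[np.ndarray, int]:
    """Label the 6-connected foreground components of a 3D binary mask via BFS."""
    mask = mask.astype(bool)
    labels = np.zeros(mask.shape, dtype=np.int32)
    n = 0
    for start in zip(*np.nonzero(mask)):
        if labels[start]:
            continue  # voxel already belongs to a labelled component
        n += 1
        labels[start] = n
        queue = deque([start])
        while queue:
            z, y, x = queue.popleft()
            for dz, dy, dx in OFFSETS:
                nb = (z + dz, y + dy, x + dx)
                inside = all(0 <= nb[i] < mask.shape[i] for i in range(3))
                if inside and mask[nb] and not labels[nb]:
                    labels[nb] = n
                    queue.append(nb)
    return labels, n


def lesion_counts(pred_mask: np.ndarray, gt_labels: np.ndarray):
    """
    pred_mask: 3D binary volume built by stacking the per-slice U-Net masks
    gt_labels: 3D int volume, 0 = background, k = k-th ground-truth metastasis
    Returns (TP, FN, FP) following the lesion-level rules described above.
    """
    pred_mask = pred_mask.astype(bool)
    gt_ids = [k for k in np.unique(gt_labels) if k != 0]
    # A ground-truth lesion is a TP if at least one of its voxels is segmented
    tp = sum(bool(pred_mask[gt_labels == k].any()) for k in gt_ids)
    fn = len(gt_ids) - tp
    # A predicted component is an FP if it overlaps no ground-truth voxel at all
    comp, n = label_components(pred_mask)
    fp = sum(not (gt_labels[comp == j] > 0).any() for j in range(1, n + 1))
    return tp, fn, fp
```

Note that under these rules a single predicted component touching two metastases credits both as TPs, and a detection with even one overlapping voxel is not an FP.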

Results

At the 50% confidence threshold, the ensemble achieved an overall detection sensitivity of 80.4% (189/235) with approximately 4 FPs per patient, and a median segmentation Dice of 77.9% (61.4%–86.3%) for the detected metastases. For metastases ≥ 6 mm, the sensitivity was 92.9% (131/141) and the median Dice was 81.9% (72.1%–89.4%). Combined detection and segmentation took < 10 s per patient on an NVIDIA DGX-1 workstation. Figure 5 shows detection and segmentation examples from patients with metastases of different numbers, sizes, types, and locations.

Discussion/conclusion
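The reported Dice and sensitivity figures can be recomputed with a few lines. This is a minimal sketch, assuming one pair of binary NumPy masks per detected metastasis; the function names are illustrative. Dice equals 2|A ∩ B| / (|A| + |B|), which at the pixel level is 2TP / (2TP + FP + FN), so both pixel-wise FPs and FNs lower it.

```python
import numpy as np


def dice(pred: np.ndarray, truth: np.ndarray) -> float:
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) between two binary masks."""
    pred, truth = pred.astype(bool), truth.astype(bool)
    denom = int(pred.sum() + truth.sum())
    if denom == 0:
        return 1.0  # convention: two empty masks agree perfectly
    return float(2.0 * np.logical_and(pred, truth).sum() / denom)


def sensitivity(detected: int, total: int) -> float:
    """Lesion-level sensitivity: detected metastases / all ground-truth metastases."""
    return detected / total
```

For example, `sensitivity(189, 235)` is about 0.804 and `sensitivity(131, 141)` about 0.929, matching the overall and ≥ 6 mm values above; the per-lesion Dice values would then be summarized with `np.median`.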

Our deep learning approach showed promising results for assisting SRS treatment planning for brain metastases. Because it requires only a single MRI acquisition, it is more feasible for clinical application. Lowering the detection confidence threshold could detect more metastases, at the cost of precision. The Dice coefficient varied widely across metastases, for two main reasons. For smaller metastases, poorly defined boundaries and lower contrast typically produced pixel-wise FPs, whereas for metastases with large necrotic regions, the low-signal areas typically led to pixel-wise FNs; both reduced the Dice coefficient. Future work will aim to improve the ensemble performance through ablation studies, hyperparameter searches, and the inclusion of additional curated patient data.
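The sensitivity/false-positive trade-off obtained by moving the confidence threshold is what an FROC curve traces. Below is a minimal sketch of computing FROC operating points, assuming each detection has already been matched to a ground-truth lesion ID or marked as a false positive (a simplification of the volume-level matching used in the evaluation); the `Detection` record and `froc_points` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Detection:
    patient: str
    confidence: float         # detection confidence in [0, 1]
    lesion_id: Optional[int]  # matched ground-truth lesion, or None for a false positive


def froc_points(detections, n_lesions_total, n_patients, thresholds):
    """Return (threshold, sensitivity, mean FPs per patient) for each threshold."""
    points = []
    for t in thresholds:
        kept = [d for d in detections if d.confidence >= t]
        # Count each ground-truth lesion once, even if several detections hit it
        hit = {(d.patient, d.lesion_id) for d in kept if d.lesion_id is not None}
        fps = sum(1 for d in kept if d.lesion_id is None)
        points.append((t, len(hit) / n_lesions_total, fps / n_patients))
    return points
```

Evaluating a descending list of thresholds gives points of non-decreasing sensitivity and FPs per patient, i.e. the FROC curve.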

References

[1] Charron O, Lallement A, Jarnet D, et al. Automatic detection and segmentation of brain metastases on multimodal MR images with a deep convolutional neural network. Comput Biol Med. 2018;95:43-54.

[2] Liu Y, Stojadinovic S, Hrycushko B, et al. A deep convolutional neural network-based automatic delineation strategy for multiple brain metastases stereotactic radiosurgery. PLoS One. 2017;12(10):e0185844.

[3] Liu W, Anguelov D, Erhan D, et al. SSD: Single shot multibox detector. In: Leibe B, Matas J, Sebe N, Welling M, eds. Computer Vision – ECCV 2016. Lecture Notes in Computer Science, vol 9905. Cham: Springer; 2016.

[4] Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation. In: Navab N, Hornegger J, Wells W, Frangi A, eds. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. Lecture Notes in Computer Science, vol 9351. Cham: Springer; 2015.

Figures