0298

Acquisition Parameter Conditioned Generative Adversarial Network for Enhanced MR Image Synthesis

1Pattern Recognition Lab, Department of Computer Science, Friedrich-Alexander-Universität Erlangen-Nürnberg, Erlangen, Germany, 2Technical University of Applied Sciences Amberg-Weiden, Amberg, Germany, 3Siemens Healthineers, Erlangen, Germany

Synopsis

Current approaches for the synthesis of MR images are only trained on MR images with a specific set of acquisition parameter values, limiting the clinical value of these methods. We therefore trained a generative adversarial network (GAN) to generate synthetic MR knee images conditioned on various acquisition parameters (TR, TE, imaging orientation). This enables us to synthesize MR images with adjustable image contrast. This work can support radiologists and technologists during the parameterization of MR sequences, can serve as a valuable tool for radiology training, and can be used for customized data generation to support AI training.

Introduction

Recent applications of GANs 1 for medical imaging 2 include cross-modality image synthesis (e.g. MRI → CT) 3, intra-modality image synthesis (e.g. 3T → 7T) 4, synthetic MR image data augmentation 5 and use as a training tool for radiology education (e.g. generation of pelvic radiographs) 6. We leverage the power of GANs to develop a tool to aid MR sequence parameterization. Although MR sequence parameterization is supported by medical guidelines, it remains a complex task due to the large number of acquisition parameters, the high number of use cases, and radiologists’ preferences. This leads to high variation in the sequence configurations used in daily clinical practice, across different institutions but also within a single radiology department, and therefore to inefficiencies. Thus, a tool is needed that supports sequence parameterization by providing visual feedback on the image contrast that results from the current acquisition parameter settings. We therefore trained a GAN to generate synthetic MR knee images conditioned on different acquisition parameters.

Material and Methods

For training and validation, we used the fastMRI dataset 7, a large-scale collection of both raw MR measurements and clinical MR images. It contains over 1.2 million clinical knee MR images from different MR scanners, with sufficient variation in the underlying acquisition parameter values.

We used the six central image slices of all 1.5 T sagittal and coronal image series acquired on Siemens scanners (MAGNETOM Aera, MAGNETOM Symphony, MAGNETOM Sonata, MAGNETOM Espree, MAGNETOM Avanto, Siemens Healthcare, Erlangen, Germany) with a TR value between 2000 ms and 4200 ms to both limit dataset size and feature denseness. This results in a dataset of 68,018 MR images, of which we used 3000 images for validation and the rest for training. We split the data by DICOM series UID, such that the training and validation datasets contain disjoint sets of series UIDs.
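A series-level split of this kind can be sketched as follows. This is a minimal illustration and not the authors' code: the `(series_uid, image_id)` record layout, the function name `split_by_series`, and the toy data are assumptions made for the example.

```python
import random
from collections import defaultdict


def split_by_series(records, n_val_images, seed=0):
    """Split image records so that no series UID appears in both subsets.

    records: list of (series_uid, image_id) tuples (hypothetical layout).
    n_val_images: approximate target number of validation images.
    """
    # Group all images that belong to the same series.
    by_series = defaultdict(list)
    for series_uid, image_id in records:
        by_series[series_uid].append(image_id)

    # Shuffle the series (not the images) reproducibly.
    series_uids = sorted(by_series)
    random.Random(seed).shuffle(series_uids)

    train, val = [], []
    for uid in series_uids:
        # Assign whole series to validation until the target is reached,
        # then send every remaining series to training.
        target = val if len(val) < n_val_images else train
        target.extend((uid, image_id) for image_id in by_series[uid])
    return train, val


# Toy data: 10 series with six slices each (illustrative values only).
records = [(f"series-{s}", f"slice-{i}") for s in range(10) for i in range(6)]
train, val = split_by_series(records, n_val_images=12)
```

Splitting at the series level rather than the image level keeps neighbouring slices of the same acquisition from landing on both sides of the split, which would otherwise make validation errors look better than they are.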

We trained a GAN by progressive growing (ProgGAN) 8 with a final resolution of 256 × 256 pixels. The GAN is extended by an auxiliary classifier (AC) that learns to determine the input acquisition parameters from the MR image content alone. More precisely, the AC is a multi-output classification and regression network that can handle both categorical and continuous targets (conditions). We deviated from the common AC-GAN architecture, in which a single network is trained both to discriminate between real and fake images and to predict the conditions, by training a separate network on the conditions (acquisition parameters) (see Figure 1). This enables us to use a wider range of data augmentation during training of the AC to avoid overfitting, whereas only limited data augmentation (mirroring, minor rotations) is used for training the GAN.
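The abstract does not state which loss the AC uses. One common way to train a network with mixed categorical and continuous targets is to add a cross-entropy term for the categorical output (imaging orientation) to a mean squared error term for the normalized continuous outputs (TR, TE). The sketch below illustrates that combination in plain Python; the function names, the `regression_weight` parameter, and the TE range are assumptions, and only the TR range is taken from the text.

```python
import math

TR_RANGE_MS = (2000.0, 4200.0)  # TR range stated in the text
TE_RANGE_MS = (0.0, 100.0)      # hypothetical TE range, for illustration only


def normalize(value, low, high):
    """Map a value from [low, high] to [0, 1]."""
    return (value - low) / (high - low)


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ac_loss(orientation_logits, orientation_label,
            predicted_params, target_params, regression_weight=1.0):
    """Cross-entropy (categorical target) plus weighted MSE (continuous targets).

    orientation_label: class index, e.g. 0 = sagittal, 1 = coronal (assumed coding).
    predicted_params / target_params: normalized (TR, TE) values.
    """
    probs = softmax(orientation_logits)
    cross_entropy = -math.log(probs[orientation_label])
    mse = sum((p - t) ** 2
              for p, t in zip(predicted_params, target_params)) / len(target_params)
    return cross_entropy + regression_weight * mse


# Example: undecided orientation logits, perfectly predicted TR and TE.
tr = normalize(3100.0, *TR_RANGE_MS)
te = normalize(30.0, *TE_RANGE_MS)
loss = ac_loss([0.0, 0.0], 0, [tr, te], [tr, te])
```

Normalizing TR and TE to a common range keeps either parameter from dominating the regression term simply because of its units.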

Results

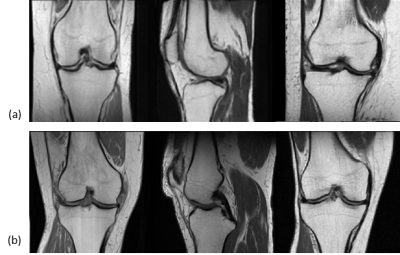

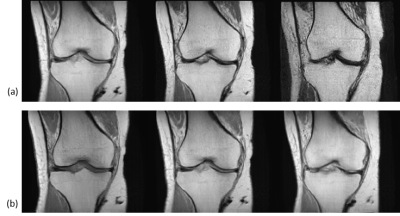

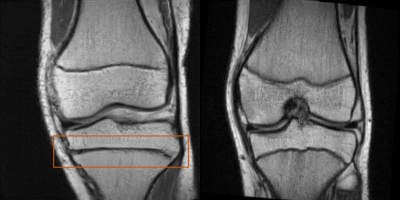

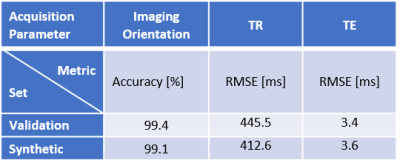

The generated images look realistic with sharp edges and contain high-frequency features. A technologist with more than 15 years’ experience in MRI labelled 250 randomly sampled real and synthetic MR images as real or synthetic. The achieved precision was 62.4% (recall 92.2%), showing that for a substantial share of the images, real and synthetic could not be told apart.

Performance metrics of the AC on the validation dataset as well as on synthetic MR images are given in Table 1. Examples of real and synthetic MR images with varying acquisition parameters are provided in Figures 2 and 3.
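For reference, precision and recall in a reader study of this kind can be computed as in the sketch below. The abstract does not state which class was treated as positive, so the `positive` argument and the toy labels are assumptions chosen for illustration; they are not the study data.

```python
def precision_recall(true_labels, rated_labels, positive):
    """Precision and recall of a rater's labels with respect to one class."""
    pairs = list(zip(true_labels, rated_labels))
    tp = sum(t == positive and r == positive for t, r in pairs)
    fp = sum(t != positive and r == positive for t, r in pairs)
    fn = sum(t == positive and r != positive for t, r in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Toy example (not the study data): the rater calls most images "real".
true_labels = ["real", "real", "synthetic", "synthetic", "synthetic"]
rated_labels = ["real", "real", "real", "real", "synthetic"]
precision, recall = precision_recall(true_labels, rated_labels, positive="real")
```

In the toy example, every truly positive image is found (high recall), but half of the images rated positive belong to the other class (low precision), which is the same qualitative pattern as a high recall paired with a moderate precision.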

Discussion and Conclusion

The AC achieved low validation errors on the determination of imaging orientation and TE, whereas the RMSE for TR is still significant. However, the generator also learns to adapt the image contrast depending on the input TR value (see Figure 3b).

This work demonstrates promising results for the generation of synthetic MR images conditioned on several acquisition parameters; however, limitations exist. Some images were easily identified as synthetic by an expert (Figure 4). Improved conditioning on the acquisition parameters (through improved network architecture, more training data with a wider spread of acquisition parameter values, optimized hyperparameters) is anticipated to lead to more accurate contrast and anatomy synthesis.

Figure 3 shows that the latent space is not fully disentangled from the conditions, leading to slightly varying anatomy if a single acquisition parameter is changed. Methods for feature disentanglement are anticipated to improve the separation of anatomy from contrast behavior. Additionally, the final resolution must be increased to mimic more realistic clinical settings. Moreover, we plan to incorporate additional image parameters into the training process.

Different applications are conceivable on the basis of this work. Radiologists and technologists can be supported during sequence parameterization through immediate feedback on the resulting image contrast. The method can serve as a training tool in radiologists’ and technologists’ education to demonstrate the impact of various acquisition parameters on the image contrast. As many AI applications are only trained on a specific sequence with specific acquisition parameter values, this method can support AI training through the generation of training data with specific acquisition parameter values. Additionally, when combined with image-to-image translation techniques, this method can be used for enhanced contrast-to-contrast translation.

Acknowledgements

No acknowledgement found.

References

1. Goodfellow I, Pouget-Abadie J, Mirza M, et al. Generative adversarial nets. Advances in neural information processing systems. 2014:2672-2680.

2. Yi X, Walia E, Babyn P. Generative adversarial network in medical imaging: A review. Medical image analysis. 2019;58.

3. Emami H, Dong M, Nejad-Davarani SP, Glide-Hurst CK. Generating synthetic CTs from magnetic resonance images using generative adversarial networks. Medical physics. 2018;45(8):3627-3636.

4. Nie D, Trullo R, Lian J, et al. Medical Image Synthesis with Deep Convolutional Adversarial Networks. IEEE transactions on bio-medical engineering. 2018;65(12):2720-2730.

5. Shin HC, Tenenholtz NA, Rogers JK, et al. Medical Image Synthesis for Data Augmentation and Anonymization Using Generative Adversarial Networks. International Workshop on Simulation and Synthesis in Medical Imaging. 2018:1-11.

6. Finlayson SG, Lee H, Kohane IS, Oakden-Rayner L. Towards generative adversarial networks as a new paradigm for radiology education. arXiv preprint arXiv:1812.01547. 2018.

7. Zbontar J, Knoll F, Sriram A, et al. fastMRI: An open dataset and benchmarks for accelerated MRI. arXiv preprint arXiv:1811.08839. 2018.

8. Karras T, Aila T, Laine S, Lehtinen J. Progressive growing of GANs for improved quality, stability, and variation. arXiv preprint arXiv:1710.10196. 2017.

Figures