0267

Artificial Intelligence for Predicting Pathological Complete Response to Neoadjuvant Chemotherapy from MRI and Prognostic Clinical Features

¹Stony Brook University, Stony Brook, NY, United States

Synopsis

Pathological complete response (pCR) is a measure of the effectiveness of neoadjuvant chemotherapy (NAC). Although several studies have addressed pCR prediction, no existing system fully automates the process. We propose a 3D convolutional neural network (CNN) system that integrates breast MRI volumes with prognostic clinical features to predict pCR before NAC. On the ISPY1 Clinical Trial dataset, the system achieved 77% accuracy and an AUC of 0.72. This approach shows potential for breast cancer diagnosis and assessment, and its mechanism for integrating image and clinical-feature information may generalize to other tasks.

Introduction

Neoadjuvant chemotherapy (NAC) is used in breast cancer treatment to reduce tumor size and limit metastatic spread before surgical excision. Pathological complete response (pCR) is the primary endpoint for assessing treatment response, and early prediction of pCR can help clinicians plan treatment. Previous studies have shown that patient demographics and biological markers such as receptor type and Ki67 status are good predictors of pCR [1]. A few studies have also used imaging data to predict pCR with moderate success [2,3], but most image-based models relied on conventional statistical methods and required manual extraction of MRI features [2].

Artificial intelligence (AI) has gained dramatic popularity in many fields and has achieved ground-breaking results in medical image analysis. One AI technique, the convolutional neural network (CNN), is well suited to pCR prediction because of its strength in image-pattern recognition.

We designed a CNN system that takes standard MRI volumes as input and also incorporates prognostic clinical features, such as patient demographics and information from pathology reports, to produce a more accurate and holistic prediction.

Methods

A total of 114 patients from the ISPY1 Clinical Trial dataset were included in this study. We used pre-chemotherapy T1-weighted post-contrast breast MR images together with seven corresponding prognostic clinical features: age, race, estrogen receptor (ER) status, progesterone receptor (PR) status, HER2 status, pre-treatment 3-level HR/HER2 category, and Ki67. Because tumor pCR is the clinical measure of response to NAC, it served as the reference standard in our study.

For preprocessing, all MRI volumes were resized to 256×256×60 voxels. The main branch of the CNN, adapted from the classical VGG model [5], consists of 10 convolutional layers, 5 max-pooling layers, and 4 fully connected layers. The convolutional layers use 3×3 kernels, and the number of channels increases gradually from 64 to 128 to 256 to extract high-level image patterns for prediction. Five additional fully connected layers encode the prognostic clinical features, and their outputs guide pattern extraction from the MRI volume.
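To make the downsampling concrete, the sketch below traces the feature-map size through the five max-pooling stages of a 256×256×60 input. It is a minimal sketch under stated assumptions, not the authors' network: it assumes the ten convolutional layers form five VGG-style blocks of two, that "same" padding keeps the spatial size through each convolution, and that every pooling layer halves all three dimensions with floor division. The per-block channel list `BLOCK_CHANNELS` is hypothetical, since only the 64 → 128 → 256 progression is reported.

```python
# Hypothetical VGG-style layout: 10 conv layers = 5 blocks x 2 convs,
# each block followed by one max-pooling layer (5 pooling layers in total).
BLOCK_CHANNELS = [64, 128, 256, 256, 256]  # assumed; only 64 -> 128 -> 256 is reported
CONVS_PER_BLOCK = 2
POOL = 2  # assumed 2x2x2 pooling, floor division in every dimension

def trace_shapes(input_shape=(256, 256, 60)):
    """Return (channels, H, W, D) after each conv block and its max-pool."""
    h, w, d = input_shape
    shapes = []
    for channels in BLOCK_CHANNELS:
        # 'Same'-padded convolutions change only the channel count;
        # the max-pool that closes the block halves H, W and D.
        h, w, d = h // POOL, w // POOL, d // POOL
        shapes.append((channels, h, w, d))
    return shapes

shapes = trace_shapes()
n_conv = len(BLOCK_CHANNELS) * CONVS_PER_BLOCK
for i, s in enumerate(shapes, start=1):
    print(f"after pool {i}: {s}")
c, h, w, d = shapes[-1]
print("conv layers:", n_conv, "flattened features:", c * h * w * d)
```

Under these assumptions the 60-slice axis shrinks to a single slice (30, 15, 7, 3, 1) while the in-plane size reaches 8×8, which shows why the pooling factor along the slice axis matters for a volume of this shape; the actual network may pool differently.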

The intermediate outputs of these clinical-feature layers are multiplied with the corresponding feature maps generated by the main branch. Every convolutional and fully connected layer is followed by a batch normalization layer and an activation layer, with ReLU as the activation function. Fig 1 shows a detailed diagram of the system. Cross-entropy was used as the loss function, and the network was optimized with stochastic gradient descent (SGD) at an initial learning rate of 0.01.
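A minimal NumPy sketch of the multiplicative fusion: a clinical-feature vector passes through one fully connected layer whose outputs rescale the channels of an image feature map. The sizes, the random weights, the single FC layer per gate, and the use of ReLU outputs directly as per-channel scales are assumptions for illustration; batch normalization is omitted, and how the five clinical FC layers map onto the convolutional stages is not specified here.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(x):
    return np.maximum(x, 0.0)

def clinical_gate(clinical, weight, bias):
    """Hypothetical FC layer mapping clinical features to one scale per channel."""
    return relu(weight @ clinical + bias)  # shape: (channels,)

def fuse(feature_map, gate):
    """Multiply each channel of a (C, H, W, D) feature map by its gate value."""
    # Reshape the gate to (C, 1, 1, 1) so it broadcasts over the spatial axes.
    return feature_map * gate[:, None, None, None]

# Illustrative sizes: 7 encoded clinical features, a 64-channel feature map.
n_clinical, channels = 7, 64
clinical = rng.normal(size=n_clinical)  # stand-in for encoded clinical features
weight = rng.normal(size=(channels, n_clinical))
bias = np.zeros(channels)
feature_map = rng.normal(size=(channels, 16, 16, 4))

gate = clinical_gate(clinical, weight, bias)
fused = fuse(feature_map, gate)
print(fused.shape)  # (64, 16, 16, 4)
```

Because ReLU outputs are non-negative but unbounded, this gate can switch a channel off entirely or amplify it; a sigmoid gate, which bounds the scale to (0, 1), is a common alternative design.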

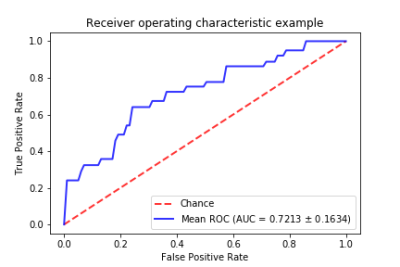

The proposed CNN system was trained on a server with one NVIDIA Tesla V100 GPU for 100 epochs, using 5-fold cross-validation so that every patient in the small dataset could be used for both training and testing. The area under the receiver operating characteristic curve (AUC) and accuracy were used to measure prediction performance.
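The evaluation protocol can be sketched in plain Python: shuffle the 114 patient indices once, cut them into five non-overlapping folds, train on four folds and test on the held-out one, then score the pooled out-of-fold predictions. The seed, the unstratified split, the rank-based (Mann–Whitney) AUC, and the 0.5 accuracy threshold are all assumptions, as none of them is specified; the toy labels and scores are illustrative, not study data.

```python
import random

def k_fold_splits(n_samples, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs for k non-overlapping folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread samples evenly: the first n_samples % k folds get one extra.
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(indices[start:start + size])
        start += size
    for i in range(k):
        train_idx = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield train_idx, folds[i]

def auc(labels, scores):
    """Rank-based AUC: P(positive score > negative score), ties count 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def accuracy(labels, scores, threshold=0.5):
    """Fraction of cases where (score >= threshold) matches the label."""
    return sum(int(s >= threshold) == y for y, s in zip(labels, scores)) / len(labels)

# Every one of the 114 patients lands in exactly one test fold.
splits = list(k_fold_splits(114, k=5))
print([len(test) for _, test in splits])  # [23, 23, 23, 23, 22]

# Toy pooled out-of-fold predictions (1 = pCR, 0 = no pCR); not study data.
labels = [1, 0, 1, 0, 0, 1]
scores = [0.9, 0.4, 0.35, 0.2, 0.6, 0.7]
print(auc(labels, scores), accuracy(labels, scores))
```

Pooling the out-of-fold scores gives a single AUC over all 114 patients; averaging the five per-fold AUCs is a common alternative that can yield a slightly different value.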

Results

For our 5-fold cross-validation, the dataset was randomly split into 5 non-overlapping sets. In each fold, the system was trained on four of the sets and tested on the remaining one. This process was repeated 5 times, so that every patient in the dataset was tested exactly once. The resulting AUC was 0.72 and the accuracy was 77%. Fig 2 shows the receiver operating characteristic curves for our system. Fig 3 compares the performance of our integrated MRI plus prognostic clinical feature model with that of an MRI-only model; the integrated model performs better in both accuracy and AUC. After training, race was found to be the least predictive of the seven prognostic clinical features and the 3-level HR/HER2 category the most predictive. At test time, the system made predictions for 20 patients per second.

Discussion

Until now, most studies predicting pCR have relied on conventional statistical methods. Only a few models have used CNNs, which can interpret imaging data more efficiently and accurately [2]. Antropova et al. (2017) achieved an AUC of 0.89 with a CNN [3]; however, their system is based solely on imaging data and cannot use clinical features to improve prediction. Ravichandran et al. proposed a CNN architecture that incorporates clinical information, but their system requires manual segmentation of the tumor region, which is difficult and clinically impractical [4]. Moreover, both use 2D networks, which process one slice at a time and therefore assess only a small portion of the total breast volume.

This study has two limitations. 1) The dataset is small; training and testing on a larger dataset should improve the generalizability of the model. 2) Although the system predicts pCR successfully, it lacks interpretability and is not intuitive for users, making it hard to know which image regions or clinical features it relies on most.

Conclusion

We proposed a 3D deep learning system that predicts pCR from pre-treatment breast MRI and prognostic clinical features. Because it requires no manual segmentation, the approach is clinically practical, and with further improvement it has the potential to become a powerful tool in breast cancer diagnosis and treatment. Furthermore, the proposed mechanism for integrating 3D medical images with clinical features can be applied to other tasks and scenarios.

References

[1] Kim K I, Lee K H, Kim T R, et al. Ki-67 as a predictor of response to neoadjuvant chemotherapy in breast cancer patients[J]. Journal of Breast Cancer, 2014, 17(1): 40-46.

[2] Lobbes M B I, Prevos R, Smidt M, et al. The role of magnetic resonance imaging in assessing residual disease and pathologic complete response in breast cancer patients receiving neoadjuvant chemotherapy: a systematic review[J]. Insights into Imaging, 2013, 4(2): 163-175.

[3] Antropova N, Huynh B Q, Giger M L. A deep feature fusion methodology for breast cancer diagnosis demonstrated on three imaging modality datasets[J]. Medical Physics, 2017, 44(10): 5162-5171.

[4] Ravichandran K, Braman N, Janowczyk A, et al. A deep learning classifier for prediction of pathological complete response to neoadjuvant chemotherapy from baseline breast DCE-MRI[C]//Medical Imaging 2018: Computer-Aided Diagnosis. International Society for Optics and Photonics, 2018, 10575: 105750C.

[5] Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition[J]. arXiv preprint arXiv:1409.1556, 2014.

Figures