0249

Deep learning-based thigh muscle segmentation for reproducible fat fraction quantification using fat-water decomposition MRI

1 Department of Diagnostic Radiology, Li Ka Shing Faculty of Medicine, The University of Hong Kong, Hong Kong, Hong Kong; 2 Li Ka Shing Faculty of Medicine, The University of Hong Kong, Hong Kong, Hong Kong; 3 Division of Neurology, Department of Medicine, Queen Mary Hospital, The University of Hong Kong, Hong Kong, Hong Kong; 4 Department of Paediatrics and Adolescent Medicine, Li Ka Shing Faculty of Medicine, The University of Hong Kong, Hong Kong, Hong Kong

Synopsis

Time-efficient thigh muscle segmentation is a major challenge in moving from the primarily qualitative assessment of thigh muscle MRI used in clinical practice to potentially more accurate, quantitative methods. In this work, we trained a convolutional neural network to automatically segment four clinically relevant muscle groups using fat-water MRI. Compared to cumbersome manual annotation, which ordinarily takes at least 5-6 hours, this fully automated method provided sufficiently accurate segmentation within several seconds for each thigh volume. More importantly, it yielded more reproducible fat fraction estimates, which is valuable for quantifying fat infiltration in ageing and in diseases such as neuromuscular disorders.

Introduction

Fat-water decomposition MRI provides an excellent approach for non-invasively evaluating the degree of fat infiltration in muscle tissue. However, the need for segmentation severely limits its application to quantitative fat assessment in clinical practice. The conventional methods for segmenting thigh muscles in the current literature[1] rely on manually drawn regions-of-interest (ROIs), an extremely time-consuming and cumbersome process that is prone to subjective bias. Some previous studies performed manual segmentation on one or a few representative slices[2, 3], but this can lower accuracy and reproducibility and is still not feasible for clinical adoption. Although recent studies have developed semi-automated or automated algorithms[4, 5], these remain difficult to apply in clinical settings[6]. A faster, simpler and fully automated thigh muscle segmentation method for reproducible fat fraction quantification is highly desirable. The purpose of this work is to develop and validate a convolutional neural network (CNN) for automatically segmenting four thigh muscle groups using a reference database (MyoSegmenTUM)[7]. We further evaluate its reproducibility in fat fraction estimation by comparing it with manual segmentation.

Methods

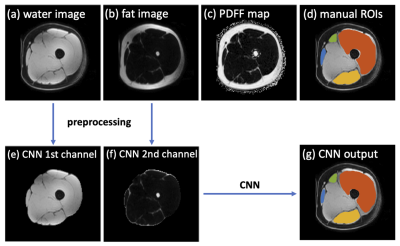

This study was based on the reference database MyoSegmenTUM[7], which consists of 25 fat-water decomposition MRI scans collected from 19 subjects using a 6-echo 3D spoiled gradient echo sequence. Manual segmentation masks were provided for all scans and served as ground truth. The manual ROIs delineated four clinically relevant muscle groups: quadriceps femoris muscle (ROI1), sartorius muscle (ROI2), gracilis muscle (ROI3) and hamstring muscles (ROI4), with an average segmentation time of ~6 hours per scan. Three subjects were scanned 3 times with repositioning; these scans were used as an independent test set for evaluating the segmentation accuracy and the reproducibility of fat fraction estimation. The remaining scans were used to train a CNN for thigh muscle segmentation using a U-net architecture[8].

For all scans, the left and right thighs were first separated for analysis, which also doubled the dataset size. As a preprocessing step, the subcutaneous fat was removed automatically on the water images by k-means clustering, followed by order-statistic filtering (which also removes the skin) and a dilation. The water and fat images covering only the thigh muscle regions were provided to the CNN as two input channels. The CNN was trained in 2D using a total of 3808 slices. The training objective was to minimize the categorical cross-entropy loss between the CNN outputs and the manual segmentations.
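The two-channel input and the training objective described above can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `stack_channels` and `categorical_cross_entropy`, the channel-last layout, and the use of five classes (background plus ROI1 to ROI4) are assumptions made for illustration only.

```python
import numpy as np

def stack_channels(water, fat):
    """Stack a water slice and a fat slice into one (H, W, 2) input.

    Assumption: channel-last layout; the abstract only states that the
    water and fat images are provided as two input channels.
    """
    return np.stack([water, fat], axis=-1)

def categorical_cross_entropy(probs, labels, eps=1e-7):
    """Mean per-pixel categorical cross-entropy.

    probs  : (H, W, C) array of per-pixel class probabilities (softmax output)
    labels : (H, W) integer array of manual class labels in [0, C)
    """
    probs = np.clip(probs, eps, 1.0)          # avoid log(0)
    num_classes = probs.shape[-1]
    one_hot = np.eye(num_classes)[labels]     # (H, W, C) one-hot ground truth
    per_pixel = -(one_hot * np.log(probs)).sum(axis=-1)
    return float(per_pixel.mean())

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    water = rng.random((8, 8))
    fat = rng.random((8, 8))
    x = stack_channels(water, fat)            # network input for one slice
    # Hypothetical label map: 0 = background, 1..4 = ROI1..ROI4 (assumed).
    labels = rng.integers(0, 5, size=(8, 8))
    uniform = np.full((8, 8, 5), 0.2)         # an uninformative prediction
    print(x.shape, categorical_cross_entropy(uniform, labels))
```

A prediction that assigns equal probability to every class gives a loss of ln(C), which is a convenient sanity check before training starts.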

The CNN was then tested using the independent set of MRI images acquired from the 3 subjects (6 thigh volumes) with 3 repeated scans. The segmentation accuracy was evaluated using the Dice index. In addition, fat fraction quantification was performed on the proton density fat fraction (PDFF) maps. The Pearson correlation coefficient was calculated between the mean PDFF values (meanPDFF) obtained with the manual ROIs and those obtained with the automated CNN-derived ROIs, using the 1st scan of each subject. The reproducibility of fat fraction estimation was determined by the intraclass correlation coefficients (ICCs) of meanPDFF across the 3 repeated measures.
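The evaluation metrics named above can be sketched in a few lines of NumPy. This is an illustrative sketch rather than the authors' code: the function names are hypothetical, and because the abstract does not state which ICC form was used, the two-way random-effects, absolute-agreement, single-measure form ICC(2,1) is assumed here.

```python
import numpy as np

def dice(mask_a, mask_b):
    """Dice index between two binary masks: 2|A n B| / (|A| + |B|)."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0  # convention chosen here: two empty masks agree perfectly
    return float(2.0 * np.logical_and(a, b).sum() / denom)

def mean_pdff(pdff_map, mask):
    """Mean PDFF over the voxels inside an ROI mask."""
    return float(np.asarray(pdff_map)[np.asarray(mask, dtype=bool)].mean())

def pearson_r(x, y):
    """Pearson correlation between two sets of meanPDFF values."""
    return float(np.corrcoef(x, y)[0, 1])

def icc_2_1(y):
    """ICC(2,1) for an (n_targets, k_repeats) table of measurements.

    Assumption: the ICC form is not given in the abstract; ICC(2,1)
    (two-way random effects, absolute agreement, single measure) is used.
    """
    y = np.asarray(y, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_rows = k * ((y.mean(axis=1) - grand) ** 2).sum()   # between targets
    ss_cols = n * ((y.mean(axis=0) - grand) ** 2).sum()   # between repeats
    ss_err = ((y - grand) ** 2).sum() - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return float((ms_rows - ms_err)
                 / (ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n))
```

For one ROI, `icc_2_1` would be called on a table with one row per thigh volume and one column per repeated scan, with each cell holding the meanPDFF returned by `mean_pdff`.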

Results

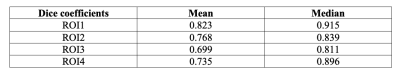

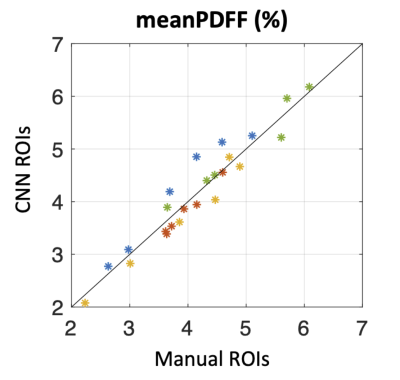

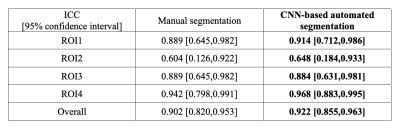

Figure 1 shows the MRI images and the segmentation results of a representative slice from the independent test set. Visually, the manual and automated segmentations show satisfactory agreement. Table 1 summarizes the mean and median Dice coefficients between manual and automated segmentations for ROI1 to ROI4. Note that some ROIs are very small and that some regions (particularly at either end of the muscle, near its insertion/attachment) can be segmented less accurately, both of which lower the Dice coefficient. A Dice coefficient of 0.7 or higher is generally considered to indicate good agreement in such scenarios[9, 10]. In addition, our median Dice coefficients were >0.8 for all ROIs.

Figure 2 shows that the meanPDFF values of all 1st scans calculated using manual and automated masks were strongly correlated, with Pearson ρ=0.958 (p<<0.0001). Table 2 reports the ICCs of meanPDFF in the four ROIs. Although both segmentations yielded moderate reproducibility in ROI2, our CNN-based automated segmentation method produced fat fraction measures that were comparable (in ROI3) or more reproducible (in the other ROIs) relative to manual segmentation.

Discussion

In this work, we adopt the U-net architecture to train a CNN using fat-water decomposition MRI for thigh muscle segmentation. Compared to the time-consuming and labor-intensive manual segmentation, this fully automated segmentation method is able to delineate four clinically relevant muscle groups with encouraging accuracy in a few seconds per volume. In terms of fat fraction quantification, the automated segmentation not only generated fat fraction measures strongly correlated with those determined by manual segmentation, but more importantly, demonstrated overall higher reproducibility in fat fraction estimation.

Conclusion

As the need for segmentation is a primary challenge in the quantitative analysis of thigh muscle MRI, the proposed CNN-based segmentation, which provides reproducible fat fraction estimation, would be beneficial in clinical practice, for example for quantifying muscle fat associated with ageing or with conditions such as neuromuscular disorders. Moreover, a reproducible measure allows serial monitoring, which is particularly useful for detecting disease progression or for evaluating treatment efficacy after an intervention.

Acknowledgements

No acknowledgement found.

References

1. Burakiewicz J, Sinclair CD, Fischer D, Walter GA, Kan HE, Hollingsworth KG. Quantifying fat replacement of muscle by quantitative MRI in muscular dystrophy. J Neurol. 2017;264(10):2053-2067.

2. Morrow JM, Sinclair CD, Fischmann A, et al. MRI biomarker assessment of neuromuscular disease progression: a prospective observational cohort study. Lancet Neurol. 2016;15(1):65-77.

3. Wokke B, van den Bergen J, Aartsma-Rus A, Webb A, Verschuuren J, Kan H. Quantitative assessment of the inter-and intra-muscle fat fraction variability in Duchenne muscular dystrophy patients. Proceedings of the 19th Annual Meeting of ISMRM; 2011. p. 269.

4. Rodrigues R, Pinheiro AM. Segmentation of Skeletal Muscle in Thigh Dixon MRI Based on Texture Analysis. arXiv preprint. 2019.

5. Mesbah S, Shalaby AM, Stills S, et al. Novel stochastic framework for automatic segmentation of human thigh MRI volumes and its applications in spinal cord injured individuals. PLoS One. 2019;14(5):e0216487.

6. de Mello R, Ma Y, Ji Y, Du J, Chang EY. Quantitative MRI Musculoskeletal Techniques: An Update. AJR Am J Roentgenol. 2019;213(3):524-533.

7. Schlaeger S, Freitag F, Klupp E, et al. Thigh muscle segmentation of chemical shift encoding-based water-fat magnetic resonance images: The reference database MyoSegmenTUM. PLoS One. 2018;13(6):e0198200.

8. Ronneberger O, Fischer P, Brox T. U-net: Convolutional networks for biomedical image segmentation. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2015. p. 234-241.

9. Spuhler KD, Ding J, Liu C, et al. Task-based assessment of a convolutional neural network for segmenting breast lesions for radiomic analysis. Magn Reson Med. 2019;82(2):786-795.

10. Carillo V, Cozzarini C, Perna L, et al. Contouring variability of the penile bulb on CT images: quantitative assessment using a generalized concordance index. Int J Radiat Oncol Biol Phys. 2012;84(3):841-846.

Figures