0152

Retrospective Fat Suppression for Lung Radiotherapy Planning with Deep Learning Convolutional Neural Networks

¹University of Manchester, Manchester, United Kingdom; ²The Christie NHS Trust, Manchester, United Kingdom

Synopsis

We investigated three deep learning techniques for retrospective fat suppression in T2-weighted imaging of lung cancer: two U-nets, trained with either an L1 cost function or a conditional GAN (cGAN), and a CycleGAN. The networks were trained on 893 image pairs, and 16 test images were then scored by three oncologists and a research radiographer. The L1 U-net and the CycleGAN scored 73% and 72% respectively, relative to a reference score of 80% assigned to prospectively fat-saturated images, and the scorers indicated they would be happy to use the generated images for radiotherapy target delineation.

Introduction

The recent introduction of MR-guided radiotherapy accelerators has driven a considerable increase in interest in MR-based planning for radiotherapy treatments. There is mounting evidence that the combination of soft-tissue contrasts available in MRI can improve the accuracy and consensus of tumour and organ-at-risk (OAR) delineation [1]; however, this extra information comes at the cost of a long scanning session. Because planning scans are typically performed in an uncomfortable treatment position, e.g. with the arms above the head or with restraints to prevent motion, scan time must be kept to a minimum for patients to tolerate the examination.

For lung radiotherapy planning, the Elekta MR-Linac consortium currently recommends acquiring several MR sequences, including T2-weighted images both with and without fat suppression (FS). The non-fat-suppressed (NFS) T2 images are used to delineate various OARs within the mediastinum, whereas the FS images are considered preferable for tumour delineation, particularly for central tumours that may border the mediastinal fat. Apart from the fat regions, however, the two image types have similar contrast. We therefore hypothesised that FS images could be generated retrospectively from the NFS ones, removing the need to acquire FS images and shortening the planning exam.

Deep learning with convolutional neural networks (CNNs) has shown considerable promise in style transfer, where the content of an image is translated from one representation to another, for example converting a photograph into a painting [2]. In this study, we evaluate three different CNNs for retrospective fat suppression in T2-weighted lung images.

Methods

Twenty-one lung cancer patients were each scanned twice on a 1.5 T Aera scanner (Siemens, Erlangen, Germany). At each session, 2D TSE T2-weighted images (TE/TR = 102/5200 ms, 30 slices of 3.5 mm thickness, matrix 320 × 320, 1.25 × 1.25 mm pixels, ~6 minutes) were acquired both with and without fat suppression. Not all patients completed both scans in both sessions; the final number of FS/NFS slice pairs was 909. We selected 16 slice pairs containing tumour as the observer test dataset, and the remaining 893 were used to train the networks.

In this study we employed the Pix2Pix U-net architecture [2] with 8 convolution/deconvolution layers. We considered two different optimisation functions: a simple L1 norm between the generated image and the reference FS image, and a conditional Generative Adversarial Network (cGAN). We also evaluated a CycleGAN model [3] with 9 ResNet blocks (figure 1).
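The abstract does not spell out the exact loss formulations, so the following is a minimal sketch assuming the standard definitions: the L1 objective is the mean absolute pixel difference to the reference FS image, and the cGAN discriminator scores (NFS input, FS image) pairs as real or generated. Images are flattened to lists of intensities, discriminator outputs are treated as probabilities, and all function names are hypothetical. The published pix2pix objective also adds a weighted L1 term to the adversarial loss; whether the cGAN variant here did so is not stated.

```python
import math

EPS = 1e-12  # guards against log(0) when a probability is exactly 0 or 1


def l1_loss(generated, reference):
    """Mean absolute difference between a generated FS image and its reference FS image.

    Both images are flat lists of pixel intensities of equal length.
    """
    if len(generated) != len(reference):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(g - r) for g, r in zip(generated, reference)) / len(generated)


def generator_adversarial_loss(d_fake):
    """Non-saturating generator loss: -mean(log D(x, G(x))).

    d_fake holds the discriminator's probabilities that each
    (NFS input, generated FS) pair is a real (NFS, FS) pair.
    """
    return -sum(math.log(max(p, EPS)) for p in d_fake) / len(d_fake)


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real (NFS, FS) pairs labelled 1, generated pairs labelled 0."""
    real_term = -sum(math.log(max(p, EPS)) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(max(1.0 - p, EPS)) for p in d_fake) / len(d_fake)
    return real_term + fake_term
```

The L1 term only ever compares a generated pixel with the pixel at the same position in the reference image, which is why misregistration between training pairs matters so much for both paired approaches.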

To evaluate the methods, four observers (three lung oncologists and an experienced MR radiographer) were each shown the reference FS images alongside the retrospective FS images generated by each algorithm. They scored each image for its suitability for tumour delineation, relative to a baseline score of 80% assigned to the reference image.
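The mean ± SD figures reported below can be summarised along these lines. This is a minimal sketch assuming that scores from all observers and all test slices are pooled and that the sample standard deviation is used; neither detail is stated in the abstract, and the example scores are hypothetical, not study data.

```python
import statistics

REFERENCE_SCORE = 80.0  # baseline score assigned to the prospectively fat-suppressed image


def summarise_scores(scores):
    """Mean and sample standard deviation of suitability scores (in %).

    Assumes scores from all observers and all test slices are pooled.
    """
    values = [float(s) for s in scores]
    return statistics.mean(values), statistics.stdev(values)


def fraction_of_reference(mean_score):
    """Express a mean score as a fraction of the reference image's baseline score."""
    return mean_score / REFERENCE_SCORE


# Hypothetical scores for illustration only (not study data).
example_scores = [70, 80, 60, 75]
mean, sd = summarise_scores(example_scores)
print(f"{mean:.0f} ± {sd:.0f}%  ({fraction_of_reference(mean):.0%} of reference)")
```

Expressing a method's mean as a fraction of the 80% baseline gives a direct reading of how close the retrospective images come to the prospectively acquired ones.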

Results

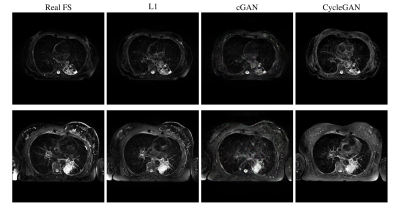

All three methods produced images with plausible FS contrast. The L1 method scored highest at 73±12%, with the CycleGAN method a close second at 72±14%, while the cGAN approach scored 68±15%. Although the CycleGAN method produced the sharpest images, two of the test slices it generated contained artefacts and received very low scores, whereas the L1 method was more robust across the complete set of slices. Figure 2 shows representative images from all three techniques, and Figure 3 shows examples of artefacts introduced by the CNNs.

Discussion

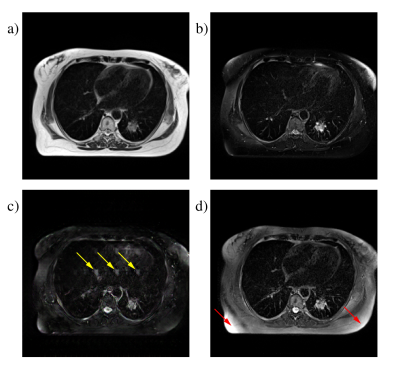

For CNNs trained on paired data, the quality of the spatial match between the input and target images is the most important factor determining achievable performance. Our images were acquired in the same scan session and were respiratory triggered, so the match is good for most images; however, some motion-related differences are inevitable, and cardiac motion in particular is impossible to control.

Mismatched pixels between the training image pairs reduce the quality of the generated images. For the L1 optimisation, the effect was to blur the generated images, as previously observed [4]. For the cGAN approach, the consequences were more severe: in many images we observed "hallucinated" features, where the CNN inserts fictitious but visually convincing elements (figure 3c).

CycleGAN produces the sharpest FS images. As shown in figure 1, applying the “Fat Suppression” and “Fat Restoration” generators in sequence must reproduce the original NFS image, so the intermediate FS image has to preserve all the fine details. Because CycleGAN does not use paired data, the mismatch problem also does not arise. On the other hand, the library of images used to train the discriminator is critical. Because a fraction of the true FS images contain imperfect fat saturation, the discriminator readily accepts generated FS images with the same artefact, leading to a much higher incidence of this artefact than in the real images (figure 3d). However, with a larger training database it would be possible to prune out images featuring artefacts, which could allow the retrospective approach to outperform real acquisitions by reducing, rather than increasing, the incidence of artefacts.
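The detail-preserving pressure comes from the cycle-consistency term of the CycleGAN objective [3], which penalises any difference between an image and its round trip through both generators. Below is a minimal sketch of that term only, assuming an L1 penalty on flat lists of intensities; the adversarial terms and their weighting are omitted, and the generator arguments are hypothetical stand-ins for the trained networks.

```python
def mean_abs_diff(a, b):
    """Mean absolute pixel difference between two equal-length flat images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(nfs_images, fs_images, suppress, restore):
    """L1 cycle-consistency term of a CycleGAN, averaged over images.

    suppress: hypothetical "Fat Suppression" generator, NFS -> FS
    restore:  hypothetical "Fat Restoration" generator, FS -> NFS
    The NFS and FS sets need not be paired.
    """
    forward = [mean_abs_diff(restore(suppress(x)), x) for x in nfs_images]
    backward = [mean_abs_diff(suppress(restore(y)), y) for y in fs_images]
    return sum(forward) / len(forward) + sum(backward) / len(backward)


if __name__ == "__main__":
    nfs = [[0.2, 0.9]]  # toy NFS image; 0.9 stands in for bright fat signal
    fs = [[0.1, 0.3]]   # toy FS image, unpaired with the NFS one

    # An invertible pair of mappings keeps all information: zero cycle loss.
    halve = lambda img: [0.5 * v for v in img]
    double = lambda img: [2.0 * v for v in img]
    print(cycle_consistency_loss(nfs, fs, halve, double))

    # Clipping bright signal discards detail that cannot be restored: positive loss.
    clip = lambda img: [min(v, 0.6) for v in img]
    identity = lambda img: list(img)
    print(cycle_consistency_loss(nfs, fs, clip, identity))
```

In the toy example, a "suppression" that simply throws information away is penalised, which is the mechanism behind the sharper CycleGAN outputs; note that nothing in this term prevents the discriminator from accepting artefacts that are present in its real FS training library.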

Conclusion

Retrospective fat suppression using CNNs can potentially replace prospective acquisitions in radiotherapy treatment planning. The L1 method and the CycleGAN both scored highly with oncologists: CycleGAN produces the sharpest results but can introduce artefacts, whereas the L1 method is more robust overall.

Acknowledgements

We thank Anna-Maria Shiarli for leading the development of the imaging study from which the data used in this project were obtained.

References

1. Pathmanathan A, McNair H, Schmidt M et al. Comparison of prostate delineation on multimodality imaging for MR-guided radiotherapy. BJR 2019; 92(1096):20180948-6

2. Isola P, Zhu J, Zhou T, Efros A. Image-to-Image Translation with Conditional Adversarial Networks. arXiv cs.CV 2016.

3. Zhu J, Park T, Isola P, Efros A. Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. arXiv cs.CV 2017.

4. Pathak D, Krähenbühl P, Donahue J et al. Context Encoders: Feature Learning by Inpainting. arXiv cs.CV 2016.

Figures