0136

Improving the reliability of pharmacokinetic parameters in dynamic contrast-enhanced MRI in gliomas: Deep learning approach

¹Korea Advanced Institute of Science and Technology, Daejeon, Korea, Republic of; ²Korea University College of Medicine, Seoul, Korea, Republic of; ³Seoul National University Hospital, Seoul, Korea, Republic of

Synopsis

The arterial input function (AIF) measured from DCE-MRI (AIF_DCE) is known to be sensitive to noise because the T1 contrast-enhanced MR signal intensity (SI) is relatively weak compared with the T2* SI of DSC-MRI. As a result, the pharmacokinetic (PK) parameters Ktrans, Ve, and Vp have low reliability. In this study, we developed a neural network model that generates an AIF resembling the AIF obtained from DSC-MRI (AIF_generated DSC), and we demonstrated that the accuracy and reliability of Ktrans and Ve can be improved by using AIF_generated DSC instead of AIF_DCE. Because no DSC-MRI acquisition is needed, the approach avoids additional gadolinium deposition in the brain.
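PK parameters such as Ktrans, Ve, and Vp are obtained by fitting a compartment model whose input is the AIF. The abstract does not name the model; the sketch below assumes the extended Tofts model, a common choice whose three parameters are exactly Ktrans, Ve, and Vp, and implements only the forward calculation of a tissue concentration curve from an AIF. `extended_tofts` is a hypothetical helper, and the toy AIF is illustrative, not patient data.

```python
import numpy as np


def extended_tofts(t, cp, ktrans, ve, vp):
    """Tissue concentration Ct(t) under the extended Tofts model (assumed here):

        Ct(t) = vp*Cp(t) + Ktrans * integral_0^t Cp(u) exp(-(Ktrans/ve)(t-u)) du

    t      : uniformly spaced sample times (min)
    cp     : plasma concentration curve, i.e. the AIF, sampled at t
    ktrans : volume transfer constant (1/min)
    ve     : extravascular extracellular volume fraction
    vp     : plasma volume fraction
    """
    t = np.asarray(t, dtype=float)
    cp = np.asarray(cp, dtype=float)
    dt = t[1] - t[0]
    kep = ktrans / ve                        # efflux rate constant (1/min)
    kernel = np.exp(-kep * (t - t[0]))       # impulse response of the EES compartment
    # Rectangle-rule approximation of the convolution integral, truncated to len(t)
    conv = np.convolve(cp, kernel)[: len(t)] * dt
    return vp * cp + ktrans * conv


# Hypothetical gamma-variate-like AIF (illustrative only)
t = np.arange(0.0, 5.0, 1.0 / 60.0)          # 5 min sampled every second
cp = 5.0 * t * np.exp(-t / 0.3)              # toy AIF in mM
ct = extended_tofts(t, cp, ktrans=0.1, ve=0.2, vp=0.05)

# The same parameters applied to a noisy AIF give a different tissue curve
rng = np.random.default_rng(0)
cp_noisy = cp + rng.normal(0.0, 0.05, cp.shape)
ct_noisy = extended_tofts(t, cp_noisy, ktrans=0.1, ve=0.2, vp=0.05)
```

Because the AIF appears in both the vascular term and the convolution, noise in AIF_DCE alters the whole model curve, and a fit to measured tissue data absorbs that error into the estimated parameters.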

Introduction

Dynamic contrast-enhanced (DCE) magnetic resonance imaging (MRI) has been validated for predicting the grade of astrocytomas and for differentiating pseudoprogression from true progression¹. More specifically, PK parameters derived from DCE-MRI, such as the volume transfer constant (Ktrans), the volume fraction of the extravascular extracellular space (Ve), and the volume fraction of the vascular plasma space (Vp), can evaluate not only tumor angiogenesis but also the permeability of the microcirculation. These parameters are derived by PK modeling, which describes how an intravenously injected gadolinium-based contrast agent distributes among tissue compartments over time. Deriving them robustly therefore requires a reliable arterial input function (AIF) that accurately reflects the time course of contrast-agent concentration in blood plasma. However, DCE-MRI is known to have low reliability², much of which is attributed to the poor reproducibility of the AIF measured from DCE-MRI (AIF_DCE). Because the signal intensity of T1 contrast-enhanced imaging is relatively low compared with the T2* signal intensity of dynamic susceptibility contrast (DSC) MRI, another perfusion MRI technique, AIF_DCE is sensitive to noise, and the resulting PK parameters have low reliability despite recent advancements. Using an AIF measured from DSC-MRI (AIF_DSC) instead would require acquiring both DSC- and DCE-MRI, which requires a higher-than-recommended dose of gadolinium-based contrast agent and can lead to deposition in the brain. The aim of this study was to use deep learning to generate a more accurate and reliable AIF for DCE-MRI of astrocytomas without additional contrast agent.

Methods
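The Methods paragraph converts each AIF time course into a spectrogram image and augments the training data eightfold. A minimal NumPy sketch of both steps is shown here. The window length, hop size, Hann window, two-channel (real, imaginary) layout, noise level, and the choice to add noise to the time course rather than to the spectrogram are all assumptions, because the abstract does not report them; `stft_image` and `augment_8fold` are hypothetical names.

```python
import numpy as np


def stft_image(signal, win_len=16, hop=4):
    """Short-time Fourier transform of a 1-D AIF time course, returned as a
    2-channel (real, imaginary) image of shape (2, n_freq, n_frames).
    win_len and hop are illustrative values, not the authors' settings."""
    window = np.hanning(win_len)
    pad = win_len // 2
    x = np.pad(np.asarray(signal, dtype=float), pad, mode="reflect")  # centre frames
    n_frames = 1 + (len(x) - win_len) // hop
    frames = np.stack([x[i * hop: i * hop + win_len] * window for i in range(n_frames)])
    spec = np.fft.rfft(frames, axis=1).T     # (n_freq, n_frames)
    return np.stack([spec.real, spec.imag])


def augment_8fold(aif_a, aif_b, noise_sd=0.01, rng=None):
    """Eightfold augmentation: 2 repeated AIF measurements
    x (clean, + white Gaussian noise) x (original, time-flipped spectrogram)."""
    rng = np.random.default_rng(0) if rng is None else rng
    images = []
    for aif in (aif_a, aif_b):
        for noisy in (False, True):
            sig = aif + rng.normal(0.0, noise_sd, aif.shape) if noisy else aif
            img = stft_image(sig)
            images.append(img)
            images.append(img[:, :, ::-1])   # flip along the time (frame) axis
    return np.stack(images)


# Hypothetical repeated measurements with 60 time points each (illustrative only)
tt = np.linspace(0.0, 5.0, 60)
aif_1 = 5.0 * tt * np.exp(-tt / 0.3)
aif_2 = aif_1 * 1.05                         # stand-in for the second manual AIF
batch = augment_8fold(aif_1, aif_2)          # shape (8, 2, 9, 16)
```

In a paired pix2pix setup, the same flip would have to be applied to the AIF_DCE input and its AIF_DSC target so that each pair stays aligned in time; the abstract does not say whether noise was added to inputs only or to both.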

A total of 386 patients with histopathologically diagnosed astrocytomas who underwent both DSC- and DCE-MRI preoperatively were enrolled in the study. AIFs were manually obtained from both sequences (AIF_DSC and AIF_DCE), twice for each sequence to examine reproducibility. A pix2pix model³, a conditional generative adversarial network (GAN), was developed on the training set (n = 260) with AIF_DCE as the input and AIF_DSC as the target to generate a synthetic AIF (AIF_generated DSC). Because the generator and discriminator are convolutional neural networks, each AIF time course was first transformed into a spectrogram image using the short-time Fourier transform. The generator, a convolutional encoder-decoder, denoises the input images and transforms them into target images, whereas the discriminator, a fully convolutional network, classifies the generated images as real or fake (Fig. 1). The data were augmented eightfold by combining the two repeated AIF measurements acquired for the reliability analysis, the addition of white Gaussian noise, and flipping of the spectrogram along the time axis (2 × 2 × 2). To stabilize training of the GAN model, a conditional Wasserstein distance with gradient penalty⁴ was used as the loss function. In the test set (n = 126), PK parameter maps (Ktrans, Ve, and Vp) were obtained from the tumor areas in the DCE-MRI images using each of AIF_DCE, AIF_DSC, and AIF_generated DSC. The diagnostic performance in differentiating high-grade from low-grade astrocytomas, assessed by receiver operating characteristic (ROC) analysis, and the reliability of the PK parameters, evaluated by the intraclass correlation coefficient (ICC), were compared among the three AIFs.

Results

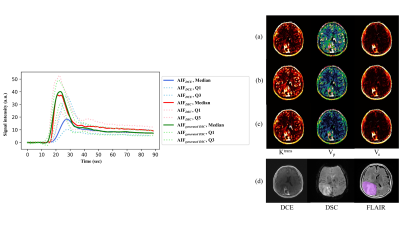
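The Results report reliability as ICCs between the two repeated AIF measurements and diagnostic performance as the area under the ROC curve. The abstract does not state which ICC form was used or how the AUCs were compared, and the p values are not reproduced here. The sketch below assumes ICC(2,1), the two-way random-effects, absolute-agreement, single-measurement form of Shrout and Fleiss, and computes the AUC as the Mann-Whitney probability that a high-grade case scores higher than a low-grade one. `icc_2_1` and `auc_mann_whitney` are hypothetical helpers, and the numbers in the usage lines are made up.

```python
import numpy as np


def icc_2_1(x):
    """ICC(2,1) of Shrout & Fleiss: two-way random effects, absolute agreement,
    single measurement. x has shape (n_subjects, k_repeats), e.g. mean Ktrans
    per patient computed with the first and the second manually drawn AIF."""
    x = np.asarray(x, dtype=float)
    n, k = x.shape
    grand = x.mean()
    row_means = x.mean(axis=1)               # per-subject means
    col_means = x.mean(axis=0)               # per-repeat means
    msr = k * np.sum((row_means - grand) ** 2) / (n - 1)   # between subjects
    msc = n * np.sum((col_means - grand) ** 2) / (k - 1)   # between repeats
    resid = x - row_means[:, None] - col_means[None, :] + grand
    mse = np.sum(resid ** 2) / ((n - 1) * (k - 1))          # residual
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


def auc_mann_whitney(scores_pos, scores_neg):
    """Area under the ROC curve as P(score of a positive case > score of a
    negative case), counting ties as one half."""
    pos = np.asarray(scores_pos, dtype=float)[:, None]
    neg = np.asarray(scores_neg, dtype=float)[None, :]
    return float(np.mean((pos > neg) + 0.5 * (pos == neg)))


# Hypothetical values for illustration only (not study data)
ktrans_repeats = [[0.10, 0.11], [0.05, 0.06], [0.20, 0.18], [0.02, 0.03]]
print(icc_2_1(ktrans_repeats))
print(auc_mann_whitney([0.20, 0.15, 0.09], [0.04, 0.06, 0.10]))
```

ICC(2,1) penalizes systematic offsets between the two AIF measurements as well as random scatter, which suits a reproducibility question; a consistency form such as ICC(3,1) would ignore such offsets.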

AIF_generated DSC did not differ qualitatively from the corresponding AIF_DSC (Fig. 2). Mean Ktrans and Ve derived with AIF_generated DSC differentiated high-grade from low-grade astrocytomas significantly more accurately than those derived with AIF_DCE, with an area under the ROC curve (AUC) of 0.879 vs 0.715 for Ktrans (p = 0.0422) and 0.866 vs 0.696 for Ve (p = 0.0488) (Fig. 3). Ktrans and Ve also showed higher ICCs with AIF_generated DSC than with AIF_DCE: 0.912 vs 0.383 for Ktrans and 0.682 vs 0.655 for Ve (Table 1).

Conclusions

A neural network model can generate an accurate and reliable AIF_generated DSC from DCE-MRI, yielding robust PK parameters for differentiating astrocytoma grades.

Acknowledgements

National Research Foundation of Korea (NRF-2016M3C7A1914448 to K.S.C., NRF-2017M3C7A1031331 to B.J., and NRF-2019K1A3A1A77079379 to S.H.C.); Creative-Pioneering Researchers Program through Seoul National University (SNU) to S.H.C.; and Project Code (IBS-R006-D1) to S.H.C.

References

1. Yun TJ, et al. Glioblastoma treated with concurrent radiation therapy and temozolomide chemotherapy: differentiation of true progression from pseudoprogression with quantitative dynamic contrast-enhanced MR imaging. Radiology 274, 830-840 (2015).

2. Heye T, et al. Reproducibility of dynamic contrast-enhanced MR imaging. Part I. Perfusion characteristics in the female pelvis by using multiple computer-aided diagnosis perfusion analysis solutions. Radiology 266, 801-811 (2013).

3. Isola P, Zhu J-Y, Zhou T, Efros AA. Image-to-image translation with conditional adversarial networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2017).

4. Gulrajani I, Ahmed F, Arjovsky M, Dumoulin V, Courville AC. Improved training of Wasserstein GANs. In: Advances in Neural Information Processing Systems (2017).

Figures