0071

Fully Convolutional Networks for Adipose Tissue Segmentation Using Free-Breathing Abdominal MRI in Healthy and Overweight Children

¹Radiological Sciences, University of California, Los Angeles, Los Angeles, CA, United States
²Bioengineering, University of California, Los Angeles, Los Angeles, CA, United States
³Pediatrics, University of California, Los Angeles, Los Angeles, CA, United States

Synopsis

The volume and fat content of subcutaneous and visceral adipose tissue (SAT and VAT) are strongly associated with metabolic diseases in overweight children. Currently, the gold standard for measuring SAT and VAT is manual annotation, which is time-consuming. Although several studies have shown promising results using machine learning and deep learning to segment SAT and VAT in adults, research on deep learning-based SAT and VAT segmentation in children is lacking. Here, we investigated the performance of 3 deep learning network architectures for segmenting SAT and VAT in children.

Introduction

Body composition analysis, especially adipose tissue quantification, provides biomarkers for detecting future risk of cardiometabolic diseases such as hypertension, type 2 diabetes, and obesity [1-4]. The volume and fat content of subcutaneous and visceral adipose tissue (SAT and VAT) are strongly associated with metabolic diseases [5]. Currently, SAT and VAT are annotated manually on MR images or proton-density fat fraction (PDFF) maps, which is time-consuming. Previous studies have shown promising results using machine learning and deep learning to segment SAT and VAT in mice and adults [6-10]. However, research on deep learning-based SAT and VAT segmentation in children is lacking. Here, we investigated the performance of 3 deep learning networks for segmenting SAT and VAT on free-breathing (FB) abdominal PDFF maps in children.

Methods

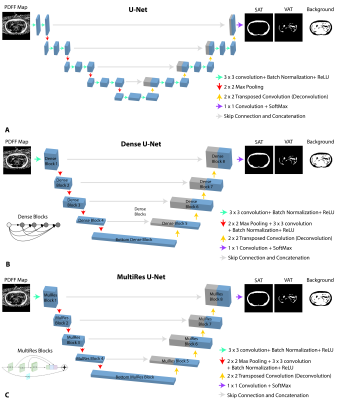

Networks: Three fully convolutional neural networks, 2D U-Net [13], 2D Dense U-Net [15], and 2D U-Net with MultiRes U-Net blocks [14] (Figure 1), were trained using abdominal FB-MRI PDFF maps [5,11-12]. All networks used the same skip connections and concatenations, and the same numbers of filter channels ([32, 64, 128, 256, 512]). Because the numbers of SAT and VAT pixels were imbalanced, a weighted multi-class Dice loss (WDL) [16] was used over the 3 classes (SAT, VAT, and background).

Datasets: PDFF maps from healthy and overweight children were manually annotated by a researcher, confirmed by a pediatric radiologist, and divided into training (85% of the data) and testing (15% of the data) sets (Table 1A). The testing set included only overweight children. The imaging parameters for the FB-MRI scans are listed in Table 1B. The hyperparameters (Table 1C) were tuned over a range of values (training batch size 5–10, learning rate 0.0005–0.01, number of epochs 5–20) to minimize the training loss.
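The abstract does not state the exact class weighting used in the WDL. As an illustration only, the sketch below assumes the inverse-squared-volume weighting of the generalised Dice loss in [16], written in NumPy rather than in a training framework. The function name and the handling of classes that are missing from a batch are assumptions made for this sketch.

```python
import numpy as np


def weighted_dice_loss(probs: np.ndarray, onehot: np.ndarray, eps: float = 1e-6) -> float:
    """Class-weighted (generalised) Dice loss, assuming the weighting of Sudre et al.

    probs:  softmax outputs, shape (N, C, H, W); here C = 3 (background, SAT, VAT).
    onehot: one-hot reference labels with the same shape as probs.
    """
    axes = (0, 2, 3)  # sum over batch and spatial axes, keep one value per class
    ref_volume = onehot.sum(axis=axes)

    # Weight each class by 1 / (reference pixel count)^2, so small classes such as
    # VAT contribute as much to the loss as large ones such as background.
    present = ref_volume > 0
    weights = np.zeros_like(ref_volume, dtype=float)
    weights[present] = 1.0 / ref_volume[present] ** 2
    if present.any():
        # Assumption: classes absent from the batch reuse the largest weight
        # instead of receiving an infinite weight.
        weights[~present] = weights[present].max()

    intersection = (probs * onehot).sum(axis=axes)
    denominator = (probs + onehot).sum(axis=axes)
    dice = 2.0 * np.sum(weights * intersection) / (np.sum(weights * denominator) + eps)
    return float(1.0 - dice)
```

In actual training, the same quantity would be computed on framework tensors so that it can be differentiated; the NumPy version only shows how the weights rebalance SAT, VAT, and background.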

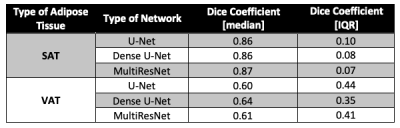

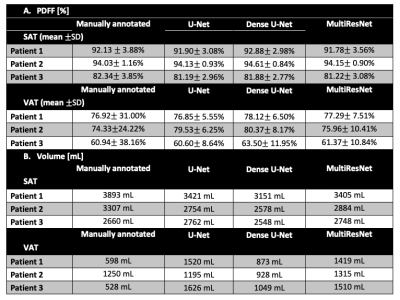

Evaluations: The Dice coefficients for SAT (DICE_SAT) and VAT (DICE_VAT) were calculated separately for each 2D slice in the testing set and reported as median and interquartile range (IQR). The SAT and VAT volumes were calculated for each subject in the testing set, as was the mean ± standard deviation of PDFF within SAT and VAT (PDFF_SAT, PDFF_VAT).
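The evaluation steps above can be sketched as follows. This is a minimal NumPy illustration, not the authors' code: the label encoding (0 = background, 1 = SAT, 2 = VAT), the convention that Dice equals 1.0 when both masks of a slice are empty, and any voxel spacing passed in are hypothetical. The abstract does not specify how slices without SAT or VAT were handled.

```python
import numpy as np

SAT, VAT = 1, 2  # hypothetical label encoding; 0 = background


def dice(pred: np.ndarray, ref: np.ndarray) -> float:
    """Dice coefficient of two binary masks; defined here as 1.0 when both are empty."""
    pred, ref = pred.astype(bool), ref.astype(bool)
    total = pred.sum() + ref.sum()
    if total == 0:
        return 1.0
    return float(2.0 * np.logical_and(pred, ref).sum() / total)


def per_slice_dice(pred_labels: np.ndarray, ref_labels: np.ndarray, label: int) -> list:
    """Dice for one tissue class on every 2D slice of (slices, H, W) label volumes."""
    return [dice(p == label, r == label) for p, r in zip(pred_labels, ref_labels)]


def median_iqr(values) -> tuple:
    """Median and interquartile range (Q3 - Q1) of per-slice scores."""
    q1, med, q3 = np.percentile(values, [25, 50, 75])
    return float(med), float(q3 - q1)


def volume_ml(labels: np.ndarray, label: int, voxel_mm: tuple) -> float:
    """Tissue volume in mL: voxel count times voxel volume (mm^3), divided by 1000."""
    return float(np.count_nonzero(labels == label) * np.prod(voxel_mm) / 1000.0)


def pdff_mean_sd(pdff: np.ndarray, labels: np.ndarray, label: int) -> tuple:
    """Mean and (population) standard deviation of PDFF inside one tissue class."""
    values = pdff[labels == label]
    return float(values.mean()), float(values.std())
```

Per subject, `volume_ml` and `pdff_mean_sd` would be applied to the full 3D label volume, while `per_slice_dice` scores from all test subjects would be pooled before calling `median_iqr`.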

Results

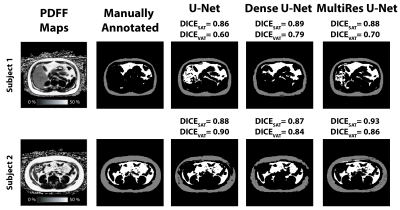

Figure 2 shows example results from all 3 networks for 2 overweight children. For the selected slice from subject 1, all 3 networks yielded similar DICE_SAT, whereas DICE_VAT was lower for U-Net than for Dense U-Net and MultiRes U-Net. For the selected slice from subject 2, MultiRes U-Net achieved the highest DICE_SAT and U-Net achieved the highest DICE_VAT. MultiRes U-Net and Dense U-Net achieved the highest median and smallest IQR for DICE_SAT and DICE_VAT, respectively (Table 2). The mean PDFF_SAT and PDFF_VAT measured by all 3 networks were similar to one another and close to the manually annotated reference for all testing subjects (Table 3A). The standard deviations of PDFF_SAT were also very similar to those of the manual annotation. Table 3B shows the SAT and VAT volume measurements. All 3 networks yielded SAT volumes comparable to the manual annotation, whereas all 3 yielded larger VAT volumes than the manual annotation.

Discussion

Previous studies evaluated the performance of various neural networks for SAT and VAT segmentation in mice [6] using water maps and in adults [7,10] using breath-hold PDFF maps. Here, we assessed the performance of U-Net, Dense U-Net, and MultiRes U-Net for SAT and VAT segmentation using abdominal FB-MRI PDFF maps in children.

Dense U-Net and MultiRes U-Net slightly outperformed U-Net in terms of Dice coefficients. All 3 networks yielded mean PDFF_SAT and PDFF_VAT values very similar to those of the manual annotation. Overall, all 3 networks yielded lower Dice coefficients for VAT than for SAT, indicating the difficulty of segmenting small structures and fine details. We also observed that the networks overestimated VAT volume (Table 3B).

Our study has limitations. Compared with adults, we observed larger variation in VAT distribution in children; therefore, more training data are needed. Although WDL was used to account for the class imbalance between SAT and VAT, it might not fully capture the characteristics of VAT in children. Loss functions that give more weight to small, unconnected structures might improve VAT segmentation [17]. Furthermore, the performance of MultiRes U-Net could be improved with additional ResPath skip and concatenation connections, which reduce the semantic gap between low- and high-level features [14]. The 3D context across slices could also be incorporated using 3D U-Net-based networks [18] or a 2D U-Net combined with recurrent neural networks. Lastly, k-fold cross-validation could improve hyperparameter tuning.
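The k-fold cross-validation suggested above would need to split the data by child rather than by slice, because neighbouring slices from one subject are highly similar. A minimal sketch under that assumption follows; the function name, fold count, and seed are hypothetical, and this is not part of the reported method.

```python
import numpy as np


def subject_kfold(subject_ids, k: int = 5, seed: int = 0):
    """Yield (train_subjects, val_subjects) for k folds, split by subject, not by slice.

    subject_ids may contain repeats (one entry per 2D slice); every slice of a
    given child therefore lands in the same fold, which avoids leakage between
    neighbouring, highly similar slices.
    """
    if k < 2:
        raise ValueError("k must be at least 2")
    subjects = np.array(sorted(set(subject_ids)))
    if k > len(subjects):
        raise ValueError("k cannot exceed the number of subjects")
    rng = np.random.default_rng(seed)
    rng.shuffle(subjects)
    folds = np.array_split(subjects, k)
    for i, val in enumerate(folds):
        train = np.concatenate([f for j, f in enumerate(folds) if j != i])
        yield train.tolist(), val.tolist()
```

Each candidate hyperparameter set (batch size, learning rate, number of epochs) would then be trained once per fold and ranked by its mean validation loss, rather than by training loss alone.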

Conclusion

We assessed the performance of 3 neural networks (U-Net, Dense U-Net, and MultiRes U-Net) for segmenting SAT and VAT on free-breathing abdominal PDFF maps acquired from healthy and overweight children. The current networks may already be useful for semi-automated segmentation of adipose tissue and for measuring mean PDFF_SAT and PDFF_VAT.

Acknowledgements

This study was supported in part by an Exploratory Research Grant from the UCLA Department of Radiological Sciences. The authors thank Dr. Joanna Yeh, the study coordinators, and MRI technologists at UCLA.

References

[1] Linge, Jennifer, et al. "Body composition profiling in the UK biobank imaging study." Obesity 26.11 (2018): 1785-1795.

[2] Després, Jean-Pierre, and Isabelle Lemieux. "Abdominal obesity and metabolic syndrome." Nature 444.7121 (2006): 881.

[3] Saelens, Brian E., et al. "Visceral abdominal fat is correlated with whole-body fat and physical activity among 8-y-old children at risk of obesity." The American journal of clinical nutrition 85.1 (2007): 46-53.

[4] Shuster, A., et al. "The clinical importance of visceral adiposity: a critical review of methods for visceral adipose tissue analysis." The British journal of radiology 85.1009 (2012): 1-10.

[5] Ly, Karrie V., et al. "Free-breathing Magnetic Resonance Imaging Assessment of Body Composition in Healthy and Overweight Children: An Observational Study." Journal of pediatric gastroenterology and nutrition 68.6 (2019): 782-787.

[6] Grainger, Andrew T., et al. "Deep learning-based quantification of abdominal fat on magnetic resonance images." PloS one 13.9 (2018): e0204071.

[7] Langner, Taro, et al. "Fully convolutional networks for automated segmentation of abdominal adipose tissue depots in multicenter water–fat MRI." Magnetic resonance in medicine 81.4 (2019): 2736-2745.

[8] Hui, Steve CN, et al. "Automated segmentation of abdominal subcutaneous adipose tissue and visceral adipose tissue in obese adolescent in MRI." Magnetic resonance imaging 45 (2018): 97-104.

[9] Sadananthan, Suresh Anand, et al. "Automated segmentation of visceral and subcutaneous (deep and superficial) adipose tissues in normal and overweight men." Journal of Magnetic Resonance Imaging 41.4 (2015): 924-934.

[10] Estrada, Santiago, et al. "FatSegNet: A Fully Automated Deep Learning Pipeline for Adipose Tissue Segmentation on Abdominal Dixon MRI." arXiv preprint arXiv:1904.02082 (2019).

[11] Armstrong, Tess, et al. "Free‐breathing liver fat quantification using a multiecho 3 D stack‐of‐radial technique." Magnetic resonance in medicine 79.1 (2018): 370-382.

[12] Armstrong, Tess, et al. "Free-breathing quantification of hepatic fat in healthy children and children with nonalcoholic fatty liver disease using a multi-echo 3-D stack-of-radial MRI technique." Pediatric radiology 48.7 (2018): 941-953.

[13] Ronneberger, Olaf, Philipp Fischer, and Thomas Brox. "U-net: Convolutional networks for biomedical image segmentation." International Conference on Medical image computing and computer-assisted intervention. Springer, Cham, 2015.

[14] Ibtehaz, Nabil, and M. Sohel Rahman. "MultiResUNet: Rethinking the U-Net architecture for multimodal biomedical image segmentation." Neural Networks 121 (2020): 74-87.

[15] Huang, Gao, et al. "Densely connected convolutional networks." Proceedings of the IEEE conference on computer vision and pattern recognition. 2017.

[16] Sudre, Carole H., et al. "Generalised dice overlap as a deep learning loss function for highly unbalanced segmentations." Deep learning in medical image analysis and multimodal learning for clinical decision support. Springer, Cham, 2017. 240-248.

[17] Roy, Abhijit Guha, et al. "QuickNAT: A fully convolutional network for quick and accurate segmentation of neuroanatomy." NeuroImage 186 (2019): 713-727.

[18] Çiçek, Özgün, et al. "3D U-Net: learning dense volumetric segmentation from sparse annotation." International conference on medical image computing and computer-assisted intervention. Springer, Cham, 2016.
