4647

LORAKI: Reconstruction of Undersampled k-space Data using Scan-Specific Autocalibrated Recurrent Neural Networks

1Electrical Engineering, University of Southern California, Los Angeles, CA, United States, 2Electrical Engineering, Indian Institute of Technology (IIT) Kanpur, Kanpur, India

Synopsis

We introduce LORAKI, a novel MRI reconstruction method that bridges two powerful existing approaches (LORAKS and RAKI). Like RAKI (a deep learning extension of GRAPPA), LORAKI trains a scan-specific autocalibrated convolutional neural network (which only relies on autocalibration data, and does not require external training data) to interpolate missing k-space samples. However, unlike RAKI, LORAKI is based on a recurrent convolutional neural network architecture that is motivated by the iterated convolutional structure of a certain LORAKS algorithm. LORAKI is very flexible and can accommodate arbitrary k-space sampling patterns. Experimental results suggest LORAKI can have better reconstruction performance than state-of-the-art methods.

Introduction

Convolutional interpolation of unsampled k-space data is very successful in MRI reconstruction [1-10] and is essential to methods like GRAPPA [1], SPIRiT [2], and LORAKS [5-8]. Recently, nonlinear convolutional k-space interpolation methods have drawn attention [11-14], particularly in the form of deep neural networks [12-14].

RAKI [12] is a deep-learning extension of GRAPPA that trains a convolutional neural network (CNN) for undersampled k-space reconstruction. Unlike other deep learning approaches, RAKI has the advantage of being autocalibrated: the CNN is scan-specific and is trained only from autocalibration (ACS) data, without needing external training data.

In this work, we develop a scan-specific autocalibrated deep-learning extension of a different convolutional interpolation method: LORAKS [5-8]. Unlike GRAPPA, LORAKS uses iterative reconstruction algorithms, which leads to a very different CNN architecture. Specifically, our new approach, called LORAKI, uses a recurrent neural network (RNN) structure instead of the more traditional CNN architecture used by RAKI. Compared to RAKI, one of the potential advantages of LORAKI is that it is more compatible with a wide range of different k-space sampling patterns. In addition, since LORAKS leverages more constraints than GRAPPA does (i.e., LORAKS leverages support, phase, and parallel imaging constraints simultaneously [5-8]), LORAKI also inherits these capabilities.

Theory and Methods

GRAPPA can be viewed as a single-layer CNN without any nonlinearities. The RAKI approach extends this idea by adding nonlinearities (rectified linear units, or ReLUs) and increasing the number of convolutional layers [12].
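In symbols, the contrast between the two can be written as follows. The notation is illustrative and not taken from the cited papers: d_zp is assumed to denote zero-filled multi-coil k-space data, w and w_1, ..., w_L are assumed to denote multi-channel convolution kernels, and L is an unspecified number of layers.

```latex
% GRAPPA viewed as one linear convolutional layer
\hat{d}_{\mathrm{GRAPPA}} = w * d_{\mathrm{zp}}

% RAKI-style network: L convolutional layers with ReLUs between them
% (the final layer is left linear)
\hat{d}_{\mathrm{RAKI}} = w_L * \mathrm{ReLU}\bigl( \cdots \, \mathrm{ReLU}( w_1 * d_{\mathrm{zp}} ) \, \cdots \bigr)
```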

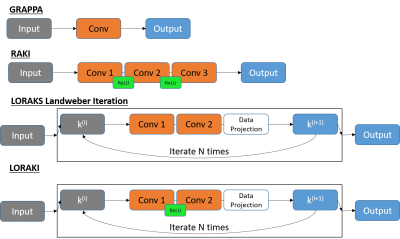

For the present work, a key observation is that if Autocalibrated-LORAKS (AC-LORAKS) reconstruction [7] is performed using exact data consistency constraints together with the Landweber iterative algorithm [15], then the resulting algorithm also has the structure of an iterated two-layer CNN (i.e., an RNN) without nonlinearities. Motivated by this structure, our new LORAKI approach is obtained by adding nonlinearities (ReLUs) to this network architecture. The network architectures for GRAPPA, RAKI, AC-LORAKS with Landweber iteration, and LORAKI are shown in Fig. 1.
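The recurrence described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: it assumes 1-D k-space, real-valued channels, and a fixed step size, and the names `conv1d`, `recurrent_recon`, and `step` are hypothetical. With `nonlinear=False` and `w2` chosen as the adjoint of `w1`, each pass is a Landweber-type gradient step on the squared norm of the first convolution's output, followed by exact data consistency; with `nonlinear=True`, a ReLU sits between the two convolutions.

```python
def conv1d(x, w):
    """Zero-padded 'same' 1-D multi-channel cross-correlation.

    x: list of C_in channels, each a list of N floats.
    w: w[o][c] is a list of K taps (K odd) from input channel c to output o.
    """
    n, half = len(x[0]), len(w[0][0]) // 2
    out = []
    for taps_per_input in w:
        row = []
        for i in range(n):
            acc = 0.0
            for c, taps in enumerate(taps_per_input):
                for j, t in enumerate(taps):
                    p = i + j - half
                    if 0 <= p < n:
                        acc += t * x[c][p]
            row.append(acc)
        out.append(row)
    return out


def relu(x):
    return [[max(v, 0.0) for v in ch] for ch in x]


def recurrent_recon(d_zp, mask, w1, w2, step=0.5, n_iter=5, nonlinear=True):
    """Iterate a two-layer convolutional update with exact data consistency.

    d_zp: zero-filled k-space channels; mask[i] is True where sample i was measured.
    step and n_iter are illustrative defaults (assumptions).
    """
    z = [ch[:] for ch in d_zp]
    for _ in range(n_iter):
        h = conv1d(z, w1)
        if nonlinear:
            h = relu(h)  # the only difference from the linear iteration
        g = conv1d(h, w2)
        # Update unsampled locations; re-impose measured samples exactly.
        z = [[d_zp[c][i] if mask[i] else z[c][i] - step * g[c][i]
              for i in range(len(mask))]
             for c in range(len(z))]
    return z
```

The same two sets of kernels are reused in every pass, which is what makes the structure recurrent rather than a deeper feed-forward network. In a trained network the kernels would be learned from ACS data rather than fixed in advance.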

Because complex numbers are not supported very well by existing deep learning software frameworks, the input data was separated into real and imaginary parts, which were treated as different channels. To take advantage of LORAKS' ability to model phase constraints [5-8] for partial Fourier acquisitions without invoking the complexities associated with the LORAKS S-matrix (which imposes support, phase, and parallel imaging constraints), we also considered the use of virtual conjugate coils (VCCs) [8,16,17].
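The two preprocessing steps can be illustrated with a short sketch. It makes several assumptions that are not stated in the text: the function names are hypothetical, the channel ordering (all real parts, then all imaginary parts) is arbitrary, and the virtual coils are formed by conjugating each coil's k-space and reflecting it through the origin, with the k-space origin taken to be the central index of an odd-sized grid. Even-sized grids would need an extra one-sample shift.

```python
def split_real_imag(kspace):
    """Turn C complex coils into 2*C real channels (real parts first).

    kspace: list of coils, each a 2-D list (rows of complex samples).
    """
    real = [[[v.real for v in row] for row in coil] for coil in kspace]
    imag = [[[v.imag for v in row] for row in coil] for coil in kspace]
    return real + imag


def add_virtual_conjugate_coils(kspace):
    """Append one virtual coil per physical coil: conj(s(-k)).

    Reversing both axes implements k -> -k only when the origin is the
    central index of an odd-sized grid (assumption).
    """
    virtual = [[[v.conjugate() for v in reversed(row)]
                for row in reversed(coil)]
               for coil in kspace]
    return kspace + virtual


# One coil, one row of three samples (origin at the middle sample).
coils = [[[1 + 2j, 3 - 1j, 0 + 1j]]]
channels = split_real_imag(add_virtual_conjugate_coils(coils))
print(len(channels))  # 4 real channels: 2 * (1 physical + 1 virtual coil)
```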

In our illustrative preliminary implementation without VCC, the first LORAKI convolution layer was designed to have 2*(number of coils) input channels, 32 output channels, and 3x3 convolution kernels; and the second convolution layer was designed to have 32 input channels, 2*(number of coils) output channels, and 3x3 convolution kernels. Using VCC doubles the number of input and output channels for both layers. Paired sets (undersampled and fully sampled) of autocalibration data were used to train the RNN, and the number of RNN iterations was set to 5.
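As a quick check on the network size implied by these choices, the channel counts and number of convolution weights can be tabulated. The helper names below are hypothetical, bias terms are ignored (the text does not say whether they are used), and the VCC sentence is read literally, so that all four channel counts double. If only the coil-side channels double, the hidden width would stay at 32.

```python
def loraki_layer_shapes(n_coils, use_vcc=False, hidden=32, kernel=(3, 3)):
    """Channel counts for the two convolution layers described in the text."""
    scale = 2 if use_vcc else 1          # literal reading: VCC doubles every count
    coil_side = 2 * n_coils * scale      # real + imaginary part per coil
    width = hidden * scale
    return [
        {"in": coil_side, "out": width, "kernel": kernel},
        {"in": width, "out": coil_side, "kernel": kernel},
    ]


def n_weights(layer):
    """Number of convolution weights in one layer (biases not counted)."""
    kh, kw = layer["kernel"]
    return kh * kw * layer["in"] * layer["out"]


for coils in (12, 8):  # the two datasets used in the evaluation
    shapes = loraki_layer_shapes(coils)
    print(coils, shapes, sum(n_weights(s) for s in shapes))
```

For the 12-channel dataset without VCC this gives 24 input channels and 3*3*24*32 = 6912 weights per layer.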

Performance was evaluated by retrospectively undersampling two fully sampled brain datasets: a 12-channel 256x187 T2-weighted dataset and a 32-channel 208x256 MPRAGE dataset (coil compressed to 8 channels).

Results

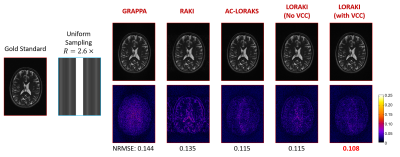

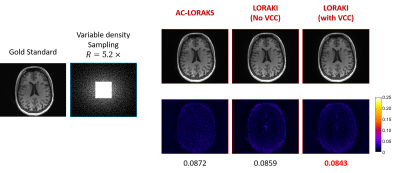

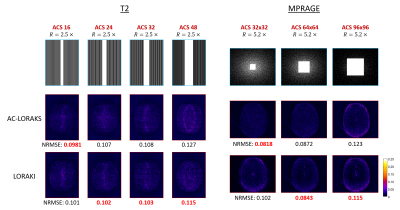

Figs. 2 and 3 compare the performance of different methods on the two datasets. Fig. 2 shows reconstruction of the T2-weighted data from 4× uniform undersampling with 32 ACS lines (effective acceleration factor R=2.6×), while Fig. 3 shows reconstruction of the MPRAGE data from variable density random sampling with 64×64 ACS data (effective acceleration factor R=5.2×). Results demonstrate that LORAKI can provide lower normalized root-mean-squared-error (NRMSE) than methods like GRAPPA, RAKI, and AC-LORAKS.
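The effective acceleration factor for the uniform case can be reproduced by counting sampled lines. The sketch below is an illustration under stated assumptions: the function name is hypothetical, undersampling is assumed to run along the 187-sample dimension of the T2-weighted dataset, the uniform pattern is assumed to start at line 0, and the ACS block is assumed to be centered.

```python
def effective_acceleration(n_lines, factor, n_acs):
    """Total lines divided by acquired lines for uniform sampling plus ACS."""
    sampled = set(range(0, n_lines, factor))          # every factor-th line
    start = (n_lines - n_acs) // 2                    # centered ACS block
    sampled.update(range(start, start + n_acs))
    return n_lines / len(sampled)


# 4x uniform undersampling of 187 lines with 32 ACS lines
print(round(effective_acceleration(187, 4, 32), 1))  # 2.6
```

Under these assumptions 71 of the 187 lines are acquired, which agrees with the reported R=2.6×. The nominal factor of 4 is reduced because the ACS lines are fully sampled.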

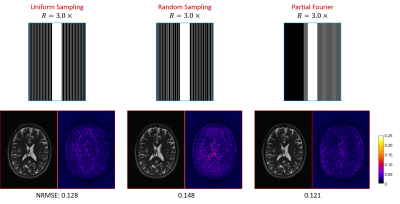

It should be noted that the nonuniform sampling pattern used in Fig. 3 is difficult to use with methods like GRAPPA and RAKI that typically assume uniform sampling, while LORAKS and LORAKI are both successful in this case. Fig. 4 further illustrates LORAKI's compatibility with a wide variety of sampling strategies (each case uses 32 ACS lines and effective acceleration factor R=3×).

We observed that our current LORAKI implementation generally needs more ACS data than methods like LORAKS, which is consistent with previous observations that RAKI needs more ACS data than GRAPPA [12]. An example is shown in Fig. 5. This is one of the main limitations of our current implementation, although it may potentially be mitigated by using different RNN parameters.

Conclusion

LORAKI is a novel RNN-based deep learning k-space reconstruction method that is inspired by the scan-specific autocalibrated CNN characteristics of RAKI, together with the structure of Landweber iteration for data-consistent AC-LORAKS. Experimental results suggest that LORAKI can have advantages over previous methods like RAKI and AC-LORAKS, and possesses good reconstruction performance and good compatibility with a wide range of sampling patterns.

Acknowledgements

This work was supported in part by a USC Annenberg fellowship, a Kwanjeong educational foundation scholarship, the IUSSTF-Viterbi Program, and research grants NSF CCF-1350563, NIH R21-EB022951, NIH R01-MH116173, and NIH R01-NS074980.

References

1. Griswold MA, Jakob PM, Heidemann RM, et al. Generalized autocalibrating partially parallel acquisitions (GRAPPA). Magn Reson Med. 2002;47:1202–1210.

2. Lustig M, Pauly JM. SPIRiT: iterative self‐consistent parallel imaging reconstruction from arbitrary k‐space. Magn Reson Med. 2010;64:457–471.

3. Liang ZP, Haacke EM, Thomas CW. High‐resolution inversion of finite Fourier transform data through a localised polynomial approximation. Inverse Prob. 1989;5:831–847.

4. Zhang J, Liu C, Moseley ME. Parallel reconstruction using null operations. Magn Reson Med. 2011;66:1241–1253.

5. Haldar JP. Low-rank modeling of local k-space neighborhoods (LORAKS) for constrained MRI. IEEE Trans Med Imag. 2014;33:668–681.

6. Haldar JP, Zhuo J. P‐LORAKS: Low‐rank modeling of local k-space neighborhoods with parallel imaging data. Magn Reson Med. 2016;75:1499–1514.

7. Haldar JP. Autocalibrated LORAKS for Fast Constrained MRI Reconstruction. IEEE International Symposium on Biomedical Imaging: From Nano to Macro, New York City, 2015, pp. 910–913.

8. Haldar JP, Kim TH. Computational imaging with LORAKS: reconstructing linearly predictable signals using low‐rank matrix regularization. Proc Asilomar Conf Sig Sys Comp. 2017:1870–1874.

9. Jin KH, Lee D, Ye JC. A general framework for compressed sensing and parallel MRI using annihilating filter‐based low‐rank hankel matrix. IEEE Trans Comput Imaging. 2016;2:480–495.

10. Ongie G, Jacob M. Off‐the‐grid recovery of piecewise constant images from few Fourier samples. SIAM J Imag Sci. 2016;9:1004–1041.

11. Chang Y, Liang D, Ying L. Nonlinear GRAPPA: A kernel approach to parallel MRI reconstruction. Magn Reson Med. 2012;68:730–740.

12. Akçakaya M, Moeller S, Weingärtner S, Uğurbil K. Scan‐specific robust artificial‐neural‐networks for k‐space interpolation (RAKI) reconstruction: Database‐free deep learning for fast imaging. Magn Reson Med. 2018; (early view) doi:10.1002/mrm.27420.

13. Han Y, Ye JC. k-Space Deep Learning for Accelerated MRI. Preprint, 2018, arXiv:1805.03779.

14. Cheng JY, Mardani M, Alley MT, Pauly JM, Vasanawala SS. DeepSPIRiT: Generalized Parallel Imaging using Deep Convolutional Neural Networks. In Proceedings of the Joint Annual Meeting ISMRM-ESMRMB. Paris, France; 2018, p. 570.

15. Vogel CR. Computational methods for inverse problems. Philadelphia, PA: SIAM; 2002.

16. Blaimer M, Gutberlet M, Kellman P, Breuer FA, Köstler H, Griswold MA. Virtual coil concept for improved parallel MRI employing conjugate symmetric signals. Magn Reson Med. 2009;61:93–102.

17. Kim TH, Bilgic B, Polak D, Setsompop K, Haldar JP. Wave-LORAKS: Combining wave encoding with structured low-rank matrix modeling for more highly accelerated 3D imaging. Magn Reson Med. 2018; (early view) doi:10.1002/mrm.27511.

Figures