0670

A generalized deep learning framework for multi-landmark intelligent slice placement using standard tri-planar 2D localizers

1 GE Global Research, Bangalore, India; 2 GE Global Research, Niskayuna, NY, United States; 3 GE Healthcare, Rio de Janeiro, Brazil; 4 GE Healthcare, Rochester, MN, United States; 5 GE Healthcare, Waukesha, WI, United States

Synopsis

We demonstrate a deep learning-based MRI scan workflow for intelligent slice placement (ISP) of multiple brain landmarks (MSP, AC-PC, entire visual pathway, pituitary, IAC, hippocampus, TOF-Angiography) using standard 2D tri-planar localizer images. Unlike prior approaches to automatic plane prescription, this method uses deep learning to determine all necessary planes without explicit delineation of landmark structures, and it provides visual feedback to the user. For all landmarks, the proposed method achieves slice placement with a mean distance error < 1 mm (N = 505) on the localizer images themselves, comparable to or better than slice placement obtained using higher-resolution images. These results indicate excellent feasibility of the method for clinical use.

Introduction:

MRI is inherently a multi-planar imaging modality. An automatic workflow for scan plane prescription of different landmarks and anatomies is desirable in clinical settings to reduce MRI exam time and improve image consistency, especially in longitudinal studies. Ideally, the plane prescription should be achieved with minimal disruption to existing clinical workflow, without the need for specialized 3D localizers or additional imaging data.

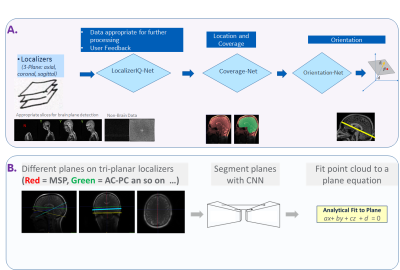

In this work, we demonstrate a generalized, deep learning (DL)-based framework for intelligent MRI slice placement (ISP) for multiple brain landmarks (mid-sagittal plane, anterior commissure-posterior commissure, entire visual pathway: orbits, optic nerve entire stretch, optic nerve post apex, left and right sagittal views of orbit and optic nerve entire, pituitary, IAC, hippocampus, TOF-Angiography) using standard tri-planar (i.e., axial, sagittal and coronal in scanner coordinates) localizer images (Fig. 1A). A localizer quality network (LocalizerIQ-Net, Fig. 1A) ensures computational efficiency by identifying the relevant brain data before subsequent processing. Moreover, unlike previous approaches that focused on landmark segmentation [1,2] or direct plane estimation [3] on higher-resolution images, our method uses convolutional neural networks (CNNs) to segment the planes directly on the localizers (Fig. 1B).

Methods:

The ISP workflow consists of three DL network components (Fig. 1A):

1) LocalizerIQ-Net: identifies relevant brain data in the tri-planar localizer images, guides subsequent processing and provides feedback if the localizer is not suitable for ISP.
2) Coverage-Net: identifies the anatomical coverage area.
3) Orientation-Net: identifies the scan plane orientation and location for a given landmark.
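The gating role of LocalizerIQ-Net in this three-stage workflow can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the three networks are replaced by caller-supplied functions, and the names `run_isp`, `classify_slice`, `coverage_net`, `orientation_net` and the `min_brain_slices` threshold are hypothetical. It only illustrates the control flow: slices labeled as relevant brain data are passed downstream, and an unsuitable localizer produces user feedback instead of a plane.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Sequence

# Hypothetical label names mirroring the three LocalizerIQ-Net classes:
# relevant brain slices, slices with artifacts, and irrelevant slices.
BRAIN, ARTIFACT, IRRELEVANT = "brain", "artifact", "irrelevant"


@dataclass
class ISPResult:
    ok: bool
    feedback: str = ""
    coverage: Optional[object] = None
    plane: Optional[object] = None


def run_isp(
    localizers: Dict[str, Sequence[object]],  # e.g. {"axial": [...], "sagittal": [...], "coronal": [...]}
    classify_slice: Callable[[object], str],  # hypothetical stand-in for LocalizerIQ-Net
    coverage_net: Callable[[Dict[str, List[object]]], object],  # stand-in for Coverage-Net
    orientation_net: Callable[[Dict[str, List[object]], object], object],  # stand-in for Orientation-Net
    min_brain_slices: int = 1,  # hypothetical acceptance threshold per view
) -> ISPResult:
    """Gate each localizer view with a slice classifier, then run coverage and
    orientation estimation only on the slices labeled as relevant brain data."""
    accepted: Dict[str, List[object]] = {}
    for view, slices in localizers.items():
        labels = [classify_slice(s) for s in slices]
        accepted[view] = [s for s, lab in zip(slices, labels) if lab == BRAIN]
        if len(accepted[view]) < min_brain_slices:
            # Report why the localizer was rejected instead of failing silently.
            n_artifact = labels.count(ARTIFACT)
            return ISPResult(
                ok=False,
                feedback=f"{view} localizer unsuitable for ISP "
                f"({n_artifact} slice(s) with artifacts)",
            )
    coverage = coverage_net(accepted)
    plane = orientation_net(accepted, coverage)
    return ISPResult(ok=True, coverage=coverage, plane=plane)
```

Passing the networks in as plain callables keeps the control flow testable without model weights; in a real system each callable would wrap a trained model.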

Subjects: MRI data for the study came from two cohorts. Cohort A: 856 clinically negative subjects (11-50 years). Cohort B: 450 clinical datasets (positive and negative) obtained from multiple sites. Cohort A was used for DL training and quantitative evaluation of the entire ISP workflow. Cohort B was used primarily for LocalizerIQ-Net and for qualitative evaluation of the ISP workflow on pathological cases (N = 8). All studies were approved by the institutional review board (IRB).

MRI Acquisition: Cohort A: data were acquired on a GE 3T Discovery MR750 scanner using a dedicated head coil. Tri-planar localizers: 2D SSFSE, TE/TR = 36 ms/820-830 ms, in-plane resolution = 0.58 mm × 0.58 mm, slice thickness = 10 mm, matrix = 512 × 512, 7-12 slices. Cohort B: data were acquired on multiple scanners (GE 3T Discovery MR750w, GE Signa HDxt 1.5T, GE 1.5T Optima 450w) with different coil configurations (e.g., 8-channel and 24-channel head coils, head-neck-spine array). Data in this cohort varied in contrast, image resolution and matrix size across subjects.
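As a quick consistency check on the Cohort A localizer parameters (assuming the 512 × 512 matrix and the 0.58 mm pixel size describe the same reconstructed image), the in-plane FOV and the size of the 1 mm distance tolerance relative to the localizer sampling work out as follows. The tolerance is under two in-plane pixels and an order of magnitude finer than the through-plane sampling.

```latex
\begin{aligned}
\mathrm{FOV} &= 512 \times 0.58\ \mathrm{mm} \approx 297\ \mathrm{mm},\\
\frac{1\ \mathrm{mm}}{0.58\ \mathrm{mm/pixel}} &\approx 1.7\ \text{pixels (in-plane)},\\
\frac{1\ \mathrm{mm}}{10\ \mathrm{mm}} &= 0.1\ \text{of the slice thickness (through-plane)}.
\end{aligned}
```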

The entire ISP workflow was implemented on clinical scanners (1.5T and 3T GE Signa MRI scanners).

LocalizerIQ-Net labels: LocalizerIQ-Net was trained to identify relevant brain slices, images with artifacts and irrelevant slices. A total of 29,000 images (including augmented images) were used for training/validation, and testing was performed on 700 images.

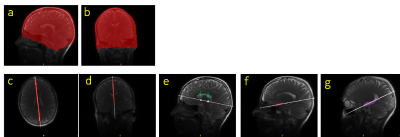

Data for Coverage-Net and Orientation-Net: Ground-truth (GT) labels are shown in Fig. 2 and were verified by a trained radiologist. A total of 21,770 volumes (from 758 cases, after augmentation) were used for training/validation, and 509 volumes were used for testing.

DL Methodology: Established CNN classification and segmentation architectures (U-Net [5], V-Net [6], ResNet [7]; Keras [4]) were adapted for ISP.
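The abstract does not state how a plane segmented on a 2D localizer (Fig. 1B) is converted into a line for prescription. One plausible post-processing step, shown here purely as an assumption, is a principal-component (total least squares) line fit to the foreground pixels of the predicted mask. The function name `fit_line_to_mask` and its `pixel_mm` parameter are hypothetical; the 0.58 mm default only mirrors the Cohort A localizer resolution.

```python
import numpy as np


def fit_line_to_mask(mask: np.ndarray, pixel_mm: float = 0.58):
    """Fit a 2D line to the foreground pixels of a binary plane mask.

    Hypothetical post-processing step (not described in the abstract).
    Returns (centroid_mm, direction); the line is centroid + t * direction,
    with coordinates ordered (x, y) = (column, row) and scaled to mm.
    """
    rows, cols = np.nonzero(mask)
    if rows.size < 2:
        raise ValueError("mask needs at least two foreground pixels")
    pts = np.column_stack([cols, rows]).astype(float) * pixel_mm
    centroid = pts.mean(axis=0)
    # PCA via SVD: the first right-singular vector of the centred points is
    # the direction of largest spread, i.e. the total-least-squares line.
    _, _, vt = np.linalg.svd(pts - centroid, full_matrices=False)
    direction = vt[0] / np.linalg.norm(vt[0])
    # Singular vectors are defined only up to sign; make the dominant
    # component positive so the output is deterministic.
    if direction[np.argmax(np.abs(direction))] < 0:
        direction = -direction
    return centroid, direction
```

A perpendicular-distance (PCA) fit is used rather than ordinary y-on-x regression so that near-vertical lines, such as an MSP trace on an axial localizer, are handled without special cases.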

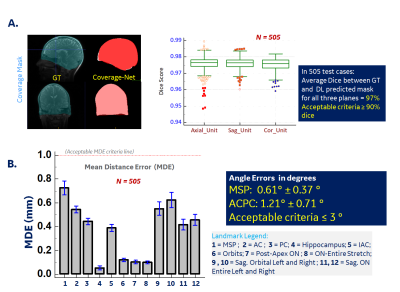

Accuracy Assessment: Accuracy was measured as label classification accuracy for LocalizerIQ-Net and as the Dice overlap ratio for Coverage-Net. For Orientation-Net, accuracy was assessed by calculating the mean absolute distance error (MDE) between GT and DL-predicted planes for all landmarks. Additionally, angle errors between GT and DL-predicted planes were calculated for MSP (since errors in the MSP propagate to other planes) and ACPC (for comparison with prior art). An MDE < 1 mm and an angle error < 3° were considered acceptable for ISP [1].
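The accuracy metrics above can be sketched in NumPy as follows. Dice and the angle between plane normals follow their standard definitions; the abstract does not say how the MDE is sampled, so `mean_abs_distance` assumes it is the mean absolute distance from points sampled on the predicted plane to the GT plane. All function names, including the `is_acceptable` helper that encodes the MDE < 1 mm and angle < 3° criteria, are hypothetical.

```python
import numpy as np


def dice(a: np.ndarray, b: np.ndarray) -> float:
    """Dice overlap ratio 2|A ∩ B| / (|A| + |B|) for binary masks."""
    a, b = a.astype(bool), b.astype(bool)
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return float(2.0 * np.logical_and(a, b).sum() / denom)


def plane_angle_deg(n_gt, n_pred) -> float:
    """Angle between two planes from their normals, in [0, 90] degrees.

    abs() is used because a plane normal is defined only up to sign."""
    n1, n2 = np.asarray(n_gt, float), np.asarray(n_pred, float)
    cos = abs(n1 @ n2) / (np.linalg.norm(n1) * np.linalg.norm(n2))
    return float(np.degrees(np.arccos(np.clip(cos, 0.0, 1.0))))


def mean_abs_distance(points_pred, p_gt, n_gt) -> float:
    """ASSUMED MDE definition: mean absolute distance (mm) from points sampled
    on the predicted plane to the GT plane through p_gt with normal n_gt."""
    n = np.asarray(n_gt, float)
    n = n / np.linalg.norm(n)
    d = (np.asarray(points_pred, float) - np.asarray(p_gt, float)) @ n
    return float(np.mean(np.abs(d)))


def is_acceptable(mde_mm: float, angle_deg: float) -> bool:
    """Acceptance criteria quoted in the abstract: MDE < 1 mm, angle < 3 degrees."""
    return mde_mm < 1.0 and angle_deg < 3.0
```

Under this assumed MDE definition, the distance from a tilted plane grows with distance from its intersection with the GT plane, so the MDE depends on the sampled region; this is one reason to report angle and distance errors separately.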

Results and Discussion:

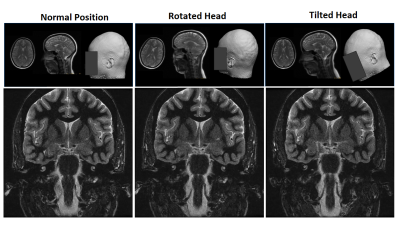

For LocalizerIQ-Net, classification accuracy was 99.2%. ISP provides accurate slice placement for various landmark planes on localizer images (Fig. 3). Since plane segmentation is guided by a richer set of DL features from the entire imaging FOV, accurate slice placement is obtained even when the structure is affected by pathology. Note the accurate MSP placement (nose to sinus) even with a significant midline shift (Fig. 3B). Overall, Coverage-Net and Orientation-Net accuracies are within the acceptance criteria (Fig. 4). Moreover, they are comparable to or better than prior-art plane estimation methods that use higher-resolution images (Fig. 4). Fig. 5 demonstrates the utility of ISP in a scenario where the subject's head is randomly rotated; the acquired data remain consistent across different head poses.

Conclusion

We introduce a new DL-based intelligent slice placement framework based on standard tri-planar localizer images and demonstrate prescription for multiple brain landmarks. Results indicate very high slice placement accuracy on the localizer images themselves, similar to or better than results reported in prior art using higher-resolution data. We observed robust ISP performance even in the presence of pathology and variable head positions. The generalized ISP workflow can be extended to other anatomies as well.

Acknowledgements

None.

References

1. Liu Y, Dawant BM. IEEE J Biomed Health Inform. 2015;19(4):1362-1374.

2. Nowinski WL, et al. Acad Radiol. 2006 May;13(5):652-663.

3. Alansary A, et al. MICCAI 2018, pp. 277-285.

4. Keras. https://keras.io/

5. Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. Medical Image Computing and Computer-Assisted Intervention (MICCAI) 2015, Springer, LNCS vol. 9351, pp. 234-241.

6. Milletari F, Navab N, Ahmadi SA. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation. 3DV 2016, pp. 565-571. doi:10.1109/3DV.2016.79.

7. He K, et al. Deep Residual Learning for Image Recognition. arXiv:1512.03385.

Figures

Figure 4. Performance metrics for Coverage-Net (A) and Orientation-Net (B). For Orientation-Net, the mean angle errors for MSP (0.67°) and the ACPC plane (1.21°) are below the cut-off value (3°). For all landmarks, the mean MDE is < 1 mm (e.g., MSP = 0.72 mm, ACPC = 0.49 mm). These metrics are similar to or better than those reported in prior art on higher-resolution datasets, using either landmark features (MSP = 1.08°, ACPC = 0.55 mm as reported in [1], or MSP = 1°-2° as in [2]) or direct plane estimation (MSP = 1.53 mm, 2.44°; ACPC = 1.98 mm, 4.48° as reported in [3]).