0665

Joint multi-contrast Variational Network reconstruction (jVN) with application to Wave-CAIPI acquisition for rapid imaging

1Martinos Center for Biomedical Imaging, Charlestown, MA, United States, 2University of Heidelberg, Heidelberg, Germany, 3Siemens Healthcare GmbH, Erlangen, Germany, 4MIT, Cambridge, MA, United States, 5Harvard Medical School, Boston, MA, United States, 6German Cancer Research Center, Heidelberg, Germany, 7Harvard-MIT Health Sciences and Technology, Cambridge, MA, United States

Synopsis

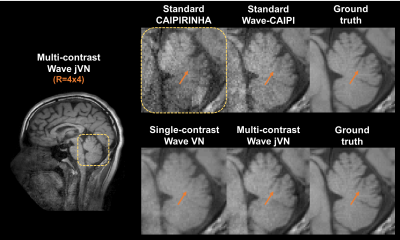

We introduce a joint Variational Network (jVN) to reconstruct multi-contrast data jointly from accelerated MRI acquisitions. By taking advantage of the shared structural information among different clinical contrasts, jVN better preserved small anatomical features when compared to standard single-contrast VN. Combining jVN with the efficient Wave-CAIPI acquisition scheme enabled rapid 3D volumetric scans at R=16x acceleration. This approach was evaluated at 3T using in-vivo data from three clinical contrasts, resulting in up to a 54% reduction in RMSE when compared to standard Wave-CAIPI reconstructions. The jVN reconstructions preserved both high spatial resolution and good image quality.

Introduction

Deep Learning (DL) has been used to improve the reconstruction quality of highly undersampled MRI data [1][2]. Recent publications have also demonstrated the benefit of jointly reconstructing data from multiple contrasts using DL [3][4] to take advantage of the shared structural information. In this contribution, we augment the Variational Network architecture [1] to jointly reconstruct multi-contrast data (jVN) and demonstrate the significant improvement of our approach over standard VN reconstruction, particularly in preserving subtle anatomical features. Moreover, we adapted the proposed jVN reconstruction to enable reconstruction of 3D Wave-CAIPI data with only a small memory footprint. High-quality results were demonstrated at 16x acceleration for clinical 3D volumetric brain scans.

Methods

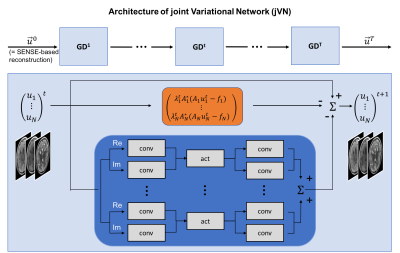

Our proposed joint Variational Network (jVN) is based on the network architecture from [1] (Fig. 1), where the input image is now composed of multiple imaging contrasts stacked along the channel dimension of the network. The CNN layers (shown in blue) mix the different input contrasts, which allows shared image information to be exchanged between the different structural scans. The data fidelity term of the network (orange) helps to preserve each imaging contrast by promoting agreement with the acquired k-space data individually for each scan.
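The interplay of the two terms can be sketched in a few lines of NumPy. This is a minimal illustration, not the network used in this work: it assumes single-coil Cartesian sampling, and it replaces the learned convolution filters and activations with a hypothetical 1x1 cross-contrast mixing matrix `mix` and a fixed `tanh`. The update has the general form of a variational network gradient step as described in [1], with a regulariser that mixes contrasts and a data term that treats each contrast separately.

```python
import numpy as np

def jvn_step(u, kspace, mask, mix, lam):
    """One illustrative gradient step on a stack of contrasts.

    u      : (C, H, W) complex images, one channel per contrast
    kspace : (C, H, W) complex acquired k-space (zero where not sampled)
    mask   : (C, H, W) boolean sampling mask, may differ per contrast
    mix    : (F, C) real matrix, a 1x1 stand-in for learned filters (assumption)
    lam    : scalar weight of the data fidelity term
    """
    def regulariser(x):
        # K u: every feature map sees all contrasts (cross-contrast mixing).
        feat = np.einsum("fc,chw->fhw", mix, x)
        # K^T phi'(K u), with tanh as a fixed stand-in activation.
        return np.einsum("fc,fhw->chw", mix, np.tanh(feat))

    # Real and imaginary parts are filtered separately.
    reg = regulariser(u.real) + 1j * regulariser(u.imag)

    # Data fidelity A^H (A u - f), evaluated independently per contrast.
    resid = mask * (np.fft.fft2(u, norm="ortho") - kspace)
    data = np.fft.ifft2(resid, norm="ortho")

    return u - reg - lam * data
```

With `mix` set to zero and `lam=1`, the step simply restores the acquired k-space samples of each contrast; any non-zero `mix` lets a change in one contrast influence the update of the others.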

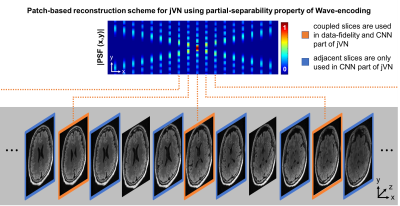

We have also applied jVN to 3D imaging using the efficient Wave-CAIPI sampling scheme. The corkscrew k-space trajectory of Wave-CAIPI spreads the aliasing in all three spatial dimensions to improve the conditioning of the reconstruction, at the cost of increased spatial coupling in the reconstruction (i.e., slice-by-slice reconstruction is not possible). Reconstructing the entire 3D Wave-CAIPI dataset at once using jVN is infeasible on standard GPUs due to memory limitations. To ameliorate this problem, we developed a patch-based reconstruction scheme that takes advantage of the partial-separability property of Wave-encoding (Fig. 2).
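The separability property can be illustrated with a toy model. The sketch below assumes the usual description of Wave encoding as a unit-modulus point-spread function applied along the readout in hybrid (kx, y, z) space; the PSF shape and the `amp` value are made up for illustration and are not derived from the gradient settings used here. It does not reproduce the patch scheme of Fig. 2; it only shows that encoding a subset of (y, z) columns gives the same result as encoding the full volume and extracting that subset, which is what allows the data term to be evaluated on small groups of columns.

```python
import numpy as np

def toy_wave_psf(nx, ny, nz, cycles=4, amp=0.05):
    """Toy unit-modulus Wave PSF in hybrid (kx, y, z) space (illustrative only)."""
    kx = np.arange(nx) / nx
    y = np.arange(ny) - ny // 2
    z = np.arange(nz) - nz // 2
    py = amp * np.sin(2 * np.pi * cycles * kx)  # toy offset tied to y
    pz = amp * np.cos(2 * np.pi * cycles * kx)  # toy offset tied to z
    phase = py[:, None, None] * y[None, :, None] + pz[:, None, None] * z[None, None, :]
    return np.exp(-2j * np.pi * phase)

def wave_encode(img, psf):
    """Apply the PSF after a readout-only FFT; y and z are never mixed."""
    return psf * np.fft.fft(img, axis=0)

def wave_decode(hyb, psf):
    """Undo the encoding; valid because the PSF has unit modulus."""
    return np.fft.ifft(np.conj(psf) * hyb, axis=0)

def encode_patch(img, psf, ys, zs):
    """Encode only the (y, z) columns selected by the slices ys and zs."""
    return wave_encode(img[:, ys, zs], psf[:, ys, zs])
```

For example, `encode_patch(img, psf, slice(1, None, ny // 4), slice(0, None, nz // 4))` touches only the columns spaced a quarter of the field of view apart, while still matching the corresponding entries of `wave_encode(img, psf)`.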

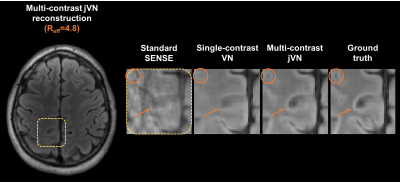

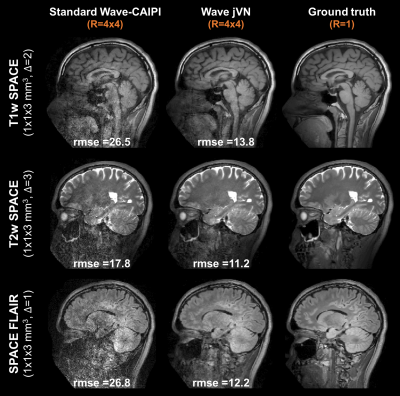

We first compared jVN with standard VN for 2D imaging with 1D acceleration at Reff=4.8 (1x1x4 mm3 resolution). The results of this preliminary evaluation are shown in Fig. 3. Next, jVN reconstruction of Wave-CAIPI datasets was evaluated using data from 10 healthy subjects. Standard T1w-SPACE, T2w-SPACE and FLAIR-SPACE scans were acquired at 3T (MAGNETOM Prisma/Skyra, Siemens Healthcare, Erlangen, Germany) without undersampling. For each subject, the datasets were first registered channel-by-channel using FSL FLIRT [7]. Wave-CAIPI encoding was then synthesized by convolving the datasets with a Wave point-spread function corresponding to 4 sinusoidal cycles (16 mT/m gradient amplitude and 592 Hz/px bandwidth), a setting that has been shown to work robustly for in-vivo acquisitions. All datasets were then retrospectively undersampled at R=4x4 acceleration with varying CAIPIRINHA shifts (1, 2 or 3) to achieve complementary k-space sampling. The input images u0 were generated by standard parallel imaging reconstruction without machine learning. The Wave jVN network (T=5, 48 filters per GD step with filter size 5x5x5) was trained on 576 partitions from 9 subjects, and the dataset from the remaining subject was used for validation.
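As a rough illustration of the retrospective undersampling, the following sketch builds a 2D CAIPIRINHA mask in the (ky, kz) plane for R=4x4 and a given shift. The function name and the exact shift convention (kz offset advancing by `shift` for each successive sampled ky line) are assumptions; the abstract does not specify them.

```python
import numpy as np

def caipi_mask(ny, nz, ry=4, rz=4, shift=1):
    """Boolean (ky, kz) mask: every ry-th ky line, every rz-th kz sample,
    with the kz offset advancing by `shift` per sampled ky line (assumed)."""
    ky = np.arange(ny)[:, None]
    kz = np.arange(nz)[None, :]
    return (ky % ry == 0) & ((kz - shift * (ky // ry)) % rz == 0)

# One mask per contrast, each with a different shift, so that the
# contrasts sample partly different k-space locations.
masks = {s: caipi_mask(32, 32, shift=s) for s in (1, 2, 3)}
```

Each mask keeps 1 in 16 phase-encoding positions, consistent with the total acceleration R = 4 x 4 = 16, while different shifts place the samples at different kz offsets.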

Results

Figure 3 shows the performance of jVN in comparison to standard VN for 1D acceleration at Reff=4.8. Both ML approaches produced reasonable image quality at this high acceleration; nevertheless, jVN better preserved the spatial resolution. This improvement is exemplified at the gray-matter/white-matter boundary indicated by the orange circle. Similarly, small anatomical features such as the CSF region in the upper left corner remained visible in jVN but were mostly smoothed out in single-contrast VN.

Figures 4 and 5 show the results from standard parallel imaging and jVN reconstructions of Wave-CAIPI data at R=4x4. At this high acceleration, the parallel imaging reconstruction of the Wave-CAIPI data starts to break down, with significant sqrt(R) and g-factor noise penalties and artifacts. This can best be seen in the central brain region of the SPACE FLAIR acquisition, where the sensitivity encoding ability of the 32ch head coil is limited. In contrast, the jVN is able to mitigate most of this noise enhancement while providing an accurate representation of the underlying anatomy, with an RMSE reduction of up to 54%.
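For reference, the two quantities mentioned above can be computed as follows. This is a sketch under assumptions: the abstract does not state how RMSE was normalised, so a reference-normalised RMSE is assumed here, and the function names are illustrative. The noise penalty uses the standard parallel imaging relation in which SNR drops by a factor of g times sqrt(R) relative to the fully sampled scan.

```python
import numpy as np

def nrmse(recon, ref):
    """Root-mean-square error normalised by the reference (assumed definition)."""
    diff = np.ravel(recon) - np.ravel(ref)
    return np.linalg.norm(diff) / np.linalg.norm(np.ravel(ref))

def percent_reduction(rmse_baseline, rmse_new):
    """Relative RMSE reduction of a new reconstruction over a baseline, in percent."""
    return 100.0 * (1.0 - rmse_new / rmse_baseline)

def noise_penalty(r_total, g_factor=1.0):
    """SNR loss factor g * sqrt(R) relative to a fully sampled acquisition."""
    return g_factor * np.sqrt(r_total)
```

At R=16 the sqrt(R) term alone corresponds to a fourfold SNR loss before any g-factor contribution, which is why the central brain region, where the g-factor is highest, degrades first.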

Discussion

We introduced a jVN to jointly reconstruct multiple clinical imaging sequences and demonstrated significant improvement over VN reconstruction. We demonstrated the ability to better preserve subtle imaging features at high accelerations. The jVN reconstruction was also optimized for Wave-CAIPI 3D acquisitions, to achieve reconstruction with a small enough memory footprint to be suitable for GPU implementation. Using this approach, high quality reconstruction of 16-fold accelerated clinical 3D brain sequences with Wave-CAIPI encoding was achieved with up to 54% reduction in RMSE when compared to standard parallel imaging reconstruction.

Acknowledgements

This work was supported in part by NIH research grants: R01MH116173, R01EB020613, R01EB019437, U01EB025162, P41EB015896, and the shared instrumentation grants: S10RR023401, S10RR019307, S10RR019254, S10RR023043.

References

[1] K. Hammernik, T. Klatzer, E. Kobler, M. P. Recht, D. K. Sodickson, T. Pock, and F. Knoll, “Learning a variational network for reconstruction of accelerated MRI data,” Magn. Reson. Med., vol. 79, no. 6, pp. 3055–3071, 2018.

[2] J. Y. Cheng, F. Chen, M. T. Alley, J. M. Pauly, and S. S. Vasanawala, “Highly Scalable Image Reconstruction using Deep Neural Networks with Bandpass Filtering,” arXiv:1805.03300 [cs.CV], 2018.

[3] E. Gong, G. Zaharchuk, and J. Pauly, “Improving the PI+CS Reconstruction for Highly Undersampled Multi-contrast MRI using Local Deep Network,” Proc Intl Soc Mag Reson Med, vol. 25, p. 5663, 2017.

[4] S. U. H. Dar, M. Yurt, M. Shahdloo, M. E. Ildiz, and T. Çukur, “Synergistic Reconstruction and Synthesis via Generative Adversarial Networks for Accelerated Multi-Contrast MRI,” CoRR, vol. abs/1805.1, 2018.

[5] B. Bilgic, T. H. Kim, C. Liao, M. K. Manhard, L. L. Wald, J. P. Haldar, and K. Setsompop, “Improving parallel imaging by jointly reconstructing multi-contrast data,” Magn. Reson. Med., 2018.

[6] B. Bilgic, B. A. Gagoski, S. F. Cauley, A. P. Fan, J. R. Polimeni, P. E. Grant, L. L. Wald, and K. Setsompop, “Wave-CAIPI for highly accelerated 3D imaging,” Magn. Reson. Med., vol. 73, no. 6, pp. 2152–2162, 2015.

[7] M. Jenkinson and S. Smith, “A global optimisation method for robust affine registration of brain images,” Med. Image Anal., 2001.

Figures