5198

Fully-Automated Segmentation of Knee Joint Anatomy using Deep Convolutional Neural Network Approach

¹Department of Radiology, University of Wisconsin-Madison, Madison, WI, United States; ²Department of Biomedical Engineering, University of Minnesota, Minneapolis, MN, United States

Synopsis

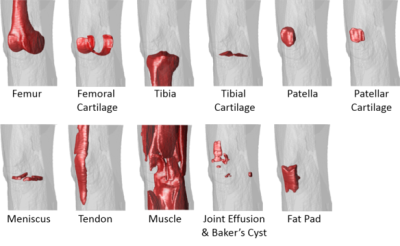

A new fully-automated approach was proposed that uses a deep convolutional encoder-decoder (CED) network combined with a 3D fully-connected conditional random field (CRF) and 3D simplex deformable modeling to perform efficient and accurate multi-class musculoskeletal tissue segmentation of MR images. The deep learning-based segmentation method could be used to create 3D rendered models of all knee joint structures, including cartilage, bone, tendon, meniscus, muscle, infrapatellar fat pad, and joint effusion and Baker’s cyst, structures that may be sources of pain in patients with knee osteoarthritis. The results of our study serve as a first step toward providing quantitative MR measures of musculoskeletal tissue degeneration in a highly time-efficient manner that would be practical for large population-based osteoarthritis research studies.

Introduction

Osteoarthritis (OA) is one of the most prevalent chronic joint diseases (1, 2) and is now considered a “whole-organ” disease (3). Segmentation is the crucial first step in the processing pipeline for acquiring quantitative measures of joint degeneration from MR images. While previous work on fully-automated segmentation has focused primarily on bone and cartilage, an approach capable of segmenting the whole knee joint is lacking. In this study, we propose a fully-automated method combining a deep convolutional neural network (CNN), a 3D fully-connected conditional random field (CRF), and 3D simplex deformable modeling to perform comprehensive tissue segmentation of the knee joint.

Methods

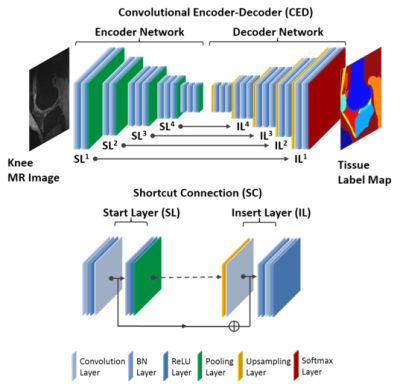

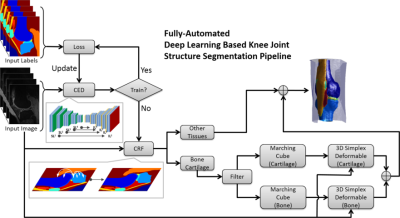

The key component is a deep convolutional encoder-decoder (CED) network, adapted from the network structure used by Liu et al. for segmenting cartilage and bone in musculoskeletal MR images (4). An illustration of the CED network is shown in Figure 1. The network is composed of an encoder network and a decoder network. The encoder network is essentially the same as the 13 convolutional layers of the VGG16 network (5). The decoder network mirrors the encoder and consists of up-sampling layers that recover high-resolution pixel-wise labels. The final layer of the decoder network is a multi-class soft-max classifier that produces voxel-wise class probabilities. In addition, a symmetric shortcut connection (SC) between the encoder and decoder networks is added to improve labeling performance (6).

To optimize the label assignment for voxels with similar image intensity values and to take into account the 3D contextual relationships among voxels, a 3D fully-connected conditional random field (CRF) is applied to fine-tune the segmentation results from the CED network (7). Finally, 3D deformable modeling is applied to refine cartilage and bone so that they maintain smooth and well-defined boundaries. The full segmentation algorithm is summarized in Figure 2.

The segmentation method was evaluated on a clinical knee image dataset (20 sagittal frequency-selective fat-suppressed 3D fast spin-echo (3D-FSE) images, leave-one-out cross-validation) for segmenting 13 classes (12 different knee joint structures and one background class). To evaluate the accuracy of tissue segmentation, Dice’s coefficient was computed for each individual joint structure to compare the segmentation result against manual labeling.

Results
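As a minimal sketch of the evaluation metric described in the Methods, Dice’s coefficient for a class c is 2|A ∩ B| / (|A| + |B|), where A and B are the sets of voxels assigned to c by the automated segmentation and by manual labeling, respectively. The Python below assumes both label maps have been flattened into equal-length sequences of integer class labels in the same voxel order; the function name `dice_per_class` and the choice to return NaN for a class absent from both maps are illustrative assumptions, not details of the original study.

```python
import math
from collections import Counter


def dice_per_class(pred, ref, labels):
    """Dice's coefficient 2|A ∩ B| / (|A| + |B|) for each class label.

    pred: automated label per voxel; ref: manual label per voxel
    (flattened, equal length, same voxel order).
    labels: class labels to score, e.g. the 12 joint structures
    with background excluded. A class absent from both maps gets NaN
    (an assumed convention; other conventions exist).
    """
    if len(pred) != len(ref):
        raise ValueError("pred and ref must cover the same voxels")
    pred_count = Counter(pred)  # |A| for every label
    ref_count = Counter(ref)    # |B| for every label
    # |A ∩ B| per label: voxels where both maps assign that same label.
    overlap = Counter(p for p, r in zip(pred, ref) if p == r)
    scores = {}
    for c in labels:
        denom = pred_count[c] + ref_count[c]
        scores[c] = 2 * overlap[c] / denom if denom else math.nan
    return scores


# Toy 8-voxel example with classes 0 (background), 1, and 2.
pred = [0, 1, 1, 2, 2, 2, 0, 1]
ref = [0, 1, 2, 2, 2, 0, 0, 1]
print(dice_per_class(pred, ref, labels=[1, 2]))  # → {1: 0.8, 2: 0.6666666666666666}
```

Averaging such per-class scores across the 20 leave-one-out folds would give per-structure mean Dice’s coefficients of the kind reported in the Results.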

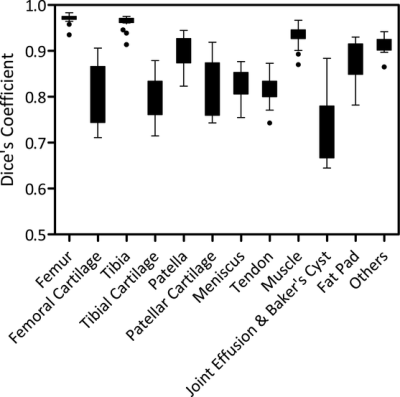

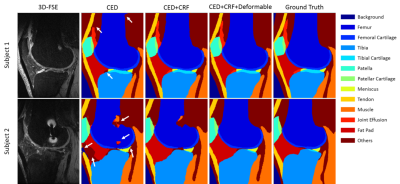
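Figure 3 summarizes the per-structure Dice’s coefficients with a Tukey box-and-whisker plot. The sketch below computes the statistics behind such a plot, assuming the common convention that whiskers extend to the most extreme values within 1.5 × IQR of the quartiles and that quartiles are linearly interpolated (Python’s `statistics.quantiles(..., method="inclusive")`). Plotting tools differ in these conventions, the function name `tukey_box_stats` is hypothetical, and the input values are made up for illustration rather than taken from the study.

```python
import statistics


def tukey_box_stats(values):
    """Summary statistics behind a Tukey box-and-whisker plot.

    Box: first quartile (q1), median, third quartile (q3).
    Whiskers: most extreme data values within 1.5 * IQR of the box.
    Outliers: values beyond the whiskers (plotted individually).
    The mean is included because mean Dice values are also reported.
    """
    data = sorted(values)
    if len(data) < 2:
        raise ValueError("need at least two values")
    # Linearly interpolated quartiles (same convention as NumPy's default).
    q1, median, q3 = statistics.quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence = q1 - 1.5 * iqr
    high_fence = q3 + 1.5 * iqr
    inside = [v for v in data if low_fence <= v <= high_fence]
    return {
        "mean": statistics.fmean(data),
        "q1": q1,
        "median": median,
        "q3": q3,
        "whisker_low": min(inside),
        "whisker_high": max(inside),
        "outliers": [v for v in data if v < low_fence or v > high_fence],
    }


# Illustrative, made-up Dice values for one structure (not study data).
summary = tukey_box_stats([0.81, 0.85, 0.86, 0.88, 0.60])
print(summary)
```

With these made-up values the quartiles are 0.81, 0.85, and 0.86, the lower fence is 0.735, and 0.60 is therefore reported as an outlier rather than being covered by the lower whisker.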

The total training time was approximately 1.5 hours for each fold of the leave-one-out cross-validation. In contrast, tissue segmentation was fast, with mean computing times per image volume of 0.2 min, 0.8 min, and 3 min for the CED network, the 3D fully-connected CRF, and the 3D deformable modeling, respectively (approximately 4 min in total). Figure 3 shows the Tukey box-and-whisker plot of Dice’s coefficient values for each individual segmented joint structure in the 20 subjects with knee OA. Four tissue types had high mean Dice’s coefficients above 0.9: the femur, tibia, muscle, and other non-specified tissues. Seven tissue types had mean Dice’s coefficients between 0.8 and 0.9: the femoral cartilage, tibial cartilage, patella, patellar cartilage, meniscus, quadriceps and patellar tendon, and infrapatellar fat pad. One tissue type, joint effusion and Baker’s cyst, had a mean Dice’s coefficient between 0.7 and 0.8.

Figure 4 shows examples of tissue segmentation performed on the 3D-FSE images of the knee joint in two subjects using the CED network only, the CED network combined with the 3D fully-connected CRF, and the CED network combined with both the CRF and 3D deformable modeling. Figure 5 shows examples of 3D rendered models of all joint structures in a 46-year-old female with mild knee OA, created from the segmented tissue masks. The 3D rendered models demonstrated good estimation of the complex anatomy for both regularly shaped structures such as bone, cartilage, meniscus, and tendon, and irregularly shaped structures such as joint effusion and Baker’s cyst.

Discussion

Our study demonstrated the feasibility of using a fully-automated deep learning-based approach for efficient and accurate segmentation of all joint structures in patients with knee OA. Our method incorporated a deep CED network combined with voxel-wise label refinement using a 3D fully-connected CRF and contour-based 3D deformable refinement using 3D simplex modeling. Our study showed that the deep learning-based approach could achieve high segmentation efficiency and accuracy not only for cartilage and bone (4) but also for other joint structures that may be sources of pain in patients with knee OA, including tendon, meniscus, muscle, infrapatellar fat pad, and joint effusion and Baker’s cyst. The results of our study serve as a first step toward providing quantitative MR measures of musculoskeletal tissue degeneration in a highly time-efficient manner that would be practical for large population-based OA research studies.

Acknowledgements

We acknowledge support from NIH R01-AR068373.

References

1. Oliveria SA, Felson DT, Reed JI, Cirillo PA, Walker AM: Incidence of symptomatic hand, hip, and knee osteoarthritis among patients in a health maintenance organization. Arthritis Rheum 1995; 38:1134–1141.

2. Felson DT, Zhang Y: An update on the epidemiology of knee and hip osteoarthritis with a view to prevention. Arthritis Rheum 1998; 41:1343–1355.

3. Peterfy C, Woodworth T, Altman R: Workshop for Consensus on Osteoarthritis Imaging: MRI of the knee. Osteoarthr Cartil 2006; 14:44–45.

4. Liu F, Zhou Z, Jang H, Samsonov A, Zhao G, Kijowski R: Deep Convolutional Neural Network and 3D Deformable Approach for Tissue Segmentation in Musculoskeletal Magnetic Resonance Imaging. Magn Reson Med 2017; DOI: 10.1002/mrm.26841.

5. Simonyan K, Zisserman A: Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv e-prints 2014.

6. He K, Zhang X, Ren S, Sun J: Identity Mappings in Deep Residual Networks. arXiv e-prints 2016.

7. He X, Zemel RS, Carreira-Perpiñán MA: Multiscale conditional random fields for image labeling. In: Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Vol. 2. IEEE; 2004.

Figures