4952

Eye motion artifacts in T2-weighted images, identified by deep neural networks, correlate with concussion

1Radiology, Medical College of Wisconsin, Milwaukee, WI, United States, 2Physiology, Medical College of Wisconsin, Milwaukee, WI, United States, 3Neurosurgery, Medical College of Wisconsin, Milwaukee, WI, United States

Synopsis

Oculomotor deficits occur with traumatic brain injury, and eye motion yields artifacts in the phase encoding directions of common MRI acquisitions. Here we quantify motion artifacts in regions of interest of T2-weighted MRI head images in concussed and healthy high school and collegiate athletes. Regions of interest over eyes, and inner ear structures as a control, were automatically generated using a convolutional neural network. Acute and sub-acute injury was found to yield significantly increased motion artifact compared to controls in ROIs covering eyes. No differences in motion artifacts covering inner ear structures were found. These results indicate that anatomical MRI following traumatic brain injury may offer increased diagnostic or prognostic information through artifact resulting from eye motion associated with injury.

Introduction

A number of studies have shown oculomotor deficits in patients with acute concussion [1-3]. In MRI, motion introduces artifacts in the phase encoding direction of standard acquisitions [4]. Motion of the eyes during anatomical acquisitions introduces such artifacts. We hypothesize that eye motion artifact in anatomical brain acquisitions is greater in patients in the acute phase of concussion recovery than in healthy subjects and that artifact returns to normal with recovery. While anatomical MRI is certainly not the preferred method for the assessment of oculomotor deficits, if eye motion information is available in the images, it can serve as an additional piece of information for diagnosis, prognosis, or research evaluation of patients imaged for mild traumatic brain injury.

Methods

Images were acquired as part of a study of sports-related concussion in high school and college athletes using a 32-channel head coil and a GE Healthcare Discovery MR750 3.0T MRI. All data analyzed in this work were acquired with a T2-weighted 3D Cube acquisition. The acquisition parameters were: 2500 ms TR, 64 ms TE, 140 echo train length, sagittal slab selection, frequency encoding in the S-I direction, 180 slices of 1 mm thickness, 256 acquisition matrix with 25.6 cm FOV and 90% phase encoding (R-L) FOV. Prospective motion correction, PROMO [5], was used to minimize artifact from bulk motion. Imaging was performed at four time points: less than 48 hours, 7 days, 14 days, and 45 days post-injury, and at matched time points in control subjects.

To ensure consistency of region of interest (ROI) placement, a convolutional neural network was developed to automatically locate the eyes in the acquired T2-weighted images. The neural network architecture is shown in Figure 1, and is loosely based upon the AlexNet architecture [6]. Modifications with respect to AlexNet include the expansion from 2D to 3D convolution, and changes in image size. All automatically generated ROIs were inspected to confirm correct placement.

Eye motion artifact was quantified through the image intensity variance in 31x31x31 mm^3 regions of interest centered around the center of mass of each eye in the T2-weighted images. Specifically, the motion variance metric was calculated as the difference between the variance in the frequency encoding direction, and the average variance in the two phase encoding directions of the 3D acquisition.
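The ROI extraction and variance metric described above can be sketched as follows. This is a minimal illustration in NumPy, not the authors' implementation. It assumes 1 mm isotropic voxels (so a 31 mm cube spans 31 voxels), and it interprets "variance in a direction" as the variance along each line of voxels parallel to that axis, averaged over the ROI; the abstract does not spell out either detail. The function names and the mapping of array axes to frequency and phase encoding are hypothetical and depend on how the volume is loaded. The sign of the metric follows the order in which the text lists the two terms.

```python
import numpy as np


def center_of_mass(weights_3d):
    """Intensity-weighted center of mass of a non-negative 3D array,
    rounded to the nearest voxel index."""
    coords = np.indices(weights_3d.shape).reshape(3, -1)
    weights = weights_3d.reshape(-1).astype(float)
    return np.rint(coords @ weights / weights.sum()).astype(int)


def extract_roi(volume, center, size=31):
    """Cut a size^3 voxel cube centered on `center` (assumes 1 mm voxels)."""
    half = size // 2
    roi = volume[tuple(slice(c - half, c + half + 1) for c in center)]
    if roi.shape != (size, size, size):
        raise ValueError("ROI extends beyond the image volume")
    return roi


def directional_variance(roi, axis):
    """Variance along each line parallel to `axis`, averaged over the ROI
    (one possible reading of 'variance in a direction')."""
    return roi.var(axis=axis).mean()


def motion_variance_metric(roi, freq_axis, phase_axes):
    """Frequency-direction variance minus the mean of the two
    phase-direction variances, as stated in the text."""
    var_freq = directional_variance(roi, freq_axis)
    var_phase = np.mean([directional_variance(roi, a) for a in phase_axes])
    return var_freq - var_phase


# Synthetic example: a volume of random intensities and a single-voxel
# "eye" mask standing in for the network's localization output.
rng = np.random.default_rng(0)
volume = rng.normal(size=(64, 64, 64))
eye_mask = np.zeros_like(volume)
eye_mask[20, 25, 30] = 1.0

center = center_of_mass(eye_mask)
roi = extract_roi(volume, center)
# Hypothetical axis convention: axis 2 is frequency encoding (S-I),
# axes 0 and 1 are the two phase encoding directions.
metric = motion_variance_metric(roi, freq_axis=2, phase_axes=(0, 1))
```

Under this reading, structure that varies only along a phase encoding axis raises the phase term and pushes the metric negative, while isotropic anatomy contributes roughly equally to both terms and cancels.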

Matching control ROIs, identified with the same algorithm trained on different data, were also selected over the cochlea and semi-circular canals, as these structures are well defined in the T2-weighted images and are fixed with respect to the head. Bulk head motion artifact, in addition to yielding artifact in eye regions of interest, would be expected to create artifact with these high-contrast inner ear structures.

Results

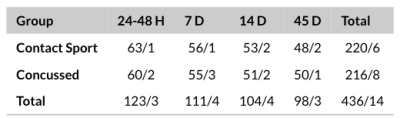

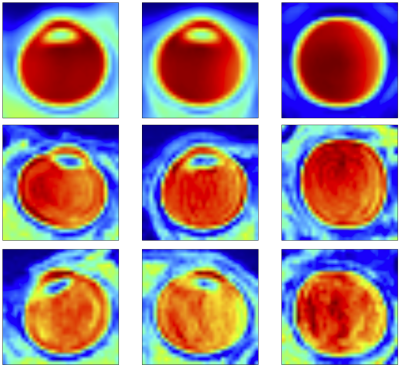

Regions of interest were generated from 450 T2-weighted imaging datasets, as described in Figure 2. ROI generation failed in 14 cases, including three cases of RF coil failure, repeated scans of two subjects with hydrocephalus, and repeated scans of one subject with a congenital cyst. In all other cases, regions of interest were properly generated around the eyes and inner ear structures. The average of all eye ROIs, as well as eye ROIs at the 95th and 5th percentiles of motion artifact, are shown in Figure 3.

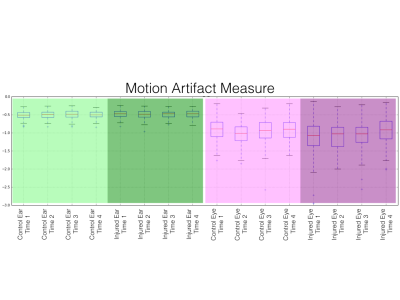

Plots of motion artifact metrics are shown in Figure 4. In both the control and injured groups, motion artifact quantification in the control region of interest was consistent and low, with no significant difference between groups at the p<0.05 level. Motion artifacts in eye regions of interest were greater than in ear regions of interest (p<1.00x10^-128). Increased eye motion artifact relative to controls was observed at the acute time point (p<1.25x10^-6), first subacute time point (p<3.35x10^-5), and second subacute time point (p<0.004). No significant difference at the p<0.05 level between control eye motion artifact and injured eye motion artifact was observed at the 45 day time point.

Discussion

It was observed that a convolutional neural network can robustly identify consistent regions of interest over eyes and inner ear structures in T2-weighted images of the human head. Motion artifacts associated with structures that are rigidly affixed to the skull were minimal, and were unchanged between injured and control groups. Significantly increased eye motion artifacts were observed in athletes following concussion, and the eye motion artifacts had returned to normal by the 45 day post-injury imaging session.

Conclusion

These data suggest that oculomotor deficits associated with concussion may manifest as eye motion artifacts in anatomical MRIs. These artifacts can be quantified through an automated process, and may offer additional information regarding injury in standard imaging protocols for mild traumatic brain injury.

Acknowledgements

This work was supported by the Defense Health Program under the Department of Defense Broad Agency Announcement for Extramural Medical Research through Award No. W81XWH-14-1-0561. Opinions, interpretations, conclusions and recommendations are those of the author and are not necessarily endorsed by the Department of Defense.

References

1. Ciuffreda, K. et al. Occurrence of oculomotor dysfunctions in acquired brain injury: A retrospective analysis. Optometry 78:155-161 (2007).

2. Samadani, U. et al. Eye tracking detects disconjugate eye movements associated with structural traumatic brain injury and concussion. J. Neurotrauma 32:548-566 (2015).

3. Balaban, C. et al. Oculomotor, vestibular and reaction time tests in mild traumatic brain injury. PLoS One 11(9):e0162168 (2016).

4. Bernstein, M. et al. Handbook of MRI Pulse Sequences. Elsevier (2004).

5. White, N. et al. PROMO: Real-time prospective motion correction in MRI using image-based tracking. Magn Reson Med 63(1):91-105 (2010).

6. Krizhevsky, A. et al. ImageNet Classification with Deep Convolutional Neural Networks. Advances in Neural Information Processing Systems 25 (2012).
