4857

Deep-learned 3D black-blood imaging using automatic labeling technique and 3D convolutional neural networks for detection of metastatic brain tumors

1 Electrical and Electronic Engineering, Yonsei University, Seoul, Republic of Korea; 2 Philips Korea, Seoul, Republic of Korea; 3 Radiology and Research Institute of Radiological Science, Yonsei University College of Medicine, Seoul, Republic of Korea; 4 Department of Radiology, Ewha Womans University College of Medicine, Seoul, Republic of Korea

Synopsis

Black-blood (BB) imaging plays a complementary role to contrast-enhanced 3D gradient-echo (CE 3D-GRE) imaging in the detection of brain metastases. We propose deep-learned 3D BB imaging with an auto-labeling technique and 3D convolutional neural networks (

Introduction

Black-blood (BB) magnetic resonance imaging selectively suppresses blood signals using various methods and has been reported to improve diagnostic performance in detecting brain metastases [1-3]. However, blood signal suppression is not always complete, even at a low velocity-encoding setting, and residual vessel signal may cause false-positive results. Therefore, BB imaging plays a complementary role to contrast-enhanced 3D gradient-echo (CE 3D-GRE) imaging [4] but requires an additional scan time of approximately 5 to 7 min to cover the whole brain. Recently, convolutional neural networks (CNNs), one class of deep learning architectures, have been proposed for generating or transforming medical images [5,6]. However, transforming CE 3D-GRE images directly into BB images with CNNs is problematic because of the contrast and quality of the acquired BB images that would serve as training labels. To solve this labeling problem, we developed an auto-labeling technique that uses pre-acquired CE 3D-GRE and BB images to automatically generate synthetic BB images, in which vessels are suppressed while metastases are retained, as labels for efficient CNN training. We then propose deep-learned 3D BB imaging using 3D CNNs trained with CE 3D-GRE images and synthetic BB images.

Methods

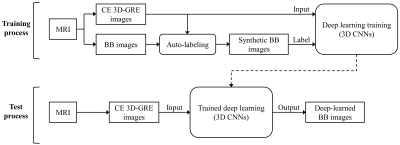

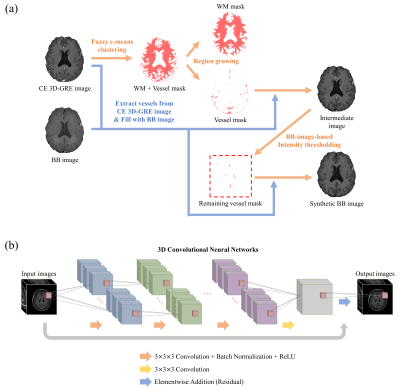

The proposed deep-learned 3D BB imaging consists of 1) a training process for the deep learning model and 2) a test process in which the trained model generates deep-learned BB images (Fig. 1). In the auto-labeling technique, blood vessels were extracted from CE 3D-GRE images using fuzzy c-means clustering, region growing, and BB-image-based intensity thresholding (Fig. 2a). With the help of the BB images, it was possible to create completely vessel-suppressed images (synthetic BB images) in which other tissues and metastases were retained; these enabled efficient learning of the mapping from CE 3D-GRE images to deep-learned BB images. The deep learning architecture, based on 3D CNNs, is shown in Fig. 2b. Data from 20 patients were used for training, 9 for validation, and 36 for testing.

To evaluate blood vessel suppression efficiency, we defined the vessel suppression ratio $R_s$ as

$$R_s = \frac{SI_{WM}}{0.5\left(SI_{WM}+SI_{vessel}\right)}$$

where $SI_{WM}$ is the average signal of a white matter (WM) region of interest (ROI) and $SI_{vessel}$ is the average signal of the three vessel ROIs of one vessel type. $R_s$ equals 1 when the vessel signal equals the WM signal and approaches 2 as the vessel signal is fully suppressed. Vessels were classified into three types with three ROIs each: type 1 ROIs were placed in the anterior cerebral artery, superior sagittal sinus, and vein of Galen; type 2 ROIs in the middle cerebral arteries; and type 3 ROIs in small cortical branches.

Results
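The auto-labeling step names three components (fuzzy c-means clustering, region growing, and BB-image-based intensity thresholding) but not how they are chained. The NumPy sketch below shows one plausible combination under stated assumptions: intensities are min-max normalized, the brightest fuzzy c-means cluster of the CE 3D-GRE image is taken as enhancing tissue (vessels plus metastases), enhancing voxels that are dark on the BB image seed a 6-connected region growing, and the grown vessel mask is set to a fill value to form the synthetic BB image. The function names (`fuzzy_cmeans_1d`, `region_grow`, `make_synthetic_bb`) and the parameters `seed_thr`, `grow_thr`, and `fill_value` are hypothetical and do not come from the authors' implementation.

```python
from collections import deque

import numpy as np


def normalize(vol):
    """Min-max normalize a volume to [0, 1] (assumed preprocessing)."""
    vol = vol.astype(float)
    return (vol - vol.min()) / (vol.max() - vol.min() + 1e-12)


def fuzzy_cmeans_1d(x, n_clusters=3, m=2.0, n_iter=100, tol=1e-6):
    """Standard fuzzy c-means on 1D intensities; returns centers and memberships."""
    centers = np.linspace(x.min(), x.max(), n_clusters)  # deterministic spread init
    for _ in range(n_iter):
        dist = np.abs(x[None, :] - centers[:, None]) + 1e-12
        inv = dist ** (-2.0 / (m - 1.0))
        u = inv / inv.sum(axis=0, keepdims=True)  # memberships sum to 1 per voxel
        um = u ** m
        new_centers = (um @ x) / um.sum(axis=1)  # membership-weighted means
        converged = np.max(np.abs(new_centers - centers)) < tol
        centers = new_centers
        if converged:
            break
    return centers, u


def region_grow(seeds, allowed):
    """Grow 6-connected regions from seed voxels, staying inside `allowed`."""
    grown = np.zeros(allowed.shape, dtype=bool)
    starts = np.argwhere(seeds & allowed)
    grown[tuple(starts.T)] = True
    queue = deque(tuple(int(i) for i in p) for p in starts)
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    while queue:
        z, y, x = queue.popleft()
        for dz, dy, dx in offsets:
            n = (z + dz, y + dy, x + dx)
            inside = all(0 <= n[k] < allowed.shape[k] for k in range(3))
            if inside and allowed[n] and not grown[n]:
                grown[n] = True
                queue.append(n)
    return grown


def make_synthetic_bb(ce_gre, bb, n_clusters=3, seed_thr=0.2, grow_thr=0.4, fill_value=0.0):
    """Hypothetical auto-labeling: suppress vessels in CE 3D-GRE using the BB image."""
    ce, b = normalize(ce_gre), normalize(bb)
    centers, u = fuzzy_cmeans_1d(ce.ravel(), n_clusters)
    # Enhancing voxels (vessels + metastases): hard-assigned to the brightest cluster.
    enhancing = (u.argmax(axis=0) == np.argmax(centers)).reshape(ce.shape)
    # BB-image-based thresholding: vessels are dark on BB, metastases stay bright.
    seeds = enhancing & (b < seed_thr)
    allowed = enhancing & (b < grow_thr)
    vessels = region_grow(seeds, allowed)
    synthetic = ce_gre.astype(float).copy()
    synthetic[vessels] = fill_value  # completely suppressed ("black") vessels
    return synthetic, vessels
```

Because growth is restricted to enhancing voxels that stay dark on the BB image, an enhancing lesion that remains bright on BB imaging is not absorbed into the vessel mask, which is the property the synthetic labels need.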

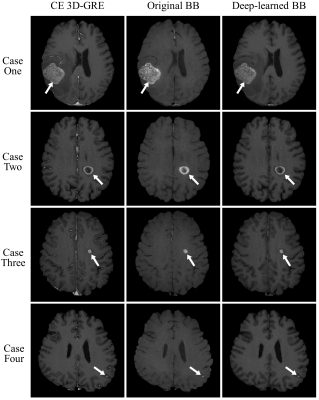

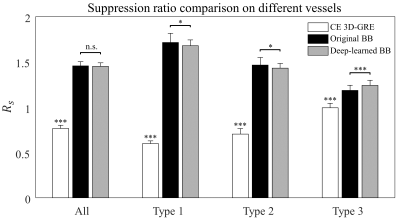

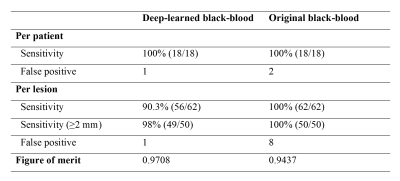

Fig. 3 shows, from left to right, CE 3D-GRE images, original BB images, and deep-learned BB images produced by the network. The four cases contain metastases of 30, 15, 6, and 3 mm, respectively. As seen in the third column, blood vessels were well suppressed and metastases of different sizes were retained in the deep-learned BB images.

Fig. 4 compares the vessel suppression ratio $R_s$ for each vessel type among CE 3D-GRE, original BB, and deep-learned BB images in the 36 test patients. Both original and deep-learned BB images had significantly higher ratios than CE 3D-GRE images for all vessel types (p<0.001). Original BB images had higher ratios than deep-learned BB images for type 1 and type 2 vessels (p<0.05), whereas for type 3 vessels deep-learned BB images had a significantly higher ratio than original BB images (p<0.001). Overall, the vessel suppression ratios of original and deep-learned BB images did not differ significantly (p>0.05).

Of the 36 test patients, 18 had a total of 62 brain metastases. In the per-patient analysis, sensitivity was 100% for both deep-learned and original BB imaging, but false-positive lesions were seen in 2 patients on original BB imaging (Fig. 5). In the per-lesion analysis, overall sensitivity was 90.3% for deep-learned BB and 100% for original BB. In the subgroup of nodules ≥2 mm, deep-learned BB missed only one lesion, for a sensitivity of 98%. Eight false-positive nodules were seen on original BB images, compared with only one on deep-learned BB images. In JAFROC analysis [7], the figures of merit (FOM) were 0.9708 for deep-learned BB and 0.9437 for original BB, which did not differ significantly (p>0.05).

Conclusion
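The vessel suppression ratio defined in the Methods and the per-lesion sensitivity reported above reduce to simple arithmetic. The short sketch below computes both; the helper names `vessel_suppression_ratio` and `sensitivity` are hypothetical, and the ROI values in the usage lines are illustrative numbers, not study data. The last line checks that the reported per-lesion sensitivity of 90.3% is consistent with 56 of 62 lesions being detected, the only integer count that rounds to that value.

```python
from statistics import mean


def vessel_suppression_ratio(si_wm, si_vessel_rois):
    """R_s = SI_WM / (0.5 * (SI_WM + SI_vessel)).

    si_vessel_rois holds the mean signals of the three ROIs of one vessel type;
    R_s is 1 when vessels are as bright as WM and 2 when vessel signal is zero.
    """
    si_vessel = mean(si_vessel_rois)
    return si_wm / (0.5 * (si_wm + si_vessel))


def sensitivity(true_positives, total_lesions):
    """Fraction of reference-standard lesions that were detected."""
    return true_positives / total_lesions


# Illustrative values only (not from the study).
print(vessel_suppression_ratio(100.0, [100.0, 100.0, 100.0]))  # 1.0
print(vessel_suppression_ratio(100.0, [0.0, 0.0, 0.0]))        # 2.0
print(round(100 * sensitivity(56, 62), 1))                      # 90.3
```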

In conclusion, our proposed deep-learned 3D BB imaging using an automatic labeling technique and 3D CNNs can be effectively used for detecting metastatic tumors in the brain.

Acknowledgements

This research was supported by the National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIP) (2016R1A2R4015016) and Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Science, ICT & Future Planning (2017R1D1A1B03030440).

References

[1] Nagao, E. et al. 3D turbo spin-echo sequence with motion-sensitized driven-equilibrium preparation for detection of brain metastases on 3T MR imaging. Am. J. Neuroradiol. 2011;32(4):664-670.

[2] Park, J. et al. Detection of small metastatic brain tumors: comparison of 3D contrast-enhanced whole-brain black-blood imaging and MP-RAGE imaging. Invest. Radiol. 2012;47(2):136-141.

[3] Lee, S., Park, D. W., Lee, J. Y., Lee, Y.-J. & Kim, T. Improved motion-sensitized driven-equilibrium preparation for 3D turbo spin echo T1-weighted imaging after gadolinium administration for the detection of brain metastases on 3T MRI. Br. J. Radiol. 2016;89(1063):20150176.

[4] Takeda, T. et al. Gadolinium-enhanced three-dimensional magnetization-prepared rapid gradient-echo (3D MP-RAGE) imaging is superior to spin-echo imaging in delineating brain metastases. Acta Radiol. 2008;49(10):1167-1173.

[5] Bahrami, K., Shi, F., Rekik, I. & Shen, D. Convolutional neural network for reconstruction of 7T-like images from 3T MRI using appearance and anatomical features. In Proceedings of the 1st International Workshop on Large-Scale Annotation of Biomedical Data and Expert Label Synthesis (LABELS’16), 2016;39-47.

[6] Nie, D., Cao, X., Gao, Y., Wang, L. & Shen, D. Estimating CT image from MRI data using 3D fully convolutional networks. In Proceedings of the 1st International Workshop on Large-Scale Annotation of Biomedical Data and Expert Label Synthesis (LABELS’16), 2016;170-178.

[7] Chakraborty, D. P. Analysis of location specific observer performance data: validated extensions of the jackknife free-response (JAFROC) method. Acad. Radiol. 2006;13(10):1187-1193.

Figures