4783

Deep Learning and Compressed Sensing: Automated Image Quality Assessment in Iterative Respiratory-Resolved Whole-Heart MRI

1 Advanced Clinical Imaging Technology, Siemens Healthcare AG, Lausanne, Switzerland
2 Institute of Bioengineering/Center for Neuroprosthetics, École Polytechnique Fédérale de Lausanne (EPFL), Lausanne, Switzerland
3 Department of Radiology, University Hospital (CHUV) and University of Lausanne (UNIL), Lausanne, Switzerland
4 Center for Biomedical Imaging (CIBM), Lausanne, Switzerland
5 LTS5, École Polytechnique Fédérale de Lausanne (EPFL), Lausanne, Switzerland
6 Division of Cardiology and Cardiac MR Center, University Hospital of Lausanne (CHUV), Lausanne, Switzerland
7 Department of Radiology and Medical Informatics, University Hospital of Geneva (HUG), Geneva, Switzerland

Synopsis

We aim to link compressed sensing (CS) reconstruction with automated image quality (IQ) assessment using deep learning. An automated IQ assessment algorithm, based on a deep convolutional neural regression network trained to evaluate the quality of whole-heart MRI datasets, is used to assess IQ at every iteration of a respiratory motion-resolved CS reconstruction. Not only does the IQ evolution assessed by the network visually correlate with the CS cost function, but the network can also distinguish the image quality of different respiratory phases in close agreement with visual expert assessment.

Introduction

Image quality (IQ) is a central metric in medical imaging. Fast and reliable IQ assessment may become especially important when iterative reconstruction algorithms are involved, as is the case with compressed sensing (CS) [1,2]. At each iteration, CS algorithms try to minimize an objective cost function, which usually combines a data-consistency term and a mathematical regularization term, with the final goal of converging consistently to a “better image”. However, IQ is not always strictly related to the regularization criteria, and, depending on the selected parameter set, the algorithm may converge to images that are too smooth or too noisy. Deep convolutional neural networks can be trained to mimic human assessment of the quality of medical images [3]. Here, we test the hypothesis that a deep convolutional neural regression network trained on a patient database of isotropic 3D whole-heart MR image volumes can be used to assess the convergence of a respiratory motion-resolved CS reconstruction [4], i.e., to quantitatively measure the IQ improvement across iterations. Finally, we demonstrate that the same network can be used to automatically select the respiratory phase with the highest IQ.

Materials and Methods
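For reference, the objective minimized by a respiratory-resolved CS reconstruction can be written generically as a data-consistency term plus weighted sparsity terms, following the structure described in [1,2]. The specific choice below, finite differences along the respiratory dimension ($\nabla_r$) and in space ($\nabla_s$) with weights $\lambda_r$ and $\lambda_s$, is an illustrative assumption; the exact regularizers and parameters used in [4] are not restated in this abstract.

$$
\hat{\mathbf{x}} = \arg\min_{\mathbf{x}} \; \frac{1}{2}\left\lVert \mathbf{E}\mathbf{x} - \mathbf{y} \right\rVert_2^2 \;+\; \lambda_r \left\lVert \nabla_r \mathbf{x} \right\rVert_1 \;+\; \lambda_s \left\lVert \nabla_s \mathbf{x} \right\rVert_1
$$

Here $\mathbf{x}$ is the 4D image (three spatial dimensions plus respiratory phase), $\mathbf{y}$ the acquired k-space data, and $\mathbf{E}$ the encoding operator combining coil sensitivities, the non-uniform Fourier transform, and the sampling pattern of each respiratory phase. The “objective cost function” tracked across iterations is the value of this expression at the current estimate of $\mathbf{x}$.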

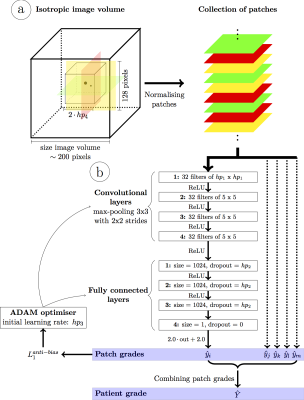

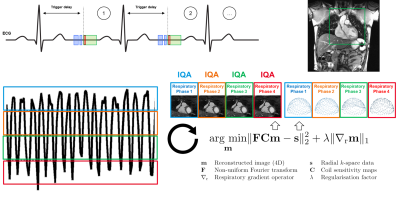

A deep convolutional neural network for automated image quality assessment [3] (Fig.1), which provides a quantitative IQ evaluation [5] as a continuous value ranging from 0 (poor) to 4 (excellent), was optimized, trained, and tested using a patient database of respiratory self-navigated, isotropic 3D whole-heart MR imaging volumes [6,7]. The network had previously shown high correlation with the ground-truth grading by expert readers, with accuracy within the bounds of intra- and inter-observer variability [3]. In this work, N=12 randomly selected k-space datasets from patients in the network's test set were reconstructed using a 4D respiratory-resolved CS reconstruction pipeline [4]. Reconstruction was performed with four respiratory phases using the XD-GRASP algorithm [2]. The neural network was used both to compare the quality of intermediate image volumes at successive iterations of the reconstruction and to assess the differences in IQ among the reconstructed respiratory phases (Fig.2). The resulting IQ evolution was visually compared with the evolution of the objective cost function minimized by the CS algorithm. Furthermore, an expert reader visually ranked the reconstructed respiratory phases according to their image quality by comparing anonymized pairs of image volumes for all 12 datasets. The expert ranking was then compared with the IQ differences between phases as assessed by the network.

Results
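The results reported in this section rest on scoring every respiratory phase with the network at every iteration of the CS reconstruction. A minimal sketch of such a monitoring loop is shown below under strong simplifying assumptions: a toy 1D signal with four respiratory phases, Cartesian random undersampling instead of the radial acquisition, a zero-filled inverse FFT standing in for the gridding step (iteration 0), and plain gradient descent on a smoothed ℓ1 penalty of differences between adjacent phases, which is a simplification and not the XD-GRASP solver [2]. All names and parameters (`reconstruct_and_monitor`, `score_fn`, `lam`, `eps`, `step`) are hypothetical, and `toy_score` is only a placeholder for the trained network.

```python
import numpy as np

def forward(x, masks):
    """Undersampled orthonormal FFT of each respiratory phase (one phase per row of x)."""
    return masks * np.fft.fft(x, axis=1, norm="ortho")

def adjoint(k, masks):
    """Adjoint of `forward`, restricted to real-valued images."""
    return np.real(np.fft.ifft(masks * k, axis=1, norm="ortho"))

def cost(x, y, masks, lam, eps):
    """Data consistency + smoothed l1 norm of differences between adjacent phases."""
    residual = forward(x, masks) - y
    d = np.diff(x, axis=0)
    return 0.5 * np.sum(np.abs(residual) ** 2) + lam * np.sum(np.sqrt(d ** 2 + eps))

def gradient(x, y, masks, lam, eps):
    """Gradient of `cost` with respect to the real-valued images x."""
    g_dc = adjoint(forward(x, masks) - y, masks)
    d = np.diff(x, axis=0)           # d[p] = x[p + 1] - x[p]
    w = d / np.sqrt(d ** 2 + eps)    # derivative of the smoothed absolute value
    g_reg = np.zeros_like(x)
    g_reg[:-1] -= w                  # contribution of d[p] to x[p]
    g_reg[1:] += w                   # contribution of d[p] to x[p + 1]
    return g_dc + lam * g_reg

def reconstruct_and_monitor(y, masks, score_fn, n_iter=20, step=0.25, lam=0.05, eps=1e-2):
    """Gradient descent that records the cost and one IQ score per phase at each iteration."""
    x = adjoint(y, masks)            # iteration 0: zero-filled image (stand-in for gridding)
    costs, scores = [], []
    for it in range(n_iter + 1):
        costs.append(cost(x, y, masks, lam, eps))
        scores.append([score_fn(phase) for phase in x])
        if it < n_iter:
            x = x - step * gradient(x, y, masks, lam, eps)
    return x, np.array(costs), np.array(scores)

# Toy usage: four identical phases plus noise, about 40% of k-space sampled per phase.
rng = np.random.default_rng(0)
P, N = 4, 64
truth = np.tile(np.sin(np.linspace(0, 4 * np.pi, N)), (P, 1))
masks = (rng.random((P, N)) < 0.4).astype(float)
y = forward(truth + 0.05 * rng.standard_normal((P, N)), masks)

def toy_score(img):
    """Hypothetical placeholder for the IQ network: penalizes high-frequency roughness."""
    return -float(np.std(np.diff(img)))

x, costs, scores = reconstruct_and_monitor(y, masks, toy_score)
best_phase = int(np.argmax(scores[-1]))  # phase with the highest final score
```

`costs` and the columns of `scores` are the toy analogues of the cost-function and per-phase IQ curves, and `best_phase` illustrates automatic selection of the highest-IQ phase. The step size 0.25 is chosen below 2/L for this toy problem, where the gradient's Lipschitz constant satisfies L ≤ 1 + 4·lam/√eps = 3, so the recorded cost never increases.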

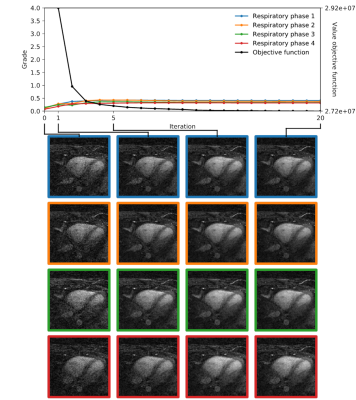

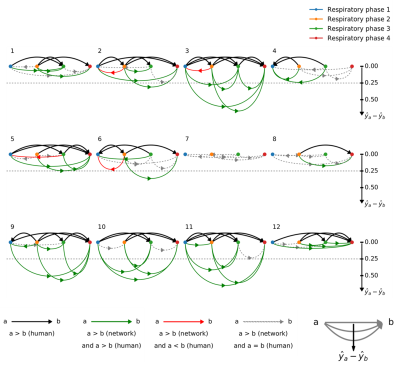

Fig.3 and Fig.4 show, for two different datasets, the IQ evolution assessed in all four respiratory phases as a function of the iteration number of the respiratory-resolved reconstruction, in direct comparison with the objective cost function (normalized for visual comparison). Iteration 0 corresponds to the initial gridding step before the CS optimization starts. In the first dataset (Fig.3), a clear increase in estimated IQ is visible, and the respiratory phases do not all converge to the same grade. In the second dataset (Fig.4), however, no considerable increase can be seen, either with increasing iterations or across respiratory levels. The visual correspondence between the evolution of the objective cost function and the IQ assessment was very high in all reconstructions. Fig.5 shows the results of the paired comparisons between respiratory phases. Green and red arrows indicate agreement and disagreement, respectively, between the network and the expert reader. The network and the reader agreed in 100% of the pairs in which the network graded one phase at least 0.25 points higher than the other.
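The thresholded agreement described for Fig.5 can be expressed as a small counting rule. The sketch below assumes that, for one dataset, the network's per-phase grades and the reader's pairwise choices are available as Python dictionaries; the function name `compare_pairs`, the data layout, and the example values are hypothetical and do not reproduce the study data.

```python
from itertools import combinations

def compare_pairs(network_scores, expert_choice, threshold=0.25):
    """Count agreements between network and reader over pairs of respiratory phases.

    network_scores: {phase: network IQ grade on the 0-4 scale} for one dataset
    expert_choice:  {(a, b): phase the reader judged better}, keys with a < b
    Only pairs whose network grades differ by at least `threshold` are counted.
    Returns (n_agree, n_counted).
    """
    n_agree = n_counted = 0
    for a, b in combinations(sorted(network_scores), 2):
        diff = network_scores[a] - network_scores[b]
        if abs(diff) < threshold:
            continue  # difference too small to call a network preference
        network_pick = a if diff > 0 else b
        n_counted += 1
        n_agree += int(network_pick == expert_choice[(a, b)])
    return n_agree, n_counted

# Hypothetical grades for one dataset (phases 1-4) and hypothetical reader choices.
scores = {1: 3.1, 2: 2.6, 3: 2.5, 4: 1.9}
reader = {(1, 2): 1, (1, 3): 1, (1, 4): 1, (2, 3): 3, (2, 4): 2, (3, 4): 3}
print(compare_pairs(scores, reader))                 # (5, 5): pair (2, 3) is skipped
print(compare_pairs(scores, reader, threshold=0.0))  # (5, 6): pair (2, 3) now disagrees
```

With four phases there are six pairs per dataset; the threshold excludes pairs where the network's preference is within a small grade difference, which is where disagreement with the reader is most likely.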

Discussion and Conclusion

The visual correspondence between the IQ assessed by the neural network and the objective cost function of the compressed sensing reconstruction suggests that, in general, the mathematical features of the latter provide a reasonable quantitative measure of perceived image quality. In the limited database used here, the quality of the gridding reconstruction also appears to predict the final result: for example, when the initial quality was very low, little improvement was observed regardless of the respiratory level. Finally, the algorithm for automated IQ assessment can be used to compare the reconstructed respiratory phases. These results show that the neural network can assess not only the quality of images from different patients (as it was trained to do), but also differences in quality between different reconstructions of the same anatomy. This suggests that the algorithm relies on information beyond purely anatomical spatial content, and that it could be particularly useful in comparative studies, or even be inserted directly into the compressed sensing reconstruction pipeline in addition to, or as a substitute for, the cost function.
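The suggestion of inserting the network into the reconstruction could be formalized as follows; this is a hypothetical formulation that was not evaluated in this work. Let $C(\mathbf{x})$ denote the CS objective, $\mathbf{E}$ and $\mathbf{y}$ the encoding operator and acquired k-space data, $q_\theta(\mathbf{x}) \in [0, 4]$ the IQ grade predicted by the network with weights $\theta$, and $\mu \ge 0$ an assumed weighting parameter. The “addition” and “substitution” variants could then read

$$
\hat{\mathbf{x}}_{\text{add}} = \arg\min_{\mathbf{x}} \; C(\mathbf{x}) - \mu\, q_\theta(\mathbf{x}),
\qquad
\hat{\mathbf{x}}_{\text{sub}} = \arg\min_{\mathbf{x}} \; \frac{1}{2}\left\lVert \mathbf{E}\mathbf{x} - \mathbf{y} \right\rVert_2^2 - \mu\, q_\theta(\mathbf{x})
$$

where, as an assumption, the substitution variant replaces only the regularization terms and keeps data consistency. The minus sign turns a higher predicted grade into a lower cost, and both variants require the gradient of $q_\theta$ with respect to the image, which back-propagation provides if the network is differentiable with respect to its input.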

References

[1] Lustig M, et al. Sparse MRI: the application of compressed sensing for rapid MR imaging. Magn Reson Med. 2007; 58:1182-1195.

[2] Feng L, et al. XD-GRASP: golden-angle radial MRI with reconstruction of extra motion-state dimensions using compressed sensing. Magn Reson Med. 2016; 75:775-788.

[3] Demesmaeker R, et al. Image Quality through the Eyes of Deep Learning: A First Implementation in Coronary MRI. ISMRM Workshop on Magnetic Resonance Imaging of Cardiac Function, NYU Langone Medical Center, New York City, NY, USA.

[4] Piccini D, et al. Four-Dimensional Respiratory Motion-Resolved Whole Heart Coronary MR Angiography. Magn Reson Med. 2017; 77:1473-1484.

[5] Monney P, et al. Single Center Experience of the Application of Self-Navigated 3D Whole Heart Cardiovascular Magnetic Resonance for the Assessment of Cardiac Anatomy in Congenital Heart Disease. J Cardiovasc Magn Reson. 2015; 17:55-66.

[6] Piccini D, et al. Respiratory Self-Navigation for Whole-Heart Bright-Blood Coronary MRI: Methods for Robust Isolation and Automatic Segmentation of the Blood Pool. Magn Reson Med. 2012; 68:571-579.

[7] Piccini D, et al. Respiratory Self-Navigated Postcontrast Whole-Heart Coronary MR Angiography: Initial Experience in Patients. Radiology. 2014; 270:378-386.
