4775

Robust tissue tracking from cardiac cine MRI with deep-learning-based fully automatic myocardium segmentation

1 Biomedical Engineering, University of Virginia, Charlottesville, VA, United States; 2 Radiology, University of Virginia, Charlottesville, VA, United States; 3 Medicine, University of Virginia, Charlottesville, VA, United States

Synopsis

Tissue tracking post-processing of cardiac cine MRI can be used to calculate myocardial deformation parameters without additional scans. One major drawback of this processing is reduced reliability due to interference from blood and trabecular muscle signals and varying image contrast. Manual segmentation of the LV myocardium can improve robustness but is time-consuming. We developed a deep convolutional neural network to automatically segment the myocardium and used symmetric deformable registration to obtain the tracking information from the resulting binary masks. The segmentation and tracking worked reliably, resulting in accurate pixel movement trajectories.

Introduction

Tissue tracking post-processing of cardiac cine MRI can be used to calculate myocardial deformation parameters without additional scans [1]. Optical flow, which aims to find pixel-to-pixel correspondence among frames, is generally used to obtain the tracking information. When applied to 2D short-axis cine images, one major drawback is poor reliability due to interference from blood and trabecular muscle signals and varying image contrast. Drawing accurate LV epi- and endocardial contours to eliminate non-myocardium pixels can significantly improve the robustness of the tracking, but doing so manually is impractical because of the large number of cardiac phases and slices. Deep learning models have shown great success in biomedical image segmentation [2], so a neural network can be trained to reliably segment the myocardium to assist tissue tracking. Because symmetric deformable registration [3, 4] is among the top-performing registration methods for finding pixel correspondence, it is used in this study to calculate optical flow maps from masked images.

Methods

A 3D U-Net structure [5] was used for epi- and endocardial segmentation. Compared with a 2D model, a 3D model can exploit through-slice information to improve segmentation accuracy. The network consisted of three encoding layers and three decoding layers with short-cut connections. To maximize the efficiency of each network, two independent networks were trained to draw the epi- and endocardial contours separately. The training and validation data were downloaded from the 2009 Cardiac MR Left Ventricle Segmentation Challenge [6] and contained 15 subjects for training and 15 for validation. The subjects included normal volunteers and patients with myocardial infarction or LV hypertrophy. Ground-truth contours were available for the end-diastole and end-systole phases. To reduce the effect of misalignment across slices, each input data set was padded to 32 slices before being fed to the model. The in-slice data were also cropped to keep only the center region, which accelerated training and improved accuracy. Extensive augmentation, including 3D translation, scaling, rotation, shear and intensity jittering, was used to compensate for the small number of training subjects. Post-processing consisted of removing small non-connected contours, applying binary closing and convexification to fix obvious mistakes made by the model, and combining both contours after removing endocardial regions not encompassed by the epicardial contour.
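The slice padding, in-slice cropping and contour combination described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the zero-padding mode, the symmetric placement of the padding and the crop size are all assumptions, since the abstract states only the target of 32 slices and that the center region was kept.

```python
import numpy as np


def pad_slices(volume, target_slices=32):
    """Zero-pad a (slices, rows, cols) volume along the slice axis.

    Assumption: padding is split evenly before and after the stack.
    """
    n_slices = volume.shape[0]
    if n_slices > target_slices:
        raise ValueError("volume has more slices than the target")
    before = (target_slices - n_slices) // 2
    after = target_slices - n_slices - before
    return np.pad(volume, ((before, after), (0, 0), (0, 0)), mode="constant")


def center_crop(volume, size):
    """Keep the central size x size in-plane region of every slice."""
    rows, cols = volume.shape[1:]
    if size > rows or size > cols:
        raise ValueError("crop size exceeds the in-plane dimensions")
    r0 = (rows - size) // 2
    c0 = (cols - size) // 2
    return volume[:, r0:r0 + size, c0:c0 + size]


def combine_contours(epi_mask, endo_mask):
    """Myocardium = inside the epicardium and outside the endocardium.

    Endocardial pixels that fall outside the epicardial mask are dropped
    first, mirroring the post-processing step described in the text.
    """
    epi = np.asarray(epi_mask, dtype=bool)
    endo = np.asarray(endo_mask, dtype=bool) & epi
    return epi & ~endo
```

For example, `center_crop(pad_slices(volume), 128)` would prepare a hypothetical cine volume for the network, and `combine_contours` would turn the two predicted masks into the single myocardium mask used for tracking. The small-component removal, binary closing and convexification steps are not shown here.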

After the myocardium was automatically segmented for all cardiac phases, the symmetric deformable registration was performed between the first frame and all subsequent frames on the binary masks with only myocardium as the foreground. The resulting deformation field was used to plot the tissue movement trajectories across the entire cardiac cycle.
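As a rough illustration of how a deformation field can be turned into tissue trajectories, the sketch below adds the displacement sampled at each starting pixel to that pixel's first-frame position. The array layout, the function name `track_points`, the nearest-pixel sampling and the convention that each field maps first-frame coordinates to a later frame are assumptions made for this example; the actual output convention depends on the registration software used.

```python
import numpy as np


def track_points(points, displacement_fields):
    """Follow first-frame points through the cardiac cycle.

    points: (N, 2) array of (row, col) positions in the first frame.
    displacement_fields: (T, 2, H, W) array, where
        displacement_fields[t, :, r, c] is the assumed (row, col)
        displacement carrying first-frame pixel (r, c) to frame t.
    Returns a (T, N, 2) array of positions, one per frame and point.
    """
    points = np.asarray(points, dtype=float)
    fields = np.asarray(displacement_fields, dtype=float)
    _, _, height, width = fields.shape

    # Nearest-pixel lookup of the displacement at each starting point.
    rows = np.clip(np.rint(points[:, 0]).astype(int), 0, height - 1)
    cols = np.clip(np.rint(points[:, 1]).astype(int), 0, width - 1)
    disp = fields[:, :, rows, cols]  # shape (T, 2, N)

    # Position in frame t = starting position + displacement to frame t.
    return points[None, :, :] + np.transpose(disp, (0, 2, 1))
```

Because every frame is registered to the first frame, each trajectory point is computed independently and tracking errors do not accumulate from frame to frame. Bilinear interpolation of the field would be a natural refinement for sub-pixel starting positions.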

Results and Discussions

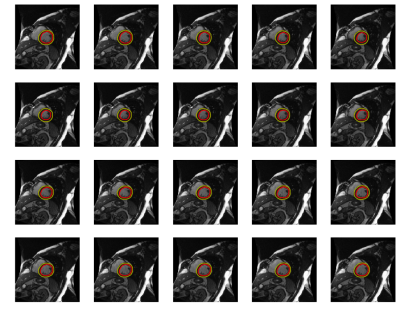

The developed 3D U-Net yields reliable and accurate segmentation. On the validation dataset, the mean Dice score against the ground truth is 0.950±0.022 for the epicardial contour and 0.905±0.067 for the endocardial contour. Even though the training data contain ground-truth contours only at the end-diastole and end-systole phases, the data augmentation can mimic the other cardiac phases so that the model produces accurate segmentation on all cardiac phases, as shown in Fig. 1. Out of 15 subjects, only one LV hypertrophy patient had sub-optimal endocardial segmentation during systole (Dice score: 0.62), owing to a very small LV cavity and almost indistinguishable boundaries. Because LV hypertrophy patients have more varied LV cavity sizes and shapes, segmentation for this patient group needs more training data. Without the LV hypertrophy patients, the Dice score for the endocardial contour is 0.921±0.038.
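For reference, the Dice score between a predicted mask A and a ground-truth mask B is 2|A∩B| / (|A| + |B|). The following sketch shows one way to compute it and to summarize per-subject scores as mean ± standard deviation. The function names are illustrative, and the abstract does not state whether a population or sample standard deviation was reported; the population form (NumPy's default) is assumed here.

```python
import numpy as np


def dice_score(pred, truth):
    """Dice overlap between two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        # Both masks empty: treated as perfect agreement (a convention).
        return 1.0
    return 2.0 * np.logical_and(pred, truth).sum() / denom


def summarize(scores):
    """Return (mean, standard deviation) of per-subject Dice scores."""
    scores = np.asarray(scores, dtype=float)
    # Assumption: population standard deviation (ddof=0).
    return scores.mean(), scores.std()
```

A score of 1 means the two masks coincide exactly, and a score of 0 means they do not overlap at all.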

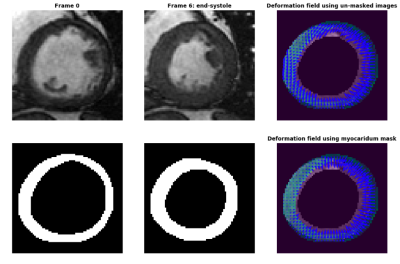

Figure 2 shows cardiac cine images at the initial frame (top left) and the end-systolic frame (top middle), the automatically segmented myocardium masks (bottom left and bottom middle), and the deformation fields obtained with the same deformable registration algorithm. Using the unmasked original images (top right) results in inaccurate tracking: the arrows at the top skew to the left and miss the edges. Using the binary masks yields more precise pixel correspondences. The deformation fields were also calculated using the masked original images, and the results are almost the same as with the binary masks. However, the computation using the binary masks is faster and more robust because it is not affected by inhomogeneous myocardial signal. The subject had an infarction, as shown by the non-uniform strain. Further study will be performed to quantify the strain and compare it with DENSE.

Conclusion

The developed 3D U-Net can reliably segment epi- and endocardial regions to generate accurate myocardium masks. The masks can be used for robust tissue tracking to calculate regional and global strains to assist clinical diagnosis.

Acknowledgements

UVa-Coulter Translational Research Partnership

References

1. Pedrizzetti G, Claus P, Kilner PJ, et al. Principles of cardiovascular magnetic resonance feature tracking and echocardiographic speckle tracking for informed clinical use. J Cardiovasc Magn Reson. 2016. 18:51.

2. Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation. arXiv:1505.04597 [cs.CV]. 2015.

3. Avants BB, Tustison NJ, Song G, et al. A reproducible evaluation of ANTs similarity metric performance in brain image registration. Neuroimage. 2011. 54(3):2033-2044.

4. Avants BB, Tustison NJ, Stauffer M, et al. The insight toolkit image registration framework. Front Neuroinform. 2014. 8:44.

5. Cicek O, Abdulkadir A, Lienkamp SS, et al. 3D U-Net: learning dense volumetric segmentation from sparse annotation. arXiv:1606.06650 [cs.CV]. 2016.

6. http://smial.sri.utoronto.ca/LV_Challenge/Home.html
