4614

Discriminative deep feature fusion of Contrast-enhanced MR for malignancy characterization of hepatocellular carcinoma

1School of Medical Information Engineering, Guangzhou University of Chinese Medicine, Guangzhou, China; 2Department of Radiology, Guangdong General Hospital, Guangdong Academy of Medical Sciences, Guangzhou, China; 3Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China

Synopsis

The malignancy of hepatocellular carcinoma (HCC) is of great prognostic significance. Deep features from the arterial phase of contrast-enhanced MR have recently been shown to outperform texture features for malignancy characterization of HCC. However, only the arterial phase was used for deep feature extraction, ignoring the contribution of the other phases of contrast-enhanced MR. In this work, we design a discriminative multimodal deep feature fusion framework that extracts both the correlated and the separate (phase-specific) components of deep features across contrast-enhanced MR images for malignancy characterization of HCC. The framework outperforms simple concatenation and the recently proposed deep correlation model.

Introduction

Hepatocellular carcinoma (HCC) is the third leading cause of cancer death worldwide, and the malignancy of HCC is crucial for patient prognosis1. Deep features from the arterial phase of contrast-enhanced MR have recently been shown to outperform texture features for malignancy characterization of HCC2. However, only the arterial phase was used, ignoring the information carried by the other phases of contrast-enhanced MR. To better exploit contrast-enhanced MR, we design a discriminative multimodal deep feature fusion framework that extracts both the correlated and the separate components of deep features across contrast-enhanced MR images for malignancy characterization of HCC.

Method

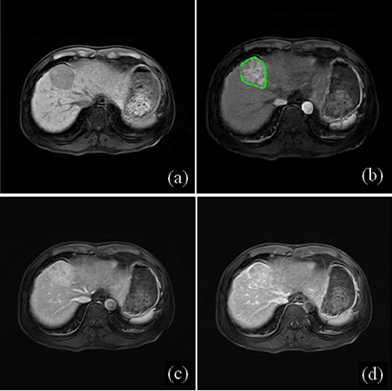

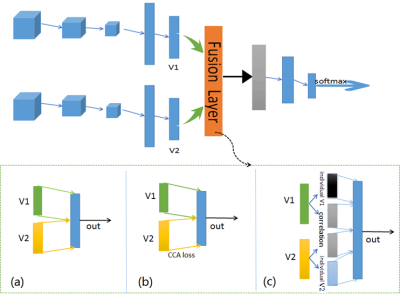

Forty-six histopathologically proven HCCs in 46 patients were retrospectively retrieved for the period from September 2011 to October 2015. The ground truth of HCC malignancy was taken from the histology reports. Gd-DTPA-enhanced MR images, including pre-contrast, arterial phase, portal venous phase and delayed phase images, were acquired for each patient on a 3.0T MR scanner. Figure 1 shows representative contrast-enhanced MR images of a 59-year-old man with a pathologically confirmed low-grade HCC (Edmondson grade II). To enlarge the training set for deep learning, numerous 3D patches were extracted from the limited number of tumors in multiple phases of contrast-enhanced MR. 3D deep features derived from these patches in the pre-contrast (PS), arterial (AP) and portal venous (PV) phases were then fused for malignancy characterization of HCC. Figure 2 depicts the framework of discriminative deep feature fusion of multiple phases (Figure 2c), with conventional concatenation (Figure 2a) and the deep correlation model (Figure 2b) shown for comparison. The goal of learning a discriminative multimodal representation (Figure 2c) is to extract features shared across modalities together with modality-specific features for each modality individually. The fusion layer was therefore designed as follows3:

$$\min_{V_1, V_2} \|V_1 X_1 - V_2 X_2\|^2,$$

where $X_1$ and $X_2$ denote the deep features of two phases and $V_1$ and $V_2$ the corresponding mapping matrices. Minimizing this objective makes the correlated parts of both modalities similar, while the modality-specific features are kept orthogonal to the correlated part. Performance values are reported as mean ± standard deviation over 10 repetitions of four-fold cross-validation on the data set.

Results
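The fusion objective in the Method admits the trivial solution $V_1 = V_2 = 0$ unless the mappings are constrained. As an illustration only, the sketch below adds the classical unit-variance constraint $V_i C_{ii} V_i^\top = I$, under which minimizing $\|V_1X_1 - V_2X_2\|^2$ is equivalent to a (ridge-regularized) canonical correlation analysis; the individual part of each phase is then taken as the residual that is orthogonal, over samples, to that phase's correlated components. This is a CCA-style stand-in written with NumPy, not the robust correlated and individual component analysis of reference 3 or the authors' network layer; the function names, the number of components `k` and the ridge term `eps` are hypothetical choices.

```python
import numpy as np


def _inv_sqrt(C):
    """Inverse square root of a symmetric positive-definite matrix."""
    w, U = np.linalg.eigh(C)
    return (U / np.sqrt(w)) @ U.T  # U diag(w^-1/2) U^T


def shared_and_individual(X1, X2, k, eps=1e-6):
    """Split two phases' deep features into correlated and individual parts.

    Hypothetical helper (CCA stand-in, not the RCICA solver of ref. 3).
    X1: (d1, n) and X2: (d2, n) hold one column per sample (e.g. per patch).
    Returns S1, S2 (k, n) correlated parts, R1 (d1, n) and R2 (d2, n)
    individual parts, and the k canonical correlations in descending order.
    """
    n = X1.shape[1]
    X1 = X1 - X1.mean(axis=1, keepdims=True)
    X2 = X2 - X2.mean(axis=1, keepdims=True)
    # Ridge-regularized covariances keep the inverse square roots stable.
    C11 = X1 @ X1.T / n + eps * np.eye(X1.shape[0])
    C22 = X2 @ X2.T / n + eps * np.eye(X2.shape[0])
    C12 = X1 @ X2.T / n
    W1, W2 = _inv_sqrt(C11), _inv_sqrt(C22)
    # SVD of the whitened cross-covariance gives the canonical directions;
    # its singular values are the canonical correlations.
    U, rho, Vt = np.linalg.svd(W1 @ C12 @ W2)
    V1 = U[:, :k].T @ W1  # (k, d1) mapping matrix for phase 1
    V2 = Vt[:k] @ W2      # (k, d2) mapping matrix for phase 2
    S1, S2 = V1 @ X1, V2 @ X2
    # Remove from each phase its projection onto the row space of its
    # correlated components, so that R_i @ S_i.T == 0 (orthogonality).
    R1 = X1 - (X1 @ np.linalg.pinv(S1)) @ S1
    R2 = X2 - (X2 @ np.linalg.pinv(S2)) @ S2
    return S1, S2, R1, R2, rho[:k]
```

For the fusion variants compared in the Results, the rows of `S1` and `S2` would play the role of the correlated part and the rows of `R1` and `R2` the individual part, stacked per sample before classification.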

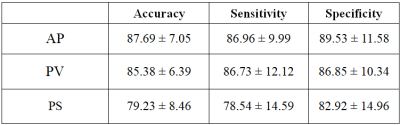

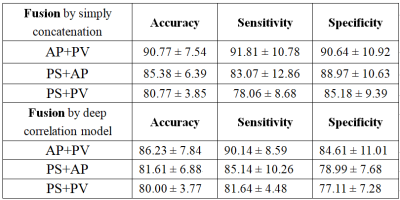

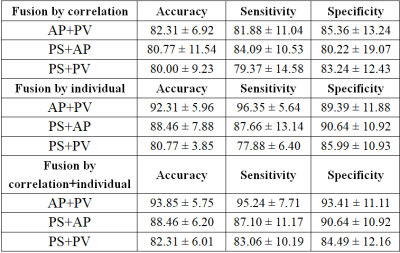

Table 1 shows the characterization performance of 3D deep features from the pre-contrast (PS), arterial (AP) and portal venous (PV) phases individually. The arterial phase yielded better results than the portal venous and pre-contrast phases for malignancy characterization. Table 3 tabulates the characterization performance of 3D deep feature fusion by simple concatenation and by the deep correlation model. Fusion by simple concatenation clearly improved performance compared with any individual phase, whereas the performance of the deep correlation model slightly decreased. Correspondingly, fusion of only the correlated part generated by the proposed framework also decreased performance, as shown in Table 3, implying that using only the correlated part between contrast-enhanced MR images may not be optimal for malignancy characterization. In contrast, fusion of the individual part generated by the proposed framework markedly improved performance, suggesting that modality-specific features may be crucial for malignancy characterization. Finally, fusion of both the correlated and the individual parts generated by the proposed framework yielded the best results for malignancy characterization.

Discussion
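The tabulated values are summaries over 10 repetitions of four-fold cross-validation, as described in the Method. A minimal sketch of that protocol, using only the Python standard library, is given below. Two details are assumptions not stated in the abstract: folds are formed over tumors (one per patient) rather than over patches, so that patches from the same tumor never fall into both the training and the test fold, and the standard deviation is taken over all 40 per-fold scores. `repeated_kfold`, `evaluate` and `score_fn` are hypothetical names; `score_fn` stands in for training the classifier on the training tumors and scoring it on the test tumors.

```python
import random
import statistics
from typing import Callable, Hashable, Iterable, Iterator, List, Sequence, Tuple

Split = Tuple[List[Hashable], List[Hashable]]


def repeated_kfold(ids: Iterable[Hashable], k: int = 4, repeats: int = 10,
                   seed: int = 0) -> Iterator[Split]:
    """Yield (train_ids, test_ids) for `repeats` independent shuffles of k folds."""
    rng = random.Random(seed)
    ids = list(ids)
    for _ in range(repeats):
        shuffled = ids[:]
        rng.shuffle(shuffled)
        # Round-robin assignment keeps fold sizes within one of each other.
        folds = [shuffled[i::k] for i in range(k)]
        for i in range(k):
            train = [x for j, fold in enumerate(folds) if j != i for x in fold]
            yield train, folds[i]


def evaluate(ids: Iterable[Hashable],
             score_fn: Callable[[Sequence[Hashable], Sequence[Hashable]], float],
             k: int = 4, repeats: int = 10, seed: int = 0) -> Tuple[float, float]:
    """Return mean and standard deviation of score_fn over all k * repeats folds."""
    scores = [score_fn(train, test)
              for train, test in repeated_kfold(ids, k, repeats, seed)]
    return statistics.mean(scores), statistics.stdev(scores)
```

For example, `evaluate(range(46), score_fn)` runs 40 train/test splits over the 46 tumors, with test folds of 11 or 12 tumors each. Splitting by tumor rather than by patch matters here because the patches were extracted from a limited number of tumors, and patches of one tumor are strongly correlated.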

Conventional multimodal fusion methods simply concatenate deep features from multiple modalities4, without exploiting the correlated and separate information between modalities. The deep correlation model5 was recently proposed to extract maximally correlated representations of multimodal data via canonical correlation analysis for survival prediction. However, it extracts only the shared (correlated) representations of deep features across modalities, ignoring the separate, modality-specific information. The proposed discriminative multimodal deep feature fusion framework therefore considers both the correlation and the separation of deep features between contrast-enhanced MR images, and outperforms simple concatenation and the recently proposed deep correlation model. Surprisingly, fusing only the correlated part, whether obtained from the deep correlation model or from the proposed framework, decreased malignancy characterization performance. One possible explanation is that HCC may preserve hepatocellular function, so that contrast agent uptake makes the contrast-enhanced MR images markedly distinct across phases, leaving the correlated part between phases insufficient for malignancy characterization. Conversely, the individual part extracted from each specific modality in contrast-enhanced MR plays a more important role in malignancy characterization. We therefore expect the proposed framework to be advantageous for fusing modalities with distinct differences in clinical practice.

Conclusion

Discriminative multimodal deep feature fusion, which extracts both the correlated and the separate components of deep features between contrast-enhanced MR images, was shown to provide complementary information that improves malignancy characterization of HCC.

Acknowledgements

This research was supported by a grant from the National Natural Science Foundation of China (NSFC: 81771920), and in part by grants from the National Natural Science Foundation of China (NSFC: 61302171 and U1301258) and the Shenzhen Basic Research Project (No. JCYJ20150630114942291).

References

1. Forner A, Llovet JM, Bruix J. Hepatocellular carcinoma. Lancet 2012; 379(9822): 1245-1255.

2. Wang Q, Zhang L, Xie Y, Zheng H, Zhou W. Malignancy characterization of hepatocellular carcinoma using hybrid texture and deep feature. Proc 24th IEEE Int Conf Image Process (ICIP) 2017; 4162-4166.

3. Panagakis Y, Nicolaou M, Zafeiriou S, Pantic M. Robust correlated and individual component analysis. IEEE Trans Pattern Anal Mach Intell 2015; 38(8): 1665-1678.

4. Setio AAA, Ciompi F, Litjens G, et al. Pulmonary nodule detection in CT images: false positive reduction using multi-view convolutional networks. IEEE Trans Med Imaging 2016; 35(5): 1160-1169.

5. Yao J, Zhu X, Zhu F, Huang J. Deep correlation learning for survival prediction from multi-modality data. Proc Int Conf Med Image Comput Comput Assist Interv (MICCAI) 2017; 406-414.
