4613

Using deep learning to investigate the value of diffusion weighted images for malignancy characterization of hepatocellular carcinoma

¹School of Medical Information Engineering, Guangzhou University of Chinese Medicine, Guangzhou, China, ²Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China, ³Department of Radiology, Guangdong General Hospital, Guangdong Academy of Medical Sciences, Shenzhen, China

Synopsis

The apparent diffusion coefficient (ADC) derived from diffusion-weighted imaging (DWI) has been widely used for lesion characterization. However, ADC is calculated from image intensities acquired at different b values; it is therefore a low-level image feature that may be insufficient to represent the heterogeneity of a neoplasm. Furthermore, ADC measurements are subject to motion and image artifacts. Deep features learned with deep learning techniques are generally considered superior to traditional low-level features. The purpose of this study is to characterize the malignancy of hepatocellular carcinoma (HCC) using deep features derived from DWI data.

Introduction

Diffusion-weighted imaging (DWI) has been widely used for lesion characterization, and the apparent diffusion coefficient (ADC) measured from it has been reported to estimate the pathological grade of hepatocellular carcinoma (HCC) [1]. However, ADC is derived from image intensities acquired at different b values; it is therefore a low-level image feature that may be insufficient to represent the heterogeneity of a neoplasm. Furthermore, ADC measurements are subject to motion and image artifacts [2]. Deep features learned with deep learning techniques are generally considered superior to traditional low-level features [3]. The purpose of this study is to characterize the malignancy of HCC using deep features derived from DWI data with a convolutional neural network (CNN).

Method

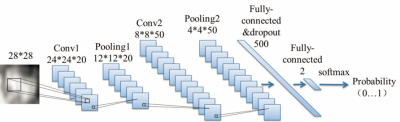

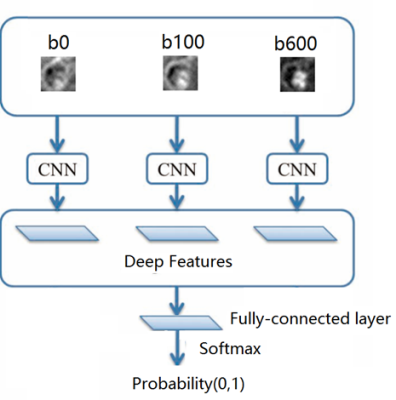

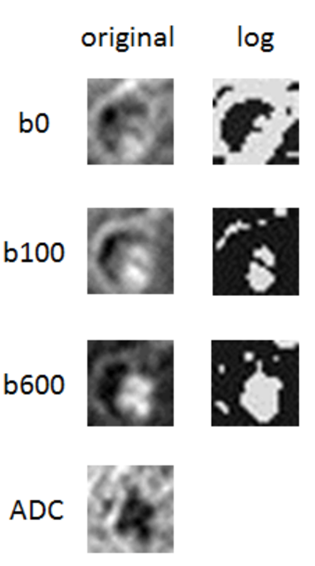
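
Figure 3 concerns the Log map and the ADC map. As a minimal sketch of how these two maps could be computed from the three b-value images, the plain-Python example below takes the Log map as the pixel-wise natural logarithm and fits the ADC per pixel as the negative least-squares slope of ln S against b. The log-linear fitting method, the clamp `EPS` that guards against log(0), and the names `log_map` and `adc_map` are assumptions made for illustration; the abstract does not specify the fitting procedure.

```python
import math

EPS = 1e-6  # assumed floor so that log(0) is never evaluated


def log_map(image):
    """Pixel-wise natural logarithm of a 2D image given as a list of rows."""
    return [[math.log(max(value, EPS)) for value in row] for row in image]


def adc_map(images, b_values):
    """Per-pixel log-linear fit of ln S(b) = ln S0 - b * ADC.

    images:   list of 2D images (lists of rows), one per b value
    b_values: list of b values in s/mm^2, same order as images
    Returns a 2D list holding the negative least-squares slope (the ADC).
    """
    logs = [log_map(img) for img in images]
    n = len(b_values)
    b_mean = sum(b_values) / n
    sxx = sum((b - b_mean) ** 2 for b in b_values)
    rows, cols = len(images[0]), len(images[0][0])
    adc = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            y = [lg[r][c] for lg in logs]
            y_mean = sum(y) / n
            sxy = sum((b - b_mean) * (yi - y_mean)
                      for b, yi in zip(b_values, y))
            adc[r][c] = -sxy / sxx
    return adc


if __name__ == "__main__":
    # Synthetic, noise-free example with made-up ADC values (mm^2/s).
    b_values = [0, 100, 600]
    true_adc = [[1.0e-3, 2.0e-3]]
    images = [[[1000.0 * math.exp(-b * a) for a in row] for row in true_adc]
              for b in b_values]
    print(adc_map(images, b_values))
```

Because the least-squares slope is a linear combination of the log intensities, the ADC map in this sketch carries no information beyond what the three Log maps already contain.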

Forty-eight subjects with 48 pathologically confirmed HCC lesions, examined from July 2012 to May 2017, were included in this retrospective study. DWI was performed for each subject on a 3.0T MR scanner. The histological grade of each HCC was retrieved from the archived clinical histology report: 25 lesions were low grade and 23 were high grade. DWI was acquired in the axial view with three b values (0, 100, and 600 s/mm²). Deep features were extracted from the three b-value images as follows: (1) a resampling method extracted multiple 2D axial planes of each HCC in each b-value image to enlarge the training dataset; (2) deep features of the HCCs were extracted from each of the three b-value images separately for malignancy characterization (Figure 1); (3) the deep features derived from the three b-value images were fused for malignancy characterization (Figure 2). In addition, a Log map was calculated by applying a logarithm operation to each b-value image (Figure 3), and the ADC map was computed by mono-exponentially fitting the three b-value points from the three b-value images (Figure 3). Deep features were also extracted separately from the Log maps and from the ADC map for malignancy characterization. Characterization performance is reported as mean ± standard deviation over four-fold cross-validation with 10 repetitions on the dataset.

Results
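
Performance in this study is reported as mean ± standard deviation over four-fold cross-validation with 10 repetitions. A minimal sketch of such a protocol follows, in plain Python. The callback `evaluate` (which would train and test a classifier on the given indices), the unstratified shuffling, the fixed seed, and the choice to take the standard deviation over all 40 fold scores are assumptions; the abstract does not specify them.

```python
import random
import statistics


def k_fold_indices(n_samples, k, rng):
    """Shuffle sample indices and deal them into k roughly equal folds."""
    indices = list(range(n_samples))
    rng.shuffle(indices)
    return [indices[i::k] for i in range(k)]


def repeated_cross_validation(n_samples, evaluate, k=4, repetitions=10, seed=0):
    """Run k-fold cross-validation `repetitions` times.

    evaluate(train_idx, test_idx) is a hypothetical callback that trains on
    train_idx, tests on test_idx, and returns an accuracy in [0, 1].
    Returns (mean, standard deviation) over all k * repetitions fold scores.
    """
    rng = random.Random(seed)
    scores = []
    for _ in range(repetitions):
        folds = k_fold_indices(n_samples, k, rng)
        for i, test_idx in enumerate(folds):
            train_idx = [j for f, fold in enumerate(folds) if f != i
                         for j in fold]
            scores.append(evaluate(train_idx, test_idx))
    return statistics.mean(scores), statistics.stdev(scores)


if __name__ == "__main__":
    # Toy labels matching the cohort sizes: 25 low grade (0), 23 high grade (1).
    labels = [0] * 25 + [1] * 23

    def majority_baseline(train_idx, test_idx):
        """Stand-in classifier: always predict the majority training label."""
        ones = sum(labels[j] for j in train_idx)
        prediction = 1 if ones * 2 > len(train_idx) else 0
        hits = sum(1 for j in test_idx if labels[j] == prediction)
        return hits / len(test_idx)

    mean_acc, std_acc = repeated_cross_validation(len(labels), majority_baseline)
    print(f"{mean_acc:.4f} ± {std_acc:.4f}")
```

With 48 lesions and four folds, each test fold in this sketch holds 12 lesions, so a single fold score moves in steps of 1/12; this granularity is one reason the reported standard deviations are worth reading alongside the means.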

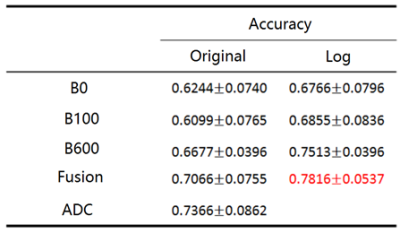

Among the deep features derived from the original b0, b100, and b600 images, those derived from b600 yield the highest accuracy (0.6677±0.0396) for malignancy characterization. Fusing the deep features derived from the original b0, b100, and b600 images improves performance (0.7066±0.0755). For comparison, the deep feature derived from the ADC map performs better still (0.7366±0.0862) than those derived from the original three b-value images or their fusion. Furthermore, deep features derived from the Log maps perform better than those derived from the three b-value images or their fusion. Specifically, fusing the deep features derived from Logb0, Logb100, and Logb600 gives the best performance for malignancy characterization (0.7816±0.0537), exceeding that of the deep feature derived from the ADC map.

Discussion

Our study suggests that deep features derived from a higher b value yield better performance for malignancy characterization, implying that DWI with a higher b value is better suited to differentiating the malignancy of neoplasms. In a heterogeneous tumor, the more cystic or necrotic fraction shows greater signal attenuation on high b-value images because water diffusion there is less restricted, which improves tumor differentiation [4]. Our study also shows that the deep feature derived from the ADC map performs better than those derived from the original three b-value images, which supports the potential of ADC calculated from DWI data for differentiating malignancy in clinical practice. However, deep features derived from the Log maps are superior to those derived from either the original three b-value images or the ADC map. The ADC map was computed by mono-exponentially fitting the three b-value points according to ADC = (ln(S0) - ln(S))/b, where S0 and S are the signal intensities at b = 0 and at a nonzero b value, so the ADC map is calculated directly from the pixel intensities of the Log maps. Thus, the ADC map extracts only a low-level feature (intensities) from the three Log maps, whereas the fusion of deep features derived from Logb0, Logb100, and Logb600 yields representative high-level features from the same three Log maps, resulting in better performance than the deep feature from the ADC map. Note that, unlike ADC calculation, the proposed deep learning model does not require motion to be controlled during DWI acquisition.

Conclusion

Our study suggests that fusing the deep features derived from the Log maps of the three b-value images yields better performance for malignancy characterization of HCCs than deep features derived from the ADC map or from the original three b-value images. This approach may be broadly applicable to the characterization of other kinds of lesions using DWI in clinical practice.

Acknowledgements

This research was supported by a grant from the National Natural Science Foundation of China (NSFC: 81771920), and in part by grants from the National Natural Science Foundation of China (NSFC: 61302171 and U1301258) and the Shenzhen Basic Research Project (No. JCYJ20150630114942291).

References

1. Nishie A, Tajima T, Asayama Y, et al. Diagnostic performance of apparent diffusion coefficient for predicting histological grade of hepatocellular carcinoma. Eur J Radiol. 2011;80(2):e29-e33.

2. Guyader JM, Bernardin L, Douglas NH, et al. Influence of image registration on apparent diffusion coefficient images computed from free-breathing diffusion MR images of the abdomen. J Magn Reson Imaging. 2015;42:315-330.

3. Litjens G, Kooi T, Bejnordi BE, et al. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60-88.

4. Koh DM, Collins DJ. Diffusion-weighted MRI in the body: applications and challenges in oncology. AJR Am J Roentgenol. 2007;188:1622-1635.

Figures