4517

Delayed fMRI timing in the human auditory cortex in lexical processing is correlated with increased EEG power in beta band

¹Institute of Neuroscience, National Yang Ming University, Taipei, Taiwan; ²Institute of Biomedical Engineering, National Taiwan University, Taipei, Taiwan

Synopsis

Using fast fMRI (whole-brain sampling at 10 Hz), we found that the BOLD signal in the left hemisphere was delayed by 500 ms during a lexical discrimination task compared with a non-lexical discrimination task. EEG recorded from the same subjects suggested that this BOLD delay is related to oscillatory power in the beta band.

Introduction

Hemodynamic responses can exhibit fine timing modulations driven by stimuli and behavior. Previous work showed that inter-regional BOLD signal timing is closely related to reaction time in mental chronometry tasks¹ and to neuronal oscillatory activity in choice reaction-time tasks². In the human visual cortex, elicited BOLD signals have been shown to be temporally linear up to 0.5 Hz³, and sustained visual stimulation can generate oscillatory BOLD signals up to 0.75 Hz⁴. Together, these results suggest that the fMRI signal carries fine timing information. However, the fine temporal characteristics of the BOLD signal in the human auditory cortex are less explored, particularly under different top-down modulations.

Here we used ultra-fast fMRI with a 10 Hz sampling rate⁵ together with EEG to measure BOLD and electrophysiological signals in the human auditory cortex in response to speech sounds. Our design delivered the same speech sounds in two conditions, with and without a lexical context. We hypothesized that the BOLD signal in the auditory cortex would exhibit different temporal characteristics between the two conditions because it receives different context-dependent feedback information. The EEG data were used to probe the neural correlates of these BOLD temporal characteristics.

Method

Subjects (n = 14; 6 female; age 22.7 ± 1.8 years) performed lexical tone and color discrimination tasks after giving written informed consent under a protocol approved by the Institutional Review Board. In “lexical” trials, subjects were asked to respond if the visual cue (a digit) matched the tone of the presented Chinese speech sound. In “non-lexical” trials, subjects were asked to press a button after hearing the same sound stimulus if the visual cue was colored red. The same experimental design was used for both fMRI and EEG data collection.

Data were collected on a 3T MRI scanner (Skyra, Siemens) using a 32-channel head coil array and the inverse imaging method⁵ (TR = 0.1 s; TE = 30 ms; flip angle = 30°). Two runs were collected for each task condition; each run lasted 380 s and contained about 90 trials. EEG data were collected at a sampling rate of 1000 Hz using a 64-channel cap and amplifier (Compumedics Neuroscan, USA). Vertical and horizontal eye movements were also recorded. Electrode impedances were kept below 10 kΩ throughout the experiment.

Functional MRI data were reconstructed using minimum-norm estimates⁵ and further analyzed with a general linear model (GLM), in which hemodynamic responses were modeled by finite impulse response (FIR) functions. We chose the time-to-half (TTH), the time at which the response reaches 50% of its maximum, as the fMRI timing index. EEG data were first re-referenced to the average of all electrodes and then high-pass filtered (0.1 Hz). Time-frequency representations (TFRs) of the EEG signals were calculated using Morlet wavelets between 4 Hz and 80 Hz. The TFR power was linearly detrended at each EEG electrode and then summed across frequencies. The correlation between fMRI timing and EEG power was estimated by bootstrap: subjects were sampled with replacement 500 times to generate estimates of fMRI timing and EEG power for the lexical and non-lexical conditions separately. Linear regression over these bootstrap samples was used to test the significance of the correlation.
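The abstract does not give the FIR settings (number of lags, nuisance regressors) or the solver. As a minimal sketch, assuming one regressor per post-stimulus lag at the 0.1 s sampling interval, no baseline or nuisance columns, and an ordinary least-squares fit, FIR deconvolution could look like the following. `fir_design` and `least_squares` are hypothetical helper names, written in plain Python so the example stays self-contained.

```python
def fir_design(n_scans, onsets, n_lags):
    """FIR design matrix: column `lag` is 1 at sample onset + lag for every onset."""
    X = [[0.0] * n_lags for _ in range(n_scans)]
    for onset in onsets:
        for lag in range(n_lags):
            if onset + lag < n_scans:
                X[onset + lag][lag] += 1.0
    return X


def least_squares(X, y):
    """Ordinary least squares via the normal equations and Gaussian elimination."""
    p = len(X[0])
    # Normal equations A b = c with A = X^T X and c = X^T y
    A = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    c = [sum(row[i] * v for row, v in zip(X, y)) for i in range(p)]
    for col in range(p):
        # Partial pivoting for numerical stability
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        c[col], c[pivot] = c[pivot], c[col]
        for r in range(col + 1, p):
            factor = A[r][col] / A[col][col]
            for k in range(col, p):
                A[r][k] -= factor * A[col][k]
            c[r] -= factor * c[col]
    # Back substitution on the upper-triangular system
    beta = [0.0] * p
    for r in range(p - 1, -1, -1):
        beta[r] = (c[r] - sum(A[r][k] * beta[k] for k in range(r + 1, p))) / A[r][r]
    return beta
```

Onsets are expressed in samples; at TR = 0.1 s a 380 s run has 3800 samples, so an onset at t seconds is sample 10·t. Overlapping responses from closely spaced trials are separated as long as the design matrix has full rank.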
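The TTH index can be computed from an estimated response as follows. This sketch assumes that sample 0 is stimulus onset, that the baseline is zero, and that the 50% crossing is refined by linear interpolation between 0.1 s samples; the abstract does not state how the crossing was located. `time_to_half` is a hypothetical name.

```python
def time_to_half(response, tr=0.1):
    """Time (s) at which `response` first reaches 50% of its maximum.

    Assumptions: sample 0 is stimulus onset (t = 0 s), the baseline is 0,
    and the crossing is located by linear interpolation between samples.
    """
    peak = max(response)
    if peak <= 0:
        raise ValueError("response has no positive peak")
    half = 0.5 * peak
    # First sample at or above half maximum (exists because the peak qualifies)
    i = next(k for k, v in enumerate(response) if v >= half)
    if i == 0:
        return 0.0
    prev, curr = response[i - 1], response[i]
    # prev < half <= curr, so the denominator is positive
    frac = (half - prev) / (curr - prev)
    return (i - 1 + frac) * tr
```

Because the interpolation works on sample indices, shifting a response by five samples (0.5 s at 10 Hz) shifts its TTH by exactly 0.5 s, the size of the left auditory cortex delay reported in the Results.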
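The EEG steps (Morlet TFR, linear detrending, summation across frequencies) can be sketched as below. The abstract does not specify the number of wavelet cycles, the wavelet normalization, or the axis along which power was detrended, so this sketch assumes 7 cycles, a Gaussian envelope scaled to unit sum and truncated at ±3σ, and detrending along time at each frequency. `morlet_power`, `linear_detrend` and `band_power` are hypothetical helper names.

```python
import cmath
import math


def morlet_power(signal, fs, freq, n_cycles=7.0):
    """Power time course of `signal` at `freq` (Hz), sampled at `fs` (Hz).

    Uses a complex Morlet wavelet whose Gaussian envelope has
    sigma_t = n_cycles / (2*pi*freq), truncated at +/-3 sigma and scaled
    to unit sum (n_cycles and the normalization are assumptions).
    """
    sigma_t = n_cycles / (2.0 * math.pi * freq)
    half_width = math.ceil(3.0 * sigma_t * fs)
    taus = [k / fs for k in range(-half_width, half_width + 1)]
    envelope = [math.exp(-tau * tau / (2.0 * sigma_t ** 2)) for tau in taus]
    norm = sum(envelope)
    # Conjugated wavelet, so the sum below is a cross-correlation with the wavelet
    kernel = [g / norm * cmath.exp(-2j * math.pi * freq * tau)
              for g, tau in zip(envelope, taus)]
    n = len(signal)
    power = []
    for i in range(n):
        acc = 0j
        for k, w in enumerate(kernel):
            j = i + k - half_width  # sample at time offset taus[k]
            if 0 <= j < n:  # implicit zero-padding at the edges
                acc += signal[j] * w
        power.append(abs(acc) ** 2)
    return power


def linear_detrend(values):
    """Subtract the least-squares straight line from `values`."""
    n = len(values)
    if n < 2:
        return [0.0] * n
    t_mean = (n - 1) / 2.0
    v_mean = sum(values) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    sxy = sum((t - t_mean) * (v - v_mean) for t, v in enumerate(values))
    slope = sxy / sxx
    return [v - (v_mean + slope * (t - t_mean)) for t, v in enumerate(values)]


def band_power(signal, fs, freqs, n_cycles=7.0):
    """Sum over `freqs` of Morlet power, each detrended along time (assumed axis)."""
    total = [0.0] * len(signal)
    for f in freqs:
        detrended = linear_detrend(morlet_power(signal, fs, f, n_cycles))
        total = [a + b for a, b in zip(total, detrended)]
    return total
```

For the beta band one might call `band_power(eeg, 1000, range(13, 31))` on a single-electrode epoch `eeg` sampled at 1000 Hz (for example, T7 or T8). The 13 to 30 Hz limits are a conventional choice rather than one stated in the abstract, and the pure-Python loops are meant for clarity, not for full recordings.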
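The bootstrap-and-regression step can be sketched as below. Several details are assumptions rather than statements from the abstract: the inputs are per-subject scalar values (a hypothetical layout), each bootstrap replicate averages the resampled subjects and takes lexical minus non-lexical differences, and the slope is tested with a normal approximation to the t distribution, used here because the Python standard library has no t CDF. `bootstrap_differences` and `slope_test` are hypothetical names.

```python
import math
import random


def _resampled_mean(values, idx):
    """Mean of `values` over the (possibly repeated) subject indices `idx`."""
    return sum(values[i] for i in idx) / len(idx)


def bootstrap_differences(tth_lex, tth_non, pow_lex, pow_non, n_boot=500, seed=0):
    """Resample subjects with replacement `n_boot` times.

    Returns two lists, one entry per replicate: the EEG power difference
    and the TTH difference, both lexical minus non-lexical.
    """
    rng = random.Random(seed)
    n = len(tth_lex)
    d_power, d_tth = [], []
    for _ in range(n_boot):
        idx = rng.choices(range(n), k=n)  # one bootstrap sample of subjects
        d_tth.append(_resampled_mean(tth_lex, idx) - _resampled_mean(tth_non, idx))
        d_power.append(_resampled_mean(pow_lex, idx) - _resampled_mean(pow_non, idx))
    return d_power, d_tth


def slope_test(x, y):
    """OLS slope of y on x, Pearson r, and a two-sided p-value.

    The p-value uses a normal approximation to the t statistic with
    n - 2 degrees of freedom (an assumption; adequate for large n).
    """
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    r = sxy / math.sqrt(sxx * syy)
    if r * r >= 1.0:
        return slope, r, 0.0  # perfect linear relation
    t = r * math.sqrt((n - 2) / (1.0 - r * r))
    p = math.erfc(abs(t) / math.sqrt(2.0))  # P(|Z| > |t|)
    return slope, r, p
```

With the design described above, each input list would hold 14 values (one per subject), and `slope_test(*bootstrap_differences(...))` regresses the TTH difference on the EEG power difference across the 500 replicates.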

Results

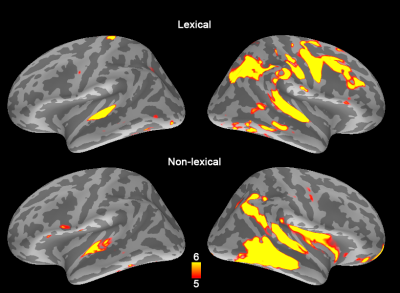

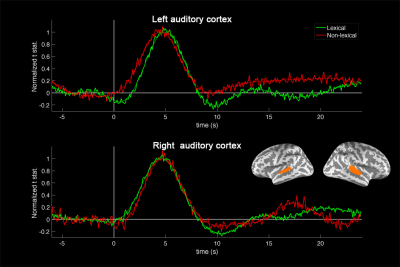

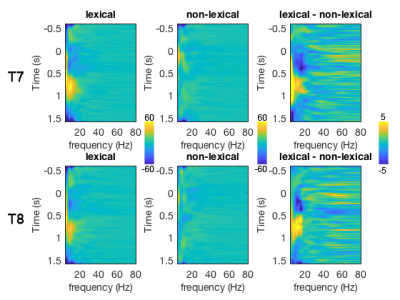

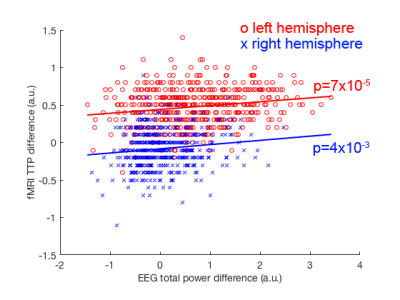

In the left auditory cortex, the TTH was 3.2 ± 0.10 s for the lexical condition and 2.7 ± 0.26 s for the non-lexical condition. In the right auditory cortex, the TTH was 3.0 ± 0.12 s and 3.0 ± 0.22 s, respectively. Only the left auditory cortex showed a significant TTH delay (0.5 s) between conditions (p = 0.022). Figures 1 and 2 show the BOLD signal distribution and time courses in the auditory cortices. Figure 3 shows the z-scores of the TFR at electrodes T7 and T8 for the lexical and non-lexical conditions; significant EEG power was concentrated between 4 Hz and 20 Hz. The between-condition difference in significant beta-band EEG power was significantly correlated with the between-condition difference in fMRI timing (TTH) in both the left (p = 7×10⁻⁵) and right (p = 4×10⁻³) auditory cortices (Figure 4).

Discussion

Our experiment revealed that the same speech sound elicited BOLD signals in the left auditory cortex that were delayed by 500 ms in the lexical compared with the non-lexical context. This delay was associated with beta-band oscillatory power, which has previously been related to the BOLD signal in a resting-state study⁶. The EEG-fMRI coupling reported here also corroborates the finding that top-down and bottom-up information transfer in the auditory cortex during sentence listening involves the delta, alpha, and beta bands⁷.

Acknowledgements

This work was partially supported by the Ministry of Science and Technology, Taiwan (103-2628-B-002-002-MY3, 105-2221-E-002-104), and the Academy of Finland (No. 298131).

References

1 Menon R. S., Luknowsky D. C. & Gati J. S. Proc Natl Acad Sci U S A. 1998; 95:10902-10907.

2 Lin F. H., Witzel T., Raij T. et al. Neuroimage. 2013; 78:372-384.

3 Dale A. & Buckner R. Hum Brain Mapp. 1997; 5:329-340.

4 Lewis L. D., Setsompop K., Rosen B. R. et al. Proc Natl Acad Sci U S A. 2016; 113:E6679-E6685.

5 Lin F. H., Witzel T., Mandeville J. B. et al. Neuroimage. 2008; 42:230-247.

6 Laufs H., Krakow K., Sterzer P. et al. Proc Natl Acad Sci U S A. 2003; 100:11053-11058.

7 Fontolan L., Morillon B., Liegeois-Chauvel C. et al. Nat Commun. 2014; 5:4694.
