4414

Holographic Computing for MRI-based Visualization and Interactive Planning of Prostate Interventions

1 Department of Computer Science, University of Houston, Houston, TX, United States; 2 Department of Surgery, Hamad Medical Corporation, Doha, Qatar; 3 Department of Clinical Imaging, Hamad Medical Corporation, Doha, Qatar; 4 Mechanical Engineering Department, Texas A&M University at Qatar, Doha, Qatar

Synopsis

A platform is presented for holographic visualization and planning of image-guided prostate interventions. Its pipeline includes modules for MRI segmentation, structure rendering, trajectory planning for biopsy or brachytherapy needles, and spatial co-registration of the outputs of these modules for augmented reality holographic rendering. The interface was implemented on HoloLens smart glasses, with gesture and voice activation for interactive selection of the input parameters of any module. The work is motivated by the potential of holographic augmented reality to offer true 3D appreciation of the morphology of the procedure area and interactive planning of access trajectories.

Introduction

Image-guided targeted prostate interventions (e.g., biopsy and brachytherapy) have advanced considerably with MRI-ultrasound (US) fusion systems [1]. MRI-US fusion provides the operator with real-time intraoperative US guidance together with detailed anatomical information from the preoperative MRI. In practice, however, the required hand-eye coordination is challenging. These systems require the operator to (i) manually perform the intervention in one orientation (including the intrarectal manipulation of the US probe) while (ii) viewing the intervention on a screen in a different orientation. Moreover, the view of the procedure area is 2D (i.e., a fused real-time US image and the corresponding MRI slice). There is a clinical need to interact with, spatially merge, and visualize information from MR, US, and tracked needle trajectories, and to align this information with the patient's position during the intervention. Recent advances in holographic technology may overcome these challenges by rendering an augmented/mixed reality environment that provides true 3D perception of the anatomy [2,3,4]. Motivated by the potential of this emerging technology to improve patient outcomes, we present a platform for holographic computing to visualize and interactively plan prostate interventions.

Methods

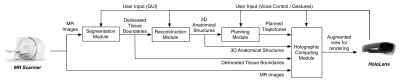

Figure 1 illustrates the architecture of the current version of the platform, showing its modules and their interconnection as a pipeline. The inputs to the pipeline are MR images (DICOM format) that are transferred offline to a host PC. The segmentation module uses band-pass thresholding and/or active contouring to delineate tissue boundaries on the MR images. The operator can interactively adjust the segmentation parameters via a graphical user interface (GUI) presented on the HoloLens. The delineated tissue boundaries are then sent to the reconstruction module and the holographic computing module. The reconstruction module generates 3D anatomical structures of the delineated tissues by applying marching cubes and smoothing algorithms, using the same parameters for all slices in a set. The planning module allows the operator to assign a virtual needle-insertion trajectory on the 3D anatomical structures generated by the reconstruction module. For the transperineal approach, the system requires the selection of two points (a target point inside the prostate and an entry point on the perineum); for the transrectal approach, a single target point inside the prostate is sufficient, and the rest of the trajectory is computed from the position of the probe inserted in the rectum. The holographic computing module receives and combines the information from all modules and generates rendering instructions for the HoloLens interface. In addition to the GUI, the planning and holographic computing modules accept input on the HoloLens through voice and gesture commands, which improves human-in-the-loop interaction with the information rendered by the HoloLens.
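The abstract does not detail the segmentation step. As a minimal sketch, assuming the DICOM series has already been loaded into a NumPy array indexed (slice, row, col), band-pass thresholding amounts to keeping the voxels whose intensity falls inside an operator-chosen window. The function name `band_pass_threshold` and the toy intensities are hypothetical and are not taken from the platform.

```python
import numpy as np


def band_pass_threshold(volume: np.ndarray, low: float, high: float) -> np.ndarray:
    """Return a boolean mask of voxels whose intensity lies in [low, high].

    Hypothetical helper: one (low, high) window is applied to the whole stack.
    """
    if low > high:
        raise ValueError("low must not exceed high")
    return (volume >= low) & (volume <= high)


if __name__ == "__main__":
    # Toy 2-slice stack indexed (slice, row, col); intensities are made up.
    vol = np.array([[[10, 50, 90],
                     [40, 60, 200]],
                    [[55, 70, 30],
                     [80, 120, 65]]], dtype=float)
    mask = band_pass_threshold(vol, low=50, high=100)
    print(int(mask.sum()))  # 7 voxels fall inside the window
```

In the described platform, the window bounds presumably correspond to the parameters the operator tunes from the HoloLens GUI. The active-contour alternative is not sketched here.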
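The two planning modes can be illustrated with a minimal sketch of straight-line trajectories checked against a segmented structure such as the urethra. It assumes NumPy, a binary mask from the segmentation module, and coordinates in millimetres ordered (slice, row, col). The voxel spacing is derived from the phantom protocol described in this section (3.3 mm slice spacing; 200 mm FOV / 320 matrix = 0.625 mm in-plane). `plan_trajectory`, `path_hits_structure`, and the probe "needle-guide point" used as the transrectal entry are hypothetical names and a hypothetical model, not the platform's API. In the abstract, the operator assesses collisions visually, so the automated check is only illustrative.

```python
import numpy as np

# Hypothetical voxel spacing in mm, ordered (slice, row, col), derived from the
# phantom protocol: 3.3 mm slice spacing, 200 mm FOV / 320 matrix = 0.625 mm.
SPACING_MM = np.array([3.3, 0.625, 0.625])


def plan_trajectory(target_mm, entry_mm=None, probe_guide_mm=None):
    """Return (entry, target) in mm for a straight needle path.

    Transperineal: the operator picks both the perineal entry and the target.
    Transrectal: only the target is picked; the entry is a hypothetical
    needle-guide point on the rectal probe.
    """
    target = np.asarray(target_mm, dtype=float)
    if entry_mm is not None:
        return np.asarray(entry_mm, dtype=float), target
    if probe_guide_mm is None:
        raise ValueError("transrectal planning needs the probe needle-guide point")
    return np.asarray(probe_guide_mm, dtype=float), target


def path_hits_structure(entry, target, mask, spacing_mm=SPACING_MM):
    """Approximate collision test between a straight path and a binary mask.

    The segment is sampled every half of the smallest voxel size and each
    sample is mapped to its nearest voxel; samples outside the volume are ignored.
    """
    entry = np.asarray(entry, dtype=float)
    target = np.asarray(target, dtype=float)
    length = float(np.linalg.norm(target - entry))
    step = 0.5 * float(spacing_mm.min())
    n = max(2, int(np.ceil(length / step)) + 1)
    points = np.linspace(entry, target, n)          # (n, 3) positions in mm
    idx = np.rint(points / spacing_mm).astype(int)  # nearest voxel indices
    in_bounds = np.all((idx >= 0) & (idx < np.array(mask.shape)), axis=1)
    idx = idx[in_bounds]
    return bool(mask[idx[:, 0], idx[:, 1], idx[:, 2]].any())


if __name__ == "__main__":
    # Toy "urethra": a line of voxels through every slice at row 20, col 20.
    urethra = np.zeros((10, 40, 40), dtype=bool)
    urethra[:, 20, 20] = True
    entry, target = plan_trajectory([16.5, 24.375, 12.5], entry_mm=[16.5, 0.0, 12.5])
    print(path_hits_structure(entry, target, urethra))  # True: crosses the urethra
    entry, target = plan_trajectory([16.5, 24.375, 6.25], probe_guide_mm=[16.5, 0.0, 6.25])
    print(path_hits_structure(entry, target, urethra))  # False: passes beside it
```

Sampling at half the smallest voxel size keeps the sketch simple. An exact voxel traversal (e.g., a 3D DDA) would avoid missing voxels that the path only clips at a corner.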
The platform was tested with images of an MR-compatible prostate phantom (Model 048A; CIRS Inc., Norfolk, USA) acquired on a Siemens 3T Skyra scanner with a T2-weighted turbo spin echo sequence (TE: 101 ms; TR: 3600 ms; FS: 3; slice thickness: 3 mm; slice spacing: 3.3 mm; FOV: 200 × 200 mm; matrix: 320 × 320).

Results and Discussion

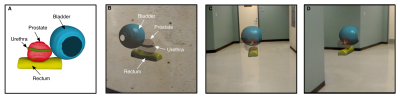

The augmented-reality view rendered by the holographic computing module was superior to desktop-based volume or three-dimensional rendering with regard to structure detection and appreciation of spatial relationships (Figures 2 and 3). By inspecting the virtual trajectory superimposed on the rendered structures and MR images, the operator was able to assess whether the needle path collided with vital structures (e.g., the urethra) and adjust the trajectory accordingly (Figure 4). A steady frame rate of 60 FPS was recorded while rendering all the information in the augmented view. Slice traversal and contrast adjustment of the MR images on the HoloLens occurred at interactive rates, with a delay of less than one second. The pixel data of each slice were sent from the host PC to the HoloLens as a pre-processed texture. An initial setup time of 7 s was recorded, which covered reading the multislice MR image data from secondary storage and loading it into the host PC's memory. Future work will focus on using computer-vision techniques to track the needle position [3] and to project the augmented information onto the patient [4] using the HoloLens. We also plan to disseminate an updated version of the software to prostate cancer sites and to pursue quantitative user studies to assess the value of this new technology in clinical practice.

Acknowledgements

This work was supported by NPRP award [NPRP 9-300-2-132] from the Qatar National Research Fund (a member of The Qatar Foundation). The statements made herein are solely the responsibility of the authors.

References

[1] Puech P, Rouvière O, Renard-Penna R, et al. Prostate Cancer Diagnosis: Multiparametric MR-targeted Biopsy with Cognitive and Transrectal US-MR Fusion Guidance versus Systematic Biopsy-Prospective Multicenter Study. Radiology. 2013;268:461-9.

[2] Jang J, Addae G, Manning W, et al. Three-dimensional holographic visualization of high-resolution myocardial scar on HoloLens. Proc Intl Soc Mag Reson Med. 2017;25:0540.

[3] Lin M, Bae J, Srinivasan S, et al. MRI-guided Needle Biopsy using Augmented Reality. Proc Intl Soc Mag Reson Med. 2017;25.

[4] Leuze C, Srinivasan S, Lin M, et al. Holographic visualization of brain MRI with real-time alignment to a human subject. Proc Intl Soc Mag Reson Med. 2017;25.

Figures