4156

Feasibility of MRI image based synthetic CT generation in radiotherapy using deep convolutional neural network

1Institute of Biomedical and Health Engineering, Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China, 2Department of Radiology, Shenzhen Second People's Hospital (the First Affiliated Hospital of Shenzhen University), Shenzhen, China

Synopsis

Generating electron density information from MRI images is crucial for MRI-based dose calculation in an MRI-only radiotherapy workflow. To address this problem, we propose a deep convolutional neural network combined with an auto-context model to predict synthetic CT from routine-sequence MRI images. The high accuracy of the generated synthetic CT shows that the proposed method is effective and robust.

Introduction

Magnetic resonance imaging (MRI) has gained growing interest in radiotherapy because it delineates tumors and normal tissue more precisely than CT and adds no radiation dose. A radiotherapy workflow that uses MRI as the sole modality is expected to be safer and simpler, because it eliminates the systematic error introduced by registering MR and CT images. The major obstacle to MRI-only radiotherapy planning is that MRI lacks the electron density information needed for dose calculation, the same issue faced by MRI-based attenuation correction in PET-MR systems. In this work, we propose a deep convolutional neural network (CNN) method that generates synthetic CT from the corresponding MRI data to address this problem.

Methods

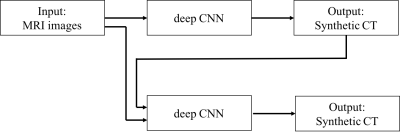

The method uses a CNN with a feature-capturing contracting path and a symmetric expanding path [1][2] to learn a voxel-by-voxel mapping between MRI and CT images. MRI data, with the original CT images as labels, are fed to the network. The contracting path extracts features, and the expanding path then expands the captured features symmetrically to generate the synthetic CT image. The network is further refined with an auto-context model [3], in which the generated synthetic CT is concatenated with the preceding input data and the network is trained again. This iterative process supplies more context information, which lets the network output a more refined mapping.

Results
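The auto-context refinement described under Methods can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `auto_context_refine`, the per-stage callables, and the two stand-in functions `stage_one` and `stage_two` are hypothetical, and in practice each stage would be a trained CNN rather than a fixed formula. The sketch shows only the data flow, in which each later stage receives the MRI slice concatenated with the previous synthetic CT as an extra channel.

```python
import numpy as np


def auto_context_refine(mri_slice, stage_predictors):
    """Apply a cascade of predictors in the auto-context style.

    mri_slice: 2D array (H, W) holding one MRI slice.
    stage_predictors: list of callables (hypothetical stand-ins for trained
        networks). The first takes an input of shape (1, H, W); every later
        one takes (2, H, W): the MRI channel plus the previous synthetic CT.
    Returns the synthetic CT from the last stage, shape (H, W).
    """
    mri_slice = np.asarray(mri_slice, dtype=np.float64)
    if mri_slice.ndim != 2:
        raise ValueError("expected a single 2D MRI slice")
    if not stage_predictors:
        raise ValueError("at least one stage is required")

    mri_channel = mri_slice[np.newaxis, ...]           # (1, H, W)
    synthetic_ct = stage_predictors[0](mri_channel)    # first estimate

    for predict in stage_predictors[1:]:
        # Concatenate the previous output with the original input so the
        # next stage sees context from the earlier prediction.
        stacked = np.concatenate(
            [mri_channel, synthetic_ct[np.newaxis, ...]], axis=0
        )                                               # (2, H, W)
        synthetic_ct = predict(stacked)

    return synthetic_ct


# Hypothetical stand-ins for trained networks, used only to show the shapes.
def stage_one(x):
    # Assumed toy mapping from normalized MRI intensity to Hounsfield units.
    return 1000.0 * x[0] - 1000.0


def stage_two(x):
    # Blends a fresh estimate from the MRI channel with the previous output.
    return 0.5 * (1000.0 * x[0] - 1000.0) + 0.5 * x[1]
```

Calling `auto_context_refine(mri_slice, [stage_one, stage_two])` runs one refinement pass. Adding more callables to the list adds more iterations of the same concatenate-and-predict step.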

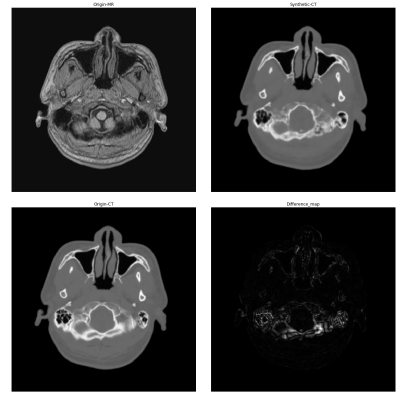

Our dataset contains 8 patients with 1144 pairs of T1-weighted MRI images and corresponding CT images. The voxel sizes of the MRI and CT data are 1 × 1 × 1 mm³ and 0.5 × 0.5 × 1 mm³, respectively. After rigid registration, the MRI and CT images have the same matrix size of 512 × 512 and the same voxel size of 0.5 × 0.5 × 1 mm³. The experimental results for these registered images are shown in Figure 3. The mean absolute error (MAE) of the synthetic CT relative to the original CT images is less than 35 Hounsfield units, much lower than the values reported in other studies.

Conclusion
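The MAE reported in the Results is the mean of the absolute voxel-wise differences, in Hounsfield units, between the synthetic CT and the registered original CT. A minimal sketch of that computation is given below. The function name `mean_absolute_error_hu` and the optional `mask` argument are assumptions made for illustration. The abstract does not state whether the error was averaged over the whole image or only over a body region, so the mask is shown purely as an option.

```python
import numpy as np


def mean_absolute_error_hu(synthetic_ct, reference_ct, mask=None):
    """Mean absolute error in Hounsfield units between two CT arrays.

    Both arrays must already be on the same grid (same shape), as they are
    after registration. If mask is given (an assumption, not from the
    abstract), only voxels where the mask is true are included.
    """
    synthetic_ct = np.asarray(synthetic_ct, dtype=np.float64)
    reference_ct = np.asarray(reference_ct, dtype=np.float64)
    if synthetic_ct.shape != reference_ct.shape:
        raise ValueError("arrays must share the same grid after registration")

    abs_diff = np.abs(synthetic_ct - reference_ct)
    if mask is not None:
        abs_diff = abs_diff[np.asarray(mask, dtype=bool)]
    if abs_diff.size == 0:
        raise ValueError("no voxels selected for the error computation")

    return float(abs_diff.mean())


# Toy 2 x 2 example with made-up values, for illustration only.
reference = np.array([[0.0, 100.0], [-1000.0, 40.0]])
synthetic = np.array([[10.0, 70.0], [-1000.0, 60.0]])
body_mask = reference > -500.0   # hypothetical threshold that excludes air

mae_all = mean_absolute_error_hu(synthetic, reference)              # 15.0
mae_body = mean_absolute_error_hu(synthetic, reference, body_mask)  # 20.0
```

In the toy example the absolute differences are 10, 30, 0 and 20 HU, so the unmasked MAE is 15 HU. Excluding the air voxel leaves 10, 30 and 20 HU, which gives 20 HU and shows how the choice of region changes the reported figure.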

A deep CNN combined with an auto-context model is proposed to generate synthetic CT from routine-sequence MRI images, and it demonstrates much better accuracy and robust performance. Because it uses training data more efficiently, the proposed method obtains satisfactory results with fewer images.

Acknowledgements

This work is supported in part by grants from the Shenzhen Key Technical Research Project (JSGG20160229203812944), the National Key Research and Development Program of China (2016YFC0105102), the National Science Foundation of Guangdong (2014A030312006), the Beijing Center for Mathematics and Information Interdisciplinary Sciences, and the Department of Radiology, Shenzhen Second People's Hospital (the First Affiliated Hospital of Shenzhen University).

References

[1] Ronneberger O., Fischer P., Brox T. (2015) U-Net: Convolutional Networks for Biomedical Image Segmentation. In: Navab N., Hornegger J., Wells W., Frangi A. (eds) Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. Lecture Notes in Computer Science, vol 9351. Springer, Cham

[2] Han X. MR-based synthetic CT generation using a deep convolutional neural network method. Med Phys. 2017 Apr;44(4):1408-1419.

[3] Zhuowen Tu and Xiang Bai. Auto-context and its application to high-level vision tasks and 3d brain image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 32(10):1744–1757, 2010.

Figures