4116

MR/PET motion correction using deep learning

Yasheng Chen1, Cihat Eldeniz1, Richard Laforest1, and Hongyu An1

1Washington Univ. School of Medicine, St. Louis, MO, United States

Synopsis

In abdominal imaging, the simultaneous acquisition of MR and PET provides a unique opportunity to use MR, which has high spatial and temporal resolution, to resolve respiratory motion artefacts in PET. However, this motion correction scheme requires MR motion scans throughout the whole PET session. To improve imaging efficiency, we use simultaneously acquired MR/PET signals to train a PET rebinning motion correction classifier, which can then be deployed to correct motion in PET scans acquired without concurrent MR motion detection. We have demonstrated the feasibility of this online motion correction scheme in a phantom study.

Introduction

In abdominal imaging, even though PET has high specificity in determining lesion viability, small lesions often lose SUV contrast or disappear completely because of respiratory motion. Simultaneous MR/PET acquisition allows us to use the high-SNR respiratory motion signal from MR to correct the motion in the concurrently acquired PET. However, for a prolonged PET scan, it is inefficient to dedicate the whole MR scan time to motion detection when the same time could be used to acquire diagnostic scans. In this study, we propose to extend the motion correction capability of MR/PET to PET scans that are not acquired concurrently with the MR motion scans.

Method

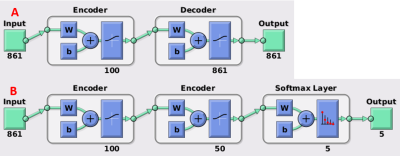
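The rebinning pipeline described in this section and depicted in Figure 1 can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names, the amplitude-quantile binning, the top-k voxel selection, the layer sizes, and the synthetic data are all assumptions made for illustration, and the network weights are random rather than pre-trained, so only the data flow (MR-derived labels, correlation-based voxel selection, two encoder layers, softmax output) is shown.

```python
import numpy as np

def amplitude_bins(resp, n_bins):
    """Label each frame with a respiratory bin via amplitude quantiles (assumed scheme)."""
    edges = np.quantile(resp, np.linspace(0, 1, n_bins + 1)[1:-1])
    return np.digitize(resp, edges)  # integers in [0, n_bins - 1]

def select_voxels(sino, resp, k):
    """Indices of the k sinogram voxels whose time course correlates most with resp."""
    s = sino - sino.mean(axis=0)
    r = resp - resp.mean()
    denom = np.linalg.norm(s, axis=0) * np.linalg.norm(r) + 1e-12
    corr = (s * r[:, None]).sum(axis=0) / denom  # Pearson r per voxel
    return np.argsort(-np.abs(corr))[:k]

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def init_params(n_in, n_h1, n_h2, n_out, rng):
    """Random (untrained) weights; the abstract pre-trains the encoder layers instead."""
    sizes = [(n_in, n_h1), (n_h1, n_h2), (n_h2, n_out)]
    return [(rng.normal(0, 0.1, s), np.zeros(s[1])) for s in sizes]

def forward(x, params):
    """Two stacked encoder layers followed by a softmax rebinning output."""
    (w1, b1), (w2, b2), (w3, b3) = params
    h1 = sigmoid(x @ w1 + b1)
    h2 = sigmoid(h1 @ w2 + b2)
    return softmax(h2 @ w3 + b3)

# Synthetic stand-in data: 400 frames of 150 ms, 50 compressed sinogram voxels.
rng = np.random.default_rng(0)
T, V, n_bins = 400, 50, 4
resp = np.sin(2 * np.pi * np.arange(T) * 0.15 / 4.0)  # 4 s breathing period
sino = rng.poisson(5.0, size=(T, V)).astype(float)
sino[:, :10] += 3.0 * (resp[:, None] + 1.0)  # only the first 10 voxels carry motion

labels = amplitude_bins(resp, n_bins)      # training targets derived from MR
picked = select_voxels(sino, resp, k=10)   # motion-relevant voxels
x = sino[:, picked]
x = (x - x.mean(axis=0)) / x.std(axis=0)   # standardise features
probs = forward(x, init_params(10, 8, 4, n_bins, rng))
predicted_bin = probs.argmax(axis=1)       # one respiratory bin per frame
```

In a trained version, `labels` would supervise the softmax layer, and `predicted_bin` would be used to rebin list-mode frames for which no MR motion signal is available.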

MR and PET motion correction was performed by dividing the respiratory signal into different phases for rebinning. The MR respiratory motion curve was calculated with consistently acquired projections for tuned and robust motion estimation (CAPTURE), which covers k-space with randomly distributed radial spokes. PET list-mode data were binned into sinograms with a frame duration identical to the temporal resolution of CAPTURE (e.g., ~150 ms). Each 3D sinogram was first compressed along z with single-slice rebinning and then further compressed by summing the projections more closely aligned with the direction of diaphragm motion. The MR-derived respiratory signal was used to locate, through correlation analysis, the sinogram voxels most relevant to respiratory motion, so the PET rebinning classifier is trained and deployed only on these motion-relevant sinogram data. The selected PET sinogram data, with respiratory rebinning labels from MR, were used to train a deep neural network (DNN) based PET motion rebinning classifier, which consists of stacked auto-encoder layers and a softmax motion rebinning output. The temporal signals from all selected sinogram voxels were included as the features for the DNN. An auto-encoder is a neural network that learns a compact representation of its input (Figure 1A). We stacked two pre-trained encoder layers and a softmax decision layer as the output (Figure 1B). Finally, the trained PET rebinning classifier is deployed to rebin PET list-mode data acquired without the MR motion correction scans.

Result

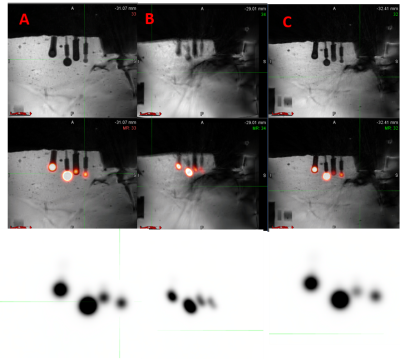

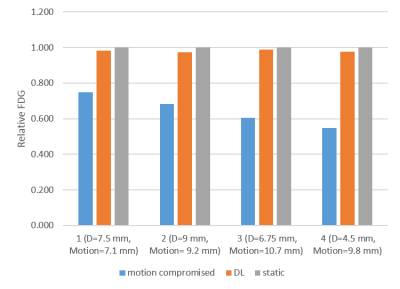
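The evaluation in this section holds out the last third of the scan for prediction and reports activity relative to the static acquisition. A minimal sketch of that bookkeeping follows, assuming 150 ms frames over a 6 min scan. The function names are hypothetical, and the sphere values are placeholders chosen only to exercise the formula, not measurements from the study.

```python
import numpy as np

def temporal_split(n_frames, train_fraction=2 / 3):
    """Split frame indices in time order: first part for training, remainder for testing."""
    cut = int(round(n_frames * train_fraction))
    return np.arange(cut), np.arange(cut, n_frames)

def recovery_percent(corrected, static):
    """Activity in the motion-corrected image as a percentage of the static reference."""
    return 100.0 * np.asarray(corrected, dtype=float) / np.asarray(static, dtype=float)

# 6 min scan divided into 150 ms frames (integer arithmetic avoids rounding issues).
n_frames = 6 * 60 * 1000 // 150
train_idx, test_idx = temporal_split(n_frames)

# Placeholder sphere activities (arbitrary units), NOT data from the abstract.
static_activity = [10.0, 8.0]
corrected_activity = [9.8, 7.9]
recovery = recovery_percent(corrected_activity, static_activity)
```

Splitting in time order, rather than at random, mirrors the intended deployment: the classifier is trained while MR motion data are available and then applied to later PET data.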

We used the first 2/3 of the list-mode data from a 6 min PET scan to train this three-layer DNN to predict the motion binning in the remaining 1/3 of the data. The reconstructed images without motion correction, with the proposed deep learning motion correction, and from the static acquisition are shown in Figure 2. Our deep learning based motion correction recovered the FDG activity to 97.6-98.9% of the static PET value in the four embedded spheres simulating lesions (Figure 3).

Conclusion

In this preliminary study, by taking advantage of simultaneously acquired MR and PET, we were able to use a deep neural network to learn a PET motion rebinning classifier that corrects motion in PET scans not acquired concurrently with MR motion scans. Because of the high temporal resolution of MR, we were able to rebin PET list-mode data acquired in very short frames (~150 ms). Furthermore, the proposed training is performed within the same imaging session, so the method has the potential to be implemented as an online approach that improves the quality and imaging efficiency of joint MR/PET imaging.

Acknowledgements

No acknowledgement found.

References

[1] LeCun, Y., Y. Bengio, and G. Hinton, Deep learning. Nature, 2015. 521(7553): p. 436-44.

Figures

Figure 1. The structure of the deep neural network used in the study.

Figure 2. The MR images and SUV maps of the phantom study from static acquisition (A), no motion correction (B) and deep learning based motion correction (C).

Figure 3. Relative FDG activity from static acquisition, no motion correction, deep learning based motion correction and CAPTURE.