4114

Automatic Motion Artifact Detection as Scan-aided Tool in an Autonomous MRI Environment

HeartVista, Inc., Los Altos, CA, United States

Synopsis

Motion artifacts in MRI can be confused with pathology and can render scans non-diagnostic. Ideally, scan operators would identify these artifacts immediately during the examination and reacquire the scan, but this is challenging in a time-constrained clinical workflow. Motion artifacts are especially difficult to identify accurately because they vary widely in appearance. Here, we propose an autonomous scan control framework, based on deep learning, that detects motion artifacts immediately after reconstruction. The deep learning model was integrated into a real-time imaging system, enabling an interactive scanning pipeline.

Introduction

Motion artifacts in MRI can be confused with pathology [1] and can render scans non-diagnostic. Ideally, scan operators would identify these artifacts immediately during the examination and reacquire the scan with different imaging parameters to prevent them. However, it can be challenging for scan operators to recognize all artifacts in a time-constrained clinical workflow. Motion artifacts are especially difficult to identify accurately because their appearance varies widely with the sampling trajectory, the imaging parameters, and the underlying pathology.

Here, we propose an autonomous scan control framework, based on deep learning, to detect motion artifacts immediately after the scan is acquired. Once motion artifacts are detected, the system prompts the operator with prospective scan guidance, based on the scan parameters, to reduce these artifacts. The framework is integrated into a real-time imaging system. We demonstrate a sensitivity of 86% and a specificity of 84.5% for detecting motion artifacts on 596 test images.

Methods

To detect motion artifacts reliably, we trained a deep learning model based on a modified Inception network [2] in TensorFlow [3] using data from 500 clinical patient studies, acquired with the HeartVista Cardiac Package (HeartVista, Inc.; Los Altos, CA). The 5962 images were randomly divided into a training set of 5366 images and a test set of 596 images. An experienced operator manually labeled each image as belonging to one of two categories: with or without motion artifacts.
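The random division described above can be sketched as follows. This is a minimal illustration only: the function name `split_dataset`, the fixed seed, and the integer image identifiers are assumptions made for the example, and the abstract does not state how the split was actually implemented or whether it was stratified in any way.

```python
import random


def split_dataset(items, n_test, seed=0):
    """Randomly partition items into (train, test) with exactly n_test test items."""
    if not 0 <= n_test <= len(items):
        raise ValueError("n_test must be between 0 and len(items)")
    shuffled = list(items)                      # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)       # seeded for a reproducible split
    return shuffled[n_test:], shuffled[:n_test]


# Placeholder identifiers; only the counts come from the text above.
image_ids = list(range(5962))
train_ids, test_ids = split_dataset(image_ids, n_test=596)
print(len(train_ids), len(test_ids))
```

The two returned lists are disjoint and together cover every image exactly once, which matches the 5366/596 partition reported in the text.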

To integrate the trained model into the scan, TensorFlow capabilities were added to HeartVista’s pipeline-based reconstruction engine to allow seamless bidirectional data transfer between MRI reconstruction nodes and TensorFlow graphs. The resulting predictive models were integrated into the HeartVista scan-control platform to provide prospective scan guidance during automated scanning.

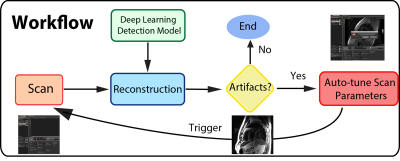

Figure 1 illustrates the workflow of automatic artifact detection: once images are reconstructed, the trained deep learning network is triggered to determine whether the scans contain motion artifacts. If motion artifacts are detected, scan parameters are auto-tuned, and the user is prompted to re-acquire.

Image acquisition was performed on a 1.5 T GE Signa TwinSpeed scanner with an 8-channel cardiac coil using the HeartVista autonomous MRI environment (HeartVista, Inc.; Los Altos, CA). Data were acquired with a multi-slice Double Inversion Fast Spin Echo (Black Blood) sequence with field of view ranging from 32 × 32 to 48 × 48 cm², 256 × 96 to 168 matrix size, TE/TR/TI = 20-120 us/31.6 ms/758 us, and echo train length = 24. SPIRiT parallel reconstruction [4] was performed.

Results

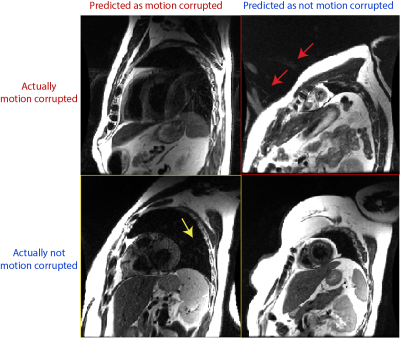

For the Black Blood sequence, motion artifacts appear as replicas along the phase-encoding direction. The proposed model achieved an overall accuracy of 85%, a sensitivity of 86%, and a specificity of 84.5%. Figure 2 shows randomly selected examples from the confusion matrix. In general, the proposed deep learning pipeline misclassified images when other artifact sources were present. For example, as indicated by the yellow and red arrows, the false positive (yellow box) and false negative (red box) occur in images that contain artifacts other than motion, such as aliasing. We expect motion artifact detection performance can be improved by training a multi-class network instead of a single-class one (motion artifacts only).
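For reference, the three reported metrics follow from the confusion-matrix counts in the standard way. The sketch below shows those definitions; the function name `binary_metrics` is an assumption, and the counts passed to it are toy values chosen only to exercise the formulas. They are not the study's confusion matrix, which the abstract does not report.

```python
def binary_metrics(tp, fp, tn, fn):
    """Return sensitivity, specificity, and accuracy from confusion-matrix counts."""
    positives = tp + fn          # images that truly contain motion artifacts
    negatives = tn + fp          # images that truly do not
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one positive and one negative example")
    return {
        "sensitivity": tp / positives,                       # true positive rate
        "specificity": tn / negatives,                       # true negative rate
        "accuracy": (tp + tn) / (positives + negatives),
    }


# Toy counts for illustration only (not taken from the study).
metrics = binary_metrics(tp=8, fp=3, tn=17, fn=2)
print(metrics)
```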

We have integrated the prediction model into the autonomous MRI environment, shown in Figure 3, to trigger a rescan operation. If the prediction indicates that the current slice has artifacts, the system prompts the operator to reacquire the scan.
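The control flow of this rescan trigger can be sketched as a simple loop. Everything named here is hypothetical: `scan_with_artifact_check`, its callable parameters, and the `max_attempts` cap are assumptions for illustration and do not reflect the HeartVista API, and the detector is a stub standing in for the trained network.

```python
from typing import Callable, Tuple


def scan_with_artifact_check(
    acquire: Callable[[int], str],
    has_motion_artifact: Callable[[str], bool],
    confirm_rescan: Callable[[int], bool],
    max_attempts: int = 3,  # assumed cap; the abstract does not specify one
) -> Tuple[str, int, bool]:
    """Acquire a slice, check it, and re-acquire while the operator agrees.

    Returns (last image, attempts used, whether the last image was clean).
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        image = acquire(attempt)
        if not has_motion_artifact(image):
            return image, attempt, True
        # Artifact detected: stop if out of attempts or the operator declines.
        if attempt == max_attempts or not confirm_rescan(attempt):
            break
    return image, attempt, False


# Stubs standing in for the scanner, the classifier, and the operator prompt.
def fake_acquire(attempt: int) -> str:
    return f"slice-attempt-{attempt}"


def fake_detector(image: str) -> bool:
    return image.endswith("-1")  # pretend only the first acquisition has motion


result = scan_with_artifact_check(fake_acquire, fake_detector, lambda attempt: True)
print(result)
```

Keeping the operator prompt as a separate callable mirrors the text, in which the system prompts rather than forces a reacquisition.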

Discussion

The proposed method illustrates the efficacy of automatic motion artifact detection. The proposed automated pipeline can be easily extended to other kinds of artifacts. We are currently training and evaluating deep learning models that can recognize different artifacts and their sources, e.g., flow artifacts, off-resonance artifacts, and aliasing artifacts. Once an artifact is detected, the system can provide corresponding scan suggestions or even auto-tune the scan protocol to optimize image quality based on the detected artifact source. For example, if the system detects aliasing artifacts, it should increase the FOV and automatically repeat the scan. Our results in motion artifact detection show great promise toward this fully autonomous MRI scanning modality.
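The aliasing example above amounts to a rule that maps a detected artifact class to a protocol change. A minimal sketch of that idea follows; the `Protocol` class, the `suggest_protocol` function, the class label `"aliasing"`, and the 1.25 scaling factor are all assumptions made for illustration, since the abstract only states that the FOV should be increased.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Protocol:
    """Hypothetical container for the one scan parameter used in this example."""
    fov_cm: float


def suggest_protocol(protocol: Protocol, artifact: str, fov_scale: float = 1.25) -> Protocol:
    """Return an adjusted protocol for the detected artifact class.

    Only the aliasing rule from the text is encoded; other classes are
    returned unchanged because the abstract does not specify their remedies.
    """
    if fov_scale <= 1.0:
        raise ValueError("fov_scale must be greater than 1 to enlarge the FOV")
    if artifact == "aliasing":
        return replace(protocol, fov_cm=protocol.fov_cm * fov_scale)
    return protocol


current = Protocol(fov_cm=32.0)
adjusted = suggest_protocol(current, "aliasing")
print(adjusted)
```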

Conclusion

A scan-aided artifact detection mechanism using a deep learning framework has been presented and seamlessly integrated into the autonomous MRI system. It is able to provide effective scan aids and suggestions for scan operators.

Acknowledgements

References

[1] Krupa K et al. Artifacts in Magnetic Resonance Imaging. Pol J Radiol. 2015; 80: 93–106.

[2] Szegedy C et al. Rethinking the Inception Architecture for Computer Vision. 2015. https://arxiv.org/abs/1512.00567

[3] Abadi M et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems. 2016. https://arxiv.org/abs/1603.04467

[4] Lustig M et al. SPIRiT: Iterative Self-consistent Parallel Imaging Reconstruction From Arbitrary k-Space. MRM. 2010; 64(2): 457–471.

Figures