4092

Contact-Free Respiration Motion Monitoring Using a Markerless Structured Optical System in MRI

¹Tsinghua University, Beijing, China; ²University of Washington, Seattle, WA, United States

Synopsis

Respiratory motion artifacts remain a major problem in MRI, particularly at higher imaging resolution and field strength. In this study, we investigated the feasibility of using an MR-compatible in-bore camera system to perform contactless monitoring of respiration during MRI of human subjects. The video-derived signal matched the respiratory belt data well in the timing of the troughs, and the intraclass correlation coefficient (ICC) between trigger intervals from the two signals was 0.97. This indicates that the optical system can monitor respiration and provide end-expiratory triggers while, as shown in our previous work, simultaneously detecting random motion.

Purpose

Respiratory belts and navigator-gated MR techniques have been widely used to monitor respiratory motion. Although navigator techniques are widely available, they increase scan time, are not compatible with all sequence types, and are only usable if the thorax/abdomen is within the field of view [1][2]. A respiratory belt avoids these problems, but has the disadvantage that a device must be attached to the subject and individually adjusted to function reliably, which interrupts patient workflow [3]. The aim of this work was to investigate the feasibility of using an MR-compatible in-bore optical system to perform contactless monitoring of respiration during MRI of human subjects.

Methods

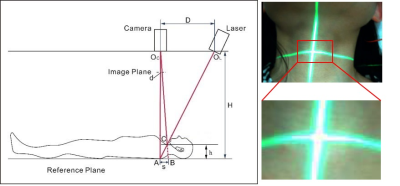

In Vivo Experiments: Video data were collected from six healthy volunteers (5 female, 1 male) placed inside the scanner bore (Philips Ingenia 3T, Best, the Netherlands). A cross-shaped laser pattern was projected onto the neck area of the subject and captured by the camera (MRC, Germany; frame rate = 30 fps), as shown in Figure 1. This contact-free optical system was calibrated based on structured light theory before scanning. Respiratory belt data were acquired simultaneously and logged for a 60 s period during imaging with a 2D sequence (gradient echo, TR: 100 ms, TE: 2 ms, flip angle: 15 degrees, FOV = 375×375 mm², slice thickness: 5 mm). Approximate synchronization was achieved manually by starting both logs at the same time.

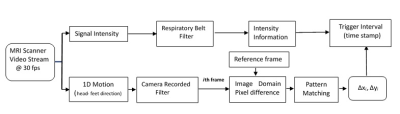

Video Processing and Validation: The processing applied to the respiratory belt and video-derived data before comparison is shown in Figure 2. The x- and y-coordinates of the cross center were calculated and recorded in real time for each frame using pattern matching (LabVIEW 2013). The respiratory filter module (Figure 2) is an infinite impulse response (IIR) Butterworth band-pass filter (designed in MATLAB R2014a) that removes bulk motion and other high-frequency noise such as pulsation. The filter has cutoff frequencies of 0.1 Hz and 0.5 Hz and is applied to the data in a forward–backward manner, which makes it a zero-phase filter, i.e., the filter output has no time shift.
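The filtering step can be sketched in Python with SciPy. This is a minimal sketch, not the authors' MATLAB/LabVIEW code: the 30 fps sampling rate and the 0.1 Hz and 0.5 Hz cutoffs come from the text, while the filter order of 2 and the synthetic test trace are assumptions. `sosfiltfilt` runs the filter forward and then backward, which gives the zero-phase behavior described above.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS_HZ = 30.0          # camera frame rate stated in the Methods (30 fps)
BAND_HZ = (0.1, 0.5)  # cutoff frequencies stated in the Methods
ORDER = 2             # ASSUMPTION: the abstract does not state the filter order


def respiratory_filter(x, fs=FS_HZ, band=BAND_HZ, order=ORDER):
    """Zero-phase Butterworth band-pass filter (illustrative sketch).

    The IIR filter is run forward and then backward over the data
    (sosfiltfilt), so the phase shifts of the two passes cancel and the
    output is not delayed relative to the input.
    """
    sos = butter(order, band, btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, np.asarray(x, dtype=float))


# Synthetic 60 s trace: 0.25 Hz "breathing" + slow drift + 1.2 Hz "pulsation".
n = np.arange(int(60 * FS_HZ))
t = n / FS_HZ
breathing = np.sin(2 * np.pi * 0.25 * t)
raw = breathing + 0.05 * t + 0.3 * np.sin(2 * np.pi * 1.2 * t)
filtered = respiratory_filter(raw)
```

Using second-order sections (`output="sos"`) instead of transfer-function coefficients is a design choice for numerical robustness when the cutoffs are very low relative to the sampling rate.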

Comparison and Respiration Trigger Detection: The respiratory belt and video-derived signals were each scaled to a total range of 1 to allow easy comparison; this is permissible because both signals have arbitrary units and carry only relative amplitude information. Triggers were placed at the signal minima, which correspond to end-expiration. These trigger locations were then used to calculate the trigger-to-trigger interval times.
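The normalization and trigger detection steps can be sketched as follows. This is an illustrative sketch, not the authors' implementation: the trough search reuses SciPy's `find_peaks` on the negated signal, and the minimum trigger spacing (`min_interval_s`) and prominence threshold (`min_prominence`) are hypothetical parameters added to reject small spurious minima.

```python
import numpy as np
from scipy.signal import find_peaks


def normalize_unit_range(x):
    """Scale a signal to span a total range of 1 (minimum -> 0, maximum -> 1)."""
    x = np.asarray(x, dtype=float)
    span = np.ptp(x)
    if span == 0:
        raise ValueError("constant signal cannot be normalized")
    return (x - x.min()) / span


def end_expiratory_triggers(x, fs, min_interval_s=1.5, min_prominence=0.3):
    """Return sample indices of end-expiratory triggers (signal minima).

    min_interval_s and min_prominence are HYPOTHETICAL tuning parameters,
    not values from the abstract.
    """
    x = normalize_unit_range(x)
    # Minima of x are maxima of -x, so search for peaks in the negated signal.
    troughs, _ = find_peaks(
        -x, distance=max(1, int(min_interval_s * fs)), prominence=min_prominence
    )
    return troughs


def trigger_intervals_s(triggers, fs):
    """Trigger-to-trigger interval times in seconds."""
    return np.diff(triggers) / fs


# Synthetic 60 s breathing trace at 30 fps with a 4 s period (minima every 120 samples).
fs = 30.0
n = np.arange(int(60 * fs))
signal = -np.cos(2 * np.pi * 0.25 * n / fs)
trig = end_expiratory_triggers(signal, fs)
intervals = trigger_intervals_s(trig, fs)
```

Because `find_peaks` never reports the first or last sample, a minimum that falls exactly at the start or end of the recording is not used as a trigger.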

Results and Discussion

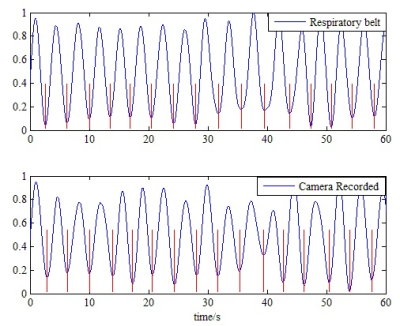

Figure 3 shows an example comparison of the reference respiratory belt data and the video-derived signal. The two signals match well in the timing of the troughs. The motion-derived signal appears robust enough for reliable triggering, although the signal shapes differ in several respects, such as the more consistent peak height of the respiratory belt signal.

Trigger locations, computed as described in the Methods section, match well between the reference respiratory belt data and the video-derived signals. Synchronization between the two signals was only approximate in our setup, but there is a clear one-to-one mapping between the triggers derived from the two signals: every trigger detected in the respiratory belt data stream was also detected in the video-derived signal, and no extra triggers were detected in the video-derived signal that were absent from the respiratory belt signal. This one-to-one mapping makes it possible to compare the time intervals between end-expiratory triggers. The intraclass correlation coefficient (ICC) between trigger intervals from the respiratory belt data and the video-derived signals is 0.97 (95% confidence interval: 0.83 to 0.99), indicating that the motion-derived data correlate strongly with the reference respiratory belt signal.
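The trigger pairing and the interval agreement statistic can be sketched as below. The abstract does not state which ICC form was used; this sketch assumes ICC(2,1) (two-way random effects, absolute agreement, single measures), and the greedy nearest-neighbor matching with a 0.5 s tolerance (`tol_s`) is a hypothetical way to check the one-to-one mapping. The trigger indices in the usage lines are made up for illustration and are not study data; the 95% confidence interval is not computed here.

```python
import numpy as np


def match_triggers(ref_idx, test_idx, fs, tol_s=0.5):
    """Greedily pair each reference trigger with the nearest unused test trigger.

    tol_s is a HYPOTHETICAL matching tolerance (not from the abstract).
    Returns the (ref, test) index pairs and whether the mapping is one-to-one,
    i.e. every trigger in both streams is paired exactly once.
    """
    ref_idx = np.asarray(ref_idx)
    test_idx = np.asarray(test_idx)
    pairs, used = [], set()
    for r in ref_idx:
        if test_idx.size == 0:
            break
        j = int(np.argmin(np.abs(test_idx - r)))
        if j not in used and abs(test_idx[j] - r) / fs <= tol_s:
            pairs.append((int(r), int(test_idx[j])))
            used.add(j)
    one_to_one = len(pairs) == len(ref_idx) == len(test_idx)
    return pairs, one_to_one


def icc_2_1(y):
    """ICC(2,1): two-way random effects, absolute agreement, single measures.

    y has shape (n_targets, k_raters); here each row is one trigger interval
    and the two columns are the belt- and video-derived values.
    """
    y = np.asarray(y, dtype=float)
    n, k = y.shape
    grand = y.mean()
    row_means = y.mean(axis=1)
    col_means = y.mean(axis=0)
    ms_rows = k * np.sum((row_means - grand) ** 2) / (n - 1)
    ms_cols = n * np.sum((col_means - grand) ** 2) / (k - 1)
    resid = y - row_means[:, None] - col_means[None, :] + grand
    ms_err = np.sum(resid ** 2) / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )


# Usage with made-up trigger indices (samples at 30 fps), for illustration only.
fs = 30.0
belt = [120, 240, 372, 480, 615]
video = [122, 241, 371, 483, 616]
pairs, one_to_one = match_triggers(belt, video, fs)
ref = np.array([p[0] for p in pairs])
cam = np.array([p[1] for p in pairs])
intervals = np.column_stack([np.diff(ref), np.diff(cam)]) / fs
icc = icc_2_1(intervals)
```

Intervals are computed only from paired triggers, so a missed or extra trigger in either stream would surface as `one_to_one == False` rather than silently shifting the interval comparison.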

Conclusion

Our data indicate that end-expiratory triggers consistent with those from the respiratory belt can be obtained robustly without physical contact with the subject, which could be used to reduce the effects of breathing. In addition, our optical system, which projects laser light onto the subject's neck, has previously been shown to detect and correct random motion (e.g., coughing and swallowing) and bulk shift motion [4-6].

In summary, an in-bore markerless structured light system is a promising integrated motion detection solution that can detect physiological and random motion simultaneously. The respiratory results shown here, together with our previous work on random motion [4-6], support this.

References

[1] Ehman RL, Felmlee JP. Adaptive technique for high-definition MR imaging of moving structures. Radiology 1989; 173:255–263.

[2] Pfeuffer J, Van de Moortele PF, Ugurbil K, Hu X, Glover GH. Correction of physiologically induced global off-resonance effects in dynamic echo-planar and spiral functional imaging. Magn Reson Med 2002; 47:344–353.

[3] Ehman RL, McNamara MT, Pallack M, Hricak H, Higgins CB. Magnetic resonance imaging with respiratory gating: techniques and advantages. AJR Am J Roentgenol 1984; 143:1175–1182.

[4] Liu J, Chen H, Zhou Z, Wang J, Yuan C. Motion Detection and Correction Using Non-marker-attached Optical System during MRI scanning. 23rd Annual Meeting of ISMRM, 2015; Toronto, Canada. p. 1300.

[5] Chen H, Liu J, Zhou Z, Yuan C, Boernert P, Wang J. Artifact Removal in Carotid Imaging based on Motion Measurement using Structured Light. 23rd Annual Meeting of ISMRM, 2015; Toronto, Canada.

[6] Liu J, Chen H, Wang J, Balu N, Liu H, Yuan C. Motion Detection and Correction for Carotid Artery Wall Imaging using Structured Light. 24th Annual Meeting of ISMRM, 2016; Singapore. p. 342.
