4079

Comprehensive head motion modelling and correction using simultaneously acquired MR and PET data

1 Monash Biomedical Imaging, Melbourne, Australia; 2 Monash Health, Melbourne, Australia; 3 Institute of Neuroscience and Medicine, Forschungszentrum Jülich GmbH, Jülich, Germany; 4 Department of Electrical and Computer Systems Engineering, Monash University, Melbourne, Australia; 5 Australian Research Council Centre of Excellence for Integrative Brain Function, Monash University, Melbourne, Australia; 6 Monash Institute of Cognitive and Clinical Neuroscience, Monash University, Melbourne, Australia

Synopsis

Head motion is one of the major issues in neuroimaging. With the introduction of MR-PET scanners, motion parameters can now be estimated from two independent modalities acquired simultaneously. In this work, we propose a new data-driven method that combines MR image registration with a PET data-driven approach to model head motion over the complete course of an MR-PET examination. Without changing the MR-PET acquisition protocol, the proposed method provides motion estimates with a temporal resolution of ~2 seconds. Results on a phantom dataset show that the proposed method can significantly reduce motion artefacts in brain PET images and improve image sharpness compared with the MR-based method.

Introduction

Patient head motion is a common cause of image degradation in both clinical and research neuroimaging studies. The new fully integrated MR-PET scanners make it possible to model head movements using the data acquired simultaneously by the two modalities. The most common approach is to use MR-derived motion parameters to correct the PET data. We have recently introduced an MR-based motion correction method using an optimised multi-contrast MR registration pipeline1. This method has been shown to substantially reduce motion artefacts without lengthening the total acquisition time. However, one limitation of this method is that motion is not sampled within individual MR sequences, such as during a T1-weighted scan. In this work, we present a new simultaneous MR-PET based motion correction method that extends the previous method1 by incorporating motion estimation from PET data, providing motion estimates with improved temporal resolution. The inter-sequence motion is estimated by the multi-contrast MR-based method1, while the intra-sequence motion is estimated using principal component analysis (PCA) of the dynamic PET data2. Phantom experiments show improved motion correction with the new method.

Methods

Data acquisition:

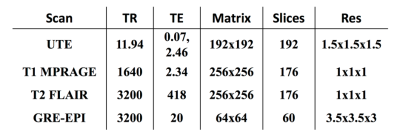

The IIDA brain phantom3 was scanned on a Siemens Biograph mMR, equipped with a 20-channel head and neck coil. PET list-mode data were continuously acquired for 45 minutes (dose ~70 MBq). Simultaneously, multiple MR scans were collected (see Table 1). Head movements were introduced during the dynamic fMRI scans as well as within structural scans.

Motion estimation:

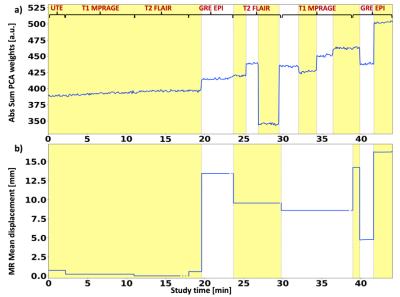

Inter-sequence: The multi-contrast MR registration-based method was previously described by Sforazzini et al.1 Briefly, all MR images were registered to a reference image (T2 FLAIR), yielding translational and rotational parameters as well as the mean displacement.

Intra-sequence: The PET PCA-based method was performed as follows. PET list-mode data were re-sampled into low spatial resolution, 2-second sinogram frames using software developed in-house. Global scaling was applied so that each frame contained a consistent number of events. The sinogram frames were then masked to reduce background noise and normalised by applying the Freeman-Tukey transformation. The matrix containing one vectorised sinogram per row was finally decomposed using PCA. The absolute sum of the first three principal components at every time point was used to identify motion occurrences.
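The intra-sequence steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the in-house software: the function names `freeman_tukey` and `pca_motion_signal` are hypothetical, the input is assumed to be an already-binned array with one vectorised sinogram per row, the "absolute sum" is read as the sum of the absolute weights of the first three components, and the demo data are synthetic Poisson counts rather than phantom data.

```python
import numpy as np


def freeman_tukey(counts):
    """Freeman-Tukey variance-stabilising transform for Poisson-like counts."""
    counts = np.asarray(counts, dtype=float)
    return np.sqrt(counts) + np.sqrt(counts + 1.0)


def pca_motion_signal(frames, mask=None, n_components=3):
    """Return one motion-sensitive value per time frame.

    frames: (n_frames, n_bins) array, one vectorised sinogram per row.
    mask:   optional boolean array of length n_bins selecting bins to keep.
    """
    frames = np.asarray(frames, dtype=float)
    if mask is not None:
        frames = frames[:, np.asarray(mask, dtype=bool)]
    # Global scaling: every frame gets the same total number of events.
    totals = frames.sum(axis=1, keepdims=True)
    frames = frames * (totals.mean() / totals)
    data = freeman_tukey(frames)
    data = data - data.mean(axis=0)  # centre each sinogram bin over time
    # PCA via SVD: column k of u * s holds the per-frame weights of component k.
    u, s, _ = np.linalg.svd(data, full_matrices=False)
    weights = u[:, :n_components] * s[:n_components]
    # Assumption: "absolute sum" is read as the sum of absolute weights.
    return np.abs(weights).sum(axis=1)


# Synthetic demo (not phantom data): 40 frames at one pose, then 20 at another.
rng = np.random.default_rng(0)
bins = np.arange(200)
pose_a = 50.0 + 30.0 * np.sin(2.0 * np.pi * bins / 50.0)
pose_b = np.roll(pose_a, 25)  # shifted activity pattern mimics a movement
expected = np.vstack([np.tile(pose_a, (40, 1)), np.tile(pose_b, (20, 1))])
signal = pca_motion_signal(rng.poisson(expected))
# Index of the frame just before the largest frame-to-frame change.
largest_jump = int(np.argmax(np.abs(np.diff(signal))))
```

In this toy example the largest frame-to-frame change in `signal` falls at the simulated pose change. The two segments are deliberately of unequal length: after centring, two equally long segments would produce first-component weights of equal magnitude, and a signal built from absolute values would then hide the step.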

Motion correction in PET images:

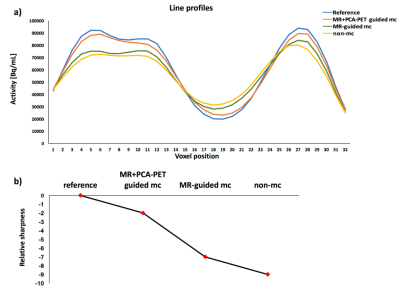

The Multiple Acquisition Frame (MAF) algorithm4 was used to bin the PET list-mode data into multiple frames whenever movements occurred. Both the MR-calculated mean displacement and the PCA weights were used to bin the PET data. Specifically, a new PET frame was initialised each time the MR displacement exceeded a pre-defined threshold (2 mm) and/or there was a discontinuity in the PET PCA temporal curve. Reconstructed PET frames were then re-aligned using the MR-derived motion parameters or by registration to the first frame. Finally, a 60-minute PET image was obtained by combining the individual motion-corrected PET frames. Reconstructed PET images were further evaluated using a sharpness index calculated as the mean absolute Laplacian of Gaussian5.
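The framing rule and the sharpness index described above can be illustrated with a short sketch. Everything not stated in the text is an assumption made for illustration: the names `frame_starts`, `gaussian_smooth` and `sharpness_index` are hypothetical, the MR displacement and the PCA curve are assumed to be sampled on a common time grid, the MR displacement is compared against its value at the start of the current frame, a "discontinuity" is taken to be a sample-to-sample change larger than a hypothetical `pca_jump`, and the Gaussian width `sigma` is arbitrary. The Laplacian is approximated by repeated central differences.

```python
import numpy as np


def frame_starts(mr_disp_mm, pca_curve, mr_thresh_mm=2.0, pca_jump=5.0):
    """Return the sample indices at which a new PET frame starts."""
    starts = [0]
    ref_disp = mr_disp_mm[0]  # displacement at the start of the current frame
    for i in range(1, len(pca_curve)):
        moved_mr = abs(mr_disp_mm[i] - ref_disp) > mr_thresh_mm
        moved_pet = abs(pca_curve[i] - pca_curve[i - 1]) > pca_jump
        if moved_mr or moved_pet:
            starts.append(i)
            ref_disp = mr_disp_mm[i]
    return starts


def gaussian_smooth(image, sigma):
    """Separable Gaussian smoothing of an N-D array (zero-padded borders)."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    kernel /= kernel.sum()
    out = np.asarray(image, dtype=float)
    for axis in range(out.ndim):
        out = np.apply_along_axis(np.convolve, axis, out, kernel, mode="same")
    return out


def sharpness_index(image, sigma=1.0):
    """Mean absolute Laplacian of the Gaussian-smoothed image."""
    smooth = gaussian_smooth(image, sigma)
    laplacian = np.zeros_like(smooth)
    for axis in range(smooth.ndim):
        # Second derivative along this axis via two central differences.
        laplacian += np.gradient(np.gradient(smooth, axis=axis), axis=axis)
    return float(np.mean(np.abs(laplacian)))


# Toy inputs: an MR displacement step at sample 3 and a PCA jump at sample 5.
starts = frame_starts([0, 0, 0, 3, 3, 3, 3, 3], [1, 1, 1, 1, 1, 9, 9, 9])

# A sharp square and a blurred copy of it; the blurred copy should score lower.
sharp = np.zeros((32, 32))
sharp[8:24, 8:24] = 1.0
blurred = gaussian_smooth(sharp, 3.0)
```

Each index in `starts` marks the first sample of a frame that would be reconstructed separately and then re-aligned. Comparing `sharpness_index(sharp)` with `sharpness_index(blurred)` shows how residual motion blur lowers the index.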

Results

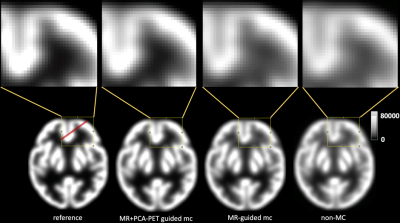

Figure 1 shows motion plots obtained from PCA of the PET data (a) and from registration of the MR images (b). As Figure 1(a) shows, the principal components of the raw PET data identify motion occurring within the T1 and T2 scans. The two techniques agree well for motion introduced during the GRE-EPI acquisition. Combining the MR-based and PCA-PET-based approaches provides excellent temporal sampling of the motion parameters, and the better motion characterisation is reflected in the quality of the PET images. Figure 2 shows the motion correction results. The reconstructed images demonstrate that the combined MR and PCA-PET motion correction (second column, Figure 2) provides the most accurate motion-corrected PET reconstruction, with only minor differences from the reference image (first column, Figure 2). MR-guided motion-corrected images (third column) and images without motion correction (fourth column) show less clear grey and white matter boundaries. Line profiles and relative sharpness in Figure 3 further corroborate these findings, with the combined MR and PCA-PET method outperforming all the others.

Discussion and Conclusion

We presented a combined MR and PCA-PET based motion correction method for PET image reconstruction. This method extends our previous MR multi-contrast image registration based motion correction method1 to simultaneous MR-PET experiments. The proposed method extracts motion parameters from both MR and PET data to provide accurate motion estimation. Overall, this technique offers comprehensive motion modelling with good temporal resolution and without changing the MR acquisition protocol, which may be valuable for both clinical and research MR-PET applications. We plan to integrate the PCA-PET based motion correction into our MR-based motion correction pipeline, which is already available online (http://mbi-tools.erc.monash.edu/).

Acknowledgements

No acknowledgement found.

References

1. F. Sforazzini, Z. Chen, J. Baran, J. Bradley, A. Carey, N. J. Shah, and G. Egan, “MR-based attenuation map re-alignment and motion correction in simultaneous brain MR-PET imaging,” in 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), 2017, pp. 231–234.

2. K. Thielemans, P. Schleyer, J. Dunn, P. K. Marsden, and R. M. Manjeshwar, “Using PCA to detect head motion from PET list mode data,” in 2013 IEEE Nuclear Science Symposium and Medical Imaging Conference (2013 NSS/MIC), 2013, pp. 1–5.

3. H. Iida, Y. Hori, K. Ishida, E. Imabayashi, H. Matsuda, M. Takahashi, H. Maruno, A. Yamamoto, K. Koshino, J. Enmi, S. Iguchi, T. Moriguchi, H. Kawashima, and T. Zeniya, “Three-dimensional brain phantom containing bone and grey matter structures with a realistic head contour,” Ann. Nucl. Med., vol. 27, no. 1, pp. 25–36, Jan. 2013.

4. Y. Picard and C. J. Thompson, “Motion correction of PET images using multiple acquisition frames,” IEEE Trans. Med. Imaging, vol. 16, no. 2, pp. 137–144, Apr. 1997.

5. P. J. Schleyer, J. T. Dunn, S. Reeves, S. Brownings, P. K. Marsden, and K. Thielemans, “Detecting and estimating head motion in brain PET acquisitions using raw time-of-flight PET data,” Phys. Med. Biol., vol. 60, no. 16, pp. 6441–6458, Aug. 2015.

Figures