3680

Ultra-low-dose Amyloid PET Reconstruction using Deep Learning with Multi-contrast MRI Inputs

¹Radiology, Stanford University, Stanford, CA, United States, ²Electrical Engineering, Stanford University, Stanford, CA, United States, ³Engineering Physics, Tsinghua University, Beijing, China, ⁴GE Healthcare, Menlo Park, CA, United States

Synopsis

Simultaneous PET/MRI is a powerful hybrid imaging modality that allows for perfectly correlated morphological and functional information. With deep learning methods, we propose to use multiple MR images and a noisy, ultra-low-dose amyloid PET image to synthesize a diagnostic-quality PET image resembling that acquired with typical injected dose. This technique can potentially increase the utility of hybrid amyloid PET/MR imaging in clinical diagnoses and longitudinal studies.

Introduction

The ability to provide complementary information about morphology and function in many neurological disorders has fueled interest in simultaneous positron emission tomography and magnetic resonance imaging (PET/MRI) over the last decade. For example, in dementia, simultaneous amyloid PET/MRI provides the opportunity of a “one-stop shop” imaging exam for early diagnosis [1]. Moreover, with perfect spatial and temporal registration of the two imaging datasets, the information derived from one modality can be used to improve the other [2]. While the strength of PET lies in the quantification of the radiotracer concentration, the scan subjects patients to radiation, limiting frequent usage of this technology.

With the advent of deep neural networks (DNNs) and increased computing power, it is now possible to use deep learning methods to produce high-quality PET images from scan protocols with markedly reduced injected radiotracer dose. Previously, we have demonstrated the ability to reduce radiotracer dose by at least 100-fold using these approaches for 18F-fludeoxyglucose studies in glioblastoma patients [3]. Such methods should be transferable directly to PET scans using other radiotracers, such as the amyloid tracer 18F-florbetaben used in this work, relevant to Alzheimer’s disease (AD). This technique can potentially increase the utility of hybrid amyloid PET/MR imaging in clinical diagnoses and longitudinal studies.

Methods

PET/MR data acquisition

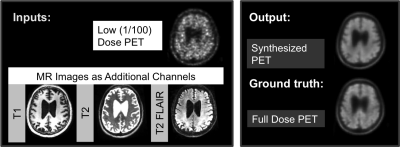

Twenty subjects (10 male, 10 female) were recruited for the study. MR and PET data were simultaneously acquired on an integrated PET/MR scanner with time-of-flight capabilities (SIGNA PET/MR, GE Healthcare). T1-weighted, T2-weighted, and T2 FLAIR MR images were acquired. 18F-florbetaben (325±39 MBq) PET data were acquired 90-110 minutes after injection. The raw list-mode PET data were reconstructed to produce the full-dose ground truth image; they were also randomly undersampled by a factor of 100 and then reconstructed to produce a low-dose PET image.
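The random undersampling step can be illustrated with a minimal sketch. It assumes that list-mode data can be treated as an ordered sequence of event records and that a fixed 1/100 fraction of events is retained uniformly at random; the function name `undersample_events`, the toy event tuples, and the seed are hypothetical and do not reflect the scanner's actual list-mode format or the authors' code.

```python
import random


def undersample_events(events, factor=100, seed=0):
    """Keep a uniformly random 1/factor subset of events, preserving order.

    Assumption: `events` is an in-memory sequence of event records.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    rng = random.Random(seed)
    n_keep = len(events) // factor
    # Sample indices without replacement, then sort them so the
    # retained events stay in their original acquisition order.
    keep = sorted(rng.sample(range(len(events)), n_keep))
    return [events[i] for i in keep]


# Toy stand-in for list-mode data: (timestamp, detector_pair_id) tuples.
events = [(t, t % 7) for t in range(10_000)]
low_dose = undersample_events(events, factor=100)
print(len(low_dose))  # 100
```

Drawing the subset from the same acquisition, rather than performing a second low-dose scan, keeps the low-dose and full-dose images spatially and temporally aligned.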

DNN implementation

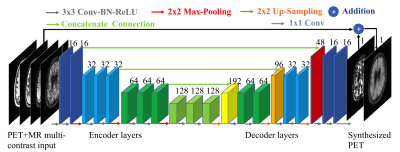

We trained a convolutional-deconvolutional DNN as shown in Figure 1 [4,5]. The inputs of the network are the multi-contrast MR images (T1-weighted, T2-weighted, T2 FLAIR) and the low-dose PET image. The network (“PET+MR model”) was trained with the full-dose PET image as the ground truth (Figure 2). A PET-only model without the MR images was also trained for comparison. Five-fold cross-validation was used to prevent training and testing on the same subjects (16 subjects for training, 4 subjects for testing per network trained).
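A subject-level five-fold split of this kind can be sketched as follows. This is an illustration only: the function name `subject_folds`, the integer subject IDs 1-20, and the seeded shuffle are assumptions, not the authors' actual partitioning.

```python
import random


def subject_folds(subject_ids, n_folds=5, seed=0):
    """Return (train, test) subject lists, one pair per fold.

    Splitting by subject (not by slice) ensures that no subject
    contributes data to both training and testing of one network.
    """
    ids = list(subject_ids)
    random.Random(seed).shuffle(ids)
    # Deal the shuffled subjects round-robin into n_folds groups.
    folds = [ids[i::n_folds] for i in range(n_folds)]
    splits = []
    for k in range(n_folds):
        test = folds[k]
        train = [s for j, fold in enumerate(folds) if j != k for s in fold]
        splits.append((train, test))
    return splits


splits = subject_folds(range(1, 21))
for train, test in splits:
    print(len(train), len(test), sorted(test))
```

With 20 subjects and five folds, each network sees 16 training subjects and 4 held-out subjects, and every subject is held out exactly once.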

Data analysis

Using the software FSL [6], a brain mask derived from the T2 images of each subject was used for voxel-based analyses. For each axial slice of the volumes, the image quality of the synthesized PET images and the low-dose PET image within the brain mask was compared to the original full-dose image using the metrics peak signal-to-noise ratio (PSNR), structural similarity (SSIM), and root mean square error (RMSE). The synthesized PET images and the full-dose PET image of each subject were anonymized, and a board-certified neuroradiologist determined the amyloid uptake status (positive or negative) of each image. Cohen’s kappa was calculated to determine the agreement of the readings between the synthesized images and the full-dose image. For intra-reader reproducibility, the full-dose PET images were read by the same radiologist twice, three months apart.
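Two of the image metrics and the agreement statistic can be sketched in plain Python. The definitions here are assumptions, since the abstract does not give them: RMSE is taken over voxels inside the mask, PSNR is computed as 20·log10(peak/RMSE) with the peak taken from the full-dose reference inside the mask, and Cohen's kappa uses the standard (p_obs − p_exp)/(1 − p_exp) form. SSIM is omitted for brevity, and all function names and toy values are hypothetical.

```python
import math


def masked_rmse(pred, ref, mask):
    """RMSE over voxels where mask is truthy (flattened slices)."""
    sq = [(p - r) ** 2 for p, r, m in zip(pred, ref, mask) if m]
    if not sq:
        raise ValueError("mask selects no voxels")
    return math.sqrt(sum(sq) / len(sq))


def masked_psnr(pred, ref, mask):
    """PSNR in dB; peak is the maximum reference value inside the mask."""
    peak = max(r for r, m in zip(ref, mask) if m)
    rmse = masked_rmse(pred, ref, mask)
    return float("inf") if rmse == 0 else 20 * math.log10(peak / rmse)


def cohens_kappa(reads_a, reads_b):
    """Cohen's kappa for two equal-length lists of categorical reads."""
    n = len(reads_a)
    labels = set(reads_a) | set(reads_b)
    p_obs = sum(a == b for a, b in zip(reads_a, reads_b)) / n
    p_exp = sum((reads_a.count(l) / n) * (reads_b.count(l) / n) for l in labels)
    if p_exp == 1:
        raise ValueError("kappa is undefined when both reads use one label")
    return (p_obs - p_exp) / (1 - p_exp)


# Toy flattened slice: full-dose reference, synthesized image, brain mask.
ref = [0.0, 1.0, 2.0, 4.0]
pred = [0.0, 1.0, 2.0, 2.0]
mask = [1, 1, 1, 1]
print(masked_rmse(pred, ref, mask), masked_psnr(pred, ref, mask))

# Toy amyloid reads for four subjects (not study data).
full_dose = ["pos", "pos", "neg", "neg"]
synthesized = ["pos", "neg", "neg", "neg"]
print(cohens_kappa(full_dose, synthesized))  # 0.5
```

Restricting the metrics to the brain mask keeps background voxels, which are near zero in both images, from inflating the apparent agreement.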

Results and Discussion

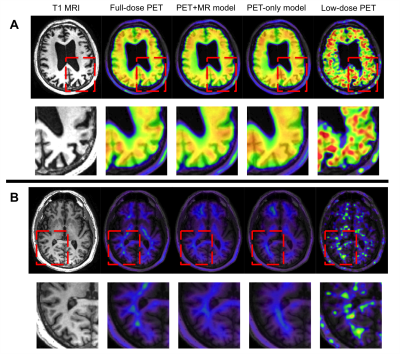

Qualitatively, the synthesized images show marked noise reduction relative to the low-dose image and resemble the ground truth image. Moreover, the model trained with multi-channel MR was better able to reflect the underlying anatomy in amyloid uptake (Figure 3). The readings of the full-dose image and the synthesized images show general agreement (κ=0.56, 95% confidence interval 0.18-0.95) and are comparable to the intra-reader reproducibility results (κ=0.78, 95% confidence interval 0.49-1). Quantitatively, the images synthesized from the PET+MR model have the highest PSNR in 19 out of 20 subjects as well as the highest SSIM and lowest RMSE in all subjects (Figure 4). The low-dose image performed the worst on all metrics. This shows that the PET+MR model synthesizes PET images with superior image quality and closer resemblance to the ground truth image than a PET-only model.

Conclusion

This work has shown that diagnostic-quality amyloid PET images can be generated with deep learning methods from simultaneously acquired MR images and ultra-low-dose PET data.

Acknowledgements

This project was made possible by the NIH grant P41-EB015891, the Stanford Alzheimer’s Disease Research Center, GE Healthcare, and Piramal Imaging.

References

1. Drzezga A, Barthel H, Minoshima S, Sabri O. “Potential Clinical Applications of PET/MR Imaging in Neurodegenerative Diseases.” J Nucl Med. 2014 Jun 1;55(Supplement 2):47S-55S.

2. Catana C, Drzezga A, Heiss W-D, Rosen BR. “PET/MRI for Neurologic Applications.” J Nucl Med. 2012 Dec;53(12):1916-25.

3. Gong E, Guo J, Pauly J, Zaharchuk G. “Deep learning enables at least 100-fold dose reduction for PET imaging.” Proceedings of the RSNA 2017, Chicago, IL, USA.

4. Ronneberger O, Fischer P, Brox T. “U-Net: Convolutional Networks for Biomedical Image Segmentation.” arXiv:1505.04597 (2015).

5. Chen H et al. “Low-Dose CT with a Residual Encoder-Decoder Convolutional Neural Network (RED-CNN).” arXiv:1702.00288 (2017).

6. Jenkinson M, Beckmann CF, Behrens TE, Woolrich MW, Smith SM. “FSL.” Neuroimage. 2012 Aug 15;62(2):782-90.

Figures