3678

Learning-based attenuation correction for Head and Neck PET/MR

1Centre de NeuroImagerie de Recherche (CENIR), Institut du Cerveau et de la Moelle Epinière (ICM), Paris, France, 2Department of Nuclear Medicine, Groupe Hospitalier Pitié-Salpêtrière C. Foix, Paris, France, 3Laboratoire d’Imagerie Biomédicale, Sorbonne Universités, UPMC Univ Paris 06, Inserm U 1146, CNRS UMR 7371, Paris, France, 4GE Global Research, Bangalore, India, 5Department of Diagnostic and Functional Neuroradiology, Groupe Hospitalier Pitié-Salpêtrière C. Foix, Paris, France, 6GE Healthcare, Munich, Germany

Synopsis

PET/MR in head and neck cancer lacks accurate attenuation correction (AC). In this work we implemented and tested three PET/MR-AC methods: 1) Dixon-based AC as used in clinical routine, which ignores facial and cervical bones (Dixon), 2) zero TE (ZTE)-based AC, in which bone segmented on ZTE is combined with Dixon-based fat-water separation (hZTE), and 3) a deep learning approach (DL) trained on CT-ZTE datasets. PET images of six patients were reconstructed with each of the three AC methods (Dixon, hZTE, DL) and compared with reference CT-based AC (CT-AC). Relative to CT-AC, Dixon-AC underestimated SUV, hZTE-AC reduced the error, and DL-AC gave the lowest error.

Introduction

Head and neck (HN) cancer is the third most frequently referred cancer in PET/MR [1]. The intrinsic coregistration of simultaneous PET/MR outperforms software-based registration of separately acquired PET and MRI [2]. Nevertheless, attenuation correction (AC) remains suboptimal in HN PET/MR. The AC method currently available in clinical routine is based on fat/water segmentation of Dixon MR images. ZTE-AC was suggested as an alternative because it accounts for bone attenuation. However, ZTE-AC, as used for the brain, typically assigns a single uniform soft-tissue value. Because the neck region contains fat, a 4-class ZTE-AC that combines air, fat and muscle segmented on Dixon MRI with bone segmented on ZTE MRI has recently been evaluated [3]. Here, in addition to these two AC methods, we evaluated a learning-based approach that generates a synthetic CT (pseudoCT) for AC in PET/MR. It uses a database of CT and MR images, in particular ZTE MR images, which provide contrast in bone, to train a deep learning (DL) model to segment ZTE images with high accuracy and to scale continuous bone AC coefficients. The AC maps and the resulting PET images were compared with reference CT-AC for evaluation.

Methods

Twelve patients (6 M, 6 F, mean age 64.2±14.6 years) examined with 18F-FDG PET/MR (GE SIGNA PET/MR, Chicago, IL, USA) for presurgical staging of cancers of the oral cavity were included in this study. A two-point LAVA-Flex MRI (pixel size 1.95 x 1.95 mm², 120 slices, slice thickness 5.2 mm with 2.6 mm overlap), used for Dixon-AC map generation, and a ZTE MRI (3D center-out radial acquisition, voxel size 2.4 x 2.4 x 2.4 mm³, FOV 26.4 x 26.4 cm², flip angle 0.8°, bandwidth ±62.5 kHz) were acquired during the PET acquisition. Six patients were used for training the convolutional neural network (CNN) model and six were used for testing. Four AC maps were generated and used for PET image reconstruction for the six testing patients:

- Dixon-AC: the method implemented on the scanner, which uses LAVA-Flex water and fat images to create a 3-segment AC map [4].

- hZTE-AC: a hybrid AC map generated by segmenting bone on ZTE MRI [5], scaling the bone intensity to CT intensity in Hounsfield Units (HU), and then overlaying the bone voxels on the Dixon-AC map.

- DL-AC: an HN deep learning (DL) model for pseudoCT computation was built on ZTE images, with the corresponding co-registered CT images as the reference. Training was performed on 50 cases, of which six were part of the described protocol. The trained model was a 3D convolutional network with a U-Net architecture, with 8 layers, the Adam optimizer and an RMSE cost function. The end-to-end processing was implemented in Python using the Keras [6] and TensorFlow [7] libraries. The model computes the pseudoCT via image regression, and the pseudoCT is then used for AC.

- CT-AC: the CT was non-linearly registered to the ZTE image using a block-matching algorithm [8].
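The overlay step described for hZTE-AC can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function name, the bone intensity window (`bone_lo`, `bone_hi`) and the linear ZTE-to-HU scaling (`slope`, `intercept`) are hypothetical placeholders, since the abstract does not give these values, and the sketch assumes that the Dixon-based map and the ZTE image are already on the same voxel grid, with the Dixon map expressed in HU-equivalent values.

```python
import numpy as np


def hybrid_zte_dixon_map(dixon_hu, zte, bone_lo, bone_hi, slope, intercept):
    """Overlay ZTE-derived bone on a Dixon-based map (values in HU).

    bone_lo, bone_hi, slope and intercept are hypothetical parameters:
    the source does not state the thresholds or the scaling it used.
    """
    dixon_hu = np.asarray(dixon_hu, dtype=float)
    zte = np.asarray(zte, dtype=float)
    if dixon_hu.shape != zte.shape:
        raise ValueError("Dixon map and ZTE image must share the same grid")

    # Assumed bone segmentation: a simple intensity window on the ZTE image.
    bone_mask = (zte >= bone_lo) & (zte < bone_hi)

    # Assumed linear scaling of ZTE intensity to CT-like HU values.
    bone_hu = slope * zte + intercept

    # Bone voxels replace the Dixon values; all other voxels are unchanged.
    hybrid = np.where(bone_mask, bone_hu, dixon_hu)
    return hybrid, bone_mask
```

In this sketch only voxels inside the bone window are modified, so air, fat and soft-tissue classes keep the values assigned by the Dixon-based map.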

Relative difference maps and the relative difference in SUV in selected ROIs were computed between the PET images corrected with each MR-based AC method and the PET image corrected with the reference CT-AC.
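As a rough illustration of these evaluation metrics, the sketch below computes a voxel-wise relative difference map, the relative difference in SUVmax within an ROI, and the R² of a linear regression between two AC maps, as quoted in the Results. The function names and the `eps` background threshold are assumptions made for this example, and fitting the line on voxel pairs is assumed to stand in for the regression on the joint histogram; the abstract does not describe the exact procedure.

```python
import numpy as np


def relative_difference_map(pet_mr, pet_ct, eps=1e-6):
    """Voxel-wise 100 * (PET_MR - PET_CT) / PET_CT, set to 0 where PET_CT ~ 0."""
    pet_mr = np.asarray(pet_mr, dtype=float)
    pet_ct = np.asarray(pet_ct, dtype=float)
    out = np.zeros_like(pet_ct)
    valid = np.abs(pet_ct) > eps  # eps is a hypothetical background threshold
    out[valid] = 100.0 * (pet_mr[valid] - pet_ct[valid]) / pet_ct[valid]
    return out


def roi_suvmax_relative_difference(pet_mr, pet_ct, roi_mask):
    """Relative difference (%) of SUVmax inside an ROI, with CT-AC as reference."""
    roi = np.asarray(roi_mask, dtype=bool)
    if not roi.any():
        raise ValueError("ROI mask is empty")
    max_mr = np.asarray(pet_mr, dtype=float)[roi].max()
    max_ct = np.asarray(pet_ct, dtype=float)[roi].max()
    return 100.0 * (max_mr - max_ct) / max_ct


def joint_histogram_r2(map_a, map_b):
    """R^2 of a least-squares line fitted to the voxel pairs of two AC maps."""
    x = np.asarray(map_a, dtype=float).ravel()
    y = np.asarray(map_b, dtype=float).ravel()
    slope, intercept = np.polyfit(x, y, 1)
    residual = y - (slope * x + intercept)
    ss_res = np.sum(residual ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    return 1.0 - ss_res / ss_tot
```

A negative value from either relative difference function means that the MR-based AC underestimates uptake with respect to CT-AC.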

Results

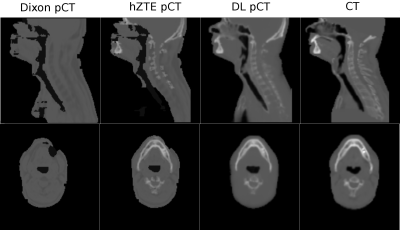

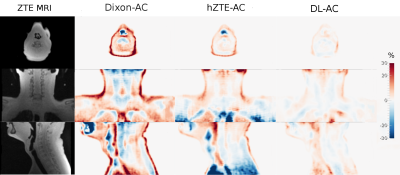

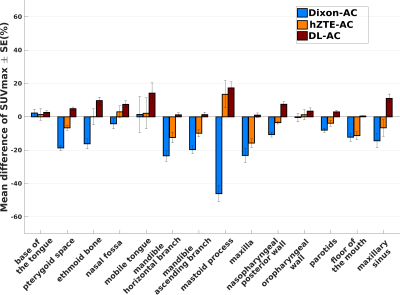

Visual comparison of the AC maps showed good agreement between hZTE-AC and CT-AC (linear regression on the joint histogram, R²=0.27) and excellent agreement between DL-AC and CT-AC (linear regression on the joint histogram, R²=0.77) (fig. 1). Relative difference maps show that Dixon-AC PET images underestimate uptake compared with CT-AC (relative difference in SUVmax: mandible -21.5±10.7%, mastoid -44.6±15.5%, maxilla -22.8±16.3%, ethmoid bone -16.1±11.8%) and locally overestimate it in air cavities and neck soft tissue (fig. 2). Averaged over all six patients, the PET error relative to CT-AC was reduced near bone structures with hZTE-AC. However, AC remained incorrect in the nasal cavities and airways with hZTE-AC. With DL-AC, the error was reduced to below 10% in most bone structures (fig. 3).

Conclusion/Discussion

In this work, we proposed an attenuation correction method using ZTE MRI and machine learning for head and neck cancer in PET/MR. We evaluated the quantitative PET performance of the learning-based method and compared it with the default Dixon-based method and with a combined ZTE-Dixon segmentation-based method on six patients, using the measured CT as reference. hZTE-AC provided a moderate improvement in attenuation correction near bone structures, whereas DL-AC improved the attenuation correction throughout the images, especially near bone structures, where it reduced the SUV error to less than 10% in most of them. DL-AC, if trained properly, can be a good candidate for generating AC maps in head and neck PET/MR. Future work will add more cases to the learning model to obtain a more robust output and will extend the study to more patients to evaluate its reproducibility.

Acknowledgements

The research leading to these results received funding from the programs "Institut des neurosciences translationnelle" ANR-10-IAIHU-06 and "Infrastructure d’avenir en Biologie Santé" ANR-11-INBS-0006.

We thank GE Healthcare for providing prototype pulse sequences and research tools.

We thank Grégoire Malandain (INRIA) for providing the block-matching registration software.

References

1. Fendler W, Czernin J, Herrmann K, et al. Variations in PET/MRI operations: Results from an international survey among 39 active sites. J Nucl Med 2016; 57(12):2016-2021.

2. Monti S, Cavaliere C, Covello M, et al. An Evaluation of the Benefits of Simultaneous Acquisition on PET/MR Coregistration in Head/Neck Imaging. J Healthc Eng 2017; vol. 2017, 7 pages.

3. Khalifé M, de Laroche R, Bequé D, et al. ZTE-based attenuation correction in head and neck PET/MR. Proc. IEEE NSS/MIC 2017.

4. Wollenweber S, Ambawani S, Lonn A, et al. Comparison of 4-class and continuous fat/water methods for whole-body, MR-based PET attenuation correction. IEEE Trans Nucl Sci 2013; 60(5):3391-3398.

5. Wiesinger F, Sacolick L, Menini A, et al. Zero TE MR bone imaging in the head. Magn Reson Med 2015; 75(1):107-114.

6. Chollet F, et al. Keras. 2015. https://github.com/fchollet/keras

7. Abadi M, Agarwal A, Barham P, et al. TensorFlow: Large-scale machine learning on heterogeneous distributed systems. arXiv preprint arXiv:1603.04467, 2016.

8. Garcia V, Commowick O, Malandain G. A robust and efficient block matching framework for non linear registration of thoracic CT images. Grand Challenges in Medical Imaging Analysis (MICCAI workshop) 2010; p 1–10.

Figures